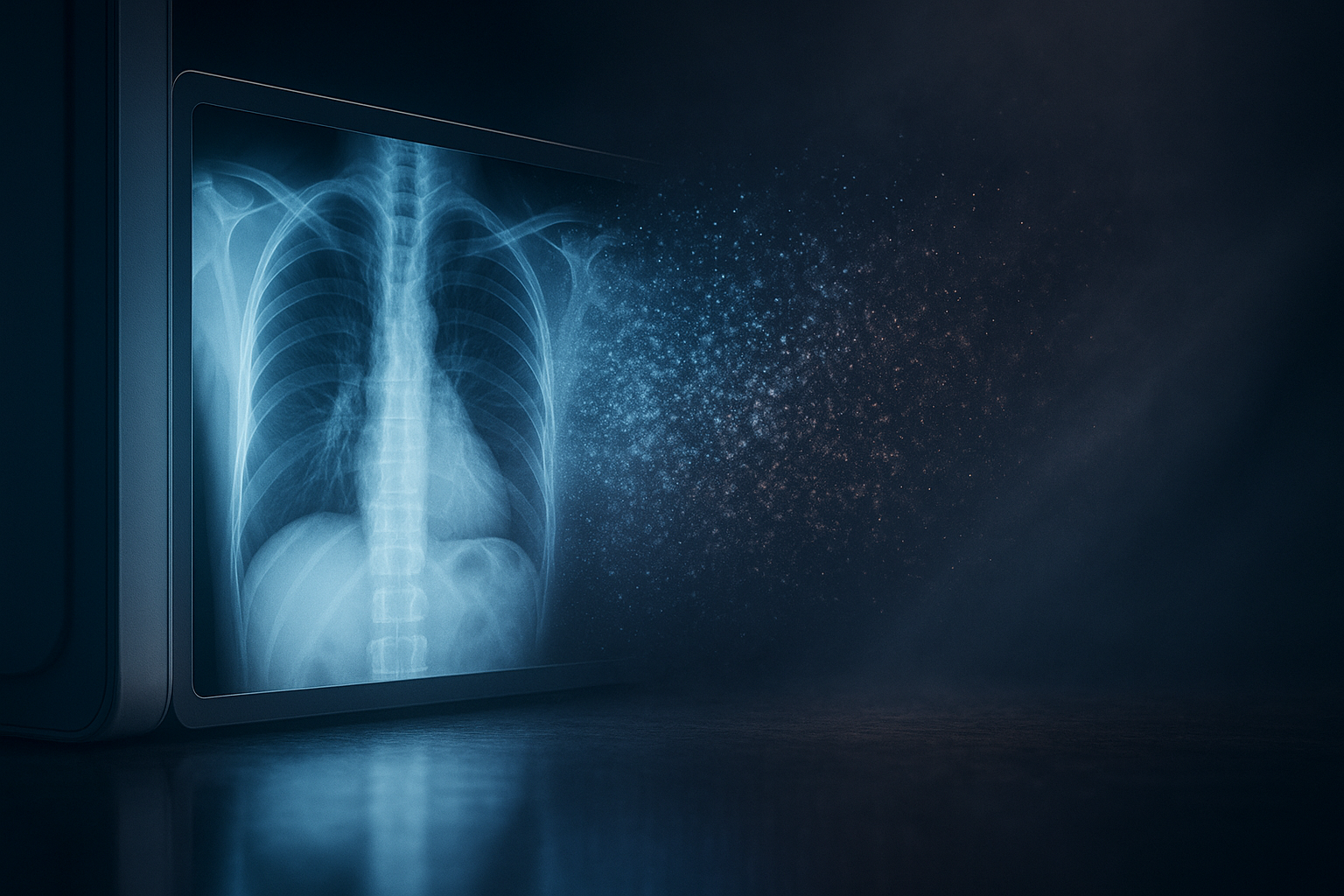

Noisy but Wise: How Simple Noise Injection Beats Shortcut Learning in Medical AI

Opening: Why this matters now

In a world obsessed with bigger models and cleaner data, a modest paper from the University of South Florida offers a quiet counterpoint: what if making data noisier actually makes models smarter? In medical AI, especially with small, privacy-constrained datasets, overfitting isn't just a technical nuisance; it's a clinical liability. A model that learns the quirks of one hospital's X-ray machine instead of the biomarkers of COVID-19 could fail catastrophically in another ward. ...