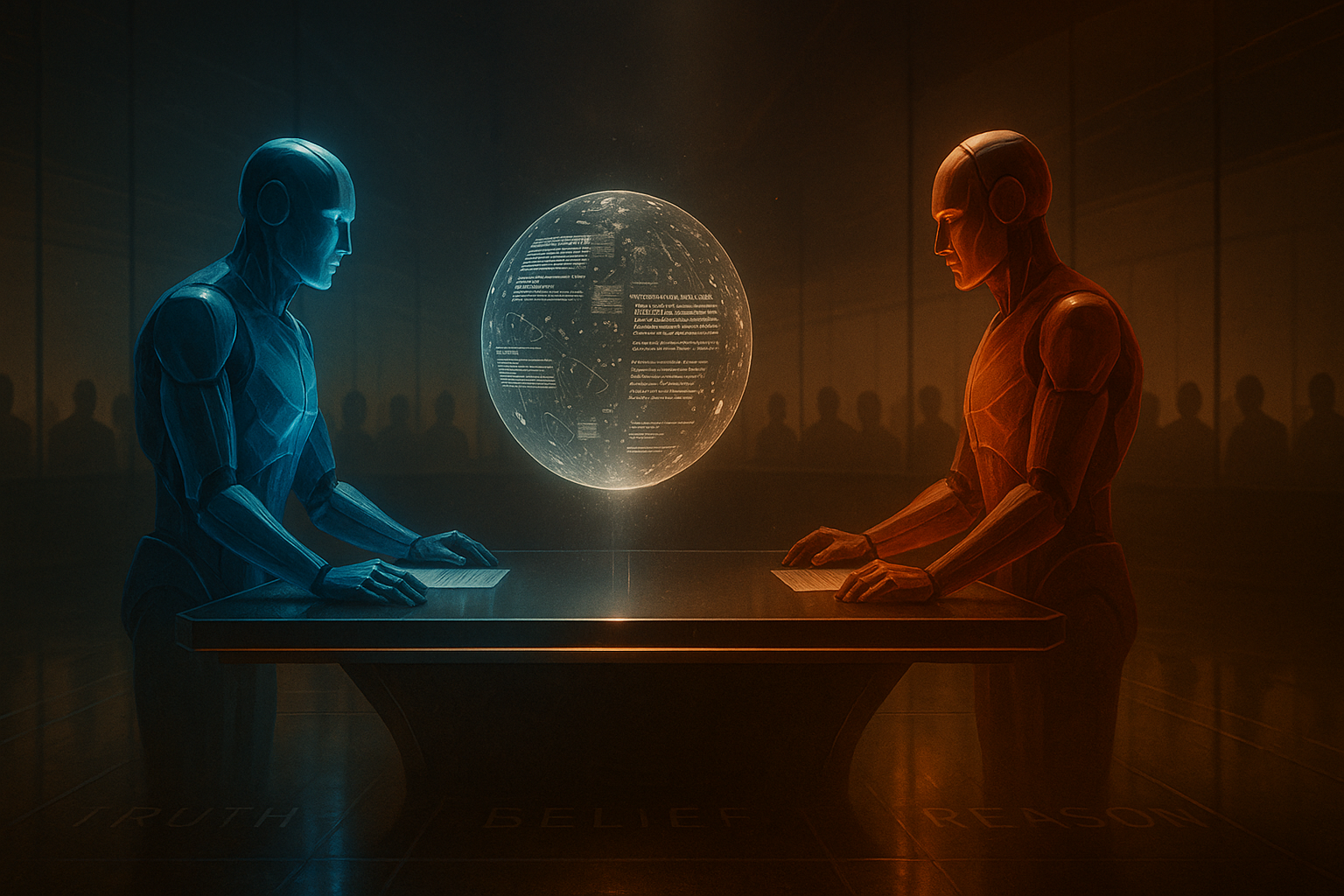

When AI Argues Back: The Promise and Peril of Evidence-Based Multi-Agent Debate

Opening: Why this matters now

The world doesn’t suffer from a lack of information; it suffers from a lack of agreement about what’s true. From pandemic rumors to political spin, misinformation now spreads faster than correction, eroding trust in institutions and even in evidence itself. As platforms struggle to moderate and fact-check at scale, researchers have begun asking a deeper question: Can AI not only detect falsehoods but also argue persuasively for the truth? ...