Seeing Green: When AI Learns to Detect Corporate Illusions

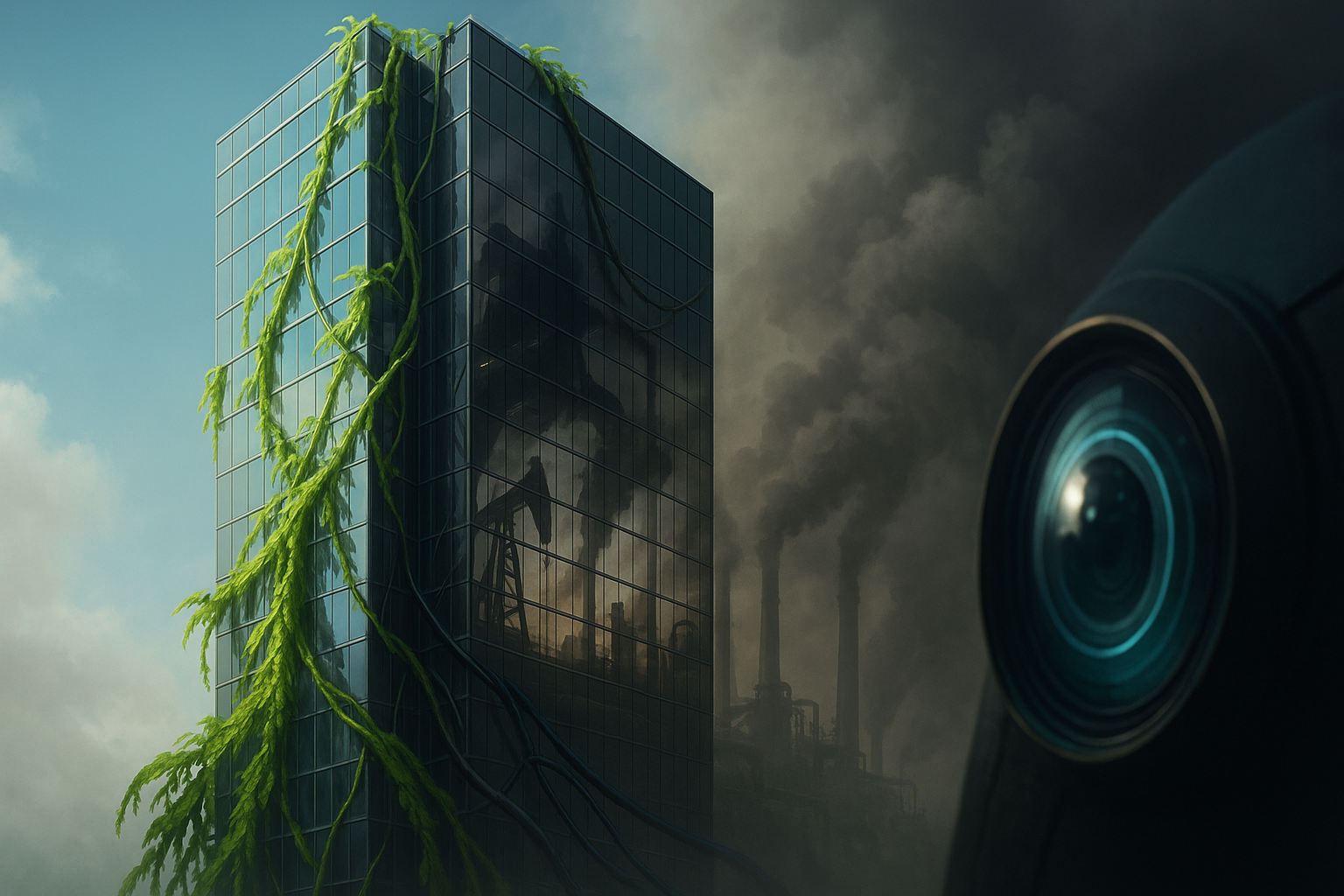

Oil and gas companies have long mastered the art of framing—selectively showing the parts of reality they want us to see. A commercial fades in: wind turbines turning under a soft sunrise, a child running across a field, the logo of an oil major shimmering on the horizon. No lies are spoken, but meaning is shaped. The message? We care. The reality? Often less so.

A new study, “A Multimodal Benchmark for Framing of Oil & Gas Advertising and Potential Greenwashing Detection” (Morio et al., 2025), aims to teach AI systems to see through this glossy façade. It’s not just another dataset—it’s a map of corporate persuasion, annotated, quantified, and ready to challenge the next generation of vision-language models (VLMs).

Framing, not Faking

Framing is the subtle weapon of modern PR. It’s not about saying false things—it’s about deciding what to say at all. The authors borrow Entman’s classic definition: framing “selects some aspects of reality… to promote problem definition, causal interpretation, moral evaluation, and treatment recommendation.” In the oil and gas context, this might mean highlighting community donations, innovation labs, or gleaming solar arrays—while omitting the carbon math behind them.

To study this systematically, the researchers built a dataset of 706 expert-annotated video ads from Facebook and YouTube, covering over 50 companies across 20 countries. Each ad is labeled across 13 framing types—ranging from “Community and Life” and “Environment” to “Green Innovation” and “Patriotism.”

Unlike earlier text-only datasets, this one is fully multimodal: it captures both the visual and verbal cues that construct an image of corporate virtue. About 30% of these videos have no spoken words at all, only imagery, music, and suggestion. That makes the visual modality essential rather than optional.

When AI Judges a Smile and a Wind Turbine

The paper’s benchmark tests six major VLMs—including GPT‑4.1, Qwen2.5‑VL, and DeepSeek‑VL2—on their ability to classify these corporate frames. Each model watches clips, reads transcripts (where available), and must output a set of framing labels. The results are revealing:

| Model | Average F1 (YouTube) | Strongest Frame | Weakest Frame |
|---|---|---|---|
| GPT‑4.1 | 71% | Environment | Green Innovation |
| GPT‑4o‑mini | 63% | Work | Patriotism |
| Qwen2.5‑VL (32B) | 66% | Community & Life | Economy & Business |
| DeepSeek‑VL2 | 49% | Community & Life | Green Innovation |

While GPT‑4.1 dominates, it’s the qualitative failures that intrigue. Models can detect clear cues—smiling workers, green fields—but struggle with implicit framing: the quiet claim that “we power your life,” or “our energy keeps the world safe.” The study’s ablations confirm this: removing the transcript cuts performance sharply, showing that words still anchor interpretation. But in Facebook ads—where text dominates and visuals play a supporting role—the opposite is true.

This duality reveals a subtle truth: AI sees what it is told to see, but framing works precisely because it hides what the audience is not meant to see.
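To make the scoring concrete, here is a minimal sketch of how per-frame and average F1 can be computed when each ad carries a set of framing labels. The toy ads, the helper names `per_label_f1` and `macro_f1`, and the choice of a macro average are assumptions for illustration; the article does not state exactly how the paper averages its scores.

```python
from typing import Dict, List, Set


def per_label_f1(gold: List[Set[str]], pred: List[Set[str]],
                 labels: List[str]) -> Dict[str, float]:
    """F1 per frame, treating each frame as a yes/no decision for every ad."""
    scores: Dict[str, float] = {}
    for label in labels:
        tp = sum(1 for g, p in zip(gold, pred) if label in g and label in p)
        fp = sum(1 for g, p in zip(gold, pred) if label not in g and label in p)
        fn = sum(1 for g, p in zip(gold, pred) if label in g and label not in p)
        denom = 2 * tp + fp + fn
        # Convention assumed here: F1 is 0.0 when a frame never appears at all.
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(scores: Dict[str, float]) -> float:
    """Unweighted mean over frames (an assumed averaging scheme)."""
    return sum(scores.values()) / len(scores)


# Toy data: three of the frame names mentioned in the article, four made-up ads.
labels = ["Environment", "Community and Life", "Green Innovation"]
gold = [
    {"Environment"},
    {"Community and Life", "Environment"},
    {"Green Innovation"},
    {"Community and Life"},
]
pred = [
    {"Environment"},
    {"Community and Life"},
    {"Environment"},          # mistakes innovation framing for environment
    {"Community and Life"},
]

scores = per_label_f1(gold, pred, labels)
macro = macro_f1(scores)
for label in labels:
    print(f"{label}: {scores[label]:.2f}")
print(f"macro F1: {macro:.2f}")
```

In this toy run the model is perfect on the explicit "Community and Life" cue and scores zero on "Green Innovation", which mirrors the pattern in the table: a respectable average can hide a frame the model almost never gets right.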

Measuring Moral Optics

Beyond benchmarks, the authors explore how their dataset might reveal patterns of corporate greenwashing. They correlate model predictions with time and company behavior:

- Temporal trend: After 2020, environmental framing in YouTube ads surged—a likely echo of post-pandemic climate discourse and U.S. policy shifts. Whether this reflects genuine transition or performative signaling is left open.

- Company-level contrast: Some firms (anonymized as Company X) over-index on “Environment,” while others emphasize “Community and Life.” The former look green; the latter look essential. Both divert attention from the same fossil base.

Here, AI becomes not just a classifier but a mirror. By quantifying persuasion, it exposes the choreography behind corporate storytelling. It doesn’t accuse—but it makes denial harder.
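The temporal and company-level comparisons described above boil down to a simple aggregation: group the predicted frames by year or by company and measure how often a given frame appears. The sketch below shows one way to do that. The `AdPrediction` record, the `frame_share` helper, and every data point are hypothetical and are not taken from the paper or its dataset.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, Hashable, Iterable


@dataclass(frozen=True)
class AdPrediction:
    """One ad with its model-predicted frames (hypothetical schema)."""
    company: str
    year: int
    frames: FrozenSet[str]


def frame_share(ads: Iterable[AdPrediction], frame: str,
                group_key: Callable[[AdPrediction], Hashable]) -> Dict[Hashable, float]:
    """Fraction of ads in each group whose predicted frames include `frame`."""
    totals: Dict[Hashable, int] = defaultdict(int)
    hits: Dict[Hashable, int] = defaultdict(int)
    for ad in ads:
        key = group_key(ad)
        totals[key] += 1
        if frame in ad.frames:
            hits[key] += 1
    return {key: hits[key] / totals[key] for key in totals}


# Made-up predictions for two anonymized companies.
ads = [
    AdPrediction("Company X", 2019, frozenset({"Community and Life"})),
    AdPrediction("Company X", 2021, frozenset({"Environment"})),
    AdPrediction("Company X", 2021, frozenset({"Environment", "Green Innovation"})),
    AdPrediction("Company Y", 2019, frozenset({"Community and Life"})),
    AdPrediction("Company Y", 2021, frozenset({"Community and Life", "Environment"})),
    AdPrediction("Company Y", 2021, frozenset({"Community and Life"})),
]

by_year = frame_share(ads, "Environment", lambda ad: ad.year)
by_company = frame_share(ads, "Environment", lambda ad: ad.company)
print("Environment share by year:", by_year)
print("Environment share by company:", by_company)
```

Passing the grouping as a function keeps the same helper usable for both views. Shares computed this way describe what a model predicted, so any trend they show inherits the model's per-frame error rates.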

The Ethical Double Bind

Yet there’s an irony at play. The same class of models that can detect greenwashing can also help generate it. Generative AI systems are now adept at producing slick, emotionally persuasive copy and imagery, exactly the kind of content these benchmarks ask models to scrutinize. The boundary between analysis and propaganda blurs.

This is why benchmarks like Morio et al.’s matter. They anchor the conversation in empirical accountability. A model that claims to understand human communication must prove it can distinguish sincerity from spin. In that sense, this dataset is not just about oil companies—it’s a test of whether AI can grasp motive.

Toward Algorithmic Transparency

The authors’ open-sourced dataset—available on Hugging Face and GitHub—is an invitation for a broader kind of climate accountability. It empowers journalists, regulators, and AI ethicists to audit not only corporate campaigns but also the algorithms interpreting them.

In the long run, such benchmarks could underpin automated social audits: continuous monitoring of corporate narratives across global media streams, tracking the shifting rhetoric of sustainability. Imagine dashboards that visualize how a company’s messaging evolves when oil prices spike or emissions targets loom.

But such tools will need careful governance. If used uncritically, they risk turning moral judgment into a metric. If built thoughtfully, they could mark the beginning of algorithmic ethics—where machines don’t just replicate persuasion but interrogate it.

Cognaptus: Automate the Present, Incubate the Future.