When the term agentic AI is used today, it often conjures images of individual, autonomous systems making plans, taking actions, and learning from feedback loops. But what if intelligence, like biology, doesn’t scale by perfecting one organism — but by building composable ecosystems of specialized agents that interact, synchronize, and co‑evolve?

That’s the thesis behind Agentic Design of Compositional Machines — a sprawling, 75‑page manifesto that reframes AI architecture as a modular society of minds, not a monolithic brain. Drawing inspiration from software engineering, systems biology, and embodied cognition, the paper argues that the next generation of LLM‑based agents will need to evolve toward compositionality — where reasoning, perception, and action emerge not from larger models, but from better‑coordinated parts.

From Neural Scaling to Structural Scaling

For the past decade, AI progress has relied on neural scaling (more parameters, bigger datasets, wider transformers, longer context windows). Compositional machines introduce a new dimension: structural scaling.

| Paradigm | Core Idea | Key Characteristics |
|---|---|---|
| Neural Scaling | Increase parameters and data to improve generalization | Diminishing returns, opaque internal reasoning |
| Structural Scaling | Compose smaller, specialized models with coordination interfaces | Higher interpretability, modular evolution |

Structural scaling mirrors how human intelligence develops — distributed specialization with shared communication codes. Just as the brain’s sensory, motor, and linguistic regions cooperate, compositional AI divides capabilities into semi‑autonomous modules governed by higher‑order agents.
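The table’s phrase “coordination interfaces” is easiest to see in code. Below is a minimal Python sketch, assuming every module speaks one shared message schema and a registry routes each request to the specialist that claims it. `Message`, `Registry`, `attach`, and `dispatch` are hypothetical names chosen for illustration, not an API from the paper, and the toy string functions stand in for specialized models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical shared message schema: the "coordination interface" every module speaks.
@dataclass
class Message:
    task: str                                       # capability being requested
    payload: str                                    # content to process
    trace: List[str] = field(default_factory=list)  # modules that handled it

Handler = Callable[[Message], Message]

class Registry:
    """Routes each message to the specialist registered for its task."""

    def __init__(self) -> None:
        self._modules: Dict[str, Handler] = {}

    def attach(self, task: str, handler: Handler) -> None:
        # Structural scaling: a new capability is a new entry, not more parameters.
        self._modules[task] = handler

    def dispatch(self, msg: Message) -> Message:
        if msg.task not in self._modules:
            raise KeyError(f"no module registered for task {msg.task!r}")
        out = self._modules[msg.task](msg)
        out.trace.append(msg.task)  # record which module produced the result
        return out

def upper_module(msg: Message) -> Message:
    # Toy stand-in for a small specialized model.
    return Message(msg.task, msg.payload.upper(), list(msg.trace))

def reverse_module(msg: Message) -> Message:
    # Another toy specialist with the same interface.
    return Message(msg.task, msg.payload[::-1], list(msg.trace))

registry = Registry()
registry.attach("shout", upper_module)
registry.attach("reverse", reverse_module)

result = registry.dispatch(Message("shout", "hello"))
print(result.payload, result.trace)  # HELLO ['shout']
```

In this framing, adding a capability means calling `attach` with a new module rather than retraining anything, which is the sense in which structure, not parameter count, is what scales.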

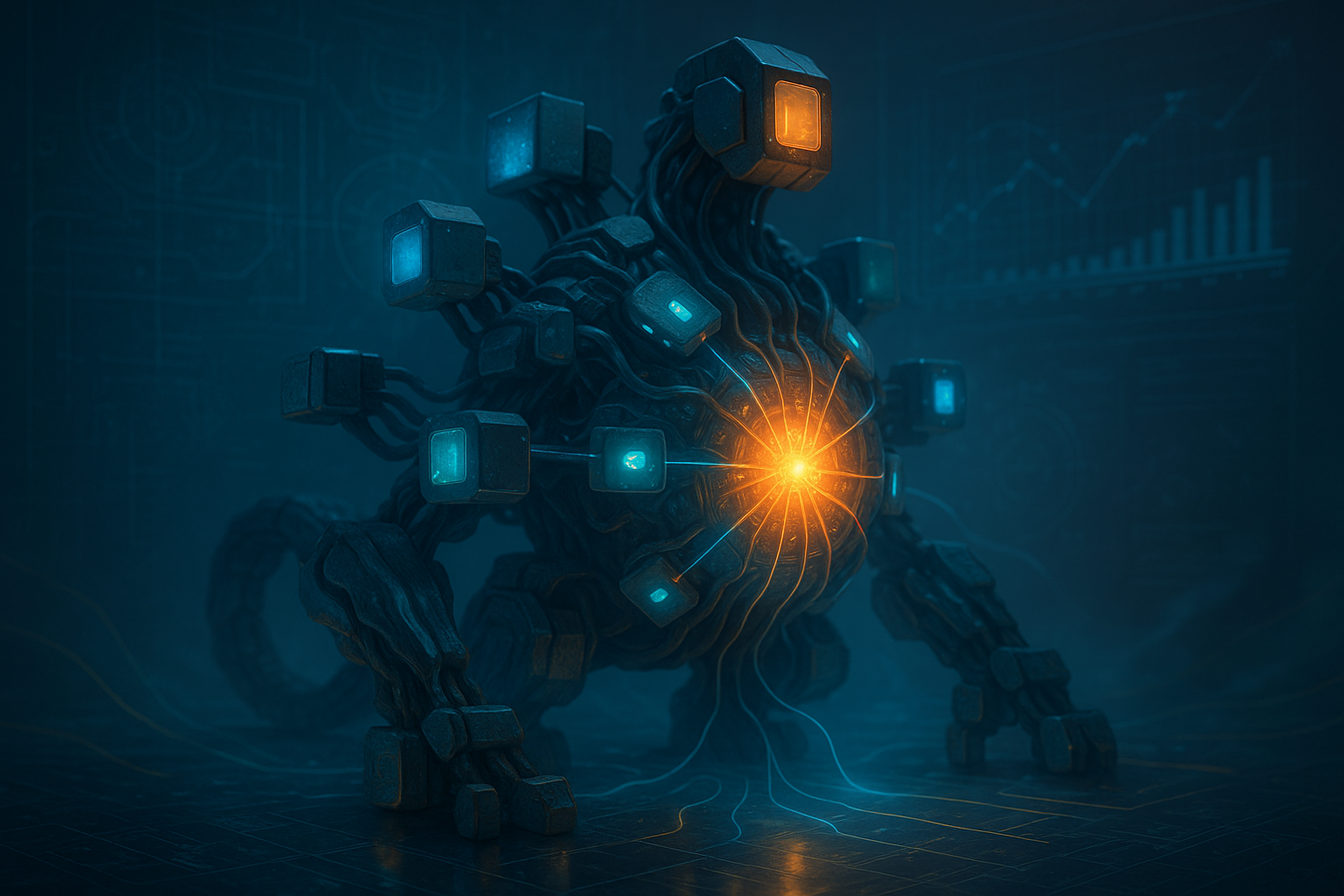

The Machine as an Organism

The paper reimagines the AI system as a cybernetic organism, comprising:

- Perceptual Modules — vision, speech, and sensor fusion units that convert environment data into structured symbols.

- Cognitive Planners — reasoning modules that evaluate goals, simulate outcomes, and allocate tasks.

- Embodied Executors — action modules interfacing with APIs, robotic actuators, or software environments.

The glue binding them is a Process Graph, where nodes represent modules and edges represent information dependencies and control policies. Crucially, these graphs are dynamically editable — agents can rewire themselves, forming new compositions to adapt to novel tasks.

This self‑rewiring ability echoes Minsky’s Society of Mind — yet unlike symbolic AI, the compositional machine leverages continuous representations and reinforcement objectives, giving rise to adaptive, gradient‑guided collectives.
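One way to picture the Process Graph is as a dependency graph executed in topological order. The sketch below assumes that reading: each node is a module function, each edge says which upstream outputs a node consumes, and `rewire` edits edges at runtime. `ProcessGraph`, `add`, `rewire`, and the `perceive`/`plan`/`verify`/`execute` nodes are hypothetical; the control policies the paper attaches to edges are omitted. It relies on the standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, Iterable, Set

ModuleFn = Callable[[Dict[str, Any]], Any]

class ProcessGraph:
    """Nodes are modules; an edge u -> v means v consumes u's output."""

    def __init__(self) -> None:
        self.modules: Dict[str, ModuleFn] = {}
        self.deps: Dict[str, Set[str]] = {}  # node -> upstream nodes it reads from

    def add(self, name: str, fn: ModuleFn, after: Iterable[str] = ()) -> None:
        self.modules[name] = fn
        self.deps[name] = set(after)

    def rewire(self, name: str, after: Iterable[str]) -> None:
        # Dynamic editing: replace a node's upstream edges at runtime.
        self.deps[name] = set(after)

    def run(self, observation: Any) -> Dict[str, Any]:
        outputs: Dict[str, Any] = {}
        # static_order() lists every node after its dependencies and
        # raises graphlib.CycleError if a rewiring created a cycle.
        for node in TopologicalSorter(self.deps).static_order():
            upstream = {u: outputs[u] for u in self.deps[node]}
            # Source nodes (no upstream edges) read the raw observation.
            outputs[node] = self.modules[node](upstream or {"observation": observation})
        return outputs

def only_input(inputs: Dict[str, Any]) -> Any:
    return next(iter(inputs.values()))  # helper: the single upstream value

g = ProcessGraph()
g.add("perceive", lambda x: x["observation"].split())  # toy perception: tokenize
g.add("plan", lambda x: [f"handle:{t}" for t in x["perceive"]], after=["perceive"])
g.add("execute", lambda x: [f"done:{a}" for a in only_input(x)], after=["plan"])
print(g.run("door noise light")["execute"])
# ['done:handle:door', 'done:handle:noise', 'done:handle:light']

# Rewire: insert a verifier between planning and execution.
g.add("verify", lambda x: [a for a in x["plan"] if a != "handle:noise"], after=["plan"])
g.rewire("execute", after=["verify"])
print(g.run("door noise light")["execute"])
# ['done:handle:door', 'done:handle:light']
```

Inserting `verify` changes the system’s behavior without modifying any existing module, which is the kind of recomposition described above; a rewiring that introduced a cycle would make `static_order()` raise `graphlib.CycleError`.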

Governance of Multi‑Agent Coordination

The architecture’s real breakthrough lies in how it proposes to govern modular collectives. Three layers of coordination emerge:

- Local coherence — modules learn mutual predictability (i.e., expectations of each other’s inputs and outputs).

- Systemic negotiation — meta‑controllers arbitrate conflicts and allocate computation based on uncertainty and goal priority.

- External alignment — the system interfaces with human or organizational oversight, encoding norms or ethics as soft constraints.

This mirrors the governance of complex organizations: operational, managerial, and regulatory layers — except that in machine societies, these layers can learn their own incentive structures.
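The systemic-negotiation and external-alignment layers can be approximated by a simple scheduler. The Python sketch below assumes a meta-controller that splits a fixed compute budget (say, reasoning steps) in proportion to each module’s uncertainty times its goal priority, and treats oversight norms as a soft penalty that lowers a module’s share instead of vetoing it. The scoring rule, the `Request` fields, and `allocate` are illustrative assumptions, not the paper’s formulation.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Request:
    module: str
    uncertainty: float         # assumed to be normalized to [0, 1]
    priority: float            # goal priority assigned by a planner
    norm_penalty: float = 0.0  # soft-constraint cost from external oversight rules

def allocate(requests: List[Request], budget: int, penalty_weight: float = 1.0) -> Dict[str, int]:
    """Split an integer compute budget across modules.

    Score = uncertainty * priority - penalty_weight * norm_penalty (floored at 0).
    Units are handed out by the largest-remainder method so they sum to `budget`.
    """
    scores = {
        r.module: max(r.uncertainty * r.priority - penalty_weight * r.norm_penalty, 0.0)
        for r in requests
    }
    total = sum(scores.values())
    if total == 0:
        return {m: 0 for m in scores}  # nothing is worth computing
    exact = {m: budget * s / total for m, s in scores.items()}
    alloc = {m: int(x) for m, x in exact.items()}  # floor of each share
    leftover = budget - sum(alloc.values())
    # Give the remaining units to the modules with the largest fractional parts.
    for m in sorted(exact, key=lambda m: exact[m] - alloc[m], reverse=True)[:leftover]:
        alloc[m] += 1
    return alloc

requests = [
    Request("vision", uncertainty=0.25, priority=2.0),
    Request("planner", uncertainty=0.5, priority=2.0),
    Request("web_actuator", uncertainty=0.75, priority=1.0, norm_penalty=0.25),
]
print(allocate(requests, budget=8))  # {'vision': 2, 'planner': 4, 'web_actuator': 2}
```

Without its `norm_penalty`, `web_actuator` would score 0.75 and outrank `vision`; the penalty trims its budget without removing it from the collective, which is what distinguishes a soft constraint from a hard rule.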

Why This Matters: Beyond Single‑Agent Intelligence

Most LLM agents today simulate coordination — chaining prompts or emulating teamwork through sequential planning. But compositional machines aim for real internal diversity — networks of agents that can disagree, debate, and reconcile through structured negotiation.
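“Structured negotiation” can take many forms, and the paper’s exact protocol is not reproduced here. As a deliberately simple stand-in, the Python sketch below reconciles disagreeing agents by confidence-weighted voting, escalates to a higher layer when no answer reaches a quorum, and keeps the dissenting agents on record. `Proposal`, `Resolution`, `reconcile`, and the 0.6 quorum are hypothetical choices.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Proposal:
    agent: str
    answer: str
    confidence: float  # self-reported confidence, assumed to be in (0, 1]

@dataclass
class Resolution:
    answer: Optional[str]  # None means "no quorum: escalate to a higher layer"
    support: float         # share of total confidence behind the leading answer
    dissenters: List[str]  # agents that disagreed with the leader, kept for audit

def reconcile(proposals: List[Proposal], quorum: float = 0.6) -> Resolution:
    """Confidence-weighted vote with an escalation path instead of a forced winner."""
    weight: Dict[str, float] = defaultdict(float)
    for p in proposals:
        weight[p.answer] += p.confidence
    total = sum(weight.values())
    leader = max(weight, key=weight.get)
    support = weight[leader] / total
    dissenters = [p.agent for p in proposals if p.answer != leader]
    answer = leader if support >= quorum else None
    return Resolution(answer, support, dissenters)

agreed = reconcile([
    Proposal("legal", "reject", 0.5),
    Proposal("finance", "approve", 0.25),
    Proposal("ops", "reject", 0.25),
])
print(agreed)  # Resolution(answer='reject', support=0.75, dissenters=['finance'])

split = reconcile([Proposal("a", "x", 0.5), Proposal("b", "y", 0.5)])
print(split.answer)  # None (support 0.5 is below the 0.6 quorum)
```

Returning `None` instead of forcing a winner keeps genuine disagreement visible, so a meta-controller or human reviewer can arbitrate it rather than having it averaged away.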

Compositional design carries three practical implications:

- Robustness: failures in one module don’t collapse the system.

- Transparency: decision traces are localized, auditable, and testable.

- Scalability: new capabilities can be attached without retraining the whole model.

In short, compositionality replaces brute‑force generalization with evolutionary adaptivity.
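The robustness, transparency, and scalability claims can be illustrated together with a small fault-isolation harness. The Python sketch below assumes modules are independent callables: each one runs inside its own `try`/`except`, a failure is replaced by a fallback value, and every outcome is appended to an audit trace. `run_isolated`, the module names, and the fallback string are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Module = Callable[[str], str]

def run_isolated(modules: Dict[str, Module], fallback: Module, query: str):
    """Run every module on the query; contain failures and record an audit trace."""
    results: Dict[str, str] = {}
    trace: List[Tuple[str, str]] = []
    for name, fn in modules.items():
        try:
            results[name] = fn(query)
            trace.append((name, "ok"))
        except Exception as exc:  # isolate the failure to this one module
            results[name] = fallback(query)
            trace.append((name, f"fallback: {type(exc).__name__}"))
    return results, trace

def summarize(query: str) -> str:
    return query[:5]  # toy stand-in for a summarization model

def search(query: str) -> str:
    raise TimeoutError("search backend unavailable")  # simulated outage

modules: Dict[str, Module] = {"summarize": summarize, "search": search}
results, trace = run_isolated(modules, fallback=lambda q: "<unavailable>", query="quarterly report")
print(results)  # {'summarize': 'quart', 'search': '<unavailable>'}
print(trace)    # [('summarize', 'ok'), ('search', 'fallback: TimeoutError')]

# Scalability: attach a new capability without touching existing modules.
modules["translate"] = str.upper  # toy stand-in for a translation model
results2, trace2 = run_isolated(modules, fallback=lambda q: "<unavailable>", query="quarterly report")
print(results2["translate"])  # QUARTERLY REPORT
```

The failing `search` module degrades to a fallback while `summarize` still answers, the trace records exactly where and why that happened, and the new `translate` entry leaves the other modules’ results unchanged.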

Echoes Across the Cognaptus Landscape

For Cognaptus readers, this shift parallels what we’ve seen in business automation: the transition from monolithic enterprise systems to microservice ecosystems. Just as microservices enable agile, decentralized organizations, compositional machines may enable AI systems that govern themselves — with emergent hierarchies, internal accountability, and adaptive resource allocation.

If today’s AI agents are employees, compositional machines are companies — complete with managers, regulators, and workers. They don’t just respond to prompts; they negotiate responsibilities.

The Coming Decade of Agentic Design

We may be entering an era where the most important question in AI design isn’t how large a model is, but how well its internal society is organized. The future of artificial intelligence could depend less on inventing new architectures — and more on inventing constitutions for machine governance.

Or, as the paper’s authors put it, “Intelligence emerges not from unity, but from composition.”

Cognaptus: Automate the Present, Incubate the Future.