TL;DR

Most reflection frameworks still treat failure analysis as an afterthought. SAMULE reframes it as the core curriculum: synthesize reflections at micro (single trajectory), meso (intra‑task error taxonomy), and macro (inter‑task error clusters) levels, then fine‑tune a compact retrospective model that generates targeted reflections at inference. It outperforms prompt‑only baselines and RL‑heavy approaches on TravelPlanner, NATURAL PLAN, and Tau‑Bench. The strategic lesson for builders: design your error system first; the agent will follow.

Why the usual “reflect & retry” plateaus

Reflection prompts often produce generic platitudes (“be more careful with budgets”) because they:

1) over‑index on rare successes;

2) lack a consistent error language;

3) can’t generalize lessons across tasks.

Result: agents keep missing the same classes of mistakes (budget caps, policy constraints, time windows, geo‑mismatch) because there’s no structured memory of failure modes.

What SAMULE actually changes

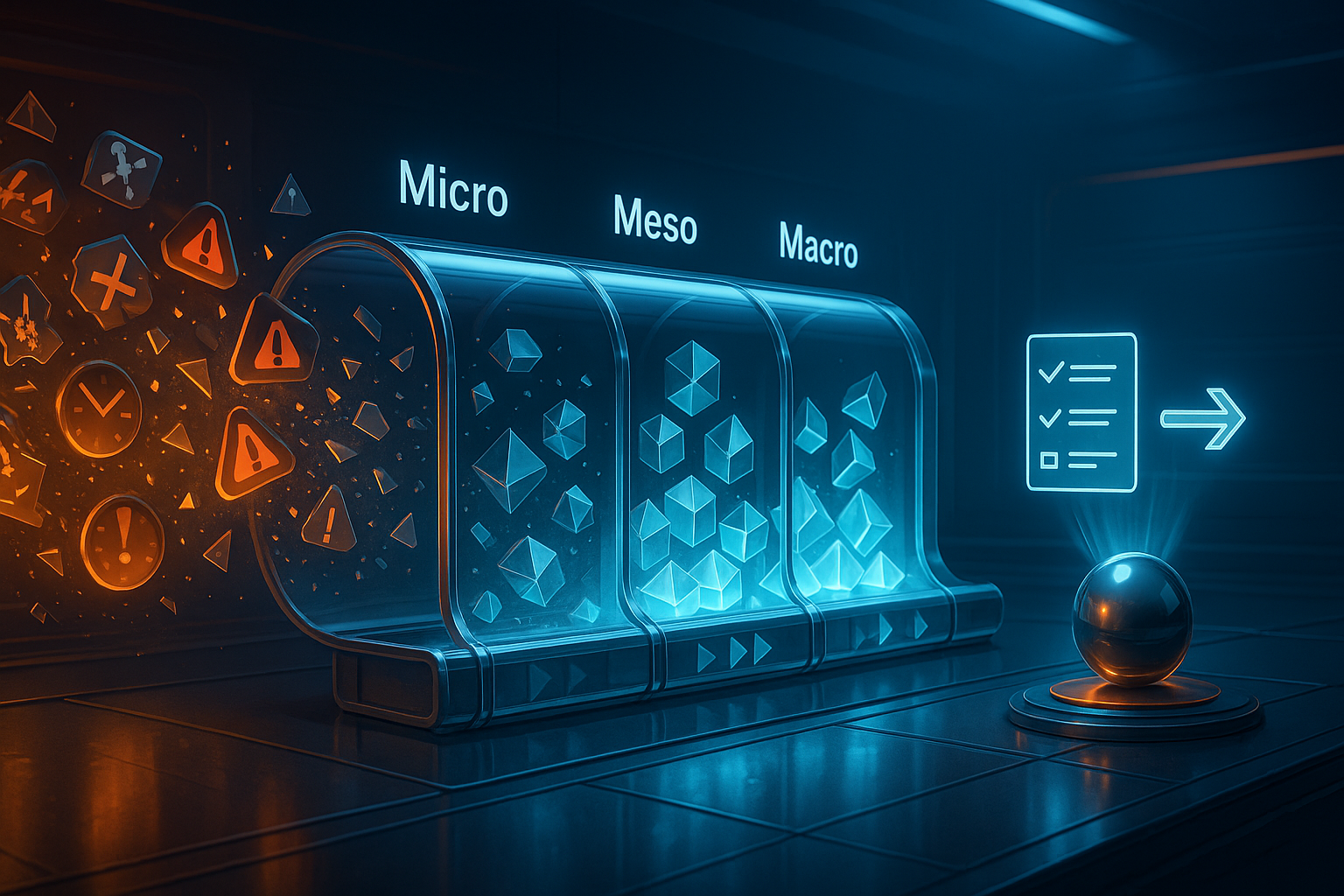

SAMULE builds a three‑layer reflection supply chain and then distills it into a small helper model.

| Level | Input | Output | Purpose |
|---|---|---|---|
| Micro (single trajectory) | One failed run + reference plan | Concrete diagnosis + corrective high‑level plan | Immediate, step‑specific fixes |
| Meso (intra‑task) | All trials for the same task | Error taxonomy + per‑step error labels | Establish a shared vocabulary of failure |
| Macro (inter‑task) | Trajectories sharing the same error type across tasks | Generalized, transferable reflections | Teach patterns that move across tasks |

Then: Reflection Merge → synthesize micro/meso/macro into one final reflection per trajectory → SFT a small retrospective model to emit these reflections at inference, without needing gold references.
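To make the merge‑then‑SFT step concrete, here is a minimal sketch of how one training record could be assembled. Everything named here (`ReflectionLevels`, `merge_reflections`, `to_sft_record`, the prompt layout, the JSON keys) is an assumption for illustration, and the merge is a plain concatenation standing in for whatever synthesis step you actually use.

```python
import json
from dataclasses import dataclass


@dataclass
class ReflectionLevels:
    """Hypothetical container for the three reflection levels of one trajectory."""
    micro: str  # diagnosis of this single failed trajectory
    meso: str   # error-taxonomy labels drawn from this task's trials
    macro: str  # cross-task lesson for the shared error type


def merge_reflections(levels: ReflectionLevels) -> str:
    """Placeholder merge: bullet the non-empty levels, most specific first."""
    parts = [levels.micro, levels.meso, levels.macro]
    return "\n".join(f"- {p.strip()}" for p in parts if p.strip())


def to_sft_record(instruction: str, context: str,
                  trajectory: list[str], levels: ReflectionLevels) -> str:
    """Build one JSONL line: prompt = task + trajectory, completion = merged reflection.

    The reference plan is deliberately left out of the prompt so the trained
    model can run at inference time without gold references.
    """
    prompt = "\n\n".join([
        f"Instruction:\n{instruction}",
        f"Context:\n{context}",
        "Trajectory:\n" + "\n".join(trajectory),
    ])
    return json.dumps({"prompt": prompt, "completion": merge_reflections(levels)})
```

The design choice worth copying is the asymmetry: references inform how the reflection is synthesized, but they never appear in the model's input.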

Why this matters: The system converts messy trial logs into reusable abstractions. Instead of “try again with cheaper hotels,” you get: “Accommodation minimum‑stay violation → pick properties with ≤1 night policy before scheduling; re‑budget fixed costs before itinerary.” That’s operational.

The quiet breakthrough: a tiny retrospective model beats big RL

Rather than PPO‑style training on long trajectories, SAMULE trains a lightweight model (LoRA‑SFT) to generate reflections conditioned on the agent’s trajectory. Two payoffs for builders:

- Stability & cost: SFT on curated reflections is cheaper and less brittle than reward‑model + PPO loops on 10k‑token traces.

- Specificity: The model emits trajectory‑specific advice instead of static “best practices.”

Foresight reflection: adapt during the conversation

In interactive settings, SAMULE asks the agent to predict the user’s next message; when reality diverges enough, it triggers a reflection step mid‑dialogue. This is a practical recipe for enterprise bots: compare expected vs. actual user intent (price tolerance, date flexibility, policy constraints), and course‑correct before the session derails.
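A foresight trigger can be sketched in a few lines. The scoring below (one minus the Jaccard overlap of word sets) and the 0.7 threshold are assumptions chosen for simplicity, not the measure SAMULE uses; in practice you would likely swap in an embedding distance or an LLM judge.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric word set of a message."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def divergence(predicted: str, observed: str) -> float:
    """1 - Jaccard overlap: 0.0 means same vocabulary, 1.0 means no shared words."""
    p, o = _tokens(predicted), _tokens(observed)
    if not p and not o:
        return 0.0
    return 1.0 - len(p & o) / len(p | o)


def should_reflect(predicted: str, observed: str, threshold: float = 0.7) -> bool:
    """Trigger a mid-dialogue reflection when the observed reply strays too far."""
    return divergence(predicted, observed) > threshold
```

For example, predicting “Yes, please book the hotel” and receiving “That is over my budget, find something cheaper” shares no words, so `should_reflect` fires and the agent re‑plans before the next turn.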

Numbers that matter (and why)

On realistic planning and tool‑use benchmarks, SAMULE lifts pass/accuracy rates materially. The pattern to notice: failure‑centric, taxonomized feedback scales better than success‑only learning.

Interpretation for practitioners: When tasks are complex and the base success rate is low, mining common failure types (not rare wins) yields larger, more stable gains.

Builder’s playbook: bring SAMULE’s ideas into your stack

1) Instrument your trials. Log every action with inputs/outputs and metadata (cost, policy, timing, geospatial validity).

2) Define an error ontology. Start with five high‑leverage classes:

- Budget & pricing (caps, per‑day allocation)

- Policy constraints (min‑stay, capacity, smoking/pets, eligibility)

- Temporal validity (opening hours, sequence feasibility, buffers)

- Geospatial validity (city mismatch, unreachable routes)

- API/tool protocol (parameter schema, rate/timeout handling)

3) Auto‑label errors (meso). For each trial, label steps with error types + short critiques; keep the taxonomy extensible but sparse.

4) Cluster by error type (macro). For each error type, aggregate trajectories across tasks and synthesize pattern‑level remedies (checklists, invariants, guardrails).

5) Train a small reflection head.

- Input: [instruction, context, trajectory] → Output: merged reflection.

- Use LoRA on a 3B–7B model; keep reflections concise and operational.

6) Add foresight checks in prod. Compute a simple delta‑score between predicted and observed user/tool responses; trigger reflection when the score exceeds a threshold.

7) Wire reflections to action. Treat reflections as control‑plane messages (plan edits, tool‑choice shifts, constraint re‑checks), not just text to read.
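Steps 2 to 4 of the playbook can be sketched as a small data model. The enum values mirror the five starter classes above; the `StepLabel` fields and both helper functions are hypothetical names, and the labels themselves are assumed to come from whatever auto‑labeler you run over the logs.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class ErrorType(Enum):
    """Starter ontology: the five high-leverage classes from step 2."""
    BUDGET = "budget_pricing"
    POLICY = "policy_constraints"
    TEMPORAL = "temporal_validity"
    GEOSPATIAL = "geospatial_validity"
    TOOL_PROTOCOL = "api_tool_protocol"


@dataclass(frozen=True)
class StepLabel:
    """Meso-level label: one error type plus a short critique for one step."""
    task_id: str
    trial_id: str
    step: int
    error: ErrorType
    critique: str


def cluster_by_error(labels: list[StepLabel]) -> dict[ErrorType, list[StepLabel]]:
    """Macro-level grouping: collect labeled steps across tasks by error type."""
    clusters: dict[ErrorType, list[StepLabel]] = defaultdict(list)
    for label in labels:
        clusters[label.error].append(label)
    return dict(clusters)


def tasks_per_error(clusters: dict[ErrorType, list[StepLabel]]) -> dict[ErrorType, int]:
    """Count distinct tasks per error type to see which patterns recur across tasks."""
    return {err: len({lb.task_id for lb in items}) for err, items in clusters.items()}
```

Error types that show up in many distinct tasks are the natural first candidates for pattern‑level remedies, since a checklist written for them pays off across the whole workload.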

Where it can break—and how to guardrail it

- Static taxonomies drift. Schedule periodic ontology refresh; auto‑propose new error types from free‑text critiques.

- Reflection bloat. Enforce a 3–5 bullet limit; prefer checklists over prose.

- Overfitting to one reference plan. During micro‑level synthesis, keep references illustrative, not prescriptive; validate against multiple valid solutions when possible.

- Compute spike during synthesis. Batch trajectories; summarize early; cache per‑error “playbooks.”
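The reflection‑bloat guardrail is easy to enforce mechanically. This is a minimal sketch that assumes reflections arrive as text with `- ` bullets; `cap_reflection` is a hypothetical helper, and dropping non‑bullet prose is a deliberate simplification.

```python
def cap_reflection(text: str, max_bullets: int = 5) -> str:
    """Keep only the first `max_bullets` bullet lines; discard surrounding prose."""
    bullets = [ln.strip() for ln in text.splitlines() if ln.strip().startswith("- ")]
    return "\n".join(bullets[:max_bullets])
```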

What’s next for Cognaptus

For our client automations (travel, support ops, claims, KYC), we’ll pilot a Reflection Service with:

- a shared error ontology per domain,

- a log‑to‑taxonomy pipeline,

- a 3B retrospective model serving reflections via API,

- a foresight trigger middleware for live chats.

We’ll report gains not just in pass rate, but in time‑to‑correction and policy‑violation incidence. If the SAMULE thesis holds, we should see fewer repeated mistakes, faster recoveries, and calmer conversations.

Cognaptus: Automate the Present, Incubate the Future