If AI agents become the economy’s new workforce, what keeps their markets from melting into ours like solder—fast, hot, and hard to undo? DeepMind’s “Virtual Agent Economies” proposes a practical map (and a modest constitution) for that future: treat agent markets as sandboxes and tune their permeability to the human economy. Once you see permeability as the policy lever, the rest of the architecture falls into place: auctions to resolve clashes, mission-led markets to direct effort, and identity rails so agents can be trusted, priced, and sanctioned.

Below I translate the paper’s ideas into an operator’s playbook—what to copy, what to question, and where to start.

1) The two-axis map: origin × permeability

The authors lay out a clean 2×2: economies can emerge intentionally vs. accidentally, and their boundaries can be impermeable vs. permeable. The quadrant that worries them most is accidental + permeable—i.e., the default path where agents simply join human markets at machine speed. The quadrant that unlocks governance is intentional + tunably permeable—where we can thicken or thin the membrane between agent trades and real money/activity.

| | Impermeable (sealed) | Permeable (porous) |
|---|---|---|
| Intentional (designed) | Safety testbeds; regulated sandboxes; controlled exchange windows | Steerable agent markets with dials for capital/computation inflow/outflow |
| Accidental (emergent) | Fragmented enclaves with limited utility | Default risk: agent markets entangle with finance/supply chains at HFT speed |

Why it matters: Permeability is a collective property. No single actor controls it, so guardrails must be standardized and enforceable across platforms. Think “internet peering policy” more than “app setting.”

2) High‑Frequency Negotiation (HFN) is the new HFT

Expect agents to bargain, bid, and re-contract constantly. That creates HFN dynamics: the same reflex arcs that gave us 2010’s flash crash, now transplanted from equities to compute credits, API slots, and data licenses. What changes in policy design?

- Rate limit the membrane, not just the apps. Limit conversion between agent currency and fiat (size, frequency, net open positions). A liquidity firewall beats per-app throttles.

- Circuit breakers for commerce, not just prices. Pause not only trading, but tool access (e.g., cloud run quotas) when cross‑market instability thresholds trip.

- Fairness ≠ equal models. Capability gaps between personal assistants will compound at HFN speeds; equity needs to be designed at the market layer (see auctions below), not by mandating model parity.
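
To make the "rate limit the membrane" idea concrete, here is a minimal sketch, assuming a single gateway mediates every conversion between agent credits and fiat. The class name `MembraneLimiter`, its parameters, and the limits used are hypothetical illustrations, not something the paper specifies.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class MembraneLimiter:
    """Hypothetical gateway cap on agent-credit <-> fiat conversions."""
    max_size: float          # largest single conversion allowed
    max_per_window: int      # conversions allowed per sliding window
    window_seconds: float    # length of the sliding window
    max_net_position: float  # cap on absolute net flow across the membrane
    _times: deque = field(default_factory=deque, init=False)
    _net: float = field(default=0.0, init=False)

    def allow(self, amount: float, now: float) -> bool:
        """amount > 0 converts credits to fiat; amount < 0 converts back."""
        # Drop timestamps that have aged out of the sliding window.
        while self._times and now - self._times[0] >= self.window_seconds:
            self._times.popleft()
        if abs(amount) > self.max_size:
            return False  # size limit
        if len(self._times) >= self.max_per_window:
            return False  # frequency limit
        if abs(self._net + amount) > self.max_net_position:
            return False  # net open position limit
        self._times.append(now)
        self._net += amount
        return True
```

Because the limiter sits at the conversion point rather than inside any one app, the same three caps (size, frequency, net position) apply to every agent that crosses the boundary.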

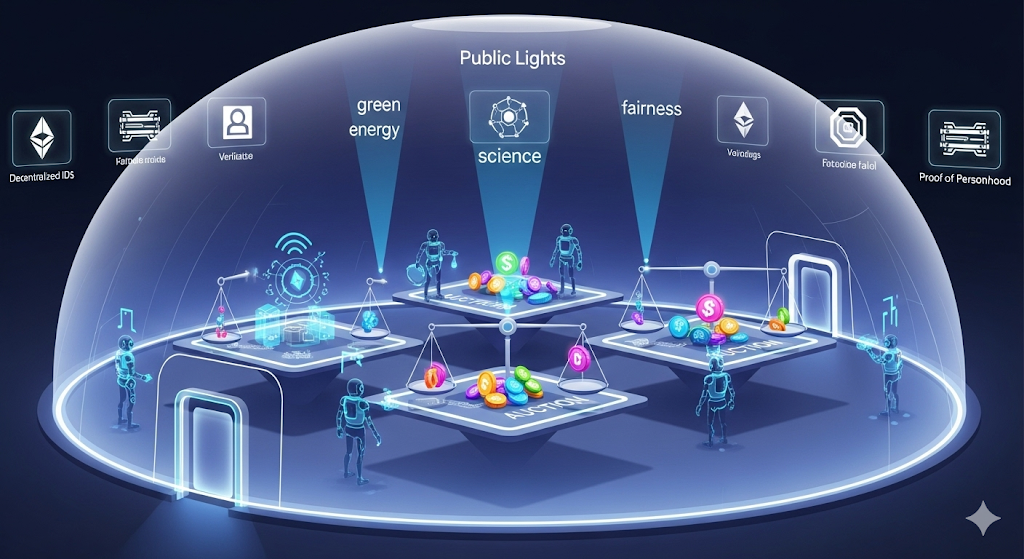

3) Auctions as the fairness engine (Dworkin’s envy‑test, operationalized)

The paper’s most actionable proposal borrows from Dworkin: give each person an equal endowment in an agent currency, and let their agent bid for scarce goods—compute, premium tools, API priority, proprietary datasets, concierge services. The goal is outcomes where no user would prefer someone else’s bundle plus their leftover budget (the envy test).
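
The envy test can be checked mechanically once an allocation exists. The sketch below assumes each user has an additive valuation over items and values leftover credits one-for-one; both are simplifying assumptions, and the function names are hypothetical.

```python
from typing import Dict, List, Tuple

Valuation = Dict[str, float]  # item -> value in credits, for one user


def bundle_value(valuation: Valuation, bundle: List[str], leftover: float) -> float:
    """Value of a bundle plus leftover budget, under one user's valuation."""
    return sum(valuation.get(item, 0.0) for item in bundle) + leftover


def envy_pairs(
    valuations: Dict[str, Valuation],
    bundles: Dict[str, List[str]],
    leftovers: Dict[str, float],
) -> List[Tuple[str, str, float]]:
    """Return (envier, envied, gap) wherever a user prefers another's outcome."""
    pairs = []
    for a in valuations:
        own = bundle_value(valuations[a], bundles[a], leftovers[a])
        for b in valuations:
            if a == b:
                continue
            other = bundle_value(valuations[a], bundles[b], leftovers[b])
            if other > own:
                pairs.append((a, b, other - own))
    return pairs
```

An empty result means the allocation passes the envy test under the stated valuations; a non-empty result lists who envies whom and by how much.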

What this fixes

- Unequal AI capability: If the scarce resource is not the agent itself but the shared pool (compute/time/data slots), equal endowments rebalance bargaining power.

- Preference aggregation: Auctions convert messy multi-user, multi-constraint tradeoffs into prices that reflect revealed priority.

What this doesn’t fix

- Strategic skews: Smarter agents may still bid better; oversight must police manipulation (collusion, spoofing, griefing) and require explainable bid rationales for high-stakes lots.

- Participation gaps: People without agents (or who opt out) still need representation—via public trustees or floor allocations.

Implementation cheat‑sheet

- Lot taxonomy: {Compute blocks; Tool licenses; API QoS tiers; Data slices; Human‑in‑the‑loop hours}.

- Auction cadence: Micro (continuous) for elastic resources, Batch (hourly/daily) for high-stakes items with human review.

- Spending rails: Tiered approvals (agent can spend up to X autonomously; above X, require explicit user/enterprise policy sign‑off).

- Fairness audit: Publish post‑auction envy metrics and dispersion stats; stress‑test against capability gradients.
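
The batch cadence and spending rails above can be sketched together. This is an illustration under stated assumptions: one sealed bid per agent, identical lots, and a uniform price set at the highest losing valid bid. The article does not prescribe a pricing rule, so that choice and all names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Bid:
    agent: str
    amount: float
    user_approved: bool = False  # explicit sign-off for spends above the limit


def run_batch_auction(
    bids: List[Bid],
    budgets: Dict[str, float],
    units: int,
    autonomous_limit: float,
) -> Dict[str, float]:
    """Allocate `units` identical lots and return {winner: price paid}.

    Assumed rule: uniform price equal to the highest losing valid bid
    (0.0 when no valid bid loses). `budgets` is updated in place.
    """
    # Spending rails: a bid must fit the budget, and anything above the
    # autonomous limit needs explicit user or enterprise approval.
    valid = [
        b for b in bids
        if b.amount <= budgets.get(b.agent, 0.0)
        and (b.amount <= autonomous_limit or b.user_approved)
    ]
    ranked = sorted(valid, key=lambda b: b.amount, reverse=True)
    winners, losers = ranked[:units], ranked[units:]
    price = losers[0].amount if losers else 0.0
    for w in winners:
        budgets[w.agent] -= price
    return {w.agent: price for w in winners}
```

Starting every agent with the same budget is what makes this an equal-endowment auction; the bundles and leftover budgets it produces are the inputs a post-auction fairness audit would examine.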

4) Mission economies: steer the swarm on purpose

Markets won’t auto‑solve climate, public health, or supply‑chain resilience. The authors argue for mission economies—market rules that price public objectives directly (think: compute rebates for net‑zero workloads; bonus credits for validated research replications; penalties for energy‑intensive strategies during grid stress). Two design tips:

- Pay on delivered outcomes, not participation. Use credit assignment to distribute rewards back through contributing agents (searchers → solvers → integrators), so specialization flourishes without freeloading.

- Stay outcome‑agnostic. Mission markets should reward the metric (e.g., CO₂‑abated per compute‑hour) rather than prespecifying the winning tech stack, to avoid top‑down “winner picking.”
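
A minimal sketch of outcome-based credit assignment, assuming the market already has a verified outcome flag and a contribution weight per agent. How those weights are measured is the hard part and is not addressed here; the function name and the proportional split are hypothetical choices.

```python
from typing import Dict


def distribute_reward(
    reward: float,
    contributions: Dict[str, float],
    outcome_verified: bool,
) -> Dict[str, float]:
    """Split a mission reward in proportion to contribution weights.

    Nothing is paid unless the outcome was verified, and agents with
    zero (or negative) weight receive nothing, which discourages freeloading.
    """
    positive_total = sum(w for w in contributions.values() if w > 0)
    if not outcome_verified or positive_total == 0:
        return {agent: 0.0 for agent in contributions}
    return {
        agent: reward * max(w, 0.0) / positive_total
        for agent, w in contributions.items()
    }
```

For example, a reward of 100 credits with weights of 1, 3, and 1 for a searcher, solver, and integrator pays 20, 60, and 20 credits, and pays nothing at all if the outcome fails verification.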

5) Identity, reputation, and accountability: the rails

A steerable market needs machine‑readable trust. The stack looks like this:

- DIDs (decentralized identifiers) for agent identities (ephemeral `did:key` for disposable workers; durable `did:ion` for enterprise/state agents).

- VCs (verifiable credentials) as reputation portfolios: successful deliveries, certified skills, safety clearances, resource quotas, and compliance attestations.

- Proof‑of‑Personhood for human‑benefit allocations (UBI‑like credits, civic services), to stop Sybil farms from siphoning value while preserving privacy where possible.

- Protocol layer: A2A/MCP/AgentDNS/auction exchanges to make capabilities discoverable, billable, and enforceable under shared rules.

Governance hooks: Credential revocation, graduated sanctions, and “no‑transact” lists for unverified agents; legal duty for platforms to run oversight agents and publish incident post‑mortems.
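
The governance hooks can be pictured as a gate at the service edge. The sketch below uses plain in-memory data structures rather than any real DID or VC library; cryptographic verification is assumed to have happened upstream, and the class names, credential labels, and three-strike threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class AgentRecord:
    did: str                                    # placeholder identifier string
    credentials: Set[str] = field(default_factory=set)
    strikes: int = 0


@dataclass
class EdgeGate:
    required: Set[str]                          # credentials needed to transact
    revoked: Set[Tuple[str, str]] = field(default_factory=set)  # (did, credential)
    no_transact: Set[str] = field(default_factory=set)
    ban_after: int = 3                          # strikes before listing (assumed)

    def decide(self, agent: AgentRecord) -> str:
        """Return "allow", "throttle", or "deny" for this agent."""
        if agent.did in self.no_transact:
            return "deny"
        live = {c for c in agent.credentials if (agent.did, c) not in self.revoked}
        if not self.required <= live:
            return "deny"       # missing or revoked credential
        if agent.strikes > 0:
            return "throttle"   # graduated sanction short of a ban
        return "allow"

    def record_violation(self, agent: AgentRecord) -> None:
        """Add a strike; list the agent as no-transact at the threshold."""
        agent.strikes += 1
        if agent.strikes >= self.ban_after:
            self.no_transact.add(agent.did)
```

Keeping revocation and the no-transact list as separate sets mirrors the distinction in the text: revocation withdraws a specific attestation, while listing removes the agent from the market entirely.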

6) Operator checklist: what to do this quarter

- Define your permeability policy. Choose what can cross the membrane (fiat↔agent‑credits, tools↔agents, data↔tasks) and at what cadence/limits.

- Stand up a pilot auction. Start with one scarce resource, such as API QoS minutes, and run a daily sealed‑bid auction with equal endowments. Measure envy, dispersion, and user satisfaction.

- Issue credentials. Create a minimal VC schema (completed‑jobs, SLO adherence, safety clearance). Enforce VC checks at your service edges.

- Adopt an identity method. Ephemeral DIDs for throwaway agents; long‑lived DIDs for agents that hold budgets or touch PII.

- Install circuit breakers. Define instability thresholds (price impact, error bursts, correlation spikes). On trigger, pause tool access and narrow exchange bands.

- Publish a mission weight. Pick one public objective relevant to your domain (e.g., reproducible science, energy efficiency). Start paying/discounting in credits on that outcome.
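
As one illustration of the circuit-breaker item in this checklist, here is a minimal sketch, assuming instability metrics are already computed elsewhere and passed in as a dictionary. The threshold values, metric keys, and the narrowed band width are hypothetical placeholders to be tuned per market.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Thresholds:
    max_price_impact: float
    max_error_rate: float
    max_correlation: float


@dataclass
class MarketState:
    tools_enabled: bool = True
    exchange_band: float = 0.10  # allowed +/- deviation on the credit/fiat rate


def check_breaker(
    metrics: Dict[str, float],
    limits: Thresholds,
    state: MarketState,
    narrowed_band: float = 0.02,
) -> List[str]:
    """Return the tripped threshold names and tighten `state` if any tripped."""
    tripped = []
    if metrics["price_impact"] > limits.max_price_impact:
        tripped.append("price_impact")
    if metrics["error_rate"] > limits.max_error_rate:
        tripped.append("error_rate")
    if metrics["correlation"] > limits.max_correlation:
        tripped.append("correlation")
    if tripped:
        state.tools_enabled = False  # pause tool access
        state.exchange_band = min(state.exchange_band, narrowed_band)
    return tripped
```

Returning the list of tripped thresholds, rather than a bare boolean, gives the incident post-mortem a record of which signal fired.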

7) What we’re skeptical about

- “New currency solves contagion”: A branded agent currency is only a buffer if conversion windows are narrow, capped, and supervised. Otherwise it’s lipstick on leverage.

- “Auctions are sufficient”: Auctions align allocation, not conduct. You’ll still need antitrust‑style rules (no bid pooling, no self‑preferencing in exchanges) and audit rights on agent strategies.

- “Top‑down missions scale”: Mission markets must be outcome‑priced and plural—several civic goals can (and will) conflict. Treat them as tunable weights, not commandments.

8) One‑page glossary (operator‑friendly)

- Permeability: How easily money, data, actions, and risk cross between agent and human economies.

- HFN: High‑Frequency Negotiation—agents’ micro‑bidding/renegotiation loops at machine speed.

- DID / VC: Decentralized identity and verifiable credentials—cryptographic identity and attestations machines can verify.

- Proof‑of‑Personhood: Ways to prove “one real human” behind an account without exposing their identity.

- Envy test (Dworkin): No agent would prefer another’s acquired bundle plus leftover budget over its own.

Bottom line: Treat permeability as a product decision, not an accident. Build auctions to ration what’s scarce, missions to aim the swarm, and identity rails to make trust programmable. That’s how we get the upside of agent economies—without inheriting their worst reflexes.

—Cognaptus: Automate the Present, Incubate the Future