TL;DR

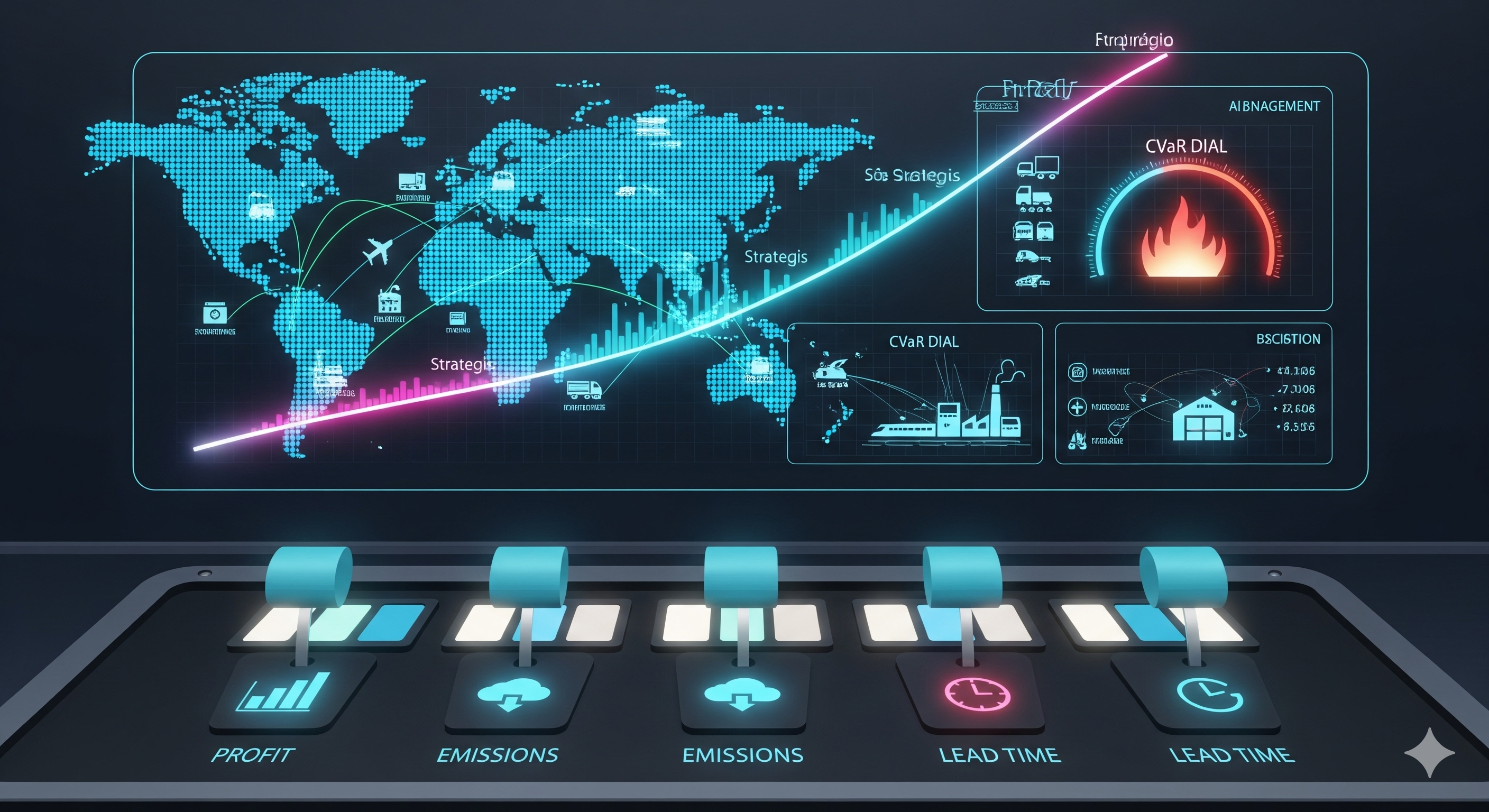

Most “multi‑objective” solutions collapse trade‑offs into a single number. MORSE keeps the trade‑offs alive: it evolves a Pareto front of policies, not just solutions, so operators can switch policies in real time as priorities shift (profit ↔ emissions ↔ lead time). Add a CVaR (conditional value at risk) knob and the system becomes tail‑risk aware, reducing catastrophic outcomes without babysitting.

Why this matters (for operators & P&L owners)

Supply chains live in tension: service levels vs working capital, speed vs emissions, resilience vs cost. Traditional methods either:

- re‑optimize slowly when the world changes, or

- bake in fixed weights that age poorly.

MORSE treats policy selection as a portfolio choice. You keep a diverse population of trained neural policies, each embodying a different trade‑off. When carbon taxes spike or lanes get sanctioned, you hot‑swap to a policy that’s already good for that regime rather than retraining from scratch.

The big idea

- Population search over policy parameters. Use a multi‑objective evolutionary algorithm (MOEA; think NSGA‑II) to evolve policy networks, evaluating each on multiple rewards (profit↑, emissions↓, lead time↓).

- Non‑dominated sorting pushes the population toward the Pareto front, while crowding distance keeps it spread out, so you get broad coverage of feasible trade‑offs.

- Two‑headed action: continuous replenishment (Gaussian, scaled to feasible order size) + discrete transport mode (softmax over truck/rail/air), enabling both scale and mode shifts.

- Risk‑aware training with CVaR. Re‑score policies by CVaR at level α per objective using episode return distributions; preferentially keep policies with better tail behavior (e.g., fewer worst‑case delays or emission spikes).
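The sorting and crowding steps above can be sketched in plain Python. This is a minimal version of NSGA-II's fast non-dominated sort and crowding distance, assuming every objective is expressed as a cost to minimize (so profit enters as negative profit); the function names and the objective values are illustrative, not taken from the MORSE paper.

```python
from typing import Dict, List, Sequence

Point = Sequence[float]  # one policy's objective vector, all objectives minimized


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b on every objective and strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(points: List[Point]) -> List[List[int]]:
    """Fast non-dominated sort: returns fronts as lists of indices, best front first."""
    n = len(points)
    dominated = [[] for _ in range(n)]  # dominated[i]: indices that i dominates
    dom_count = [0] * n                 # dom_count[i]: how many points dominate i
    fronts: List[List[int]] = [[]]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(points[i], points[j]):
                dominated[i].append(j)
            elif dominates(points[j], points[i]):
                dom_count[i] += 1
        if dom_count[i] == 0:
            fronts[0].append(i)
    k = 0
    while fronts[k]:
        nxt = []
        for i in fronts[k]:
            for j in dominated[i]:
                dom_count[j] -= 1
                if dom_count[j] == 0:  # every dominator of j sits in an earlier front
                    nxt.append(j)
        fronts.append(nxt)
        k += 1
    return fronts[:-1]  # drop the trailing empty front


def crowding_distance(points: List[Point], front: List[int]) -> Dict[int, float]:
    """Sum of normalized neighbor gaps per objective; boundary points get infinity."""
    dist = {i: 0.0 for i in front}
    if len(front) == 0:
        return dist
    for m in range(len(points[front[0]])):
        ordered = sorted(front, key=lambda i: points[i][m])
        lo, hi = points[ordered[0]][m], points[ordered[-1]][m]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")
        if hi == lo:
            continue  # all equal on this objective: no spread to measure
        for k in range(1, len(ordered) - 1):
            gap = points[ordered[k + 1]][m] - points[ordered[k - 1]][m]
            dist[ordered[k]] += gap / (hi - lo)
    return dist


# Objectives: (-profit, emissions, lead time); illustrative numbers only.
pts = [(-100, 50, 5), (-90, 30, 6), (-80, 40, 7), (-110, 80, 4), (-70, 60, 9)]
fronts = non_dominated_sort(pts)           # [[0, 1, 3], [2], [4]]
crowd = crowding_distance(pts, fronts[0])  # {0: 3.0, 1: inf, 3: inf}
```

Front 0 holds the non-dominated policies. Within a front, a larger crowding distance marks a policy in a sparser region of the trade-off, which NSGA-II prefers when it has to truncate the population.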

What’s actually new

- A Pareto front of policies instead of a one‑size‑fits‑all policy. Operators get a real‑time switchboard of strategies.

- CVaR‑trained Pareto front: a parallel front that explicitly shrinks the tails (e.g., for lead time and emissions) even if the mean stays flat. This is the practical bridge between RL and real‑world risk management.

- Adaptive scenario handling: When emission penalties kick in or geopolitical costs rise, switching to a different policy preserves profit while keeping other KPIs within bounds.
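To see how a front can shrink tails while the mean stays flat, here is a minimal empirical CVaR estimator. It assumes α denotes the tail fraction (α = 0.25 averages the worst 25% of episodes) and that the objective is a loss where larger is worse; some texts instead write the confidence level 1 − α. The episode values are made up for illustration.

```python
import math
from statistics import mean
from typing import Sequence


def empirical_cvar(losses: Sequence[float], alpha: float) -> float:
    """Average of the worst ceil(alpha * n) outcomes, where larger means worse.

    Assumption: alpha is the tail fraction (0.25 = worst 25% of episodes).
    For a maximized objective such as profit, pass the negated returns.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    if len(losses) == 0:
        raise ValueError("need at least one episode")
    worst_first = sorted(losses, reverse=True)
    k = max(1, math.ceil(alpha * len(worst_first)))
    return mean(worst_first[:k])


# Per-episode lead times (days) for two hypothetical policies with the same mean.
policy_a = [5, 5, 5, 5, 5, 5, 5, 5]
policy_b = [4, 4, 4, 4, 4, 4, 7, 9]

print(mean(policy_a), mean(policy_b))                                  # 5 5
print(empirical_cvar(policy_a, 0.25), empirical_cvar(policy_b, 0.25))  # 5 8
```

Both policies average 5 days, but re-scoring by CVaR ranks policy A ahead because its worst quarter of episodes averages 5 days versus 8 for policy B.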

What the paper shows (translated for ops)

- Scenarios: three inventory networks (3‑node seasonal, 3‑node Poisson, 5‑node Poisson).

- Shocks: (a) emission tax introduced mid‑run; (b) +10% cost surge (geopolitics).

- Outcome: Switching across the Pareto front protects P&L under shocks and manages the emissions/lead‑time budget better than staying with a single policy.

- Benchmarks: Outperforms CAPQL and MONES on this inventory case across objectives.

Quick visual comparison

| Approach | How it balances objectives | When it fails |
|---|---|---|
| Scalarization (fixed weights) | Collapses to one score; simple training | Non‑convex fronts; weights age badly; no diversity |
| Multi‑policy MORL (inner‑loop) | Learns several policies in one training run | May lack broad diversity; algorithmic complexity |
| MORSE (evolution over policies) | Explicit Pareto front of policies; easy to switch; parallelizable | More simulation budget needed; requires policy catalog governance |

Implementation cheat‑sheet (for a pilot)

Data inputs: demand history (allowing for seasonal Poisson demand), lane distances, lead‑time distributions, cost schedule (item, transport, holding, backlog), storage caps, emission factors per mode.
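When history is thin, a seasonal Poisson demand stream is easy to simulate for a pilot. The sketch below assumes a sinusoidal seasonal rate λ_t = base · (1 + amplitude · sin(2πt / period)); the functional form and the parameter names are hypothetical choices, not the paper's demand model.

```python
import numpy as np


def seasonal_poisson_demand(n_periods: int, base_rate: float, amplitude: float,
                            period: int, seed: int = 0):
    """Sample demand d_t ~ Poisson(lambda_t) with a sinusoidal seasonal rate.

    Assumed form: lambda_t = base_rate * (1 + amplitude * sin(2*pi*t / period)).
    amplitude must lie in [0, 1) so the rate stays positive.
    """
    if not 0.0 <= amplitude < 1.0:
        raise ValueError("amplitude must be in [0, 1)")
    t = np.arange(n_periods)
    rate = base_rate * (1.0 + amplitude * np.sin(2.0 * np.pi * t / period))
    rng = np.random.default_rng(seed)
    return rng.poisson(rate), rate


# Two years of weekly demand around 20 units/week with a +/-50% seasonal swing.
demand, rate = seasonal_poisson_demand(n_periods=104, base_rate=20.0,
                                       amplitude=0.5, period=52)
```

Returning the rate alongside the samples makes it easy to check later whether a policy is reacting to the seasonal signal or to Poisson noise.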

Rewards (design as business KPIs):

- Profit: revenue − ordering − transport − holding − backlog.

- Emissions: per‑shipment emission factor (by mode) × distance × volume.

- Lead time: per‑order realized transit/fulfillment time.
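The three reward signals above can be computed per period as in this sketch. The `Shipment` and `Costs` structures, the per-unit-km cost and emission parameters by mode, and the choice to report lead time as a simple average over the orders in the period are all illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Tuple


@dataclass
class Shipment:
    qty: float            # units shipped
    mode: str             # transport mode, e.g. "truck" or "rail"
    distance_km: float    # lane distance
    transit_days: float   # realized lead time of this order


@dataclass
class Costs:
    price: float                              # revenue per unit sold
    unit_cost: float                          # ordering (item) cost per unit
    transport_per_unit_km: Dict[str, float]   # transport cost by mode
    emission_per_unit_km: Dict[str, float]    # e.g. kg CO2e per unit-km by mode
    holding_per_unit: float                   # per unit on hand at period end
    backlog_per_unit: float                   # per unit of unmet demand


def step_rewards(c: Costs, sales: float, on_hand: float, backlog: float,
                 shipments: List[Shipment]) -> Tuple[float, float, float]:
    """Return (profit, emissions, lead_time) for one period."""
    revenue = c.price * sales
    ordering = c.unit_cost * sum(s.qty for s in shipments)
    transport = sum(c.transport_per_unit_km[s.mode] * s.qty * s.distance_km
                    for s in shipments)
    holding = c.holding_per_unit * on_hand
    backlog_cost = c.backlog_per_unit * backlog
    profit = revenue - ordering - transport - holding - backlog_cost
    emissions = sum(c.emission_per_unit_km[s.mode] * s.distance_km * s.qty
                    for s in shipments)
    # Simple per-order average; a volume-weighted mean is an equally valid choice.
    lead_time = mean(s.transit_days for s in shipments) if shipments else 0.0
    return profit, emissions, lead_time


costs = Costs(price=10.0, unit_cost=4.0,
              transport_per_unit_km={"truck": 0.01, "rail": 0.005},
              emission_per_unit_km={"truck": 0.1, "rail": 0.03},
              holding_per_unit=0.5, backlog_per_unit=2.0)
ships = [Shipment(50, "truck", 200, 2.0), Shipment(30, "rail", 500, 5.0)]
profit, co2, lt = step_rewards(costs, sales=100, on_hand=20, backlog=5, shipments=ships)
# approximately: profit 485.0, co2 1450.0, lt 3.5
```

Keeping the three signals as a tuple rather than a weighted sum is the point: the MOEA ranks policies on the vector, so no weights have to be chosen at training time.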

Action space:

- Replenishment: continuous in [0, cap] via scaled Gaussian.

- Mode: categorical (truck/rail/air…)
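A two-headed action can be sampled as below, assuming the policy network emits a mean and log standard deviation for the order quantity plus one logit per transport mode. Squashing the Gaussian sample through tanh into [0, cap] is one common way to scale it to a feasible order size (clipping is another); the mode list and function names are hypothetical.

```python
import numpy as np

MODES = ("truck", "rail", "air")  # illustrative mode set


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax."""
    z = logits - np.max(logits)
    e = np.exp(z)
    return e / e.sum()


def sample_action(mu: float, log_std: float, mode_logits, cap: float,
                  rng: np.random.Generator):
    """Sample (order quantity in [0, cap], transport mode, mode probabilities)."""
    z = rng.normal(mu, np.exp(log_std))                    # Gaussian head
    qty = cap * 0.5 * (np.tanh(z) + 1.0)                   # squash into [0, cap]
    probs = softmax(np.asarray(mode_logits, dtype=float))  # categorical head
    mode = MODES[rng.choice(len(probs), p=probs)]
    return float(qty), mode, probs


rng = np.random.default_rng(0)
qty, mode, probs = sample_action(mu=0.3, log_std=-1.0,
                                 mode_logits=[2.0, 1.0, -1.0], cap=500.0, rng=rng)
```

Because the squash is bounded, every sampled order respects the storage or order cap by construction, so the simulator never has to repair infeasible actions.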

Training loop:

- Initialize N policy networks (He init).

- Simulate E episodes per policy; record per‑objective returns.

- Non‑dominated sort → fronts; compute crowding distance.

- Tournament selection → crossover+mutation → offspring.

- Repeat G generations.

- Optional risk pass: recompute fitness as CVaR at level α per objective and evolve a risk‑aware front.

- Ship both fronts to production: mean‑optimal and CVaR‑optimal.
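The selection and variation steps of the loop can be sketched on flattened weight vectors. This assumes each policy's parameters are stored as one NumPy array and that front ranks and crowding distances were already computed. The binary crowded-comparison tournament follows NSGA-II; the blend crossover and Gaussian mutation are swapped-in operators that are common for neural weights (reference NSGA-II uses SBX and polynomial mutation, and the paper's exact operators may differ).

```python
from typing import List, Sequence

import numpy as np


def crowded_tournament(rank: Sequence[int], crowding: Sequence[float],
                       rng: np.random.Generator) -> int:
    """Binary tournament: lower front rank wins; ties go to larger crowding distance."""
    i, j = rng.choice(len(rank), size=2, replace=False)
    if rank[i] != rank[j]:
        return int(i if rank[i] < rank[j] else j)
    return int(i if crowding[i] >= crowding[j] else j)


def make_offspring(pop: List[np.ndarray], rank: Sequence[int],
                   crowding: Sequence[float], rng: np.random.Generator,
                   sigma: float = 0.02, p_mut: float = 0.1) -> List[np.ndarray]:
    """Create len(pop) children from flattened policy weight vectors."""
    children = []
    while len(children) < len(pop):
        a = pop[crowded_tournament(rank, crowding, rng)]
        b = pop[crowded_tournament(rank, crowding, rng)]
        w = rng.uniform(size=a.shape)               # per-weight blend coefficients
        child = w * a + (1.0 - w) * b               # blend (arithmetic) crossover
        mutate = rng.uniform(size=a.shape) < p_mut  # which weights to perturb
        child = child + mutate * rng.normal(0.0, sigma, size=a.shape)  # Gaussian mutation
        children.append(child)
    return children


rng = np.random.default_rng(42)
pop = [rng.normal(size=10) for _ in range(6)]  # six toy "policies" of 10 weights each
rank = [0, 0, 1, 1, 2, 2]
crowding = [float("inf"), 1.2, 0.8, float("inf"), 0.5, 0.3]
children = make_offspring(pop, rank, crowding, rng)
```

In a full generation, parents and offspring are then merged, re-sorted into fronts, and truncated back to N, first by front rank and then by crowding distance.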

Deployment loop:

- Monitor real‑time KPIs & constraints → pick policy from the front that matches current weights/limits (e.g., carbon budget tight → low‑emission policy).

- Re‑evaluate tail behavior weekly; promote/demote policies as lanes/regulations shift.
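Picking a policy from the front given current weights and limits might look like this sketch. It assumes each policy carries KPI estimates from simulation, treats limits as upper bounds (e.g., an emissions budget), and min-max normalizes KPIs across the feasible policies so the weights are comparable; the `PolicyCard` type and `pick_policy` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PolicyCard:
    name: str
    profit: float      # expected profit per period (higher is better)
    emissions: float   # expected emissions per period (lower is better)
    lead_time: float   # expected lead time in days (lower is better)


def _minmax(values: List[float]) -> List[float]:
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def pick_policy(front: List[PolicyCard], weights: Dict[str, float],
                limits: Dict[str, float]) -> Optional[PolicyCard]:
    """Drop policies that break an upper-bound limit, then maximize a weighted score."""
    feasible = [p for p in front
                if all(getattr(p, kpi) <= cap for kpi, cap in limits.items())]
    if not feasible:
        return None  # nothing on the front satisfies the limits; the caller decides
    profit = _minmax([p.profit for p in feasible])
    emissions = _minmax([p.emissions for p in feasible])
    lead = _minmax([p.lead_time for p in feasible])
    scores = [weights["profit"] * pr - weights["emissions"] * em - weights["lead_time"] * lt
              for pr, em, lt in zip(profit, emissions, lead)]
    return feasible[max(range(len(feasible)), key=lambda i: scores[i])]


front = [PolicyCard("max_profit", 120.0, 90.0, 3.0),
         PolicyCard("balanced", 100.0, 60.0, 4.0),
         PolicyCard("green", 80.0, 30.0, 6.0)]
w = {"profit": 1.0, "emissions": 0.1, "lead_time": 0.1}
print(pick_policy(front, w, {}).name)                   # max_profit
print(pick_policy(front, w, {"emissions": 70.0}).name)  # balanced
print(pick_policy(front, w, {"emissions": 50.0}).name)  # green
```

Tightening the emissions limit moves the pick from `max_profit` to `balanced` to `green` with the same weights and no retraining.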

Pitfalls & how to avoid them

- Myopia to tails: A mean‑trained policy can look fine until a rare disruption. Keep the CVaR front as a first‑class citizen.

- State design drift: Include pipeline inventory (orders en‑route) and short history windows of orders/demand; otherwise the agent reacts too late.

- Over‑switching: Frequent policy swaps can thrash operations. Add hysteresis/guardrails (e.g., minimum dwell time, budget thresholds).

- Data leakage in scenarios: Separate scenario classes (tax on/off, cost surge on/off) during validation to ensure you’re measuring true adaptability.
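For the over-switching pitfall, a minimal hysteresis guard could sit between the policy selector and production. It assumes a minimum dwell time counted in decision periods and a required score margin before a voluntary swap; the `SwitchGuard` class and its `force` flag (a hypothetical override for hard-constraint breaches such as a blown carbon budget) are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SwitchGuard:
    min_dwell: int                 # periods a policy must stay active before a voluntary swap
    margin: float                  # score improvement required to justify a swap
    current: Optional[str] = None  # policy currently in production
    dwell: int = 0                 # periods since the last swap

    def update(self, proposed: str, current_score: float, proposed_score: float,
               force: bool = False) -> str:
        """Return the policy to run this period, given the selector's proposal."""
        if self.current is None:  # first decision: adopt the proposal
            self.current, self.dwell = proposed, 0
            return self.current
        self.dwell += 1
        wants_switch = proposed != self.current
        allowed = force or (self.dwell >= self.min_dwell
                            and proposed_score >= current_score + self.margin)
        if wants_switch and allowed:
            self.current, self.dwell = proposed, 0
        return self.current


guard = SwitchGuard(min_dwell=3, margin=0.05)
history = [guard.update("balanced", 0.0, 0.0),
           guard.update("green", 0.5, 0.9),    # blocked: dwell 1 < 3
           guard.update("green", 0.5, 0.9),    # blocked: dwell 2 < 3
           guard.update("green", 0.5, 0.52),   # blocked: gain 0.02 < margin 0.05
           guard.update("green", 0.5, 0.9),    # allowed: dwell 4, gain 0.4
           guard.update("balanced", 0.1, 0.2, force=True)]  # hard-constraint override
print(history)
# ['balanced', 'balanced', 'balanced', 'balanced', 'green', 'balanced']
```

The dwell time stops thrashing between near-equivalent policies, while the margin keeps small score fluctuations from triggering a swap at all.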

Where it likely beats your current stack

- Rolling LP/MIP replans under non‑stationary demand: MORSE swaps in an already‑trained policy instead of re‑solving a MILP each time conditions change, which matters when decision SLAs are tight.

- Single‑policy DRL: gives you one compromise forever; MORSE keeps a menu.

- Heuristic safety buffers: CVaR front lets you quantify and price tail risk instead of hand‑tuning buffers.

What I’d test in a Cognaptus pilot

- Shadow‑mode A/B on one DC: run MORSE in parallel, recommend policy switches; measure P&L, SLA, CO₂e, lateness tails.

- Stress book: scripted shocks (lane closure, sudden carbon tax, supplier outage) to exercise switch logic and CVaR gains.

- Human‑in‑the‑loop: allow operators to veto/approve switches and log rationales; feed that as constraints or preference priors in future generations.

Bottom line

MORSE converts multi‑objective RL from a “pick‑your‑weights” art into a policy portfolio you can actually operate. With a CVaR‑aware front, you can pursue profit while reining in worst‑case emissions and lead time, then hot‑swap when the world changes.

Cognaptus: Automate the Present, Incubate the Future