The pitch: We’ve stretched LLM “depth” by making models think longer. ParaThinker flips the axis—training models to think wider: spawn several independent lines of thought in parallel and then fuse them. The result is higher accuracy than single‑path “long thinking” at roughly the same wall‑clock time—and it scales.

TL;DR for operators

- What it is: An end‑to‑end framework that natively generates multiple reasoning paths with special control tokens, then summarizes using cached context.

- Why it matters: It tackles the test‑time scaling bottleneck (aka Tunnel Vision) where early tokens lock a model into a suboptimal path.

- Business takeaway: You can trade a bit of GPU memory for more stable, higher‑quality answers at nearly the same latency—especially on math/logic‑heavy tasks and agentic workflows.

The problem: “Think longer” hits a wall

Sequential test‑time scaling (à la o1 / R1‑style longer CoT) delivers diminishing returns. After a point, more tokens don’t help; they reinforce early mistakes. ParaThinker names this failure mode Tunnel Vision—the first few tokens bias the entire trajectory. If depth traps us, width can free us.

The core idea: Native parallel thinking

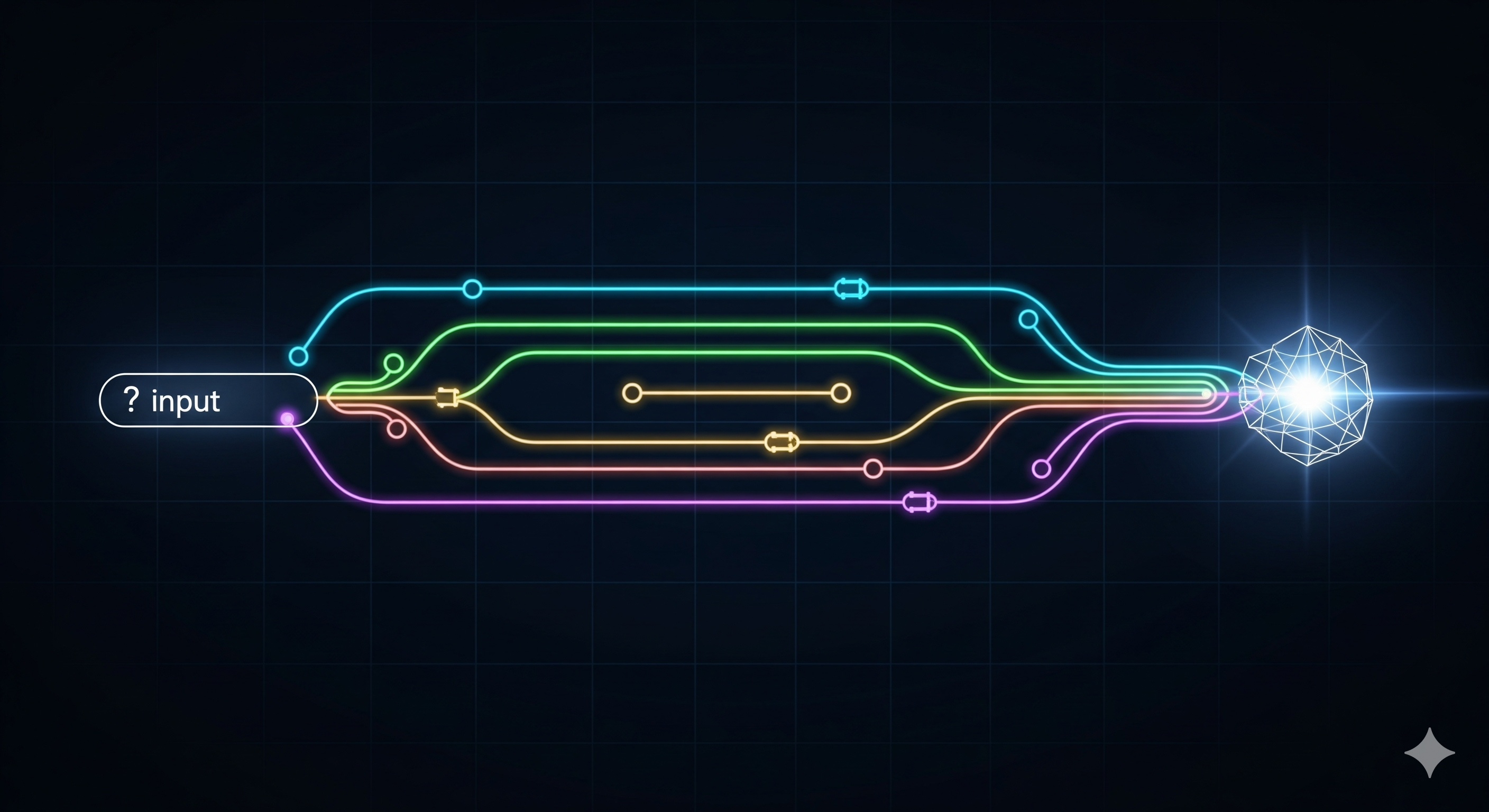

ParaThinker adds parallelism inside the model’s generation loop, not as an external sampling trick.

- Control tokens: Trainable `<think i> ... </think i>` markers seed distinct reasoning streams; `<summary> ... </summary>` wraps the merger.

- Thought‑specific positional embeddings: Each stream gets a learned identity embedding added to keys/values so the summarizer can tell paths apart without positional collisions.

- Two‑phase attention masks:

  - Parallel reasoning: each path can attend to the prompt + itself only (no cross‑talk).

  - Summarization: the final decoder attends to all paths + prompt (controlled integration).

- KV‑cache reuse: The merger reuses the per‑path KV caches (no re‑prefill), implemented atop vLLM/PagedAttention, so width barely stretches latency.
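The two-phase masking described above can be sketched in plain Python. This is a minimal illustration rather than ParaThinker's actual implementation: the function name `build_mask`, the flat token layout (prompt, then paths back to back, then summary), and the boolean-matrix representation are all assumptions made for this example.

```python
def build_mask(prompt_len, path_lens, summary_len):
    """Return mask[q][k] == True when query token q may attend to key token k.

    Assumed layout (hypothetical): prompt tokens, then each reasoning path
    back to back, then the summary tokens.
    """
    # Segment id per token: -1 = prompt, 0..P-1 = path index, P = summary.
    seg = [-1] * prompt_len
    for i, n in enumerate(path_lens):
        seg += [i] * n
    summary_id = len(path_lens)
    seg += [summary_id] * summary_len

    total = len(seg)
    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        for k in range(q + 1):  # causal: never attend to later tokens
            if seg[q] == summary_id:
                # Summarization phase: prompt + all paths + earlier summary.
                mask[q][k] = True
            elif seg[k] == -1 or seg[k] == seg[q]:
                # Parallel phase: prompt + own path only (no cross-talk).
                mask[q][k] = True
    return mask


if __name__ == "__main__":
    # 2 prompt tokens, two paths of 2 tokens each, 1 summary token.
    for row in build_mask(2, [2, 2], 1):
        print("".join("x" if allowed else "." for allowed in row))
```

In the printed grid, the rows for the second path show gaps over the first path's columns, while the final summary row is fully filled in.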

What the numbers imply

On rigorous math benchmarks (AIME’24/’25, AMC’23, MATH‑500):

- With P = 8 parallel paths, small models gain big (≈ +12.3% accuracy on average for the 1.5B model; +7.5% for the 7B model), with only ~7% latency overhead.

- ParaThinker consistently beats majority voting under the same total token budget because the learned summarizer integrates evidence better than simple vote counts.

- A smart termination rule—stop when the first path finishes—keeps path lengths balanced and improves both accuracy and speed.

Practical read: Width lets a 1.5B–7B class model behave like a much larger sequential model for reasoning tasks, without paying the long‑trace latency tax.

Where this slots into your stack

Good fits

- Automated math/logic grading, code repair, theorem‑style proofs.

- Agentic chains where a wrong early step can cascade (planning, tool calls, data wrangling). Parallel streams hedge that early step risk.

Watch‑outs

- Memory grows with paths `P` (more KV). Try 2→4→8; measure.

- Open‑ended outputs (docs/code) benefit from summarization; simple numeric/MCQ tasks may still get mileage from majority voting atop ParaThinker.
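To get a feel for how KV memory grows with the number of paths, a back-of-the-envelope estimate helps before running the 2→4→8 sweep. The sketch below uses the standard per-token KV size (keys plus values, per layer, per KV head). The model dimensions in the example are illustrative placeholders, not the configuration of any specific model, and sharing the prompt's KV cache across paths is an assumption of this sketch.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, tokens, bytes_per_elem=2):
    """Bytes of KV cache for `tokens` tokens (factor 2 = keys + values)."""
    return 2 * num_layers * num_kv_heads * head_dim * tokens * bytes_per_elem


def parathinker_kv_bytes(cfg, prompt_tokens, paths, per_path_tokens, summary_tokens=0):
    """Estimate for one request, assuming the prompt's KV is stored once
    and shared by all paths (an assumption, not a measured property)."""
    tokens = prompt_tokens + paths * per_path_tokens + summary_tokens
    return kv_cache_bytes(tokens=tokens, **cfg)


if __name__ == "__main__":
    # Hypothetical small-model dimensions, fp16 cache (2 bytes per element).
    cfg = dict(num_layers=28, num_kv_heads=2, head_dim=128, bytes_per_elem=2)
    for p in (1, 2, 4, 8):
        gib = parathinker_kv_bytes(cfg, prompt_tokens=512, paths=p,
                                   per_path_tokens=8192) / 2**30
        print(f"P={p}: ~{gib:.2f} GiB of KV cache per request")
```

Treat the result as a lower bound for capacity planning: real serving stacks add block-allocation overhead and batch multiple requests.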

How it differs from “parallel‑ish” baselines

| Approach | Where parallelism lives | How answers are combined | Pros | Cons |
|---|---|---|---|---|
| Sequential long CoT | Depth only | N/A | Simple | Tunnel Vision; diminishing returns |
| Self‑consistency / Majority Voting | Outside the model (sample many) | Vote or pick best | Easy; improves accuracy | Token‑hungry; weak for open‑ended outputs |
| Re‑prefill concat | Outside; concat all paths | Second pass with long context | Conceptually simple | Costly; positional decay; accuracy degrades as paths grow |
| ParaThinker | Inside: parallel paths and learned merger | Learned summary over path‑tagged KV caches | Accuracy gains under same budget; minimal latency | Needs SFT with special tokens; more KV memory |
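For reference, the majority-voting baseline in the table is simple enough to sketch in a few lines. This is a generic illustration of self-consistency voting over final answers, not code from the ParaThinker work; the function name `majority_vote` and the exact-match comparison after whitespace stripping are assumptions of this example.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent final answer, or None if there are none.

    Answers are compared by exact string match after stripping whitespace.
    """
    counts = Counter(a.strip() for a in answers if a is not None)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    # Short numeric answers collapse into clear vote counts.
    print(majority_vote(["42", " 42", "41", "42"]))
    # Free-form outputs rarely match exactly, so every "vote" is unique.
    print(majority_vote(["Sort, then scan.", "Scan after sorting.", "Use a heap."]))
```

The second call shows why the table marks voting as weak for open-ended outputs: with no two strings identical, the count carries no signal, which is the gap a learned summarizer is meant to fill.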

Design choices that matter

- Learned thought embeddings beat “flattened positions” because RoPE’s long‑range decay otherwise down‑weights early paths and skews the merger.

- First‑finish termination outperforms waiting for all paths—keeps per‑path budgets aligned and prevents one path from dominating the summary window.

- KV‑cache reuse is the deployment unlock: no second pass, no copy—just stitch the caches and decode the summary.
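The first-finish rule from the list above can be illustrated with a toy decoding loop. This is a sketch under stated assumptions: each path is represented by a hypothetical `step` callable that returns its next token, all paths advance one token per iteration (as in a batched decode step), and `"<eos>"` is a placeholder end marker rather than ParaThinker's actual control token.

```python
def decode_first_finish(step_fns, max_tokens, eos="<eos>"):
    """Advance all paths in lockstep; stop every path once any one finishes."""
    paths = [[] for _ in step_fns]
    for _ in range(max_tokens):
        someone_finished = False
        for path, step in zip(paths, step_fns):
            token = step()
            if token == eos:
                someone_finished = True
            else:
                path.append(token)
        if someone_finished:
            break  # remaining paths are cut off at (nearly) equal length
    return paths


def scripted(tokens, eos="<eos>"):
    """Hypothetical stand-in for a model: replays tokens, then emits eos."""
    it = iter(tokens)
    return lambda: next(it, eos)


if __name__ == "__main__":
    paths = decode_first_finish(
        [scripted(["a", "b", "c", "d", "e"]), scripted(["x", "y"])],
        max_tokens=16,
    )
    print(paths)
```

Because every path is cut when the shortest one ends, path lengths differ by at most one token, which is what keeps per-path budgets aligned going into the summary.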

Ops checklist (pilot recipe)

- Start small: `P=2` or `P=4`, per‑path budget `8K–16K`. Track accuracy, latency, VRAM.

- Budget discipline: Fix total token budget; compare sequential vs ParaThinker apples‑to‑apples.

- Latency SLOs: Measure p95 latency at your target batch size; expect near‑flat scaling to `P=4–8`.

- Guardrails: Keep a light external verifier for safety‑critical domains; ParaThinker reduces but doesn’t eliminate bad paths.
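The budget-discipline and latency items in the checklist can be turned into two small helpers for a pilot. This is a minimal sketch: `pilot_grid` and `p95` are hypothetical names, the even split of a fixed total budget across paths is one reasonable way to keep the comparison apples-to-apples, and `p95` uses the nearest-rank definition of a percentile.

```python
def pilot_grid(total_budget, widths=(1, 2, 4, 8)):
    """Split one fixed token budget evenly across each candidate width.

    paths=1 is the sequential baseline under the same total budget.
    """
    return [{"paths": p, "per_path_tokens": total_budget // p} for p in widths]


def p95(latencies):
    """Nearest-rank 95th percentile of a list of latency samples."""
    if not latencies:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return ordered[rank - 1]


if __name__ == "__main__":
    # A 32K total budget gives 16K per path at P=2 and 8K per path at P=4.
    for run in pilot_grid(32768):
        print(run)
```

Run each grid entry at your target batch size, record per-request latencies, and compare `p95` across widths alongside accuracy and VRAM.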

Strategic take

The industry’s default instinct, “give it more steps,” confuses thinking with wandering. ParaThinker reframes test‑time scaling along a width axis that better matches how GPUs like to work (higher arithmetic intensity) and how cognition avoids fixation (divergent → convergent). For enterprises, that translates into cheaper reasoning wins on existing hardware and a cleaner path to small‑model adoption where latency is king.

Bottom line: If your workloads suffer from brittle first steps, add width before you add depth.

Cognaptus: Automate the Present, Incubate the Future.