When agent frameworks stall in the real world, the culprit is rarely just a bad prompt. It’s the wiring: missing validators, brittle control flow, no explicit state, and second-hop retrieval that never gets the right handle. Maestro proposes something refreshingly uncompromising: optimize both the agent’s graph and its configuration together, with hard budgets on rollouts, latency, and cost—and let textual feedback from traces steer edits as much as numeric scores.

TL;DR: Maestro treats agents as typed computation graphs and alternates between a C‑step (config tuning on a fixed graph) and a G‑step (local graph edits under a trust region), guided by evaluator rationales. The reported result is higher accuracy with far fewer rollouts than prompt-only optimizers: on HotpotQA, about 420 rollouts to reach 72%, versus roughly 6.4k for GEPA.

Why this matters (and why prompt-only hits a wall)

Our readers have seen this pattern: reflective prompt evolution (GEPA), surrogate search (MIPROv2), or even RL-style tuning (GRPO) can polish a pipeline—but can’t add capabilities. If your HotpotQA agent never extracts entities between hops, no amount of rewording will consistently recover the missing term. Maestro’s answer is structural: add a node.

Common failure → Structural fix

- Instruction drift / loops → Introduce validators and conditional retries.

- Lost global state → Add explicit memory/state nodes and read/write policies.

- Second-hop paralysis → Insert entity extraction to feed reformulation.

- Arithmetic hallucinated by the LLM → Add numeric compute tools instead.

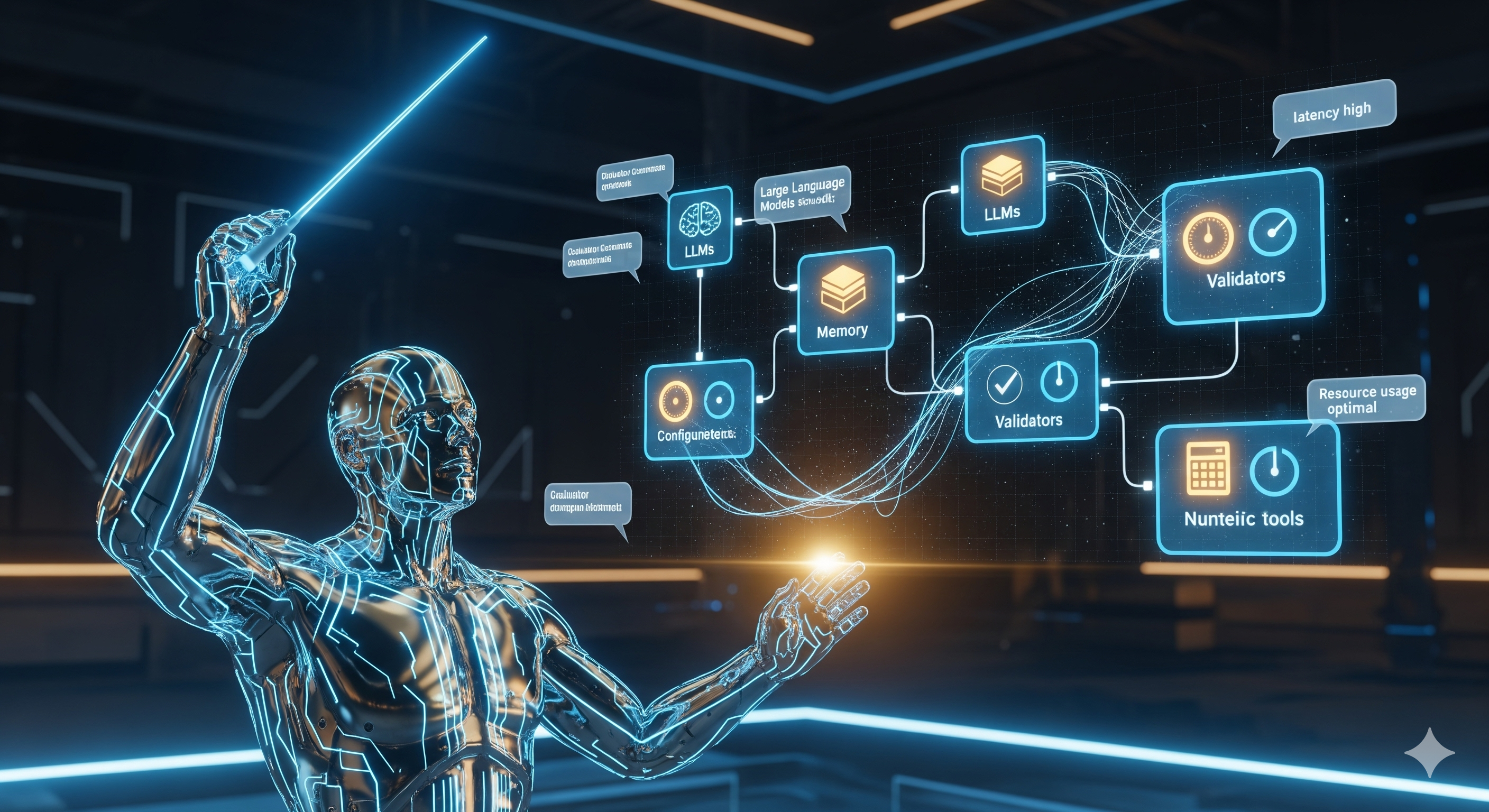

The big idea in one diagram (conceptual)

- Agent = DAG with adapters & merges. Nodes wrap LLM+tools; edges carry adapters; merges define how multiple inputs combine.

- Two alternating moves:

  - C‑step: tune prompts/models/tools/knobs given a fixed graph under a rollout budget.

  - G‑step: propose small graph edits (add/rewire/insert validators, memory, tools) under an edit-distance trust region.

- Guarded acceptance: only keep edits that raise a budgeted objective; reflectively use evaluator text to choose where to edit next.
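To make the alternation concrete, here is a minimal sketch of a C‑step/G‑step loop with guarded acceptance. It is not Maestro's implementation: the graph is reduced to a set of node names, the edit distance is a simple symmetric difference, and `toy_score`, `toy_configs`, and `toy_graphs` are hypothetical stand-ins for real rollouts and proposal generators.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

Graph = FrozenSet[str]      # toy assumption: a graph is just its set of node names
Config = Dict[str, float]   # toy assumption: one numeric knob per key


@dataclass
class Budget:
    rollouts_left: int

    def spend(self) -> bool:
        """Consume one rollout; return False once the budget is exhausted."""
        if self.rollouts_left <= 0:
            return False
        self.rollouts_left -= 1
        return True


def edit_distance(a: Graph, b: Graph) -> int:
    """Count nodes added or removed between two toy graphs."""
    return len(a ^ b)


def optimize(graph, config, score, propose_configs, propose_graphs,
             budget, radius=1, rounds=3):
    """Alternate C-steps and G-steps, keeping only strict improvements."""
    if not budget.spend():
        return graph, config, float("-inf")
    best = score(graph, config)
    for _ in range(rounds):
        # C-step: the graph is fixed, only the configuration changes.
        for cand in propose_configs(config):
            if not budget.spend():
                return graph, config, best
            s = score(graph, cand)
            if s > best:  # guarded acceptance
                config, best = cand, s
        # G-step: the configuration is fixed, only small graph edits are tried.
        for cand in propose_graphs(graph):
            if edit_distance(graph, cand) > radius:
                continue  # outside the trust region, skipped without a rollout
            if not budget.spend():
                return graph, config, best
            s = score(cand, config)
            if s > best:  # guarded acceptance
                graph, best = cand, s
    return graph, config, best


# Hypothetical objective and proposal generators, for illustration only.
def toy_score(graph: Graph, config: Config) -> float:
    bonus = 0.1 if "extract_entities" in graph else 0.0
    return 0.6 + bonus - abs(config["temperature"] - 0.2)


def toy_configs(config: Config):
    return [{**config, "temperature": t} for t in (0.0, 0.2, 0.7)]


def toy_graphs(graph: Graph):
    return [graph | {"extract_entities"},
            graph | {"extract_entities", "validate", "memory"}]


budget = Budget(rollouts_left=20)
final_graph, final_config, final_score = optimize(
    frozenset({"retrieve", "answer"}), {"temperature": 0.7},
    toy_score, toy_configs, toy_graphs, budget)
print(sorted(final_graph), final_config, budget.rollouts_left)
```

The three-node edit is never evaluated because it falls outside the radius, which is what keeps rollout spend predictable in this sketch.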

What the numbers say

Maestro reports sample‑efficient gains over strong baselines:

| Benchmark | Baseline (best) | Maestro (config only) | Maestro (graph + config) | Rollout economy |
|---|---|---|---|---|
| HotpotQA | GEPA ~69% | 70.33% | 72.00–72.33% | Hits 70.33% with ~240 rollouts; 72% with ~420, vs ~6.4k for GEPA |
| IFBench | GEPA+Merge 55.95% | 56.12% | 59.18% | Peaks with ~700–900 rollouts, beating 3k+ rollout baselines |

Takeaway: Graph edits create headroom that prompt tuning alone can’t unlock, and Maestro reaches it with fewer trials.

Case studies: where structure paid the bills

1) HotpotQA: insert entity extraction between hops

Adding an extract_entities step fed the second-hop query writer with concrete handles (names/options), lifting accuracy beyond prompt‑only tuning. This mirrors our own RAG build-outs: unless you surface the entity set explicitly, reformulators get vague.

Mini‑pattern: [first-hop summary] → entity list → second‑hop query generator → retrieval → final answer.
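As a rough illustration of the mini-pattern, the sketch below wires an entity list into the second-hop query. The regex extractor is only a stand-in for an LLM extraction node, and the function names, stopword list, and query format are assumptions, not details from Maestro.

```python
import re
from typing import List

# Assumption: sentence-initial function words are not useful handles.
STOPWORDS = {"The", "A", "An", "It", "This", "That"}


def extract_entities(summary: str) -> List[str]:
    """Stand-in for an LLM extraction node: collect capitalized phrases."""
    phrases = re.findall(r"[A-Z][a-z]+(?:\s[A-Z][a-z]+)*", summary)
    entities: List[str] = []
    for phrase in phrases:
        if phrase not in STOPWORDS and phrase not in entities:
            entities.append(phrase)  # keep first-seen order, drop duplicates
    return entities


def second_hop_query(question: str, entities: List[str]) -> str:
    """Attach concrete handles to the question before the second retrieval."""
    if not entities:
        return question
    handles = "; ".join(entities)
    return f"{question} ({handles})"


summary = "The film was directed by Jane Doe and shot in New Zealand."
entities = extract_entities(summary)
print(second_hop_query("Where was the director born?", entities))
```

In a real pipeline the extractor would be a model call and the query writer a prompt; the point here is only that the entity list is an explicit, inspectable value on the edge between hops.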

2) IFBench: validate → rewrite loop

A validate_constraints node checks the candidate answer against user rules and triggers one more rewrite when needed. This is the “measure twice, cut once” pattern for instruction following; cheap to add, high leverage.
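A validate → rewrite loop can be sketched in a few lines. The version below assumes rules are simple named predicates and that `rewrite` is any callable (in practice an LLM call); both are illustrative choices, and only the node name `validate_constraints` comes from the write-up above.

```python
from typing import Callable, Dict, List, Tuple

Rule = Callable[[str], bool]  # returns True when the answer satisfies the rule


def validate_constraints(answer: str, rules: Dict[str, Rule]) -> List[str]:
    """Return the names of all rules the answer violates."""
    return [name for name, ok in rules.items() if not ok(answer)]


def answer_with_validation(
    draft: str,
    rules: Dict[str, Rule],
    rewrite: Callable[[str, List[str]], str],
    max_rewrites: int = 1,
) -> Tuple[str, List[str]]:
    """Validate the draft and trigger at most `max_rewrites` rewrites."""
    answer = draft
    violations = validate_constraints(answer, rules)
    for _ in range(max_rewrites):
        if not violations:
            break
        answer = rewrite(answer, violations)
        violations = validate_constraints(answer, rules)
    return answer, violations


# Hypothetical rules and a toy rewriter standing in for an LLM call.
rules = {
    "max_10_words": lambda a: len(a.split()) <= 10,
    "ends_with_period": lambda a: a.endswith("."),
}


def toy_rewrite(answer: str, violations: List[str]) -> str:
    words = answer.rstrip(".").split()[:10]
    return " ".join(words) + "."


draft = "This draft answer is far too long and it also forgets the final punctuation mark"
final, remaining = answer_with_validation(draft, rules, toy_rewrite)
print(final, remaining)
```

Returning the remaining violations alongside the answer lets the caller decide what to do when the single rewrite still fails, rather than looping indefinitely.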

3) Domain RAG: give math to tools, not the LLM

The RAG agent grew a numeric_compute tool (mean/stdev/growth). Offloading arithmetic to it improved correctness and lowered cost. If your chatbot still does spreadsheets with chain-of-thought, you’re paying twice.
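For a sense of scale, a tool like this can be very small. The sketch below is one possible shape, not the tool from the paper: the signature is hypothetical, `stdev` is assumed to mean the sample standard deviation, and `growth` is assumed to mean relative change from the first to the last value.

```python
import statistics
from typing import Sequence


def numeric_compute(op: str, values: Sequence[float]) -> float:
    """Deterministic arithmetic the agent can call instead of reasoning it out."""
    if op == "mean":
        if not values:
            raise ValueError("mean needs at least one value")
        return float(statistics.mean(values))
    if op == "stdev":
        if len(values) < 2:
            raise ValueError("stdev needs at least two values")
        # Assumption: sample standard deviation (n - 1 in the denominator).
        return float(statistics.stdev(values))
    if op == "growth":
        if len(values) < 2 or values[0] == 0:
            raise ValueError("growth needs two values and a nonzero start")
        # Assumption: relative change from first to last value.
        return (values[-1] - values[0]) / values[0]
    raise ValueError(f"unknown op: {op}")


print(numeric_compute("mean", [1, 2, 3]))
print(numeric_compute("growth", [100, 120]))
```

Raising on bad input instead of returning a guess matters here: the agent gets an explicit error it can route on, and the tool stays easy to unit test.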

A practical lens: diagnosing structural failure modes

| Symptom in traces | Likely root cause | Low‑cost graph edit | What to watch |
|---|---|---|---|
| Repeated partial retrievals; vague second-hop queries | Missing entity grounding | Insert entity extractor feeding query reformulation | Query length vs. retrieval API limits |
| “Looks good” but violates a stated constraint | No validator in loop | Add validate → optional rewrite | Judge reliability & false OKs |
| Long dialogs forget commitments or branch coverage | No explicit state | Add state node with read/write policy | State drift; schema versioning |
| Math/aggregation errors; high tokens | LLM doing arithmetic | Add numeric compute tool | Tool latency; unit tests |
| Latency/cost spikes on easy cases | One-size-fits-all path | Conditional routing / early‑exit | Guard rails to avoid under‑thinking |
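The state row is the one teams most often leave implicit, so here is a minimal sketch of a state node with a read/write policy. The class, its fields, and the per-key allow-lists are assumptions made for illustration; the `schema_version` field is there because the table flags schema versioning as something to watch.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set


@dataclass
class StateNode:
    """Explicit shared state with per-key read and write permissions."""
    schema_version: int
    writers: Dict[str, Set[str]]  # key -> node names allowed to write it
    readers: Dict[str, Set[str]]  # key -> node names allowed to read it
    _data: Dict[str, Any] = field(default_factory=dict)

    def write(self, node: str, key: str, value: Any) -> None:
        if node not in self.writers.get(key, set()):
            raise PermissionError(f"{node} may not write {key!r}")
        self._data[key] = value

    def read(self, node: str, key: str, default: Any = None) -> Any:
        if node not in self.readers.get(key, set()):
            raise PermissionError(f"{node} may not read {key!r}")
        return self._data.get(key, default)


# Hypothetical node names for a dialog agent.
state = StateNode(
    schema_version=1,
    writers={"commitments": {"planner"}},
    readers={"commitments": {"planner", "responder"}},
)
state.write("planner", "commitments", ["send invoice by Friday"])
print(state.read("responder", "commitments"))
```

Making the policy explicit means a stray write from the wrong node fails loudly in traces instead of silently drifting the state.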

Implementation notes for builders

- Represent adapters explicitly. Edge adapters (serializers/templaters) are first‑class optimization targets; small schema fixes unblock downstream nodes.

- Use a trust region for graph edits. Constrain edits (add/rewire/remove) by an edit‑distance radius per iteration to keep search stable and budgets predictable.

- Exploit non‑numeric feedback. Treat judge rationales and failure rubrics as priors on where to mutate next. The traces are already being produced, so this sample efficiency comes at almost no extra rollout cost.

- Budget like a PM. Track separate budgets for rollout tokens vs. structural proposals; don’t starve the G‑step.

- Warm‑start configs after edits. Reuse tuned prompts and knobs where possible; only re‑tune changed neighborhoods.
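The warm-start note can be sketched as a small helper that carries tuned configs across a graph edit and flags only the changed neighborhood for re-tuning. Here "neighborhood" is assumed to mean any node touching an added or removed edge; the function name, the edge-set representation, and the default config are all hypothetical.

```python
from typing import Dict, Set, Tuple

Edge = Tuple[str, str]
NodeConfig = Dict[str, object]


def warm_start(
    old_configs: Dict[str, NodeConfig],
    old_edges: Set[Edge],
    new_edges: Set[Edge],
    default_config: NodeConfig,
) -> Tuple[Dict[str, NodeConfig], Set[str]]:
    """Reuse tuned configs after an edit and list the nodes to re-tune."""
    changed = old_edges ^ new_edges                      # added or removed edges
    touched = {node for edge in changed for node in edge}
    nodes = {node for edge in new_edges for node in edge}
    # Copy old configs where they exist; new nodes start from the default.
    configs = {n: dict(old_configs.get(n, default_config)) for n in nodes}
    retune = {n for n in nodes if n in touched or n not in old_configs}
    return configs, retune


old_edges = {("summarize", "write_query"), ("write_query", "retrieve"),
             ("retrieve", "answer")}
new_edges = {("summarize", "extract_entities"), ("extract_entities", "write_query"),
             ("write_query", "retrieve"), ("retrieve", "answer")}
old_configs = {
    "summarize": {"temperature": 0.2},
    "write_query": {"temperature": 0.3},
    "retrieve": {"top_k": 5},
    "answer": {"temperature": 0.0},
}
configs, retune = warm_start(old_configs, old_edges, new_edges, {"temperature": 0.5})
print(sorted(retune))
```

In this example only the three nodes around the inserted extractor are re-tuned, while `retrieve` and `answer` keep their tuned settings, which is where the rollout savings come from.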

Where this fits the Cognaptus stack

For clients asking why an “LLM agent” underperforms, Maestro’s philosophy aligns with how we already harden production flows:

- RAG with typed memories (vector + tabular + param stores)

- Validator stacks (regex/JSON schema + rule LLM + safety filters)

- Domain tools (calc, pricing, ledger queries) with unit tests and cost caps

The novelty is treating structure as a search dimension, not a one‑off design choice. That’s how you convert brittle demos into dependable systems.

Quick start checklist (steal this)

- Map your current pipeline as a DAG: nodes, adapters, merges, state readers/writers.

- Instrument evaluators to emit rationales, not just scores.

- Run config-only tuning (cheap wins) under a strict rollout budget.

- Introduce one structural edit from the table above; warm‑start configs.

- Adopt guarded acceptance (keep only monotone improvements) and iterate.
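For the rationale step in the checklist, one lightweight approach is to have evaluators return text alongside the score and then count which failure themes recur. The sketch below uses a hypothetical keyword-to-edit table as a crude stand-in for reflective analysis; a real system would likely have a model read the rationales.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Evaluation:
    score: float
    rationale: str  # free-text explanation from the judge


# Assumption: a hand-written mapping from rationale keywords to candidate edits.
HINTS: Dict[str, str] = {
    "entity": "insert entity extractor",
    "constraint": "add validate -> rewrite",
    "arithmetic": "add numeric compute tool",
}


def rank_edits(evals: List[Evaluation], threshold: float = 0.5) -> List[str]:
    """Rank candidate edits by how often failing rationales point to them."""
    counts: Counter = Counter()
    for ev in evals:
        if ev.score >= threshold:
            continue  # only failures carry signal about where to edit
        text = ev.rationale.lower()
        for keyword, edit in HINTS.items():
            if keyword in text:
                counts[edit] += 1
    return [edit for edit, _ in counts.most_common()]


evals = [
    Evaluation(0.0, "Second-hop query never named the entity from hop one."),
    Evaluation(0.0, "Missing entity: the query was too vague."),
    Evaluation(0.2, "Violates the word-limit constraint."),
    Evaluation(1.0, "Correct; entity grounded."),
]
print(rank_edits(evals))
```

The ranked list is only a prior on where to try the next structural edit; guarded acceptance still decides whether the edit stays.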

Bottom line: Reliability emerges when we optimize what the agent does (graph) and how it does it (config)—together, under budgets, with reflective guidance. Maestro shows the path—and the data—to make that standard practice, not art.

Cognaptus: Automate the Present, Incubate the Future