Most “AI builds the app” demos fail exactly where production begins: integration, state, and reliability. A new open-source framework from Databricks—app.build—argues the fix isn’t a smarter model but a smarter environment. The paper formalizes Environment Scaffolding (ES): a disciplined, test‑guarded sandbox that constrains agent actions, validates every step, and treats the LLM as a component—not the system. The headline result: once viability gates are passed, quality is consistently high—and you can get far with open‑weights models when the environment does the heavy lifting.

The core idea in one sentence

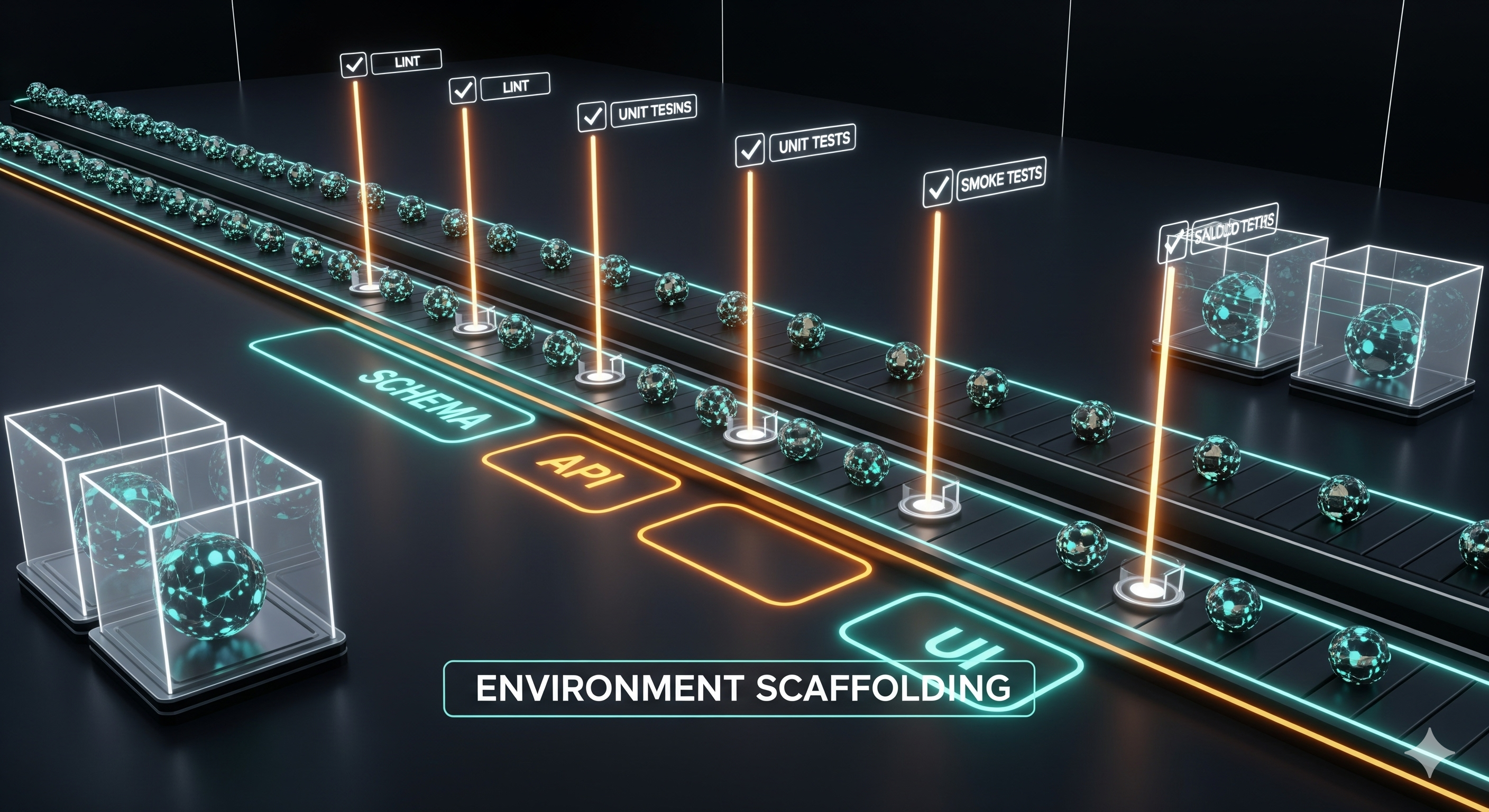

Scale environments, not just models. ES wraps generation in a finite state machine (FSM) that decomposes work (schema → API → UI), validates each stage (linters, unit/smoke tests, perf checks), and isolates execution (ephemeral containers & managed DBs). The model is interchangeable; the guard rails are not.
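The Python sketch below is a rough illustration of that FSM. It encodes the three stages plus two terminal states. The `Stage` names and the `next_state` helper are hypothetical, not app.build's actual API. A real orchestrator would also carry artifacts and validator output between states. The rule the sketch captures is simple: a stage advances only when its gate passes, and a failed gate keeps the machine in place for a repair attempt until the retry budget runs out.

```python
from enum import Enum


class Stage(Enum):
    """Hypothetical FSM states for a three-stage build (not app.build's real names)."""
    SCHEMA = "schema"
    API = "api"
    UI = "ui"
    DONE = "done"
    FAILED = "failed"


# Linear happy path: each stage hands its artifacts to the next one.
ORDER = [Stage.SCHEMA, Stage.API, Stage.UI, Stage.DONE]


def next_state(current: Stage, gate_passed: bool, retries_left: int) -> Stage:
    """Advance on a passing gate; otherwise stay put for repair or give up."""
    if current in (Stage.DONE, Stage.FAILED):
        return current  # terminal states never transition
    if gate_passed:
        return ORDER[ORDER.index(current) + 1]
    # Failed gate: remain in the same stage so a repair attempt can run,
    # unless the retry budget is exhausted.
    return current if retries_left > 0 else Stage.FAILED
```

The transition logic never looks at which model produced the artifacts. Swapping backends therefore leaves it untouched, and that is the sense in which the model is interchangeable.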

What’s actually inside “Environment Scaffolding”

- Structured decomposition: Explicit stages with clear inputs/outputs, acceptance rules, and artifacts (e.g., migrate DB, implement handlers, wire UI).

- Multi‑layered validation:

  - Boot & prompt fit (smoke): does the app start and match the task?

  - CRUD integrity: handler/unit tests to ensure data operations really work.

  - Code hygiene & typing: lint/type checks catch structural errors early.

  - Performance: normalized runtime checks.

- Runtime isolation: Every attempt runs in a sandbox (containers + ephemeral state) so repair loops are safe and reproducible.

- Model‑agnostic orchestration: Swappable backends; the workflow stays constant.
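You can picture the layered validation as a gate that runs each check in order and collects failure messages for the repair prompt. This is a minimal sketch that rests on one assumption: each validator is a plain callable returning `(passed, message)`. In practice each one would wrap your real tooling, such as a boot probe, the test runner, the type checker, or a timing script. `run_gate` and `GateReport` are illustrative names and are not part of app.build.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A validator inspects a build artifact (here just an identifier) and returns
# (passed, message). Layer names are hypothetical placeholders.
Validator = Callable[[str], Tuple[bool, str]]


@dataclass
class GateReport:
    passed: bool
    failures: List[str]  # messages to feed back into a repair prompt


def run_gate(artifact: str, layers: List[Tuple[str, Validator]],
             fail_fast: bool = True) -> GateReport:
    """Run validation layers in order; optionally stop at the first failure."""
    failures: List[str] = []
    for name, check in layers:
        ok, message = check(artifact)
        if not ok:
            failures.append(f"[{name}] {message}")
            if fail_fast:
                break  # skip slower layers once something is already broken
    return GateReport(passed=not failures, failures=failures)
```

Fail-fast keeps inner loops cheap. With it turned off, the model sees every failure at once, but slow checks also run on code that has already failed.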

Quick visual of the shift

| Dimension | Model‑Centric | Environment Scaffolding |
|---|---|---|
| Process | Big prompt (few passes) | FSM with per‑stage gates |
| Validation | Late/ad‑hoc | Integrated at every step |
| Recovery | Manual retries | Automatic repair loop using validator feedback |
| Execution | Often on host | Isolated sandboxes with cached layers |
| Model dependence | High | Low (models are interchangeable parts) |

Results that matter to operators

- Viability & Quality (TypeScript/tRPC cohort, n=30): 73% of apps were deemed viable under smoke gates; among those, mean quality was ≈ 8.8/10 and 30% received a perfect score. Translation: once an app clears the early gates, its quality tends to cluster high.

- Open vs. closed trade‑offs: Under the same scaffolding, the leading open‑weights model (Qwen3‑Coder class) reached ≈81% of a closed baseline’s success rate at roughly 1/9 of the cost. Environment design can turn cheaper models into production‑viable output for CRUD‑style apps.
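The two bullets above measure different populations, which is easy to miss. Viability is computed over the whole cohort, while mean quality is computed only over the apps that passed. The worked figures below infer the count from the reported percentage, since 22 of 30 is the only whole number that rounds to 73%. They also treat the cost figures as exact, although those are approximate:

$$
\text{viability} = \frac{\#\,\text{viable apps}}{n} \approx \frac{22}{30} \approx 0.73,
\qquad
\bar{q} = \frac{1}{\#\,\text{viable apps}} \sum_{i \in \text{viable}} q_i \approx 8.8
$$

$$
\frac{\text{success}_{\text{open}} / \text{cost}_{\text{open}}}{\text{success}_{\text{closed}} / \text{cost}_{\text{closed}}}
\approx \frac{0.81}{1/9} = 0.81 \times 9 \approx 7.3
$$

The open‑weights model delivers roughly 81% of the success for one‑ninth of the spend. That works out to about 7 times as many successful builds per dollar, provided success and cost were measured on the same task set.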

The counterintuitive ablation findings (and how to use them)

- Removing unit/handler tests increases apparent viability but degrades real functionality (CRUD regressions). Keep them.

- Linting has mixed value; some rules are too strict for scaffolded generation. Right‑size your rule set to favor correctness over style.

- Playwright E2E tests were too brittle in this context; disabling them raised both viability and average quality. Replace “all‑paths E2E” with targeted integration tests for critical user journeys.

Practical takeaway: Start with smoke + backend unit tests, add lightweight integration checks for the golden path, and apply curated linting. Save heavy E2E for pre‑release gates, not inner repair loops.
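The takeaway amounts to splitting checks into tiers. In this minimal sketch the check names are hypothetical, and you would map them to your own scripts. The inner repair loop gets only fast, high‑signal checks. The pre‑release gate adds the heavy E2E suite on top.

```python
from typing import Dict, List

# Hypothetical check names; map each one to a real script or tool in your stack.
VALIDATION_TIERS: Dict[str, List[str]] = {
    # Runs on every repair iteration: fast, high-signal, correctness-focused.
    "inner_loop": ["smoke_boot", "prompt_fit", "handler_unit_tests",
                   "curated_lint", "golden_path_integration"],
    # Runs once before release: slower and more brittle, broader coverage.
    "pre_release": ["full_e2e_playwright"],
}


def checks_for(context: str) -> List[str]:
    """Inner-loop checks always run; pre-release adds the heavy suite on top."""
    if context == "inner_loop":
        return list(VALIDATION_TIERS["inner_loop"])
    if context == "pre_release":
        return VALIDATION_TIERS["inner_loop"] + VALIDATION_TIERS["pre_release"]
    raise ValueError(f"unknown context: {context}")
```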

Why this matters beyond CRUD apps

ES is an operating model for agentic systems: plan → build → validate → repair, with strict interfaces between stages. Whether you’re wiring a tRPC stack, Laravel, or a Python/NiceGUI app, the invariant is the contract each stage must satisfy. That invariance is what lets cheaper models compete—and what makes failure modes diagnosable.
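A stage contract can be as simple as two sets: the artifacts a stage requires and the artifacts it must produce. The sketch below uses hypothetical `StageContract` and `check_pipeline` helpers with illustrative artifact names. It checks that every stage's inputs are produced upstream. A miswired pipeline is then reported by stage name, rather than surfacing later as a vague generation failure.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class StageContract:
    """Hypothetical contract: what a stage consumes and what it must emit."""
    name: str
    requires: Set[str]  # artifacts that must already exist before the stage runs
    produces: Set[str]  # artifacts the stage must emit to be accepted


def check_pipeline(stages: List[StageContract], initial: Set[str]) -> List[str]:
    """Walk the stages in order and report any input no earlier stage provides."""
    available = set(initial)
    problems: List[str] = []
    for stage in stages:
        missing = stage.requires - available
        if missing:
            problems.append(f"{stage.name} needs {sorted(missing)}")
        # Assume the stage's gate passed, so its outputs become available downstream.
        available |= stage.produces
    return problems


schema = StageContract("schema", {"prompt"}, {"db_schema", "migrations"})
api = StageContract("api", {"db_schema"}, {"handlers", "handler_tests"})
ui = StageContract("ui", {"handlers"}, {"frontend"})
```

With the stages in order, `check_pipeline([schema, api, ui], {"prompt"})` returns an empty list. Putting `api` first reports `api needs ['db_schema']`.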

Playbook: adopting ES in your org (90‑day rollout)

Phase 1: Contain & Observe (Weeks 1–3)

- Pick a single stack and define a three‑stage FSM (Schema → API → UI).

- Stand up sandboxed runners (Docker), seeded DBs, and artifact capture (logs, diffs, metrics).

- Implement smoke + handler tests as hard gates; linting with a minimal rule set.
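For the sandboxed runners, each validation attempt can run in a throwaway container. The helper below only builds a `docker run` argument list, using standard flags (`--rm`, `--network`, `--memory`, `-v`, `-w`). The image, mount path, and defaults are assumptions you should adapt. Attaching the container to a named Docker network is one way to reach a seeded database container.

```python
import shlex
from typing import List


def sandbox_cmd(image: str, workdir: str, command: str,
                network: str = "none", memory: str = "2g") -> List[str]:
    """Build a `docker run` argument list for one throwaway validation attempt."""
    return [
        "docker", "run",
        "--rm",                   # remove the container as soon as it exits
        "--network", network,     # "none" by default; pass a network name to reach a seeded DB
        "--memory", memory,       # cap resources per attempt
        "-v", f"{workdir}:/app",  # mount the generated project
        "-w", "/app",             # run the command from the project root
        image,
        *shlex.split(command),    # e.g. "npm test" -> ["npm", "test"]
    ]
```

You would then run it with `subprocess.run(cmd, capture_output=True, text=True, timeout=600)` and store stdout, stderr, and the exit code as that attempt's artifacts.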

Phase 2: Tighten the Loop (Weeks 4–8)

- Add a repair loop: on FAIL, feed error traces back to the LLM with precise diffs.

- Introduce budgeted tree search (sample k variants per stage; keep top‑1 by validator score).

- Cache environment layers and parallelize sandboxes to cut latency and cost.
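The repair loop and budgeted tree search combine naturally into one loop per stage. This sketch rests on three assumptions. First, `generate` stands in for any LLM backend. Second, `validate` returns a score in [0, 1], where 1.0 means every gate passed. Third, feedback is plain text; a unified diff from Python's `difflib` would be one way to make it more precise. Neither hook is an app.build API.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical hooks, not a real library API:
#   generate(task, feedback) -> candidate code for this stage (any LLM backend)
#   validate(candidate) -> (score in [0, 1], failure notes); 1.0 means all gates pass
Generate = Callable[[str, str], str]
Validate = Callable[[str], Tuple[float, str]]


def build_stage(task: str, generate: Generate, validate: Validate,
                k: int = 3, max_repairs: int = 2) -> Optional[str]:
    """Best-of-k sampling plus a validator-driven repair loop for one stage."""
    feedback = ""
    for _round in range(max_repairs + 1):
        # Budgeted search: sample k variants with the same prompt and feedback.
        scored: List[Tuple[float, str, str]] = []
        for _ in range(k):
            candidate = generate(task, feedback)
            score, notes = validate(candidate)
            scored.append((score, candidate, notes))
        # Keep the top-1 variant by validator score.
        best_score, best, best_notes = max(scored, key=lambda item: item[0])
        if best_score >= 1.0:
            return best
        # Repair: the best variant's failures become the next round's feedback.
        feedback = best_notes
    return None  # budget exhausted; escalate or mark the stage FAILED
```

`k` and `max_repairs` together bound the cost to at most `k * (max_repairs + 1)` generations per stage. That makes the search budget explicit and easy to tune.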

Phase 3: Scale & Generalize (Weeks 9–12)

- Roll the same FSM to a second stack; keep validators stack‑aware.

- Replace brittle E2E with golden‑path integration checks and perf smoke.

- Add observability: per‑stage pass rates, repair counts, cost/time budgets, and a “definition of done.”
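For observability, per‑stage pass rates and repair counts fall out of a simple attempt log. In this minimal sketch, each attempt is recorded as a hypothetical `(build_id, stage, passed)` tuple. Cost and time budgets would be extra fields, aggregated the same way.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def stage_stats(log: Iterable[Tuple[str, str, bool]]) -> Dict[str, Dict[str, float]]:
    """Aggregate (build_id, stage, passed) records into per-stage metrics."""
    attempts: Dict[str, int] = defaultdict(int)
    passes: Dict[str, int] = defaultdict(int)
    builds: Dict[str, Set[str]] = defaultdict(set)
    for build_id, stage, passed in log:
        attempts[stage] += 1
        passes[stage] += int(passed)
        builds[stage].add(build_id)
    return {
        stage: {
            "attempts": attempts[stage],
            "pass_rate": passes[stage] / attempts[stage],
            # Every attempt after a build's first try at this stage is a repair.
            "repairs": attempts[stage] - len(builds[stage]),
        }
        for stage in attempts
    }
```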

For Cognaptus clients: where ES pays off

- Internal tools & CRUD portals: Highest ROI; ES fits the dominant failure modes (schema, handlers, UI wiring).

- Cost management: Run open‑weights models where ES coverage is strong; reserve closed models for fuzzy tasks (ambiguous UX copy, complex business rules).

- Governance: ES artifacts (tests, logs, diffs) are auditable—crucial for regulated teams.
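The cost‑management rule can be written down as a routing policy. This is a sketch only: the backend identifiers, the task kinds, and the `has_validators` flag are all hypothetical. Which kinds count as fuzzy is a judgment call for your own portfolio.

```python
from dataclasses import dataclass

# Hypothetical backend identifiers; substitute whatever your model gateway exposes.
OPEN_WEIGHTS = "open-weights-coder"
CLOSED = "closed-frontier"

# Task kinds treated as fuzzy (an assumption to tune per portfolio).
FUZZY_KINDS = {"ux_copy", "business_rules"}


@dataclass
class Task:
    kind: str             # e.g. "schema", "handlers", "ui_wiring", "ux_copy"
    has_validators: bool  # do automated ES gates cover this output?


def pick_backend(task: Task) -> str:
    """Default to open weights where ES gates can catch mistakes."""
    if task.kind in FUZZY_KINDS or not task.has_validators:
        return CLOSED
    return OPEN_WEIGHTS
```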

A simple readiness checklist

- FSM defined with stage contracts and artifacts

- Smoke (boot + prompt fit) and handler/unit tests in CI

- Dockerized, ephemeral runners with seeded DB

- Minimal lint rules tuned to catch correctness issues

- Golden‑path integration checks for your core user flow

- Repair loop wired to validator output (diffs, traces)

- Dashboards for per‑stage pass rates, retries, and cost

Bottom line: Bigger models help—but guard rails win. If you can only invest in one thing this quarter, invest in Environment Scaffolding. It turns probabilistic generation into predictable delivery.

Cognaptus: Automate the Present, Incubate the Future