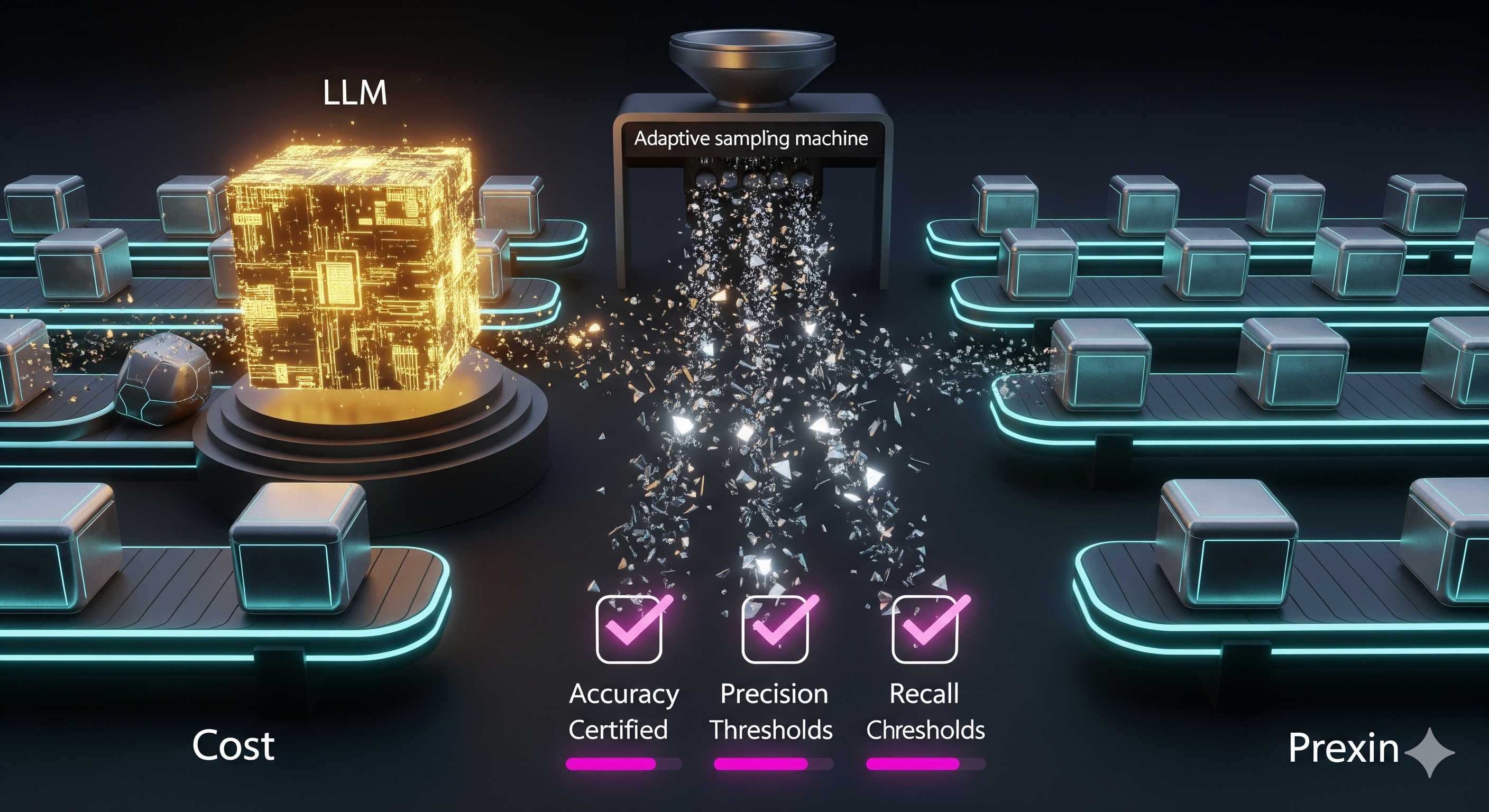

When teams push large text workloads through LLMs (contract triage, lead deduping, safety filtering), they face a brutal choice: pay for the “oracle” model (accurate but pricey) or accept quality drift with a cheaper “proxy”. Model cascades promise both—use the proxy when confident, escalate uncertain items to the oracle—but in practice they’ve been fragile. SUPG and similar heuristics often over‑ or under‑sample, rely on asymptotic CLT assumptions, and miss targets when sample sizes are small. The BARGAIN framework fixes this by combining task‑aware adaptive sampling with tighter finite‑sample tests to certify targets while maximizing utility (cost saved, recall, or precision). The authors report up to 86% more cost reduction vs. SUPG for accuracy‑target (AT) workloads, and similarly large gains for precision‑target (PT) and recall‑target (RT) settings—with rigorous guarantees.

Why cascades fail in the wild

- Wrong objective while sampling. Classic approaches sample by score quantiles, not by the business target (accuracy/precision/recall), so they waste budget where it doesn’t help threshold decisions.

- Asymptotics without safety belts. CLT‑based SUPG “works in the limit”, but thresholding decisions are made from small pilot samples.

- Variance blindness. Hoeffding‑style checks only look at the mean; they don’t get easier when the class is nearly pure (e.g., precision ~1.0 in the high‑score tail), so you leave value on the table.
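To make the variance point concrete, here is a minimal sketch comparing a Hoeffding lower bound with a variance-aware one. It uses the Maurer-Pontil empirical Bernstein bound as a simple stand-in, not the Waudby-Smith & Ramdas tests that BARGAIN relies on; the function names and both toy samples are hypothetical.

```python
import math


def hoeffding_lower(labels, delta):
    """One-sided Hoeffding lower bound on the mean of labels in [0, 1]."""
    n = len(labels)
    mean = sum(labels) / n
    return mean - math.sqrt(math.log(1 / delta) / (2 * n))


def empirical_bernstein_lower(labels, delta):
    """Maurer-Pontil empirical Bernstein lower bound (uses the sample variance)."""
    n = len(labels)
    mean = sum(labels) / n
    var = sum((x - mean) ** 2 for x in labels) / (n - 1)  # unbiased sample variance
    log_term = math.log(2 / delta)
    return mean - math.sqrt(2 * var * log_term / n) - 7 * log_term / (3 * (n - 1))


# Hypothetical pilot sample from a clean high-score tail: 199 confirmed positives, 1 miss.
clean_tail = [1] * 199 + [0]
# Hypothetical noisy sample of the same size: 120 positives, 80 misses.
noisy = [1] * 120 + [0] * 80

for name, sample in [("clean tail", clean_tail), ("noisy", noisy)]:
    h = hoeffding_lower(sample, delta=0.1)
    eb = empirical_bernstein_lower(sample, delta=0.1)
    print(f"{name}: Hoeffding >= {h:.3f}, empirical Bernstein >= {eb:.3f}")
```

On the clean tail the variance-aware bound certifies roughly 0.95 where Hoeffding only certifies roughly 0.92; on the noisy sample the ordering flips. That is the sense in which a mean-only check leaves value on the table exactly where the data is nearly pure.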

What BARGAIN changes

1) Hypothesis tests that get tighter when the tail is clean. BARGAIN swaps Hoeffding/CLT for modern finite‑sample tests (Waudby‑Smith & Ramdas) that use cumulative variance, making the certification bar easier to clear where observed labels are low‑variance (e.g., top‑score bucket is almost all positives). Result: fewer false rejections of good thresholds → more proxy usage (cost saved) at certified quality.

2) Adaptive, target‑aware sampling. Instead of pre‑sampling a fixed set, BARGAIN walks thresholds from high to low, only sampling within the candidate’s score slice until the test declares the target met (then it moves on). If it reaches a region that truly misses the target, it keeps sampling until the budget ends—returning the best certified prior threshold. No budget wasted on irrelevant, low‑score samples.

3) Smarter selection logic. BARGAIN’s selector assumes that in real data, precision tends to be monotone as scores drop. It therefore requires zero misses above the chosen threshold (η=0), avoiding the usual union‑bound blow‑up and keeping failure probability tight while picking the highest‑recall threshold that still certifies the target.
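The three ideas above can be sketched together in a few lines. This is a simplified illustration under stated assumptions, not the authors' implementation: the sequential test is a fixed-bet wealth process (BARGAIN's tests choose bets adaptively from the running variance), samples are drawn with replacement from all records at or above the candidate threshold, and every draw is charged as one oracle call. The names `certify_precision`, `pick_threshold`, `bet`, and the toy `records` are hypothetical.

```python
import random


def certify_precision(draw_label, target, delta, budget, bet=0.5):
    """Sequentially test H0: precision <= target with a fixed-bet wealth process.

    Wealth is multiplied by 1 + bet * (label - target) after every oracle call.
    For i.i.d. draws under H0 it is a nonnegative supermartingale, so by Ville's
    inequality it reaches 1/delta with probability at most delta.
    Returns (certified, oracle_calls_used).
    """
    assert 0 < bet <= 1 / target  # keeps every wealth factor nonnegative
    wealth, used = 1.0, 0
    while used < budget:
        wealth *= 1 + bet * (draw_label() - target)
        used += 1
        if wealth >= 1 / delta:
            return True, used
    return False, used


def pick_threshold(records, thresholds, target, delta, budget, seed=0):
    """Walk candidate thresholds from high to low; return the lowest certified one.

    records: list of (proxy_score, oracle_label) pairs. Reading oracle_label
    stands in for a paid oracle call in this sketch.
    """
    rng = random.Random(seed)
    best, remaining = None, budget
    for rho in sorted(thresholds, reverse=True):
        pool = [label for score, label in records if score >= rho]
        if not pool or remaining == 0:
            break
        ok, used = certify_precision(lambda: rng.choice(pool), target, delta, remaining)
        remaining -= used
        if not ok:
            break  # budget ran out on an uncertifiable threshold: roll back
        best = rho
    return best, budget - remaining


# Toy data: two clean score bands followed by a band of pure negatives.
records = [(0.95, 1)] * 100 + [(0.85, 1)] * 100 + [(0.75, 0)] * 100
best, calls = pick_threshold(records, [0.9, 0.8, 0.7], target=0.9, delta=0.1, budget=300)
print(best, calls)
```

In this toy run the two clean bands are certified after 48 draws each, and the rest of the budget is spent at 0.7 without certifying it, so the sketch rolls back to 0.8. Because the walk stops at the first threshold it cannot certify, returning a bad threshold requires falsely certifying the first truly bad one, which is one way to see why this sketch needs no union bound across thresholds.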

Plain‑English mapping to business goals

- AT (Accuracy Target): “Match the oracle ≥T% of the time while using the oracle as little as possible.” Utility = cost saved.

- PT (Precision Target): “Keep false positives low (≥T precision) within a fixed oracle budget; maximize recall.” Utility = recall.

- RT (Recall Target): “Hit a coverage floor (≥T recall) within a fixed oracle budget; maximize precision.” Utility = precision.

Practical cue: For compliance filters and safety review queues (binary tasks), choose PT when brand risk of false positives is high; choose RT when you must not miss positives (e.g., adverse event detection). For multi‑class extraction/labeling, AT is the natural default.
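To pin down what each setting measures, here is a small sketch that computes the quantities above for one threshold. The record layout, the function name `cascade_metrics`, and the convention that records below the threshold go to the oracle in the AT setting are assumptions made for illustration.

```python
def cascade_metrics(records, rho):
    """Compute PT/RT and AT quantities for a single threshold rho.

    records: list of (proxy_score, proxy_label, oracle_label), binary labels.
    PT/RT view: every record with score >= rho is returned as a positive.
    AT view: records with score >= rho keep the proxy label, the rest go to
    the oracle (and therefore match it by definition).
    """
    n = len(records)
    kept = [r for r in records if r[0] >= rho]

    true_pos = sum(1 for _, _, oracle in kept if oracle == 1)
    all_pos = sum(1 for _, _, oracle in records if oracle == 1)
    precision = true_pos / len(kept) if kept else 1.0  # vacuous when nothing is flagged
    recall = true_pos / all_pos if all_pos else 1.0

    proxy_matches = sum(1 for _, proxy, oracle in kept if proxy == oracle)
    accuracy = (proxy_matches + (n - len(kept))) / n
    cost_saved = len(kept) / n  # share of records that never hit the oracle

    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "cost_saved": cost_saved}


# Hypothetical labeled sample: (proxy_score, proxy_label, oracle_label).
records = [(0.9, 1, 1), (0.8, 1, 1), (0.7, 1, 0),
           (0.6, 0, 0), (0.4, 0, 1), (0.2, 0, 0)]
metrics = cascade_metrics(records, rho=0.65)
print(metrics)
```

In practice the oracle labels are only known for sampled records, which is why these quantities have to be certified statistically rather than computed directly.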

A tiny, concrete example

Suppose you’re flagging contracts mentioning a specific clause:

- Proxy (cheap LLM) emits a score S(x) and a binary label.

- You want precision ≥ 90% under a 1,000‑doc oracle budget.

- BARGAIN samples only from high‑score buckets, certifies the lowest threshold whose precision test still passes, and returns all docs above that threshold as positives. If the test cannot certify some threshold, BARGAIN spends the remaining budget there and, if certification still fails, rolls back to the last certified threshold. Outcome: you ship more true clauses (higher recall) at the same budget while still certifying 90% precision.

How BARGAIN compares (at a glance)

| Method | Guarantee | Sampling | Estimation | Typical Outcome |
|---|---|---|---|---|
| Naive | Strong (but loose) | Uniform | Hoeffding | Safe but leaves a lot of recall/cost‑savings unclaimed |
| SUPG | Asymptotic only | Importance (score‑based) | CLT | Can miss targets on small samples; utility unstable |
| BARGAIN | Finite‑sample | Adaptive, target‑aware | Variance‑aware sequential tests | High recall/precision or cost‑savings at certified targets |

When it won’t save you

- Uncalibrated proxies. If high scores don’t actually mean “more correct,” any cascade has poor utility (though BARGAIN still maintains the guarantee—just at a stricter threshold with less savings).

- Vanishing positives in RT. With extremely few true positives, no method can both guarantee a high recall target and deliver high precision; BARGAIN makes this explicit and lets you relax the guarantee accordingly.

What we’d deploy at Cognaptus

- Score plumbing: Ensure proxies return usable scores (log‑probs or calibrated logits). Run a quick isotonic or temperature calibration pass if necessary.

- Guardrailed pilot: Start with a moderate target for the setting you chose (AT, PT, or RT) and δ=0.1; let BARGAIN learn your score→label landscape with adaptive sampling.

- Class‑specific thresholds (AT‑M): For multi‑class extraction, allow per‑class thresholds, since many proxies are asymmetric (better on common classes, worse on rare ones).

- Audit logs: Store per‑threshold test stats (sample counts, pass/fail, certified bounds). This is your compliance artifact when stakeholders ask “how do you know it’s ≥90%?”

- Cost dashboards: Track cost avoided vs. target tightness; the sweet spot is often T∈[0.85, 0.95] for business workloads.
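For the score plumbing step, a temperature calibration pass can be as small as the sketch below. It assumes the proxy exposes a scalar logit per record and that you hold a small oracle-labeled calibration set; the grid search, the function names, and the toy data are illustrative choices, not part of BARGAIN.

```python
import math


def nll(logits, labels, temperature):
    """Mean negative log-likelihood of binary labels under sigmoid(logit / T)."""
    total = 0.0
    for z, y in zip(logits, labels):
        p = 1 / (1 + math.exp(-z / temperature))
        p = min(max(p, 1e-12), 1 - 1e-12)  # guard against log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(logits)


def fit_temperature(logits, labels, grid=None):
    """Pick the temperature on a coarse grid that minimizes the NLL."""
    grid = grid or [0.5 + 0.1 * k for k in range(96)]  # 0.5 to 10.0
    return min(grid, key=lambda t: nll(logits, labels, t))


# Hypothetical overconfident proxy: logits of +/-4 (about 98% confidence)
# but only 6 of 8 predictions agree with the oracle.
logits = [4, 4, 4, 4, -4, -4, -4, -4]
labels = [1, 1, 1, 0, 0, 0, 0, 1]

T = fit_temperature(logits, labels)
calibrated = 1 / (1 + math.exp(-4 / T))
print(f"temperature={T:.1f}, calibrated confidence={calibrated:.2f}")
```

A fitted temperature above 1 flattens overconfident scores toward the observed 75% agreement rate. Note that a single temperature is a monotone rescaling: it makes scores more interpretable but does not change their ranking, so isotonic regression may be the better fit when the ordering itself is unreliable.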

Our read

BARGAIN is the first cascade method that feels production‑credible: it prioritizes the metric you care about, spends budget where it matters, and gives a clean paper trail for QA and risk teams. The technical novelty isn’t a new model; it’s smarter statistics and sampling—precisely what real LLM ops needed.

Cognaptus: Automate the Present, Incubate the Future.