The Short of It

LLM agents are about to become your busiest “users”—but they don’t behave like dashboards or analysts. They speculate: issuing floods of heterogeneous probes, repeating near-identical work, and constantly asking for partial answers to decide the next move. Traditional databases—built for precise, one‑off queries—will buckle. We need agent‑first data systems that treat speculation as a first‑class workload.

This piece unpacks a timely research agenda and turns it into an actionable playbook for CTOs, data platform leads, and AI product teams.

What the Paper Argues (and Why It Matters)

The core thesis: LLM agents will be the dominant data workload, and their behavior—“agentic speculation”—has four tell‑tale properties you can exploit:

- Scale: agents can issue 100s–1000s of requests per second per task.

- Heterogeneity: early steps skim schemas/stats; later steps craft partial or complete solutions; validation shows up everywhere.

- Redundancy: many plans and sub-plans overlap, which makes them ripe for sharing and caching.

- Steerability: if the system talks back (hints, costs, join suggestions), agents get to the answer with fewer steps.

Implication for builders: stop optimizing for single perfect answers. Start optimizing for fast, sufficiently‑good signals that steer the swarm.

A Mental Model: From Queries to Probes

Agents don’t just send SQL; they send probes: bundles that may include SQL plus a brief (phase, goals, accuracy needs, budgets, success criteria). The system responds with results and grounding feedback (e.g., related tables, why-not provenance, cost estimates, or “batch these next 3 steps”).
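To make the probe idea concrete, here is a minimal sketch of what a probe and its reply might look like as data structures. The paper does not prescribe a schema, so every name here (`Brief`, `Probe`, `ProbeReply`, `plan_mode`, and all fields) is a hypothetical illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    EXPLORATION = "exploration"
    SOLUTION = "solution"


@dataclass(frozen=True)
class Brief:
    """Context the agent attaches to its SQL (all field names are hypothetical)."""
    phase: Phase
    goal: str
    target_accuracy: float = 0.9   # 1.0 would mean "exact answer required"
    budget_ms: int = 1_000         # wall-clock budget for this probe


@dataclass(frozen=True)
class Probe:
    sql: str
    brief: Brief


@dataclass
class ProbeReply:
    rows: list
    approximate: bool
    hints: list = field(default_factory=list)   # side-channel grounding feedback


def plan_mode(probe: Probe) -> str:
    """Toy policy: exploration probes that tolerate error run approximately."""
    if probe.brief.phase is Phase.EXPLORATION and probe.brief.target_accuracy < 1.0:
        return "approximate"
    return "exact"
```

The point of the sketch is that the brief travels with the SQL, so the engine can pick an execution mode per probe instead of treating every request as an exact one-off query.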

Treat your database like a collaborator that can nudge agents away from dead ends, not a mute oracle.

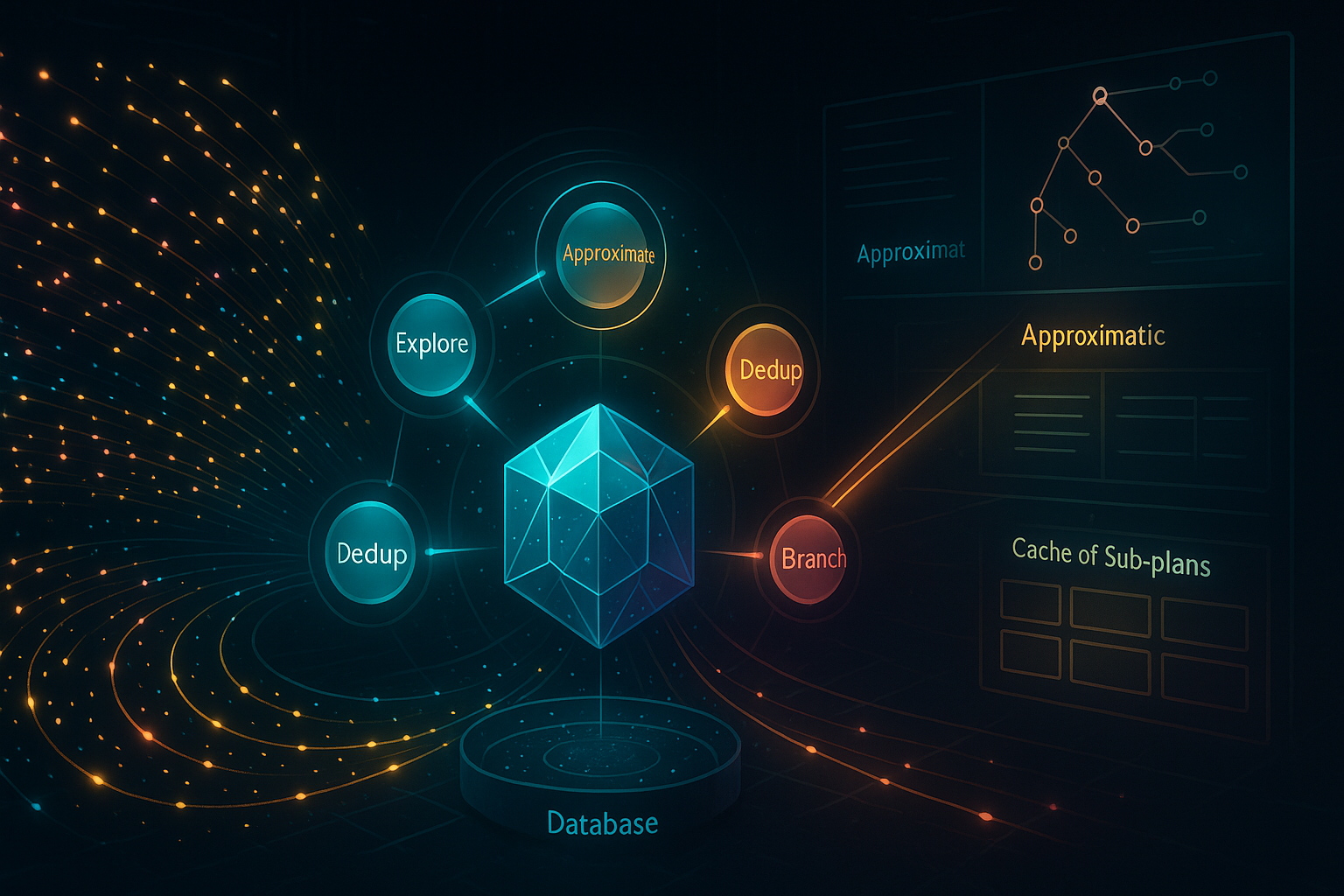

The Architecture We Should Build

Below is the paper’s architectural thrust, translated for a practical roadmap.

1) Probe Interface (beyond SQL)

- Brief-aware execution: understand phase (exploration vs. solution), target accuracy, and early‑stop criteria.

- Semantic operators: native ops to find tables/columns/rows by meaning (not just `LIKE`).

- Side-channel replies: surface hints (alternative tables, join keys), costs, and reuse opportunities.

2) Probe Optimizer (satisficing over maximizing)

- Intra-probe: prune irrelevant columns/queries; share sub-plans; return coarse first, precise later.

- Inter-probe: cache & materialize across turns and agents; pick one query to run exactly now to reduce five follow‑ups.

- Decision policy: optimize for time-to-next-decision, not time-to-full-answer.
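One way to picture "coarse first, precise later" is a progressive aggregate that stops as soon as its estimate is tight enough to steer the agent. The sketch below is not the paper's optimizer; it is a plain running-mean scan with an early stop, and it assumes rows arrive in random order so that each prefix is a fair sample. The function name and parameters are hypothetical.

```python
import math


def progressive_mean(values, chunk_size=1_000, rel_tolerance=0.05, z=1.96):
    """Scan `values` chunk by chunk and stop once the ~95% confidence
    half-width falls below rel_tolerance * |mean|.

    Assumes rows arrive in random order, so each prefix is a fair sample.
    Returns (estimate, rows_scanned, exact).
    """
    n, mean, m2 = 0, 0.0, 0.0          # Welford running moments
    total = len(values)
    for start in range(0, total, chunk_size):
        for x in values[start:start + chunk_size]:
            n += 1
            delta = x - mean
            mean += delta / n
            m2 += delta * (x - mean)
        if n < 2 or n == total:
            continue                    # nothing to estimate, or scan is complete
        half_width = z * math.sqrt(m2 / (n - 1) / n)
        if half_width <= rel_tolerance * abs(mean):
            return mean, n, False       # good enough to steer the next step
    return mean, n, True                # scanned everything: exact answer
```

An exploration-phase probe would call this with a loose `rel_tolerance`; a solution-phase probe would set it to zero, which forces the full scan and an exact result.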

3) Agentic Memory Store (the semantic “pseudo-index”)

- Persist learned grounding: encodings, missing value patterns, time/location granularities, prior probe results, “what worked before.”

- Queryable by agents and sleeper agents (in-database helpers that prefetch hints, detect reuse, and warn about stale assumptions).
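A first version of such a store can be very small. The sketch below keeps per-column notes in a dictionary and tags each note with the schema version it was written under, so stale grounding can be flagged rather than silently trusted. The class and method names are hypothetical, and a real store would presumably add a vector index for semantic lookup.

```python
from dataclasses import dataclass


@dataclass
class Note:
    text: str
    schema_version: int   # version of the table schema when the note was written


class AgenticMemory:
    """Toy key-value memory for per-column grounding notes (hypothetical API)."""

    def __init__(self):
        self._notes = {}            # (table, column) -> list[Note]
        self._schema_version = {}   # table -> current schema version

    def bump_schema(self, table):
        """Call on any DDL change; older notes for the table become stale."""
        self._schema_version[table] = self._schema_version.get(table, 0) + 1

    def remember(self, table, column, text):
        version = self._schema_version.get(table, 0)
        self._notes.setdefault((table, column), []).append(Note(text, version))

    def recall(self, table, column):
        """Return (fresh, stale) note texts so callers can warn on stale ones."""
        current = self._schema_version.get(table, 0)
        fresh, stale = [], []
        for note in self._notes.get((table, column), []):
            (fresh if note.schema_version == current else stale).append(note.text)
        return fresh, stale
```

Returning stale notes separately, instead of deleting them, lets a sleeper agent decide whether to re-validate or deprecate them.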

4) Branched Transactions (“MVCC on steroids”)

- Support cheap forks and ultra‑fast rollbacks for “what‑if” updates across thousands of near-identical worlds.

- Provide multi‑world isolation: logical separation with physical sharing.
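"Logical separation with physical sharing" can be illustrated with a copy-on-write overlay: each branch stores only its own changes and reads everything else from its parent. This is a toy in-memory sketch with hypothetical names, not a transaction manager, and it assumes a parent is treated as read-only once it has been forked.

```python
class Branch:
    """A what-if world: reads fall through to the parent, writes stay local."""

    _DELETED = object()   # tombstone marking keys deleted in this branch

    def __init__(self, parent=None):
        self._parent = parent
        self._delta = {}      # only the keys this branch changed

    def fork(self):
        """O(1) fork: no data is copied, the child just points at its parent."""
        return Branch(parent=self)

    def get(self, key, default=None):
        node = self
        while node is not None:
            if key in node._delta:
                value = node._delta[key]
                return default if value is Branch._DELETED else value
            node = node._parent
        return default

    def put(self, key, value):
        self._delta[key] = value

    def delete(self, key):
        self._delta[key] = Branch._DELETED

    def rollback(self):
        """Discard this branch's changes; cost depends only on its own delta."""
        self._delta.clear()
```

Forking is constant-time and rollback touches only the branch's own delta, which is the property that matters when thousands of near-identical worlds are created and discarded per task.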

What Changes for Your Data Platform (Concrete Moves)

| Layer | Old World (BI/Dashboards) | Agent‑First World | First Steps You Can Pilot |
|---|---|---|---|
| Interface | SQL-only; fixed SLAs | Probes with briefs; semantic search; side‑channel hints | Add a thin probe API that accepts (goals, phase, accuracy, budget); log them for policy learning |
| Optimization | Throughput & exactness | Satisficing, coarse‑to‑fine; multi‑query sharing | Turn on approximate scan + early-stop; add sub-plan cache keyed by embeddings of projected/joined fields |
| Execution | One-shot queries | Incremental & interruptible execution | Implement chunked scans with “pause/continue,” return partial stats early |
| Storage | Fixed indexes/layouts | Agentic memory store; dynamic layouts | Start with a metadata KV + vector index for per-column notes, encodings, sample value sketches |
| Transactions | Linear ACID | Branching with fast fork/rollback | Prototype copy-on-write branching tablespaces for sandboxed what‑ifs |
| Governance | Static ACLs | Cross‑agent reuse vs. privacy trade‑offs | Add reuse policies: “share sub-plan X if redacted,” log provenance for audit |

Patterns You Can Use This Quarter

- Coarse‑First Scouting

  - For exploration-phase probes, return `(row count, distinct values, null rates, 1% sampled histograms)` per touched column, not full rows.

  - Expose a `STOP_IF(similarity > τ)` hook so the system can auto‑abort near-duplicate scans.

- Sub‑Plan Dedup Cache

  - Hash or embed sub-plans (e.g., `Scan[table, projected_cols]`, `HashJoin[left, right, on]`) and memoize results.

  - Evict by information gain (Δ in downstream decisions), not just LRU.

- Hinting Sleeper Agent

  - On every probe, asynchronously compute: (i) related join candidates, (ii) likely missing filters, (iii) why‑not explanations for empty results, (iv) cost warnings with cheaper alternates.

  - Feed hints back on the side channel; let the calling agent update its plan.

- One‑Exact‑Now Policy

  - In multi‑turn tasks, choose one high‑impact query to compute exactly if it likely collapses the tree of next questions (e.g., the canonical join).

  - Everything else stays approximate until the agent commits.

- Sandboxed What‑If Branches

  - Introduce branch creation as a first‑class DDL (e.g., `CREATE BRANCH ... FROM snapshot`) with quotas and TTLs; optimize for rollback speed.
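Of the patterns above, the Sub‑Plan Dedup Cache is probably the most mechanical to prototype. The sketch below uses exact hashing of a canonicalized sub-plan rather than embeddings, and it omits eviction entirely; `subplan_key` and `SubPlanCache` are hypothetical names. It also assumes list-valued arguments such as projected columns are order-insensitive.

```python
import hashlib
import json


def subplan_key(op, **args):
    """Canonical hash of a sub-plan; list-valued arguments are sorted so
    that equivalent projections map to the same key."""
    canonical = {
        name: sorted(value) if isinstance(value, (list, tuple, set)) else value
        for name, value in args.items()
    }
    payload = json.dumps([op, canonical], sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


class SubPlanCache:
    def __init__(self):
        self._results = {}
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key, compute):
        """Return the memoized result, running `compute()` only on a miss."""
        if key in self._results:
            self.hits += 1
        else:
            self.misses += 1
            self._results[key] = compute()
        return self._results[key]

    @property
    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

The `hit_rate` property is a natural place to read off a redundancy hit-rate metric. Swapping the hash for an embedding lookup would catch near-duplicates as well, at the cost of having to decide how similar is similar enough.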

KPIs That Actually Reflect Agent Workloads

- Time‑to‑Next‑Decision (TTND): median seconds from probe receipt to agent issuing its next, better‑informed probe.

- Redundancy Hit‑Rate: % of sub‑plans served from the dedup cache.

- Hint Utility: share of probes whose next turn changes because of system‑generated hints.

- Branch Churn: forks/rollbacks per task; rollback latency at P95.

- Approx‑Then‑Exact Lift: reduction in total compute vs. exact‑only baselines for equal task success.
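Two of these metrics can be computed from logs you likely already have. The sketch below approximates TTND as the gap between consecutive probe arrivals within a task, which assumes each task issues its probes sequentially; the log shapes and function names are hypothetical.

```python
from statistics import median


def ttnd_seconds(events):
    """Median gap between the system receiving a probe and the same task
    issuing its next probe. `events` is a list of (task_id, received_at)
    tuples with timestamps in seconds."""
    by_task = {}
    for task_id, received_at in events:
        by_task.setdefault(task_id, []).append(received_at)
    gaps = []
    for stamps in by_task.values():
        stamps.sort()
        gaps.extend(later - earlier for earlier, later in zip(stamps, stamps[1:]))
    return median(gaps) if gaps else None


def redundancy_hit_rate(subplan_log):
    """Share of sub-plans served from cache; `subplan_log` is a list of
    booleans, True meaning 'served from the dedup cache'."""
    return sum(subplan_log) / len(subplan_log) if subplan_log else 0.0
```

Note that this TTND approximation includes the agent's own thinking time, so it is most useful as a trend line before and after a platform change rather than as an absolute latency figure.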

Where This Collides with Reality (and How to Navigate)

- Privacy vs. Reuse: Agentic memory and cross‑agent sharing can leak. Apply scope‑aware caches (per‑tenant, redacted global) and strong provenance.

- Semantic Drift: Agent-written metadata goes stale on schema changes. Add staleness detectors and automatic deprecation of conflicting notes.

- Cost Explosions: Swarms can DDoS your warehouse. Enforce phase‑aware admission control and budgeted probes per task.
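Phase-aware admission control can start as a simple per-task budget in which exploration probes are cheap and solution probes are expensive. The cost weights and the `ProbeBudget` name below are illustrative assumptions, not values from the paper.

```python
class ProbeBudget:
    """Per-task budget where exploration probes are cheap and solution
    probes are expensive (the cost weights are illustrative assumptions)."""

    PHASE_COST = {"exploration": 1, "solution": 10}

    def __init__(self, units_per_task=100):
        self._units_per_task = units_per_task
        self._spent = {}   # task_id -> units consumed so far

    def admit(self, task_id, phase):
        """Return True and charge the task if it can afford the probe."""
        cost = self.PHASE_COST[phase]
        spent = self._spent.get(task_id, 0)
        if spent + cost > self._units_per_task:
            return False    # caller should reject or queue the probe
        self._spent[task_id] = spent + cost
        return True

    def remaining(self, task_id):
        return self._units_per_task - self._spent.get(task_id, 0)
```

A rejected probe does not have to be a hard failure: the side channel could tell the agent how much budget is left so it can switch to cheaper exploration probes.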

Strategic Take: Data Systems Become Co‑Pilots

The step-change isn’t AQP or MPP—those are ingredients. The shift is dialogue: databases that negotiate partial results, costs, and next steps with agent swarms. Teams that implement this co‑pilot posture will ship agentic apps that feel snappy, cheap, and reliable while competitors burn cycles chasing perfect answers.

Leadership ask for this week: pilot a probe API, add a sub‑plan dedup cache, and instrument TTND. Even a thin version of each should show how much redundant work your warehouse is absorbing today.

Cognaptus: Automate the Present, Incubate the Future