TL;DR

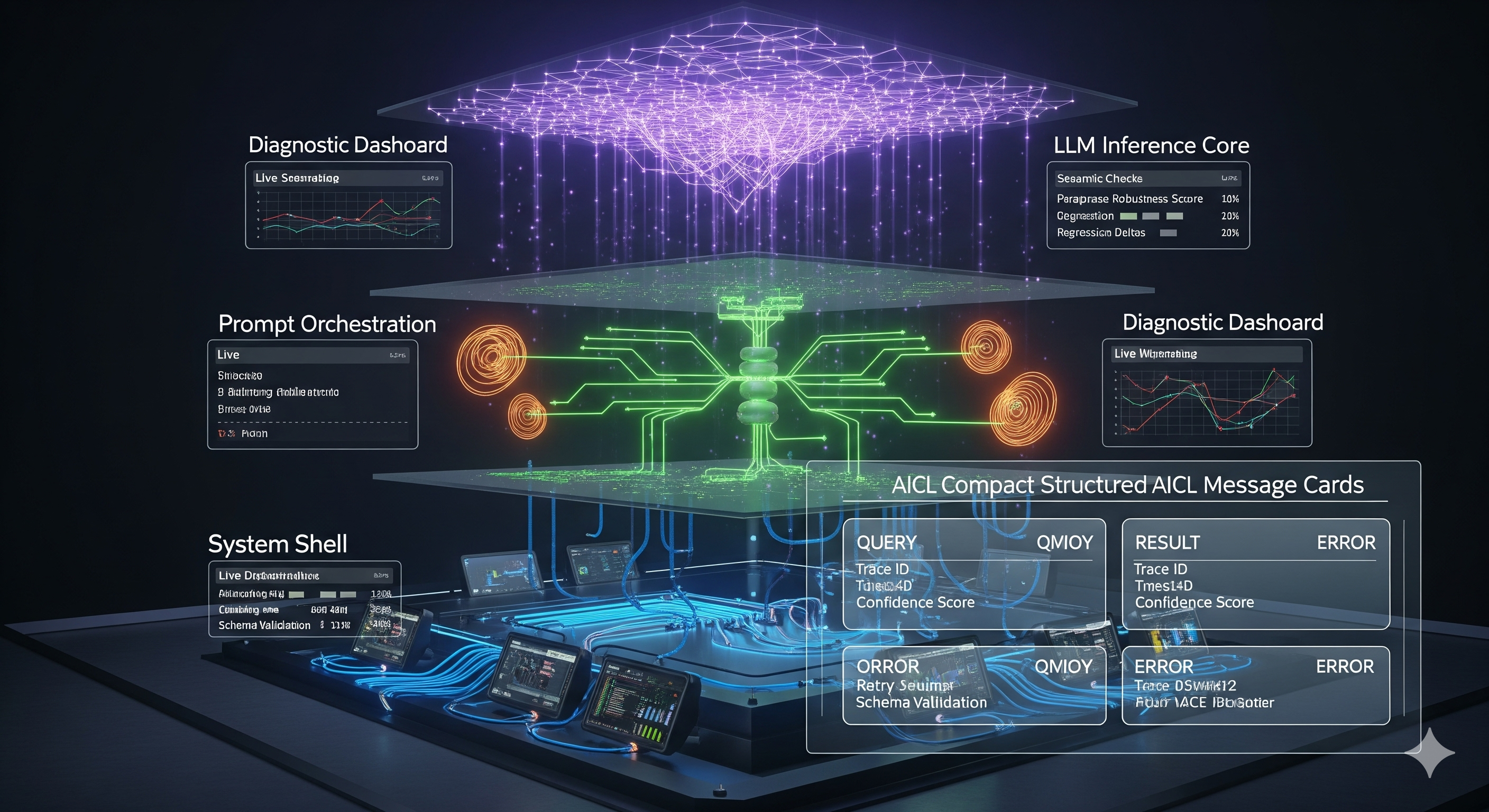

Traditional QA treats software as deterministic; LLM apps aren’t. This paper proposes a three‑layer view (System Shell → Prompt Orchestration → LLM Inference) and argues for a collaborative testing strategy: retain classical testing where it still fits, translate assertions into semantic checks, integrate AI‑safety style probes, and extend QA into runtime. The kicker is AICL, a compact agent‑interaction protocol that bakes in observability, context isolation, and deterministic replay.

Why this matters for operators and product teams

LLM products now look like systems—not prompts. They combine RAG, tools, stateful multi‑turn workflows, and sometimes multi‑agent handoffs. The result is probabilistic behavior plus cross‑layer failure modes. If you keep writing boolean, exact‑match tests, you’ll ship brittle releases and discover regressions in production. The fix isn’t to abandon testing; it’s to move from asserting single outputs to observing semantic behavior distributions.

A clean mental model: the three layers

Below is the simplest model we’ve seen that stays useful in practice.

| Layer | What it includes | What to test | Practical KPIs | Typical Owner |
|---|---|---|---|---|
| System Shell | APIs, preprocessing/postprocessing, retries/timeouts, tool adapters, HIL interfaces | Unit, interface, integration, perf/load | p95 latency, non‑200 rate, tool call success, schema conformance | Platform Eng |
| Prompt Orchestration | Prompt assembly, context compression, long‑term memory, graph/agent workflow | Semantic assertions (LLM‑as‑judge), prompt‑space coverage, paraphrase robustness, state transition checks | pass@k under paraphrases, state‑leakage rate, failure path reproducibility | Agent/Apps |
| LLM Inference Core | Model + decoding + guardrails | Capability benchmarks, hallucination/harms, jailbreak resistance, drift regression | capability deltas by version, refusal quality, safety hit rate | Model/Infra |

Key shift: predictability drops as you move from Shell to Core, while testing cost and complexity rise in the same direction. So aim for maximum determinism at the Shell and reserve probabilistic evaluation for the Core.
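To make the split concrete, here is a minimal Python sketch. The schema fields, the `generate` callable, and the `judge` callable are hypothetical stand‑ins: the Shell check is an ordinary boolean assertion, while the Core check returns a pass rate over repeated samples that you compare against a threshold of your choosing.

```python
from typing import Callable

# Hypothetical response schema for a Shell-layer API: field name -> expected type.
REQUIRED_FIELDS = {"status": int, "body": dict}


def shell_schema_ok(response: dict) -> bool:
    """Deterministic check: every required field is present with the expected type."""
    return all(isinstance(response.get(k), t) for k, t in REQUIRED_FIELDS.items())


def core_pass_rate(generate: Callable[[str], str],
                   prompt: str,
                   judge: Callable[[str], bool],
                   n: int = 20) -> float:
    """Probabilistic check: sample the model n times, return the fraction the judge accepts."""
    passes = sum(judge(generate(prompt)) for _ in range(n))
    return passes / n
```

In CI, the Shell assertion should hold every time; the Core result is compared against a threshold (for example, `core_pass_rate(...) >= 0.9`) rather than expected to equal 1.0.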

The six landmines (and how to defuse them)

| Challenge | Why it breaks products | Minimal guardrail | What “good” looks like |
|---|---|---|---|
| Semantic evaluation & consistency | Open‑ended outputs blow up exact matches | Replace booleans with LLM‑as‑judge + embedding similarity + multi‑candidate voting | Stable pass@k across paraphrase suites; reviewer agreement ≥ target |
| Open input space robustness | Real users type messy, adversarial, or out‑of‑distribution prompts | Distribution‑aware test synthesis; adversarial paraphrase & mutation sets | Controlled variance in scorer metrics; no catastrophic mode collapse |
| Dynamic state & observability | KV‑cache/memory leak across turns; hard to repro | End‑to‑end trace IDs, context snapshots, conversation replay harness | One‑click repro from prod trace to offline test |
| Capability evolution & regression | Fine‑tunes help one task, degrade another | Multi‑task, multi‑version diff with early‑warning thresholds | Version gates on capability deltas; automated rollback cues |
| Security & compliance | Jailbreaks, indirect prompt injection, latent harms | Red‑team suites + locale/culture‑aware harms; supply‑chain checks | Decreasing exploit yield; documented risk posture by market |
| Multimodal/system‑level integration | Error amplification across modalities and long call chains | Cross‑modal consistency checks; semantic perf tests under depth | Budgeted latency vs depth; graceful degradation under load |
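The minimal guardrail for semantic evaluation combines embedding similarity with voting. A sketch, assuming you already have embedding vectors for the candidate output and a reference answer (from whatever embedding model you use) plus boolean verdicts from several LLM‑as‑judge calls. The 0.85 similarity threshold and the choice to require both signals are illustrative, not values from the paper.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def semantic_pass(candidate_vec: Sequence[float],
                  reference_vec: Sequence[float],
                  judge_votes: Sequence[bool],
                  sim_threshold: float = 0.85) -> bool:
    """Pass only if the output is close to the reference AND a strict majority of judges accept it."""
    similar = cosine(candidate_vec, reference_vec) >= sim_threshold
    majority = sum(judge_votes) > len(judge_votes) / 2
    return similar and majority
```

Requiring both signals is a conservative choice: similarity catches answers that drift from the reference, while the judge vote catches answers that look similar but are wrong.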

The collaboration playbook (Retain • Translate • Integrate • Runtime)

- Retain classical testing where determinism holds (Shell). Don’t rebuild what already works; double down on schema checks, retries, idempotency.

- Translate assertions into semantic verifications (Orchestration): use judges, similarity, and prompt‑space coverage (clusters over embeddings) rather than code paths.

- Integrate safety tooling and adversarial generators with SE test harnesses: run paraphrase equivalence + attack mutations as one suite.

- Runtime: extend QA into production by treating production traces as test seeds and piping them back into CI for a test–deploy–replay loop.
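Prompt‑space coverage measures which regions of real usage your tests reach, instead of which code paths they execute. A minimal sketch, assuming you already have embeddings for production prompts and test prompts, plus cluster centroids from a clustering step you ran separately (k‑means or similar). It flags clusters whose share of the test suite trails their share of real traffic by more than a hypothetical `margin`; those are the deficits to sample new tests from.

```python
import math
from collections import Counter
from typing import Sequence

Vec = Sequence[float]


def nearest(vec: Vec, centroids: Sequence[Vec]) -> int:
    """Index of the closest centroid by Euclidean distance."""
    return min(range(len(centroids)), key=lambda i: math.dist(vec, centroids[i]))


def coverage_deficits(prod: Sequence[Vec],
                      tests: Sequence[Vec],
                      centroids: Sequence[Vec],
                      margin: float = 0.05) -> list[int]:
    """Clusters whose share of test prompts trails their share of production prompts."""
    prod_counts = Counter(nearest(v, centroids) for v in prod)
    test_counts = Counter(nearest(v, centroids) for v in tests)
    deficits = []
    for i in range(len(centroids)):
        prod_share = prod_counts[i] / len(prod)
        test_share = test_counts[i] / len(tests) if tests else 0.0
        if prod_share - test_share > margin:
            deficits.append(i)
    return deficits
```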

AICL: the missing substrate for testable agents

Natural language is great for prototyping and terrible for deterministic replay. Agent Interaction Communication Language (AICL) is a compact, typed message format that adds:

- Semantic precision via message types (QUERY, RESULT, PLAN, FACT, ERROR, MEMORY.STORE/RECALL, COORD.DELEGATE, REASONING.START/STEP/COMPLETE)

- Observability & provenance (ids, timestamps, model_version, priors, ctx, and correlation via the of field)

- Replayability (canonical encoding + explicit priors)

- Evaluation hooks (confidence, reasoning trace markers, cost/latency fields)
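To show what canonical encoding buys for replay, here is a minimal Python model of an AICL message. The message types come from the list above and the field names roughly follow the text example in this article, but the dataclass itself and the JSON canonical form (sorted keys, compact separators, unset fields dropped) are our own assumptions, not AICL's specified encoding. Because the encoding is deterministic, its SHA‑256 digest can serve as a stable key for deduplication and replay.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Optional

# Message types named in the AICL description; this Python modelling is hypothetical.
MESSAGE_TYPES = {
    "QUERY", "RESULT", "PLAN", "FACT", "ERROR",
    "MEMORY.STORE", "MEMORY.RECALL", "COORD.DELEGATE",
    "REASONING.START", "REASONING.STEP", "REASONING.COMPLETE",
}


@dataclass
class AICLMessage:
    type: str
    payload: dict[str, Any]
    id: str
    ts: str                                   # ISO-8601 timestamp
    model_version: Optional[str] = None
    of: Optional[str] = None                  # id of the message this one answers
    ctx: dict[str, Any] = field(default_factory=dict)
    priors: dict[str, Any] = field(default_factory=dict)
    conf: Optional[float] = None
    latency_ms: Optional[int] = None

    def __post_init__(self) -> None:
        # Reject message types outside the typed vocabulary.
        if self.type not in MESSAGE_TYPES:
            raise ValueError(f"unknown message type: {self.type}")

    def canonical(self) -> str:
        """Deterministic encoding: sorted keys, no whitespace, None-valued fields dropped."""
        data = {k: v for k, v in asdict(self).items() if v is not None}
        return json.dumps(data, sort_keys=True, separators=(",", ":"), ensure_ascii=False)

    def digest(self) -> str:
        """SHA-256 of the canonical form, usable as a replay or dedup key."""
        return hashlib.sha256(self.canonical().encode("utf-8")).hexdigest()
```

Two messages that differ only in dictionary insertion order (for example, ctx keys listed in a different order) encode identically, which is what makes byte‑level replay comparisons meaningful.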

Minimal example (text form):

```text
[QUERY: tool:search{q:"how to file VAT in PH"}
| id:u!q42, ts:t(2025-08-31T05:00:00Z), ver:"1.2.0", cid:"sess-9a1",
ctx:[tenant:"acme", user:"ops"], model_version:"gpt-ops-202508",
priors:{jurisdiction:"PH"}]

[RESULT: {steps:["Login", "Pick period", "Upload sales"], schema:"tool:search/1"}
| id:u!r42, of:u!q42, conf:0.91, latency:812ms]
```
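Because the RESULT above carries of:u!q42, provenance can be checked mechanically. A minimal sketch, assuming a log of messages already parsed into Python dicts with type, id, and optional of keys (parsing AICL's text form is out of scope here). It returns the ids of RESULT or ERROR messages that do not reference an earlier message in the same log.

```python
from typing import Any


def dangling_replies(log: list[dict[str, Any]]) -> list[str]:
    """Ids of RESULT/ERROR messages whose `of` does not point at an earlier message."""
    seen: set[str] = set()
    dangling = []
    for msg in log:
        # A reply is dangling if its `of` is missing or refers to an unseen id.
        if msg["type"] in {"RESULT", "ERROR"} and msg.get("of") not in seen:
            dangling.append(msg["id"])
        seen.add(msg["id"])
    return dangling
```

Running this over every logged session is a cheap invariant check: an empty list means every reply can be traced back to the request that produced it.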

With AICL in place, you can:

- Snapshot conversation state (ctx, MEMORY.*) and replay failures offline;

- Run semantic diffs by version (model_version) and gate releases on capability deltas;

- Accrue a living test corpus from production (AICL logs → CI input) without guesswork.
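Gating releases on capability deltas reduces to comparing per‑task scores between two model_version runs. A minimal sketch, assuming each run yields a mapping from task name to a score in [0, 1] (for example, the judge pass rate on that task's suite); the task names and the 0.01 tolerance are hypothetical. A task missing from the candidate run is treated as a regression so that coverage cannot silently shrink.

```python
from typing import Mapping


def capability_gate(baseline: Mapping[str, float],
                    candidate: Mapping[str, float],
                    tolerance: float = 0.01) -> dict[str, float]:
    """Tasks whose score dropped by more than `tolerance`; an empty dict means the gate passes."""
    regressions: dict[str, float] = {}
    for task, base_score in baseline.items():
        if task not in candidate:
            regressions[task] = float("-inf")   # missing coverage counts as a failure
            continue
        delta = candidate[task] - base_score
        if delta < -tolerance:
            regressions[task] = delta
    return regressions
```

A deploy pipeline would block (or trigger a rollback cue) whenever the returned dict is non‑empty, and log the deltas alongside both model_version values.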

A starter checklist you can ship next week

- Traceability: Add a global trace ID and structured context snapshot to every request/response.

- Semantic test bank: Build a paraphrase set for your top 20 workflows; measure pass@k and judge agreement.

- Prompt‑space coverage: Cluster your real prompts (embeddings) and sample deficits for new tests.

- Regression gates: Define per‑task capability thresholds and block deploys on negative deltas.

- Attack hygiene: Add indirect‑injection and role‑play jailbreaks to nightly runs; fail on unsafe‑rate > target.

- Orchestration snapshots: Persist the pre‑LLM prompt, tool I/O, and post‑processor outputs for each turn to enable root‑cause analysis.

- Protocolize: Pilot AICL for one agent workflow; log QUERY/RESULT/ERROR plus MEMORY.*.
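Estimating pass@k from exactly k samples is high‑variance. A common approach, borrowed from code‑generation benchmarks, is to generate n ≥ k samples and apply the unbiased estimator pass@k = 1 − C(n−c, k) / C(n, k), where n is the number of generations for a workflow (for example, pooled across its paraphrases) and c is how many the judge accepted. The sketch assumes you already have those counts from your semantic test bank.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: probability that at least one of k samples, drawn without
    replacement from n generations of which c pass, is a passing sample."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k draw must include at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)
```

For example, with 10 generations of which 5 pass, pass@1 is 0.5; reporting pass@1 and pass@5 side by side separates "usually right" from "right if you retry".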

Where this fits with previous Cognaptus themes

- We’ve argued that agents are supply chains of prompts, tools, and state. Today’s piece makes that operational: test each link, then test the chain.

- Our earlier RAG coverage advice (embed → cluster → sample) generalizes here as prompt‑space coverage.

- The “LLM drift is product drift” idea shows up as capability regression gates.

Bottom line

Stop trying to force deterministic tests on probabilistic systems. Assert less; observe more. Use classical tests where they still shine, upgrade assertions into semantic checks where language rules, and extend QA into runtime with replayable traces. Then make all of it boringly automatable with AICL.

Cognaptus: Automate the Present, Incubate the Future.