TL;DR

Generative judges that think before they judge, and are trained with online RL using stepwise labels, beat classic discriminative process reward models (PRMs). The StepWiser approach brings three wins: (1) higher accuracy at spotting the first bad step, (2) cleaner, more reliable inference via a “chunk‑reset” search that prunes bad steps while keeping the length of accepted output similar, and (3) better data selection for fine‑tuning.

Why this matters (for builders and buyers)

Most enterprise CoT systems fail not because they can’t produce long reasoning, but because they can’t police their own steps. Traditional PRMs act like a yes/no bouncer at each step—fast, but shallow. StepWiser reframes judging as its own reasoning task: the judge writes an analysis first, then issues a verdict. That small shift has big, practical consequences:

- Transparency: The judge “shows its work,” making audits and red‑teaming far easier.

- Control at inference: If a step looks wrong, automatically reset and re‑sample that step, not the whole answer.

- Training leverage: Use the judge to score chunks and pick better samples for SFT/RSFT, compounding quality without expensive human labels.

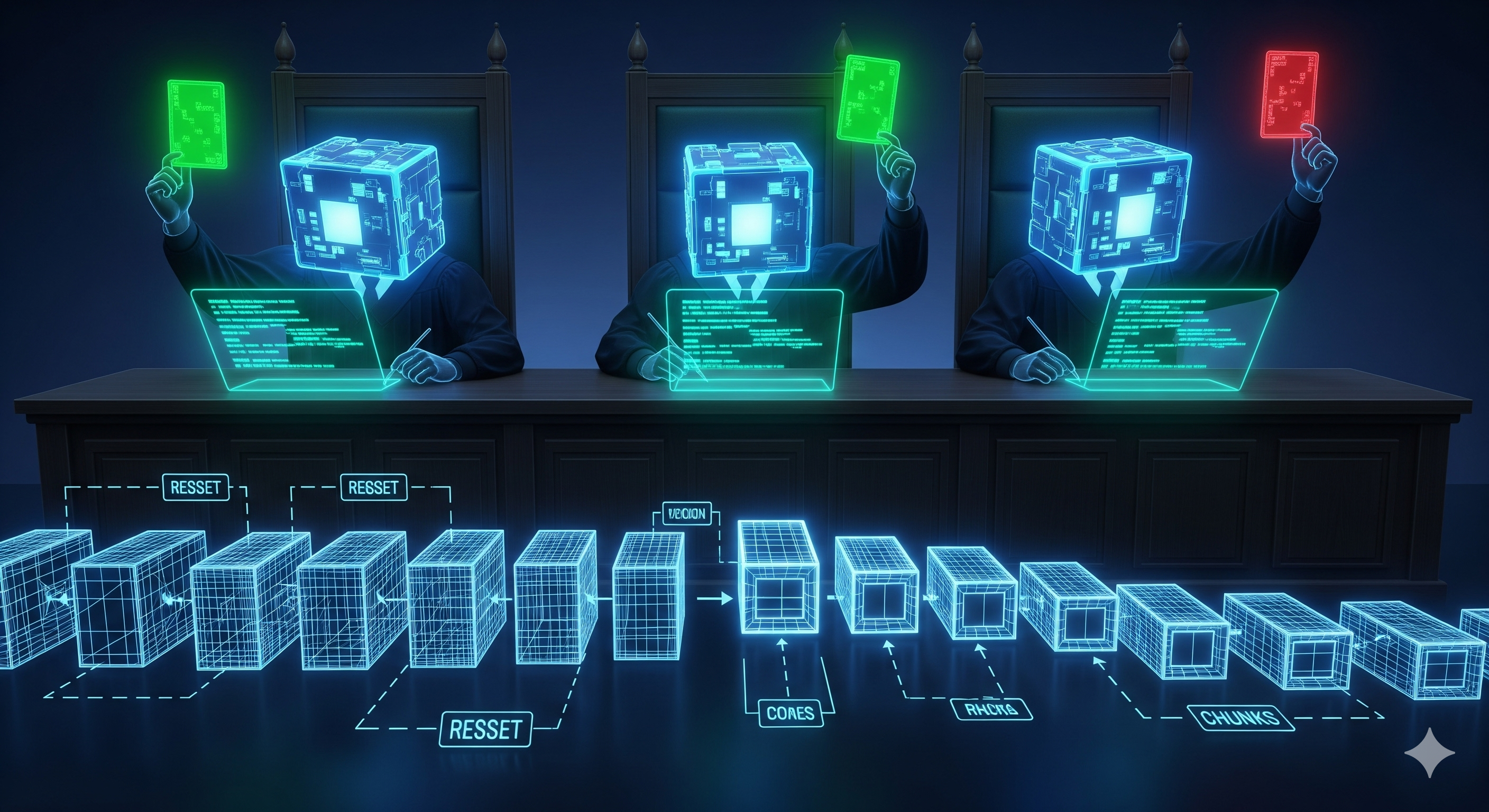

The core idea in three steps

- Self‑segment CoT into Chunks‑of‑Thought (meaningful, coherent blocks).

- After each chunk, run Monte Carlo rollouts to estimate how likely you are to succeed from here; convert those Q‑values into a binary step label (good/bad). Relative signals that reward progress (e.g., Q‑improvement) work best.

- Train a judge with RL (GRPO) to reason about the chunk and then deliver a verdict.

Effectively: meta‑reason about the model’s reasoning, step by step.
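The labeling step can be sketched in Python. This is a minimal sketch, not StepWiser's implementation: `rollout` is a hypothetical callable that samples one continuation from a prefix of chunks and reports whether the final answer is correct, and the rule names and the 0.8 ratio threshold are illustrative assumptions. The Q-value after each chunk is the fraction of successful rollouts; the "improvement" and "ratio" rules compare it with the Q-value before the chunk, which is the kind of relative signal the text recommends. The effective-reward criterion is not shown.

```python
from typing import Callable, List, Sequence

# Hypothetical interface: rollout(prefix) samples ONE continuation from the
# policy, starting after `prefix` (a list of chunks), and returns True if the
# final answer is correct. In practice this wraps your LLM plus an answer checker.
RolloutFn = Callable[[Sequence[str]], bool]


def estimate_q(prefix: Sequence[str], rollout: RolloutFn, n: int = 16) -> float:
    """Monte Carlo Q-value: fraction of n sampled continuations that succeed."""
    return sum(bool(rollout(prefix)) for _ in range(n)) / n


def step_labels(chunks: Sequence[str], rollout: RolloutFn, n: int = 16,
                rule: str = "improvement", ratio_threshold: float = 0.8) -> List[int]:
    """Label each chunk 1 (good) or 0 (bad) from Monte Carlo Q-values.

    rule="absolute":    good if Q after the chunk exceeds 0.5 (absolute threshold).
    rule="improvement": good if the chunk does not lower Q (relative signal).
    rule="ratio":       good if Q_after / Q_before >= ratio_threshold (relative signal).
    """
    labels: List[int] = []
    q_prev = estimate_q([], rollout, n)  # success rate from the bare prompt
    for t in range(1, len(chunks) + 1):
        q_t = estimate_q(chunks[:t], rollout, n)
        if rule == "absolute":
            good = q_t > 0.5
        elif rule == "improvement":
            # >= rather than >, so a chunk that keeps Q at 1.0 is not labeled bad.
            good = q_t >= q_prev
        elif rule == "ratio":
            # Epsilon avoids division by zero; if both Q-values are 0 the chunk is bad.
            good = q_t / max(q_prev, 1e-9) >= ratio_threshold
        else:
            raise ValueError(f"unknown rule: {rule}")
        labels.append(int(good))
        q_prev = q_t
    return labels
```

Note that under the improvement rule a chunk that follows an error can still be labeled good, since it does not lower an already-zero success probability; for first-error localization only the first bad label matters.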

Discriminative PRM vs. Generative (StepWiser) at a glance

| Judge type | How it works | Pros | Cons | Best use |
|---|---|---|---|---|
| Discriminative PRM | Predicts a label/score per step (no rationale) | Simple, fast | Opaque; saturates on static SFT; weaker on later steps | Low‑stakes filters, heuristics |
| Generative Judge (StepWiser) | Writes a short CoT about the step, then judges; trained with online RL on stepwise labels | Transparent; state‑of‑the‑art on step detection; stronger inference‑time control | Heavier compute at judge time; needs rollout infra for labels | Safety‑critical CoT, self‑correction loops, data curation |

What’s new under the hood

1) Self‑segmentation → fewer, better chunks. Instead of brittle rules like double newlines, a quick SFT teaches the policy to emit semantically cohesive chunks. Result: fewer steps, similar token count, better structure.

2) Step labels from outcomes, not heuristics. For each chunk, estimate a Q‑value by sampling futures from that point. Then label the chunk good or bad according to whether it raised the success probability; relative criteria such as value ratio or effective reward work better than absolute thresholds.

3) RL‑trained judge with its own CoT. The judge is optimized online so its verdicts match the step labels—after producing a rationale. Balanced prompt sets and entropy control prevent collapse.
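One way to wire the judge's RL objective is sketched below, under stated assumptions: the judge ends its analysis with a `Verdict: GOOD` or `Verdict: BAD` line (a hypothetical format), it earns reward 1.0 when that verdict matches the rollout-derived label, and advantages are normalized within each group of samples for the same chunk, as in GRPO. `balanced_batch` illustrates the balanced prompt sets by drawing equal numbers of good and bad chunks. Entropy control and the policy-gradient update itself are omitted.

```python
import random
import re
from statistics import mean, pstdev
from typing import List, Optional, Sequence, Tuple

# Hypothetical output format: free-form analysis ending in a line such as
# "Verdict: GOOD" or "Verdict: BAD". Adapt the pattern to your prompt template.
VERDICT_RE = re.compile(r"Verdict:\s*(GOOD|BAD)\s*$", re.IGNORECASE)


def parse_verdict(text: str) -> Optional[int]:
    """Return 1 for GOOD, 0 for BAD, or None if no final verdict line is found."""
    m = VERDICT_RE.search(text.strip())
    if m is None:
        return None
    return 1 if m.group(1).upper() == "GOOD" else 0


def judge_reward(text: str, label: int) -> float:
    """1.0 if the verdict matches the step label; malformed outputs earn 0."""
    return 1.0 if parse_verdict(text) == label else 0.0


def group_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: normalize each reward against its own sample group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def balanced_batch(examples: Sequence[Tuple[str, int]], k: int,
                   rng: random.Random) -> List[Tuple[str, int]]:
    """Draw up to k good and k bad (chunk, label) pairs so neither class dominates."""
    pos = [e for e in examples if e[1] == 1]
    neg = [e for e in examples if e[1] == 0]
    k = min(k, len(pos), len(neg))
    batch = rng.sample(pos, k) + rng.sample(neg, k)
    rng.shuffle(batch)
    return batch
```

When every sample in a group earns the same reward, all advantages are zero, so that chunk contributes no gradient; this is one reason unbalanced data can stall or skew training.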

Results that matter

- Error localization (ProcessBench): StepWiser’s generative‑RL judge beats SFT‑trained discriminative PRMs and even trajectory‑level RL baselines, with larger gains at 7B scale. Majority voting over several judge samples adds a modest lift.

- Inference‑time search (Chunk‑Reset): Generate a chunk, judge it, then accept it or reset that chunk (up to a few retries). Accuracy improves while the length of accepted output stays similar; the extra tokens go only to rejected chunks, which is evidence that the search prunes errors rather than rambling.

- Data selection (Stepwise RSFT): Use average per‑chunk judge scores to choose the best completions. Fine‑tunes on data picked by the generative judge outperform those picked by discriminative PRMs or outcome‑only filters.
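The error-localization score and the majority-vote lift can be made concrete with a small sketch. Here each chunk has several sampled judge verdicts (1 = good, 0 = bad), the predicted error is the first chunk whose majority verdict is bad (-1 if none), and the score is the harmonic mean of accuracy on erroneous and error-free solutions, which is how we understand ProcessBench's F1 to be computed. Counting ties as bad is our assumption.

```python
from collections import Counter
from typing import Sequence


def majority_verdict(votes: Sequence[int]) -> int:
    """Majority over sampled judge verdicts (1 = good, 0 = bad); ties count as bad."""
    c = Counter(votes)
    return 1 if c[1] > c[0] else 0


def first_error(chunk_votes: Sequence[Sequence[int]]) -> int:
    """Index of the first chunk whose majority verdict is bad, or -1 if none."""
    for i, votes in enumerate(chunk_votes):
        if majority_verdict(votes) == 0:
            return i
    return -1


def localization_f1(preds: Sequence[int], golds: Sequence[int]) -> float:
    """Harmonic mean of accuracy on erroneous (gold >= 0) and correct (gold == -1) solutions."""
    err = [p == g for p, g in zip(preds, golds) if g >= 0]
    ok = [p == g for p, g in zip(preds, golds) if g == -1]
    acc_err = sum(err) / len(err) if err else 0.0
    acc_ok = sum(ok) / len(ok) if ok else 0.0
    if acc_err + acc_ok == 0:
        return 0.0
    return 2 * acc_err * acc_ok / (acc_err + acc_ok)
```

The harmonic mean punishes a judge that simply flags everything (high accuracy on erroneous solutions, low on correct ones) or flags nothing.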

Practical playbook for Cognaptus‑style stacks

A. Upgrade your judge

- Replace stepwise classifiers with a generative judge that writes a brief rationale.

- Train (or fine‑tune) with stepwise labels from rollouts; prefer relative progress signals over absolute thresholds.

B. Add Chunk‑Reset at inference

- Wrap your policy: emit chunk → judge → accept or regenerate that chunk. Limit retries (e.g., 3–5) and cap the tokens spent on rejected chunks (“rejected length”).

- Apply to math, code planning, policy routing, and tool pipelines where early mistakes snowball.
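A minimal Chunk-Reset wrapper, assuming two hypothetical callables: `generate_chunk(prefix)` returns the policy's next chunk (or `None` when the solution is finished) and `judge(prefix, chunk)` returns the generative judge's accept/reject decision. When retries or the rejected-length budget run out, this sketch keeps the last candidate and counts it in `forced_accepts`; that fallback is one possible policy, not necessarily StepWiser's. Token counts are approximated by whitespace splitting.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence

# Hypothetical interfaces; replace with your own model calls.
#   generate_chunk(prefix) -> next chunk text, or None when the solution is finished
#   judge(prefix, chunk)   -> True to accept the chunk, False to reject it
GenerateFn = Callable[[Sequence[str]], Optional[str]]
JudgeFn = Callable[[Sequence[str], str], bool]


@dataclass
class ResetResult:
    chunks: List[str] = field(default_factory=list)  # accepted chunks, in order
    rejected_tokens: int = 0  # whitespace tokens in discarded chunks
    forced_accepts: int = 0   # chunks kept after retries or budget ran out


def chunk_reset(generate_chunk: GenerateFn, judge: JudgeFn, max_retries: int = 3,
                rejected_budget: int = 2048, max_chunks: int = 64) -> ResetResult:
    """Generate chunk by chunk; resample only the chunk the judge rejects."""
    res = ResetResult()
    while len(res.chunks) < max_chunks:
        candidate = generate_chunk(res.chunks)
        retries = 0
        forced = False
        while candidate is not None and not judge(res.chunks, candidate):
            if retries >= max_retries or res.rejected_tokens >= rejected_budget:
                forced = True  # out of retries or budget: keep this chunk anyway
                break
            res.rejected_tokens += len(candidate.split())  # discard, then resample
            retries += 1
            candidate = generate_chunk(res.chunks)
        if candidate is None:  # the policy signalled the end of the solution
            break
        res.chunks.append(candidate)
        res.forced_accepts += int(forced)
    return res
```

Because only the rejected chunk is resampled, the accepted prefix is never regenerated; extra tokens accrue only in `rejected_tokens`, which is the quantity to watch against your budget.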

C. Use the judge for data curation

- Score each chunk, average the scores per completion, and select fine‑tuning winners on step quality, not just on whether the final answer is correct.

- This is a high‑return lever when human annotation is scarce.
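A sketch of stepwise selection for one prompt, assuming the judge emits a per-chunk probability that the chunk is good. The `Completion` type and the `require_correct` filter are illustrative assumptions: keeping the outcome check and then ranking by mean chunk score is one way to select beyond "final answer correct", not a confirmed recipe.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Sequence


@dataclass
class Completion:
    text: str
    chunk_scores: List[float]  # judge's P(good) for each chunk, in [0, 1]
    final_correct: bool        # outcome check, e.g. answer matches the reference


def avg_chunk_score(c: Completion) -> float:
    """Average per-chunk judge score; completions with no chunks score 0."""
    return mean(c.chunk_scores) if c.chunk_scores else 0.0


def select_for_sft(completions: Sequence[Completion], top_k: int = 1,
                   require_correct: bool = True) -> List[Completion]:
    """Return the top_k completions for one prompt, ranked by average chunk score."""
    pool = [c for c in completions if c.final_correct or not require_correct]
    # sorted() is stable, so ties keep their original sampling order.
    return sorted(pool, key=avg_chunk_score, reverse=True)[:top_k]
```

For instance, a correct completion with one shaky middle chunk (scores 0.9, 0.2, 0.9) ranks below a correct one with uniformly solid chunks (0.8, 0.8, 0.7), because its mean is about 0.67 versus about 0.77; an outcome-only filter could not tell them apart.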

D. Governance & UX

- Log judge rationales for audit trails.

- Surface them in review UIs so analysts see why a step was rejected.
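For the audit trail, one JSON object per judge decision (the JSON Lines convention) is easy to grep, diff, and load into a review UI. The record fields below are hypothetical; adapt them to your own request IDs and verdict format.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class JudgeRecord:
    request_id: str   # hypothetical ID linking the decision to a user request
    chunk_index: int  # position of the chunk in the solution
    chunk: str        # the chunk text that was judged
    rationale: str    # the judge's written analysis
    verdict: str      # e.g. "GOOD" or "BAD"
    attempt: int      # 0 for the first sample, 1+ for Chunk-Reset resamples


def to_log_line(record: JudgeRecord, ts: Optional[float] = None) -> str:
    """Serialize one decision as a single JSON line with a Unix timestamp."""
    entry = {"ts": time.time() if ts is None else ts, **asdict(record)}
    return json.dumps(entry, ensure_ascii=False)


def log_judgement(record: JudgeRecord, path: str) -> None:
    """Append the decision to a JSON Lines audit file."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(to_log_line(record) + "\n")
```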

Limits & open questions

- Compute: Rollouts for Q‑labels aren’t free. Self‑segmentation reduces the number of steps to label, but you still need rollout infrastructure.

- Domain shift: A judge trained on math CoT labels transfers only partially; domain‑specific verification (code tests, policy checks) will be essential.

- Judge drift: Online RL can push the judge toward “over‑positive” verdicts if the training data aren’t balanced; monitor class balance and entropy.

- Beyond binary: Multi‑grade judgments (error type, severity) may unlock finer control of resets and data selection.
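The drift check can be automated on a held-out set with known step labels. This sketch assumes the judge exposes a probability that each chunk is good; the 0.1 gap and 0.05-bit thresholds are placeholders, and verdict entropy here is only a proxy, not the token-level policy entropy that RL training itself would monitor.

```python
import math
from typing import Sequence


def verdict_entropy(p_good: float) -> float:
    """Binary entropy, in bits, of one verdict probability."""
    if p_good <= 0.0 or p_good >= 1.0:
        return 0.0
    q = 1.0 - p_good
    return -(p_good * math.log2(p_good) + q * math.log2(q))


def drift_report(p_good: Sequence[float], labels: Sequence[int],
                 max_pos_gap: float = 0.1, min_entropy: float = 0.05) -> dict:
    """Compare predicted vs. labeled positive rates and flag collapsed confidence."""
    pos_rate = sum(p >= 0.5 for p in p_good) / len(p_good)
    label_rate = sum(labels) / len(labels)
    mean_entropy = sum(verdict_entropy(p) for p in p_good) / len(p_good)
    return {
        "predicted_positive_rate": pos_rate,
        "label_positive_rate": label_rate,
        "mean_entropy_bits": mean_entropy,
        "over_positive": pos_rate - label_rate > max_pos_gap,
        "entropy_collapsed": mean_entropy < min_entropy,
    }
```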

The Cognaptus take

StepWiser turns judgment into a first‑class reasoning task. For enterprise builders, the near‑term ROI is clear: fewer silent failures, cheaper iteration, and cleaner training data. If you only implement one thing next sprint, make it Chunk‑Reset with a generative judge: you’ll ship more reliable CoT while spending extra tokens only on the steps the judge rejects.

Cognaptus: Automate the Present, Incubate the Future