TL;DR

Hermes 4 is an open‑weight “hybrid reasoner” that marries huge synthetic reasoning corpora with carefully engineered post‑training and evaluation. The headline for operators isn’t just benchmark wins—it’s control: control of format, schema, and especially when the model stops thinking. That last bit matters for latency, cost, and reliability.

Why this matters for business readers

If you’re piloting agentic or “think‑step” LLMs, two pains dominate:

- Unbounded reasoning length → blow‑ups in latency and context costs.

- Messy outputs → brittle downstream integrations.

Hermes 4 addresses both with: (a) rejection‑sampled, verifier‑backed reasoning traces to raise answer quality, and (b) explicit output‑format and schema adherence training plus length‑control fine‑tuning to bound variance. The first targets answer quality; the second makes output shape and reasoning length predictable, which is what production teams need.

What’s actually new (beyond another leaderboard post)

1) DataForge → DAG‑style synthetic data at scale.

- Pretraining text is cleaned, deduped, then run through a graph of generators/judges to spin off tasks across genres (QA, coding, analysis, etc.).

- Crucially, Hermes doesn’t just keep the final Q&A—it also trains on the intermediate calls that produced it. That builds competence in writing instructions, answering, and judging, not just answering.

2) Atropos → verifiable, tool‑use‑aware trajectories.

- Thousands of task‑specific verifiers (math checkers, JSON validators, code test harnesses) supply binary, programmatic rewards during rejection sampling.

- A tool‑use environment teaches interleaving `<think>`…`</think>` reasoning with `<tool_call>`/`<tool_response>` blocks, like a mini‑agent within one turn.
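To make the verifier idea concrete, here is a minimal sketch of rejection sampling against a binary, programmatic check. It is not the Atropos implementation; `json_verifier` and `rejection_sample` are hypothetical names, and the candidates are hard-coded strings standing in for model samples.

```python
import json
from typing import Callable, Iterable

def json_verifier(required_keys: set) -> Callable[[str], bool]:
    """Build a binary verifier: True only for a JSON object containing the required keys."""
    def verify(candidate: str) -> bool:
        try:
            obj = json.loads(candidate)
        except json.JSONDecodeError:
            return False
        return isinstance(obj, dict) and required_keys.issubset(obj.keys())
    return verify

def rejection_sample(candidates: Iterable[str], verify: Callable[[str], bool]) -> list:
    """Keep only the candidates the verifier accepts; everything else is discarded."""
    return [c for c in candidates if verify(c)]

# Hard-coded stand-ins for sampled model outputs (assumption for illustration).
candidates = ['{"answer": 42}', "The answer is 42", '{"result": 42}']
kept = rejection_sample(candidates, json_verifier({"answer"}))
print(kept)  # ['{"answer": 42}']
```

The reward is pass/fail by construction, so no judge model is involved at this step.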

3) Packing + loss masking → throughput without leakage.

- Heterogeneous sample lengths are packed with a first‑fit‑decreasing heuristic into near‑perfectly filled batches; attention is restricted to tokens within the same sample.

- Only assistant tokens contribute to loss; system/user text is masked out. That means cleaner gradients and faster training.
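The packing and masking steps above can be sketched in a few lines. This is an illustrative toy under stated assumptions, not the Hermes 4 training code: the function names are hypothetical, samples are represented only by their lengths, and roles are given per token.

```python
def pack_first_fit_decreasing(lengths: list, capacity: int) -> list:
    """Pack sample indices into bins of at most `capacity` tokens (first-fit decreasing)."""
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    bins, free = [], []  # bins[b] holds sample indices, free[b] the remaining room
    for i in order:
        if lengths[i] > capacity:
            raise ValueError(f"sample {i} exceeds capacity")
        for b, room in enumerate(free):
            if lengths[i] <= room:  # first bin with enough room wins
                bins[b].append(i)
                free[b] -= lengths[i]
                break
        else:  # no existing bin fits: open a new one
            bins.append([i])
            free.append(capacity - lengths[i])
    return bins

def segment_ids(bin_indices: list, lengths: list) -> list:
    """Per-token sample id; attention is allowed only between tokens with equal ids."""
    ids = []
    for seg, i in enumerate(bin_indices):
        ids.extend([seg] * lengths[i])
    return ids

def loss_mask(roles: list) -> list:
    """1 where a token contributes to the loss (assistant), 0 for system/user tokens."""
    return [1 if r == "assistant" else 0 for r in roles]

lengths = [700, 300, 500, 500, 200, 800]
bins = pack_first_fit_decreasing(lengths, capacity=1000)
print(bins)  # [[5, 4], [0, 1], [2, 3]]
```

In this toy case every bin fills to exactly 1,000 tokens; real length distributions rarely pack that cleanly.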

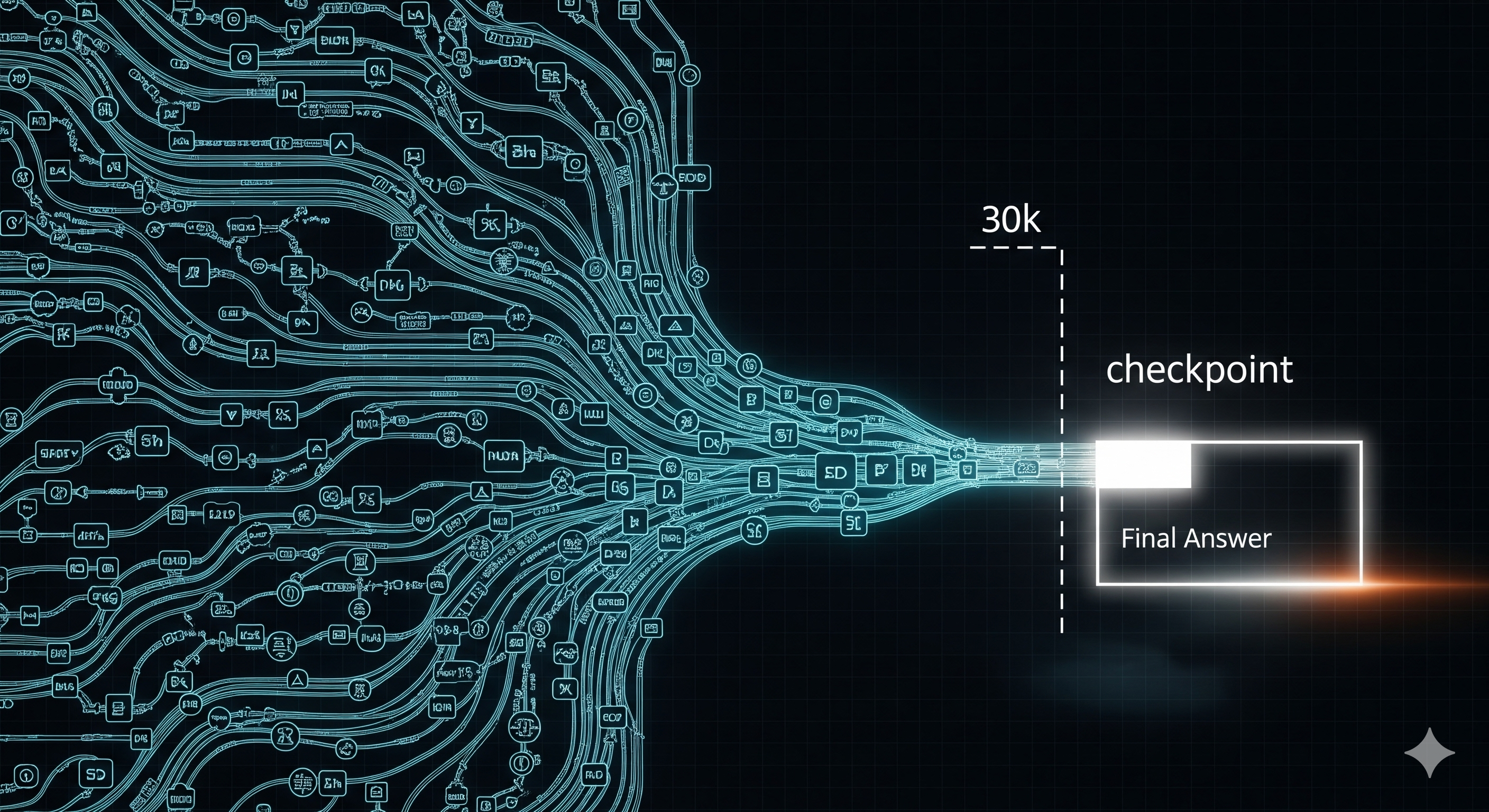

4) Length‑controlled reasoning (“Stop at 30k”).

- After the main SFT, Hermes 4 gets a second tuning stage where a closing `</think>` tag is forced at ~30k tokens. The model learns to end thinking and move to the answer, trading a small slice of peak scores for large gains in tail‑latency predictability.

Translation for operators: less risk of runaway chains of thought, more predictable SLAs.
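One way to picture the length-control stage: cut a reasoning trace at the budget, append the closing tag, and put the loss only on that tag. The sketch below assumes the thinking block closes with `</think>` and works on toy string tokens. `force_termination` is a hypothetical helper, and masking everything but the closing tag is one reading of "train the termination decision", not a confirmed detail of the Hermes 4 recipe.

```python
THINK_CLOSE = "</think>"  # assumed closing tag for the thinking block

def force_termination(think_tokens: list, budget: int) -> tuple:
    """Truncate a trace at `budget` tokens and append the closing tag.

    Returns (tokens, mask) where mask is 1 only on the closing tag, so the
    model is trained on when to stop, not on the self-generated reasoning.
    """
    kept = think_tokens[:budget]
    tokens = kept + [THINK_CLOSE]
    mask = [0] * len(kept) + [1]
    return tokens, mask

# Toy example with a budget of 4 instead of ~30k.
tokens, mask = force_termination(["step"] * 10, budget=4)
print(tokens)  # ['step', 'step', 'step', 'step', '</think>']
print(mask)    # [0, 0, 0, 0, 1]
```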

The useful bits, distilled

A. Output governance you can copy tomorrow

| Problem | Hermes‑style Tactic | What to Implement in Your Stack |
|---|---|---|
| Answers buried in prose | Answer‑format training (e.g., boxed math, XML tags) | Add strict E2E tests: reject generations that don’t produce the requested tag/format; retrain with pass/fail signals |
| Fragile integrations on JSON | Schema‑adherence training with dynamic Pydantic models | At inference, validate JSON → auto‑repair prompt → re‑try; in training, inject realistic schema errors |
| Hallucinated tools / weak tool reasoning | Interleaved tool‑use within `<think>` | Design a single‑turn “micro‑agent” template: think → call → observe → continue → answer |
| Long, costly reasoning traces | Length‑control SFT (fixed‑token `</think>`) | Add a termination token policy: contract to 5–30k thinking tokens per task class and measure latency vs. accuracy |

B. Data synthesis that doesn’t collapse on itself

| Risk | Antidote |
|---|---|
| Synthetic data feedback‑loops → model collapse | Do not train on full self‑generated reasoning; train the termination decision instead, and keep diverse seed data + independent judges |
| Over‑fitting answer models as judges | Use different models as answerer vs. judge; keep judge rubrics per task |

C. Evaluation that matches production reality

- Run one consistent inference engine across all evals to avoid “engine confounds.”

- Prefer verifiable tasks (code tests, numeric checkers) over pure LLM‑as‑judge—then add judge‑based suites (Arena‑Hard, RewardBench) for human‑like preference coverage.

- Overlap inference and verification for code tasks to keep evals compute‑bound, not queue‑bound.
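The last point, overlapping inference with verification, is easy to prototype with a thread pool. In this sketch `generate` is a hypothetical stand-in for a model call and `run_tests` executes the candidate in-process, which you should replace with a sandbox for real model output.

```python
from concurrent.futures import ThreadPoolExecutor

def generate(prompt: str) -> str:
    """Hypothetical stand-in for a model call; returns candidate code."""
    return f"def solve():\n    return {len(prompt)}\n"

def run_tests(code: str, expected: int) -> bool:
    """Run the candidate and compare its result (use a sandbox in production)."""
    namespace = {}
    exec(code, namespace)
    return namespace["solve"]() == expected

prompts = ["ab", "abcd", "abcdef"]
expected = [2, 4, 7]  # the last expectation is deliberately wrong

with ThreadPoolExecutor(max_workers=4) as pool:
    futures = []
    for prompt, want in zip(prompts, expected):
        code = generate(prompt)  # generation proceeds on the main thread...
        futures.append(pool.submit(run_tests, code, want))  # ...while earlier outputs are verified
    results = [f.result() for f in futures]

print(results)  # [True, True, False]
```

The generator never waits for a verifier to finish, so test harness latency stops gating throughput.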

How it stacks up (qualitatively)

Hermes 4’s competitive story isn’t just raw scores; it’s behavioral plasticity. With small system‑prompt or chat‑template tweaks (even changing the assistant role token), the model more readily adopts personas, reduces sycophancy, and stays “in‑character.” In practice, that means less prompt‑whack‑a‑mole when you need a consistent voice for support agents, analysts, or coders.

Deployment playbook (Cognaptus edition)

1) Segment tasks by “thinking budget.”

- Math/coding hard cases: allow ~10–30k think tokens.

- FAQ/support/CRUD: cap at ~0.5–2k; prefer structured templates.
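A budget table can start as a plain lookup. The sketch below uses the upper ends of the ranges above as caps; the task-class names, the `THINK_BUDGETS` mapping, and the default are hypothetical choices for illustration.

```python
# Hypothetical caps on thinking tokens per task class (upper ends of the ranges above).
THINK_BUDGETS = {
    "math": 30_000,
    "coding": 30_000,
    "faq": 2_000,
    "support": 2_000,
    "crud": 2_000,
}
DEFAULT_BUDGET = 2_000  # assumption: unknown task classes get the conservative cap

def think_budget(task_class: str) -> int:
    """Return the thinking-token cap for a task class."""
    return THINK_BUDGETS.get(task_class.lower(), DEFAULT_BUDGET)

def over_budget(task_class: str, think_tokens: int) -> bool:
    """True when a generation used more thinking tokens than its class allows."""
    return think_tokens > think_budget(task_class)

print(think_budget("Math"))       # 30000
print(over_budget("faq", 3_500))  # True
```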

2) Enforce formats in CI/CD for prompts.

- Every production prompt gets a canonical expected format and a test. CI fails when a prompt change breaks parsers.
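A format test of this kind can be very small. The sketch below checks for a boxed answer and for an XML-style answer tag. The `<answer>` tag name and the helper names are assumptions, and the sample strings stand in for recorded model outputs.

```python
import re
from typing import Optional

BOXED = re.compile(r"\\boxed\{([^{}]+)\}")
ANSWER_TAG = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)  # hypothetical tag name

def extract_boxed(text: str) -> Optional[str]:
    """Return the content of the first \\boxed{...}, or None if the format is missing."""
    m = BOXED.search(text)
    return m.group(1) if m else None

def extract_answer_tag(text: str) -> Optional[str]:
    """Return the stripped content of the first <answer>...</answer>, or None."""
    m = ANSWER_TAG.search(text)
    return m.group(1).strip() if m else None

def test_prompt_formats():
    """Runs under pytest or by direct call; fails when an output breaks the parser."""
    assert extract_boxed(r"So the result is \boxed{42}.") == "42"
    assert extract_boxed("The result is 42.") is None
    assert extract_answer_tag("<answer> Paris </answer>") == "Paris"

test_prompt_formats()
```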

3) Add a Schema‑Repair layer.

- If JSON fails validation, route the object back with a terse “repair‑only” instruction; limit to one repair iteration before human fallback.
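A repair layer with a single retry might look like the sketch below. It uses only the standard library rather than Pydantic to stay dependency-free. The `SCHEMA` mapping, the repair prompt wording, and the `repair` callable (your model call) are all hypothetical.

```python
import json
from typing import Callable, Optional

SCHEMA = {"order_id": int, "status": str}  # hypothetical schema: field name -> expected type

def validate(raw: str) -> Optional[dict]:
    """Return the parsed object if it matches SCHEMA, else None."""
    try:
        obj = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(obj, dict):
        return None
    ok = all(isinstance(obj.get(key), typ) for key, typ in SCHEMA.items())
    return obj if ok else None

def parse_with_repair(raw: str, repair: Callable[[str], str]) -> Optional[dict]:
    """Validate; on failure make exactly one repair attempt. None means human fallback."""
    obj = validate(raw)
    if obj is not None:
        return obj
    prompt = "Repair this JSON so it matches the schema. Return JSON only:\n" + raw
    return validate(repair(prompt))

def fake_repair(prompt: str) -> str:
    """Stand-in for a model call that returns corrected JSON."""
    return '{"order_id": 7, "status": "shipped"}'

broken = '{"order_id": 7, "status": "shipped"'  # missing closing brace
print(parse_with_repair(broken, fake_repair))  # {'order_id': 7, 'status': 'shipped'}
```

Capping repairs at one attempt keeps worst-case latency bounded at two model calls per object.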

4) Instrument termination.

- Log think‑token counts by intent and correlate with latency, CTR, and bug rate. Treat surges as regressions.
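Instrumentation can begin with an in-memory log before it graduates to your metrics stack. In this sketch the `ThinkTokenLog` class, the use of the median, and the 1.5x surge tolerance are illustrative assumptions, not recommended thresholds.

```python
from collections import defaultdict
from statistics import median

class ThinkTokenLog:
    """Collects thinking-token counts per intent."""

    def __init__(self):
        self._counts = defaultdict(list)

    def record(self, intent: str, think_tokens: int) -> None:
        self._counts[intent].append(think_tokens)

    def median(self, intent: str) -> float:
        return median(self._counts[intent])

def is_surge(baseline_median: float, current_median: float, tolerance: float = 1.5) -> bool:
    """Flag a regression when the current median exceeds baseline * tolerance."""
    return current_median > baseline_median * tolerance

log = ThinkTokenLog()
for n in (900, 1000, 1100):
    log.record("refund_request", n)

print(log.median("refund_request"))                 # 1000
print(is_surge(log.median("refund_request"), 1600)) # True
```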

5) Keep judges independent.

- Maintain a separate “judge model set” and rotate it; never let your answer model judge itself.

What we’re watching next

- Thinking‑efficiency metrics: not just pass@1 but accuracy per 1k think tokens.

- Tool‑dense trajectories: can a single turn orchestrate multiple calls without devolving into verbose scaffolding?

- Elastic inference routing: dynamic “reasoning lanes” that auto‑select short‑ or long‑think decoding paths based on early signals.
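The thinking-efficiency metric in the first bullet has no standard definition yet. One plausible reading, used in the sketch below as an assumption, is accuracy divided by the mean number of thinking tokens, expressed in thousands.

```python
def accuracy_per_1k_think_tokens(correct: list, think_tokens: list) -> float:
    """Accuracy divided by mean thinking tokens (in thousands). Assumed definition."""
    if not correct or len(correct) != len(think_tokens):
        raise ValueError("inputs must be non-empty and the same length")
    accuracy = sum(correct) / len(correct)
    mean_k_tokens = sum(think_tokens) / len(think_tokens) / 1000
    return accuracy / mean_k_tokens

# Four toy eval items: 3 of 4 correct, 4,000 thinking tokens on average.
score = accuracy_per_1k_think_tokens(
    [True, True, False, True],
    [2000, 4000, 6000, 4000],
)
print(score)  # 0.1875
```

Two models with the same pass@1 can differ widely on this number, which is the point of tracking it.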

Bottom line

Hermes 4’s real contribution is operational: it shows you can scale up reasoning and constrain it—with graph‑based synthetic data, verifier‑validated traces, and a dead‑simple but powerful stop‑thinking training step. If you’re building agentic systems, borrow these recipes now.

Cognaptus: Automate the Present, Incubate the Future