The short of it

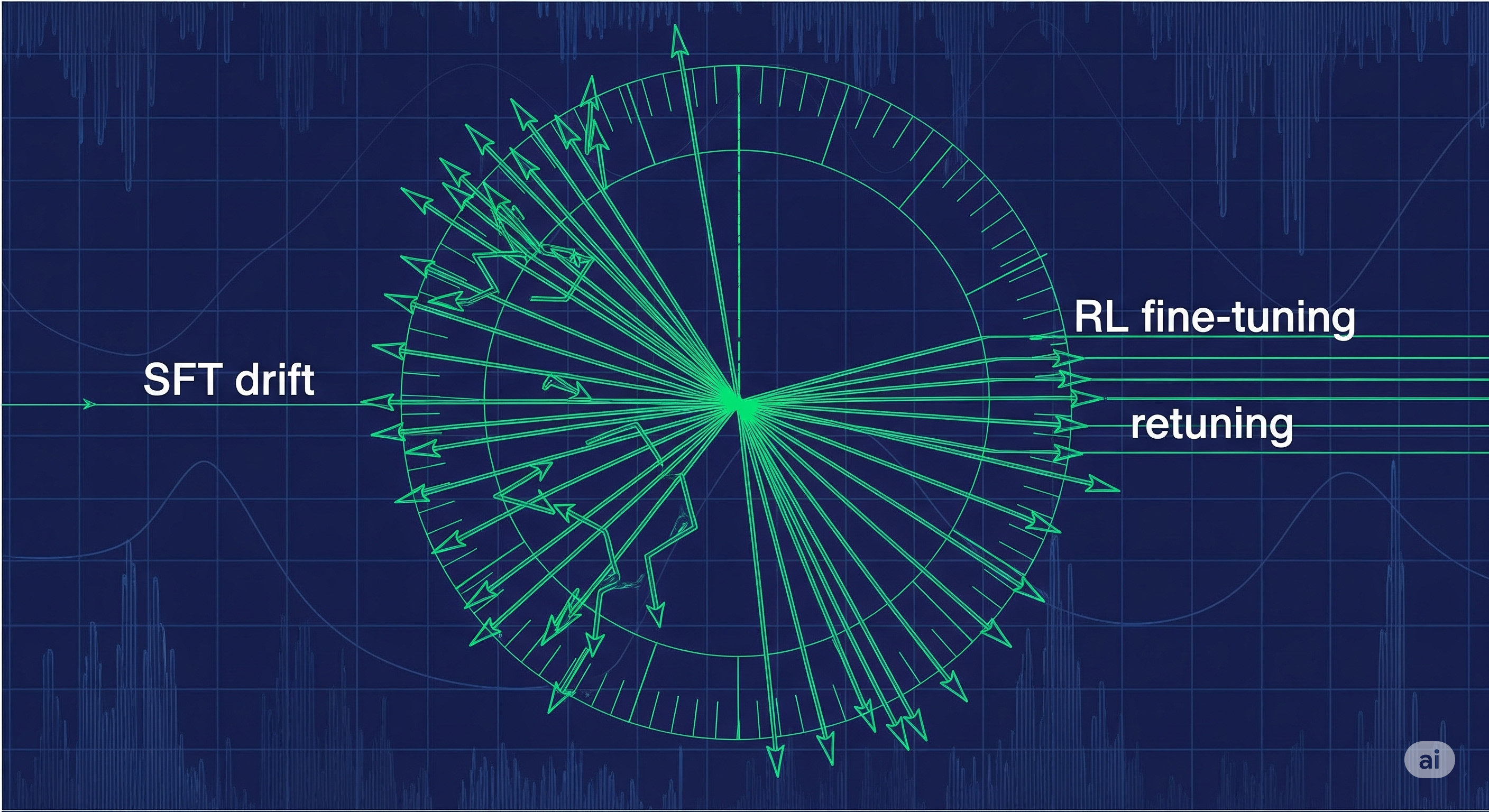

Reinforcement‑learning fine‑tuning (RL‑FT) often looks like magic: you SFT a model until it aces your dataset, panic when it forgets math or coding edge cases, then run PPO and—voilà—generalization returns. A new paper argues the mechanism isn’t mystical at all: RL‑FT mostly rotates a model’s learned directions back toward broadly useful features, rather than unlocking novel capabilities. In practical terms, cheap surgical resets (shallow layers or top‑rank components) can recover much of that OOD skill without running an expensive RL pipeline.

What the authors actually did

- Setup. Start with Llama‑3.2‑11B and Qwen‑2.5‑7B. Run standard two‑stage post‑training: SFT first, then PPO‑style RL‑FT.

- Probe. Use a controlled arithmetic‑reasoning environment (the 24‑point card game) with an OOD twist (the face cards J/Q/K no longer all count as 10) to stress‑test generalization versus memorization.

- Lens. Track how key weight matrices change using SVD—not just singular values (magnitudes) but also the singular vectors (directions). The directional story turns out to be the punchline.
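To make the magnitude-versus-direction distinction concrete, here is a minimal NumPy sketch. It is not the authors' code: the function names and the toy matrices are illustrative assumptions. It compares two weight matrices by the relative change in their singular values and by the principal angles between their top-k left singular subspaces.

```python
import numpy as np

def spectrum_shift(w_base, w_tuned):
    """Relative change in singular values (magnitudes) between two matrices."""
    s_base = np.linalg.svd(w_base, compute_uv=False)
    s_tuned = np.linalg.svd(w_tuned, compute_uv=False)
    return np.linalg.norm(s_tuned - s_base) / np.linalg.norm(s_base)

def principal_angles_deg(w_base, w_tuned, k):
    """Principal angles (degrees) between the top-k left singular subspaces."""
    u_base, _, _ = np.linalg.svd(w_base, full_matrices=False)
    u_tuned, _, _ = np.linalg.svd(w_tuned, full_matrices=False)
    # The singular values of U_base^T U_tuned are the cosines of the principal angles.
    cosines = np.linalg.svd(u_base[:, :k].T @ u_tuned[:, :k], compute_uv=False)
    return np.degrees(np.arccos(np.clip(cosines, -1.0, 1.0)))

# Toy stand-in for "fine-tuning": rotate the output space of a random matrix.
rng = np.random.default_rng(0)
w_base = rng.standard_normal((64, 32))
q, _ = np.linalg.qr(rng.standard_normal((64, 64)))  # random orthogonal matrix
w_tuned = q @ w_base

print(spectrum_shift(w_base, w_tuned))           # ~0 up to floating-point error
print(principal_angles_deg(w_base, w_tuned, 4))  # directions have moved
```

A pure rotation is the extreme case: the spectrum is untouched while the subspaces move. On real checkpoints you would pass the same layer's weight from the base and fine-tuned models instead of the toy matrices.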

Key empirical patterns (and what they mean for builders)

- SFT boosts ID, hurts OOD (gradually). OOD performance peaks early in SFT, then erodes as the model specializes. This is classic catastrophic forgetting: your task loss improves while general skills decay.

- RL‑FT restores, up to a point. RL can claw back most of the OOD ability lost to SFT, but not if SFT drove the model into a severely overfit regime. Translation: better SFT in → better RL‑FT out.

- Magnitude barely moves; directions swing wide. Across attention and MLP submodules, the singular value spectra barely budge after SFT or RL. What changes is the orientation of the principal directions, especially at the spectrum’s head and tail, indicating the model is reallocating where features live, not how strong they are.

- Cheap knobs work. Restoring only the top‑rank directions or only the shallow quarter of layers recovers a surprisingly large chunk (≈70–80%) of OOD performance. You don’t always need full‑blown RL‑FT to fix drift.

A mental model: “rotate, don’t rewrite”

Think of the network’s weight matrices as a set of acoustic guitars. SFT tightens some strings (specializes), detunes others (forgets OOD). RL doesn’t buy a new instrument; it mostly re‑tunes the strings—re‑aligning feature directions so broad skills resonate again. The guitar’s wood (intrinsic capacity, the bulk spectrum) stays the same.

Practical playbook (actionable, not aspirational)

When you see OOD regression after SFT, try these in order:

- Early‑stop by OOD proxy. Track at least one OOD‑sensitive dev probe during SFT. Stop before the bend in the OOD curve.

- Directional resets (cheap):

  - Shallow reset: Revert the first ~25% of layers to the base model.

  - Top‑rank UV merge: Replace only the top‑k singular vectors (U/V) of selected layers with the base model’s, keeping SFT’s singular values.

  - Head & tail focus: Prioritize components tied to the largest and smallest singular values; leave the mid‑spectrum alone.

- Light‑touch alignment: If you must run RL‑FT, start from a non‑overfit SFT checkpoint and use KL control to avoid drifting away from base priors.

- Data fixes > knobs: If your SFT set is too narrow or stylistically uniform, diversify before training again. Rotations can’t rescue missing knowledge.
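The UV merge and the head-and-tail variant can be sketched for a single weight matrix as follows. This is a hedged illustration, not the paper's implementation: `merge_directions` and `head_tail_indices` are hypothetical helper names, and the toy matrices stand in for one layer's base and SFT weights.

```python
import numpy as np

def head_tail_indices(rank, k):
    """Indices of the k largest and k smallest singular components."""
    k = min(k, rank // 2)
    return list(range(k)) + list(range(rank - k, rank))

def merge_directions(w_sft, w_base, idx):
    """Swap the singular vectors at positions idx for the base model's,
    keeping every singular value from the SFT matrix."""
    u_sft, s_sft, vt_sft = np.linalg.svd(w_sft, full_matrices=False)
    u_base, _, vt_base = np.linalg.svd(w_base, full_matrices=False)
    u, vt = u_sft.copy(), vt_sft.copy()
    u[:, idx] = u_base[:, idx]    # left singular vectors (U)
    vt[idx, :] = vt_base[idx, :]  # right singular vectors (V^T)
    # Caveat: the mixed U and V are no longer exactly orthonormal, so the
    # merged matrix's spectrum only approximates s_sft unless idx covers all ranks.
    return (u * s_sft) @ vt

# Toy example: SFT modeled as a small perturbation of the base weights.
rng = np.random.default_rng(0)
w_base = rng.standard_normal((16, 8))
w_sft = w_base + 0.1 * rng.standard_normal((16, 8))

top_k = merge_directions(w_sft, w_base, list(range(2)))               # top-2 merge
head_tail = merge_directions(w_sft, w_base, head_tail_indices(8, 2))  # head and tail
```

Which layers and how many ranks to merge is a tuning decision the sketch leaves open; the OOD probe is the natural way to pick them.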

Symptom → Minimal intervention → Why it helps

| Symptom you observe | Minimal intervention | Intuition |
|---|---|---|
| OOD math or logic suddenly tanks mid‑SFT | Early‑stop at the OOD peak; checkpoint there | Avoids pushing into specialized subspaces that discard generalizable directions |
| OOD loss drifted; ID is great | Shallow reset (~25% layers) | Early layers store general features; resetting re‑anchors representations |
| OOD partly recovers but stalls | Top‑rank UV merge (per layer) | Top‑rank directions (largest singular values) encode core general skills; restoring them reorients capacity |
| RL‑FT helps ID but not OOD | Increase KL weight / reduce step size | Keeps policy near base manifold; prevents harmful rotations |
| RL‑FT unstable, reward hacking | Rule‑aware rewards, verifier‑in‑loop | Rewards the right geometry; reduces drift into brittle directions |
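The shallow reset row can be sketched on plain parameter dictionaries. This is a minimal sketch under stated assumptions: parameter names are assumed to contain `layers.<index>.` (a common checkpoint naming pattern that may not match your model), `shallow_reset` is a hypothetical helper, and embeddings and other non-layer parameters are left at their SFT values.

```python
import re

# Assumption: per-layer parameters are named like "model.layers.3.mlp.up_proj.weight".
LAYER_RE = re.compile(r"\blayers\.(\d+)\.")

def shallow_reset(sft_state, base_state, num_layers, fraction=0.25):
    """Return a copy of sft_state whose first `fraction` of layers come from base_state."""
    cutoff = int(num_layers * fraction)
    merged = dict(sft_state)
    for name in sft_state:
        match = LAYER_RE.search(name)
        if match and int(match.group(1)) < cutoff:
            merged[name] = base_state[name]
    return merged

# Toy example with strings standing in for weight tensors.
names = [f"model.layers.{i}.mlp.up_proj.weight" for i in range(8)] + ["lm_head.weight"]
base_state = {n: "base" for n in names}
sft_state = {n: "sft" for n in names}

merged = shallow_reset(sft_state, base_state, num_layers=8)
print([merged[n] for n in names])
```

Because the function only touches dictionary values, the same logic should apply to real state dicts whose values are tensors, provided the key pattern matches.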

For roadmap & budgeting

- RL‑FT is a restoration tool. Plan it as a polisher, not a capability factory. Expect diminishing returns if SFT checkpoints are heavily overfit.

- Add a “directional QA” gate. Before you launch PPO, run cheap direction‑only fixes (UV merges, shallow resets). If they recover ≥70% of the lost OOD performance, reconsider whether an RL cycle is worth the cost.

- Couple data curation with geometry. Use broader SFT batches early, then narrow; monitor an OOD probe so you catch the peak and checkpoint there for RL.
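Catching the OOD peak amounts to patience-based early stopping on the probe score rather than on the training loss. A minimal sketch, assuming you log one OOD probe score per saved checkpoint (the function name, `patience`, and the example scores are illustrative, not taken from the paper):

```python
def pick_checkpoint(ood_scores, patience=2, min_delta=0.0):
    """Return (best_index, stop_index) for a sequence of OOD probe scores.

    best_index is the checkpoint with the highest score seen so far;
    stop_index is where training would halt after `patience` evaluations
    without an improvement larger than `min_delta`.
    """
    if not ood_scores:
        raise ValueError("need at least one OOD score")
    best_idx, best = 0, ood_scores[0]
    for i, score in enumerate(ood_scores):
        if score > best + min_delta:
            best_idx, best = i, score
        elif i - best_idx >= patience:
            return best_idx, i
    return best_idx, len(ood_scores) - 1

# Hypothetical probe accuracies, one per checkpoint.
scores = [0.42, 0.55, 0.61, 0.58, 0.52, 0.47]
print(pick_checkpoint(scores))  # (2, 4): keep checkpoint 2, stop at evaluation 4
```

The checkpoint at `best_index` is the one to hand to RL‑FT or to a directional reset.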

What this changes for the industry debate

We’ve seen hot takes that “RL makes models reason.” This study reframes it: RL mostly undoes SFT’s directional drift on features the base model already had. That clarifies why some RL‑first reproductions underperform (they start from the wrong geometry) and why “KL‑hugging” the base checkpoint works: you’re preserving the good directions while nudging a few to align with rewards.
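For readers who have not implemented KL control, one common formulation in PPO-style fine-tuning subtracts a per-token penalty proportional to the log-probability gap between the policy and a frozen reference model, and adds the task reward on the final token. The sketch below illustrates that general recipe, not the paper's training setup; `kl_shaped_rewards` and the default `beta` are assumptions.

```python
def kl_shaped_rewards(task_reward, logp_policy, logp_ref, beta=0.05):
    """Per-token rewards: KL-style penalty everywhere, task reward on the last token.

    logp_policy and logp_ref hold the log-probabilities that the policy and the
    frozen reference model assign to each sampled token.
    """
    if len(logp_policy) != len(logp_ref):
        raise ValueError("log-prob sequences must have equal length")
    rewards = [-beta * (lp - lr) for lp, lr in zip(logp_policy, logp_ref)]
    if rewards:
        rewards[-1] += task_reward
    return rewards

# A larger beta pulls the policy harder toward the reference model.
print(kl_shaped_rewards(1.0, [-1.0, -0.5], [-1.5, -0.5], beta=0.1))
```

Raising `beta` is the "increase KL weight" knob: tokens where the policy is more confident than the reference are taxed more heavily.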

Where I still want answers

- Why do SFT and RL share similar rotation profiles? The paper observes nearly identical principal‑angle curves across stages; a mechanistic story (optimizer gauge freedom? implicit orthogonalization?) would be gold for recipe design.

- Which modules matter most across tasks? The results use arithmetic reasoning; does code gen shift the action to feed‑forward up‑/down‑projections, or does attention dominate consistently?

- Automating the fix. A practical pipeline could: (1) measure OOD probes; (2) detect directional drift; (3) auto‑select layers/ranks for UV merging; (4) only then green‑light RL‑FT.
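The final gate in such a pipeline could look like the sketch below. It only covers the decision step: drift detection and layer or rank selection are left out, and every name here is hypothetical. "Recovery" is assumed to mean the fraction of the SFT-induced OOD drop that a cheap reset wins back, with the 70% threshold borrowed from the playbook above.

```python
def plan_fix(ood_base, ood_sft, ood_after_reset, recovery_target=0.7):
    """Decide whether a cheap directional reset is enough or RL-FT is warranted.

    Returns (decision, recovered_fraction).
    """
    lost = ood_base - ood_sft
    if lost <= 0:
        return "no_action", 1.0  # SFT did not hurt the OOD probe
    recovered = (ood_after_reset - ood_sft) / lost
    decision = "skip_rl" if recovered >= recovery_target else "run_rl"
    return decision, recovered

# Hypothetical probe accuracies: base model, SFT checkpoint, SFT after a reset.
print(plan_fix(0.60, 0.30, 0.54))  # reset recovers about 80% of the drop
print(plan_fix(0.60, 0.30, 0.40))  # reset recovers about a third of the drop
```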

Cognaptus: Automate the Present, Incubate the Future