The one-sentence take

A new live benchmark, FutureX, swaps lab-style trivia for rolling, real-world future events, forcing agentic LLMs to search, reason, and hedge under uncertainty that actually moves, and the results expose where today’s “agents” are still brittle.

Why FutureX matters now

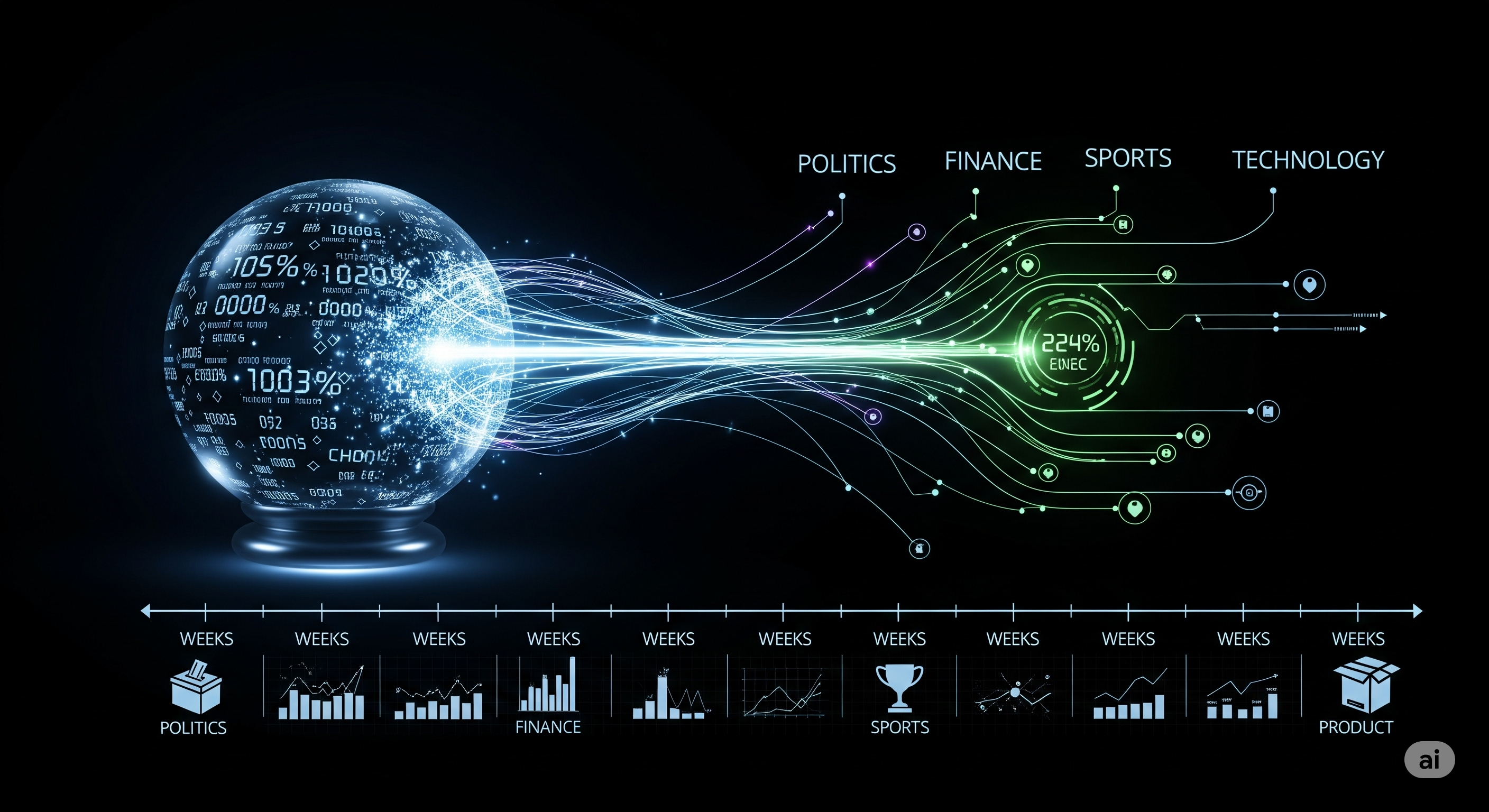

Enterprise teams are deploying agents to answer questions whose truth changes by the hour—markets, elections, sports, product launches. Static leaderboards don’t measure that. FutureX runs as a cron job on reality: it collects new events every day, has agents make predictions, and grades them after events resolve. That turns evaluation from a screenshot into a time series and makes overfitting to benchmark quirks a lot harder.

The design in plain English

- Source breadth: ~2,000 candidate sites distilled to 195 reliable, high-frequency sources across politics, economics/finance, tech, sports, and entertainment.

- Continuous curation: A semi-automated pipeline filters out subjective or unsafe prompts, perturbs wording, and schedules events to resolve on a one‑week horizon for timely, comparable scoring.

- Agent breadth: 25 models spanning base LLMs, search-augmented LLMs, and deep-research agents (open and closed source).

- Four difficulty tiers: from Basic (single-answer) through Wide Search (multi-answer, exactness required) and Deep Search (open-ended, low volatility) to Super Agent (open-ended, high volatility)—a realistic gradient of capabilities.

What’s genuinely new (vs. prior art)

| Benchmark | Update style | Sources | Task type | Model coverage | Frequency |
|---|---|---|---|---|---|
| ForecastBench / Autocast family | Mostly static or market-anchored | Prediction markets + limited news | Simulated or delayed scoring | Few agents | Monthly/one-off |
| FutureBench | Live but narrow | Mainly prediction markets + some news | Weekly | Single open-source agent + a few LLMs | Weekly |
| FutureX | Fully automated, live | 195 curated, high‑refresh sites | Tiered (Basic→Super Agent), open-ended & choice | 25 agents (base, search, deep-research) | Daily ingestion, weekly scoring window |

Why this matters: live breadth and tiering together reduce “leaderboard luck.” Models can’t just memorize catalogs or exploit fixed templates; they must search widely, integrate sources, and reason under uncertainty, which is closer to how teams actually use them.

Three insights product leaders should steal

- Benchmark your pipeline, not just your model. FutureX’s hardest tiers reward interactive search + synthesis. In real deployments, retrieval, browsing, and post-processing often dominate error budgets. Treat evaluation as a system test (tools, prompts, guardrails), not a single-model bake-off.

- Choose a horizon that matches decisions. The one-week resolution window is a smart compromise: enough time for outcomes to be non-obvious, short enough to keep feedback timely. For trading, risk, or marketing ops, align your agent’s KPI window (and alerting cadence) with business latency; daily isn’t always better.

- Score for precision under ambiguity. FutureX’s Wide Search tier zeroes scores for a single extra option while halving for omissions. That’s a great proxy for enterprise tasks like compliance checklists or incident triage, where precision beats verbosity. Borrow that loss function.
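To make the Wide Search idea concrete, here is a minimal sketch of an asymmetric scoring rule. It is not FutureX's published formula: the flat half credit for any omission, the zero for an empty answer, and the function name `wide_search_score` are assumptions made for illustration.

```python
from typing import Iterable


def wide_search_score(predicted: Iterable[str], truth: Iterable[str]) -> float:
    """Score a multi-answer prediction, punishing extras harder than omissions."""
    pred, gold = set(predicted), set(truth)
    if not pred:
        # Assumption: an empty answer earns nothing.
        return 0.0
    if pred - gold:
        # Any option that is not in the ground truth zeroes the score.
        return 0.0
    if pred == gold:
        return 1.0
    # Assumption: a flat half credit when options are missing but none are wrong.
    return 0.5


print(wide_search_score(["A", "B"], ["A", "B"]))       # exact match
print(wide_search_score(["A"], ["A", "B"]))            # one omission
print(wide_search_score(["A", "B", "C"], ["A", "B"]))  # one extra option
```

If you borrow this for a checklist-style task, the penalty for omissions is the knob most worth tuning against your own cost of a missed item.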

Where FutureX is strongest—and where to push next

Strengths

- Scale + diversity: ~500 events/week across domains, continuously refreshed.

- Automated, closed-loop pipeline: collect → predict → resolve → score, with minimal manual touch.

- Volatility-aware tiers: separates stable-fact synthesis from truly moving targets—a key differentiator.
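The closed loop above can be sketched in a few lines. This is an in-memory illustration only, not FutureX's pipeline: the `Forecast` record, the stage function names, the exact-match accuracy metric, and the callable `agent` and `lookup` hooks are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, List, Optional


@dataclass
class Forecast:
    question: str
    asked_on: date
    resolves_on: date
    prediction: str
    outcome: Optional[str] = None  # filled in once the event resolves


def predict_stage(questions: List[str], today: date,
                  agent: Callable[[str], str], horizon_days: int = 7) -> List[Forecast]:
    """Ask the agent about each collected question and record the resolution date."""
    due = today + timedelta(days=horizon_days)
    return [Forecast(q, today, due, agent(q)) for q in questions]


def resolve_stage(forecasts: List[Forecast], today: date,
                  lookup: Callable[[str], Optional[str]]) -> List[Forecast]:
    """Backfill outcomes for forecasts whose resolution date has passed."""
    for f in forecasts:
        if f.outcome is None and f.resolves_on <= today:
            f.outcome = lookup(f.question)  # stays None if still unknown
    return forecasts


def score_stage(forecasts: List[Forecast]) -> Optional[float]:
    """Accuracy over resolved forecasts only; None when nothing has resolved."""
    resolved = [f for f in forecasts if f.outcome is not None]
    if not resolved:
        return None
    return sum(f.prediction == f.outcome for f in resolved) / len(resolved)
```

Scoring only resolved forecasts is what turns the evaluation into a time series: each daily run adds new predictions and closes out older ones.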

Gaps & opportunities

- Calibration & confidence: Public reporting should track not just accuracy but Brier/log loss and calibration drift by tier, so operators can threshold actions.

- Cost-to-quality curves: Deep-research agents can be expensive. Publishing tokens/time vs. accuracy would help teams choose the right class of agent for each workflow step.

- Domain stratification: Finance and sports are structurally different from politics or entertainment. Break out per-domain leaderboards to avoid averaged-away risks.

- Robustness to missing predictions: Live systems fail. FutureX tolerates gaps statistically; product teams should add SLOs and fallbacks (e.g., degrade to narrower tiers or human-in-the-loop when tools fail).
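For the calibration point in the list above, the following sketch shows the standard definitions of the Brier score and log loss for binary outcomes, plus a simple binned calibration gap. The function names and the choice of five equal-width bins are assumptions, and nothing here reflects how FutureX itself reports results.

```python
import math
from typing import List, Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between predicted probability and the 0/1 outcome."""
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def log_loss(probs: Sequence[float], outcomes: Sequence[int], eps: float = 1e-12) -> float:
    """Mean negative log-likelihood; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for p, o in zip(probs, outcomes):
        p = min(max(p, eps), 1 - eps)
        total -= o * math.log(p) + (1 - o) * math.log(1 - p)
    return total / len(probs)


def calibration_gap(probs: Sequence[float], outcomes: Sequence[int], bins: int = 5) -> float:
    """Weighted mean of |average confidence - hit rate| across probability bins."""
    buckets: List[list] = [[] for _ in range(bins)]
    for p, o in zip(probs, outcomes):
        buckets[min(int(p * bins), bins - 1)].append((p, o))
    gap = 0.0
    for bucket in buckets:
        if bucket:
            confidence = sum(p for p, _ in bucket) / len(bucket)
            hit_rate = sum(o for _, o in bucket) / len(bucket)
            gap += len(bucket) / len(probs) * abs(confidence - hit_rate)
    return gap
```

Computing these per tier and per week gives operators a number to threshold on, rather than a single accuracy figure.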

What this means for your roadmap (90‑day plan)

- Shadow-run your agent against FutureX-like data: set up a daily job that scrapes your domain-specific sources, generates one‑week forecasts, and backfills outcomes.

- Adopt tiered gating: route tasks by volatility and ambiguity—e.g., Basic → templated LLM; Deep Search → LLM + retrieval; Super Agent → LLM + browsing + analyst review.

- Instrument confidence: log option probabilities, rationale length, tool calls, and decision latency. Track calibration weekly; auto‑downgrade workflows when drift spikes.

- Report like ops, not research: weekly dashboards with accuracy × cost × latency per tier & domain. Greenlight expansions only where marginal accuracy beats marginal spend.
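Tiered gating with an automatic downgrade might look like the sketch below. The tier definitions follow the four tiers described earlier, but the workflow names, the route chosen for Wide Search, the `max_gap` threshold of 0.15, and the fallback to human review are all illustrative assumptions.

```python
from enum import Enum


class Tier(Enum):
    BASIC = 1
    WIDE_SEARCH = 2
    DEEP_SEARCH = 3
    SUPER_AGENT = 4


# Hypothetical workflow names; the Wide Search route is an assumption,
# since the plan above only names routes for the other three tiers.
WORKFLOWS = {
    Tier.BASIC: "templated_llm",
    Tier.WIDE_SEARCH: "llm_plus_retrieval",
    Tier.DEEP_SEARCH: "llm_plus_retrieval",
    Tier.SUPER_AGENT: "llm_plus_browsing_plus_analyst_review",
}


def classify(open_ended: bool, multi_answer: bool, high_volatility: bool) -> Tier:
    """Map a task's ambiguity and volatility onto one of the four tiers."""
    if open_ended:
        return Tier.SUPER_AGENT if high_volatility else Tier.DEEP_SEARCH
    return Tier.WIDE_SEARCH if multi_answer else Tier.BASIC


def route(tier: Tier, calibration_gap: float, max_gap: float = 0.15) -> str:
    """Pick a workflow, falling back to human review when calibration drifts."""
    if calibration_gap > max_gap:
        return "human_review"  # assumed downgrade target
    return WORKFLOWS[tier]


print(route(classify(open_ended=True, multi_answer=False, high_volatility=True), 0.05))
```

The threshold should come from your own weekly calibration history, not from a fixed constant like the one used here.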

A quick mental model for executives

Think of FutureX as “A/B testing for foresight.” You don’t just want right answers—you want a measured process that gets reliably less wrong, on time, at acceptable cost. The benchmark’s biggest gift is forcing that discipline in public. You can bring the same discipline in-house, privately.

Cognaptus: Automate the Present, Incubate the Future