TL;DR

A role‑based, debate‑driven LLM system—AlphaAgents—coordinates three specialist agents (fundamental, sentiment, valuation) to screen equities, reach consensus, and build a simple, equal‑weight portfolio. In a four‑month backtest starting 2024‑02‑01 on 15 tech names, the risk‑neutral multi‑agent portfolio outperformed the benchmark and single‑agent baselines; risk‑averse variants underperformed in a bull run (as expected). The real innovation isn’t the short backtest—it’s the explainable process: constrained tools per role, structured debate, and explicit risk‑tolerance prompts.

Why this paper matters (for business readers)

Most “AI for investing” demos either hide the logic in a black box or drown you in code. AlphaAgents lands in the middle: auditable reasoning across multiple domain roles with simple, equal‑weight decisions you can trace and challenge. That maps neatly to how real investment committees work—and is directly pluggable into existing portfolio workflows (signal harvesting → optimizer).

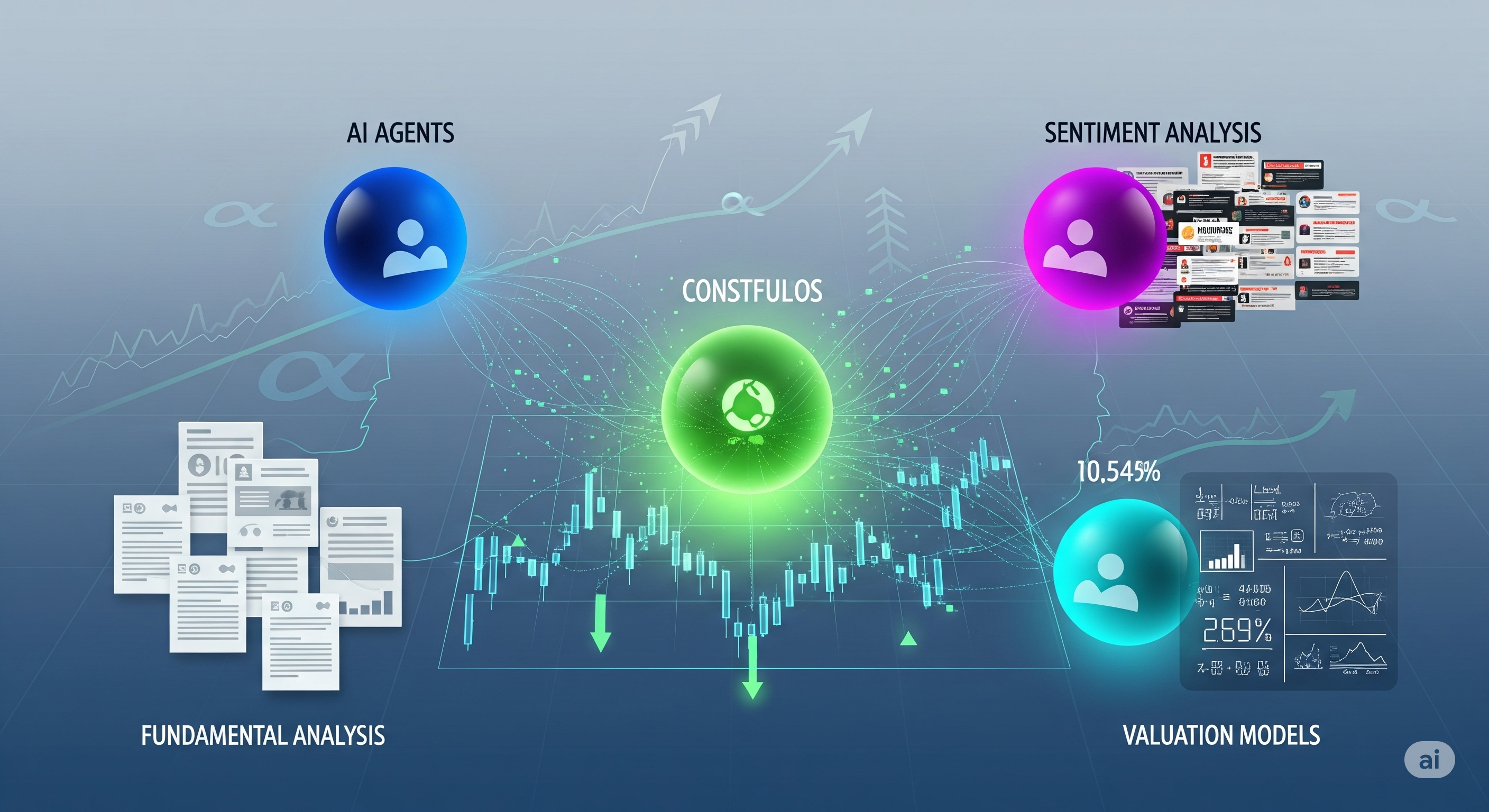

What AlphaAgents actually is

A three‑agent crew coordinated via an AutoGen group chat to produce stock analyses and a final BUY/SELL decision per name.

| Agent | Inputs & Tools | What it decides |
|---|---|---|
| Fundamental | 10‑K/10‑Q via tailored RAG; section‑aware chunking; finance‑specific prompts | Long‑horizon quality, progress vs. goals, red flags |
| Sentiment | Bloomberg news; LLM summarizer with reflection (reason → critique → refine) | Short‑term news/ratings impact |
| Valuation | Yahoo price/volume; numeric tool for annualized return/vol | Risk/return posture, momentum/dispersion cues |

The orchestrator requires each agent to speak at least twice before views are merged. When recommendations clash, a round‑robin debate runs until consensus forms. This process discipline is the product: it reduces single‑perspective blind spots and limits LLM hallucinations by pinning each role to its own tools and data.
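To make the debate protocol concrete, here is a minimal sketch of a round‑robin loop with a minimum number of turns per agent. It is not the paper's code and it does not use AutoGen's API: the `Agent` callable, `run_debate`, the stub agents, and the `max_rounds` cap are all hypothetical names and assumptions made for illustration. Returning `None` when no consensus forms is also an assumption, since the source only says the debate runs until consensus.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical agent interface (not AutoGen's API): an agent reads the
# transcript so far and returns (message, recommendation).
Agent = Callable[[List[str]], Tuple[str, str]]


def run_debate(
    agents: Dict[str, Agent], min_turns: int = 2, max_rounds: int = 6
) -> Tuple[Optional[str], int, List[str]]:
    """Round-robin debate: stop once every agent has spoken at least
    `min_turns` times and all current recommendations agree."""
    transcript: List[str] = []
    votes: Dict[str, str] = {}
    for round_no in range(1, max_rounds + 1):
        for name, agent in agents.items():  # fixed speaking order
            message, vote = agent(transcript)
            transcript.append(f"[{name}] {message} -> {vote}")
            votes[name] = vote
        unanimous = len(set(votes.values())) == 1
        if round_no >= min_turns and unanimous:
            return next(iter(votes.values())), round_no, transcript
    # Assumption: flag the name for human review instead of forcing a vote.
    return None, max_rounds, transcript


def stubborn(vote: str) -> Agent:
    """Stub agent that never changes its recommendation."""
    return lambda transcript: (f"I hold {vote}", vote)


def persuadable(first: str, later: str) -> Agent:
    """Stub agent that switches once it has seen three or more messages."""
    def agent(transcript: List[str]) -> Tuple[str, str]:
        vote = first if len(transcript) < 3 else later
        return (f"I say {vote}", vote)
    return agent


agents = {
    "fundamental": stubborn("BUY"),
    "sentiment": stubborn("BUY"),
    "valuation": persuadable("SELL", "BUY"),
}
decision, rounds, transcript = run_debate(agents)
print(decision, rounds, len(transcript))
```

In a real deployment each stub would wrap an LLM call restricted to that role's tools, and the stored transcript would double as the audit log.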

A clever twist: prompt‑level risk tolerance

Instead of hard coding thresholds, the authors encode risk‑averse vs risk‑neutral investor profiles inside the prompts so the same data can yield different decisions. In practice: the valuation agent might say SELL under risk‑averse (penalizing volatility) and BUY under risk‑neutral (leaning on momentum) for the same name. That mirrors how PMs tailor mandates without rewriting the whole model.

Trade‑off: adjacent profiles (risk‑neutral vs risk‑seeking) were hard to separate. That is a useful caution if you hope prompts alone can span every risk profile in your investment policy statement (IPS).
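A minimal sketch of what prompt‑level risk tolerance could look like: the same role prompt is combined with a swappable risk‑profile clause. The wording of the clauses, the `RISK_PROFILES` dictionary, and `build_system_prompt` are hypothetical; the paper's actual prompts are not reproduced here.

```python
# Hypothetical profile clauses; the authors' real prompt text may differ.
RISK_PROFILES = {
    "risk_averse": (
        "The investor is risk-averse: penalize high volatility and "
        "prefer capital preservation over upside."
    ),
    "risk_neutral": (
        "The investor is risk-neutral: weigh expected return and "
        "momentum without an extra penalty for volatility."
    ),
}


def build_system_prompt(role: str, risk_profile: str) -> str:
    """Compose a role prompt with an explicit risk-tolerance clause."""
    if risk_profile not in RISK_PROFILES:
        raise ValueError(f"unknown risk profile: {risk_profile!r}")
    return (
        f"You are the {role} analyst on an investment committee. "
        f"{RISK_PROFILES[risk_profile]} "
        "Use only your assigned tools and data. "
        "Answer with BUY or SELL and a short justification."
    )


# Same role and data, two mandates: only the profile clause changes.
averse_prompt = build_system_prompt("valuation", "risk_averse")
neutral_prompt = build_system_prompt("valuation", "risk_neutral")
print(averse_prompt)
print(neutral_prompt)
```

Keeping the profile clause separate from the role text makes it easy to snapshot and diff prompts per mandate.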

Evaluation design in one glance

- Universe: 15 randomly selected tech stocks; also used as benchmark (equal‑weight).

- Data window for training/analysis: January 2024 news + filings.

- Portfolio inception: 2024‑02‑01; equal‑weight selections.

- Horizon: ~4 months.

- Comparators: single‑agent (valuation; fundamental), multi‑agent (consensus), benchmark.

- Metrics: cumulative return, rolling Sharpe (risk‑free ≈ 1‑month T‑bill).
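The two metrics above can be sketched in a few lines of standard‑library Python. This is an illustrative implementation under stated assumptions, not the paper's evaluation code: the 252‑day annualization factor, the constant daily risk‑free rate, and the function names are all assumptions.

```python
import math
from statistics import mean, stdev
from typing import List, Optional

TRADING_DAYS = 252  # assumed annualization convention


def cumulative_return(daily_returns: List[float]) -> float:
    """Compound simple daily returns into one cumulative return."""
    growth = 1.0
    for r in daily_returns:
        growth *= 1.0 + r
    return growth - 1.0


def rolling_sharpe(
    daily_returns: List[float], daily_rf: float, window: int
) -> List[Optional[float]]:
    """Annualized Sharpe ratio over a trailing window.

    Entries are None until a full window is available, or when the
    window has zero volatility.
    """
    if window < 2:
        raise ValueError("window must be at least 2")
    out: List[Optional[float]] = []
    for i in range(len(daily_returns)):
        if i + 1 < window:
            out.append(None)
            continue
        excess = [r - daily_rf for r in daily_returns[i + 1 - window : i + 1]]
        sd = stdev(excess)  # sample standard deviation
        if sd == 0:
            out.append(None)
        else:
            out.append(mean(excess) / sd * math.sqrt(TRADING_DAYS))
    return out
```

For example, `cumulative_return([0.10, -0.10])` is about -0.01, a reminder that a 10% gain followed by a 10% loss does not net to zero.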

Limitations: small universe, short horizon, single sector, equal weights (no confidence scaling), and potential look‑ahead if news timestamps aren’t strictly enforced. Treat results as feasibility, not proof of persistent alpha.

Results (and how to read them)

- Risk‑neutral: The multi‑agent portfolio beats both single‑agent portfolios and the benchmark on cumulative return and rolling Sharpe. Why it makes sense: sentiment + valuation capture near‑term dynamics; fundamentals anchor long‑term quality. The synthesis outperforms either alone when the regime is not hostile to tech.

- Risk‑averse: All agent portfolios lag the benchmark during a tech‑led rally—by design they cut volatility and therefore upside. Still, the multi‑agent variant shows shallower drawdowns early in the test, consistent with diversified conservative signals.

Read this as “process alpha”: the debate and role separation produce more robust decisions than any single lens, especially when signals disagree.

What this means for your stack

Here’s a pragmatic way to productionize the idea without boiling the ocean:

1) Keep the agents; change the outputs.

- Emit numeric signals (e.g., $s_F,s_S,s_V \in [-1,1]$) plus uncertainty, not just BUY/SELL.

- Add a confidence score per role (retrieval faithfulness, tool‑use coverage, news freshness) to weight signals.

2) Upgrade selection → allocation.

- Feed role‑weighted expected returns and a volatility estimate into your existing Mean‑Variance or Black‑Litterman optimizer.

- Constrain by risk budget (maximum ex‑ante volatility or drawdown) rather than relying only on equal weights.

3) Institutional controls.

- Data lineage: pin every judgment to artifacts (10‑K spans, news IDs, price slices).

- Guardrails: enforce tool usage for math; reject answers without computed stats; bound agent memory.

- Reviewability: store debate logs as part of model governance; sample for human adjudication.
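Steps 1 and 2 above can be sketched as follows: each role emits a score in [-1, 1] with a confidence in [0, 1], the scores are blended by confidence, and positions are sized by signal over volatility. The sizing rule is a simple long‑only heuristic standing in for a real Mean‑Variance or Black‑Litterman optimizer, and the per‑name cap is not a true risk budget. `RoleSignal`, `combine`, `size_positions`, and the `max_weight` cap are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class RoleSignal:
    score: float       # directional view in [-1, 1]
    confidence: float  # data/tool quality in [0, 1]


def combine(signals: Dict[str, RoleSignal]) -> float:
    """Confidence-weighted average of role scores (0.0 if no confidence)."""
    total = sum(s.confidence for s in signals.values())
    if total == 0:
        return 0.0
    return sum(s.score * s.confidence for s in signals.values()) / total


def size_positions(
    combined: Dict[str, float], vols: Dict[str, float], max_weight: float = 0.2
) -> Dict[str, float]:
    """Long-only weights proportional to positive signal / volatility.

    Weights are capped at `max_weight`; any capped excess is left in
    cash rather than redistributed (a simplifying assumption).
    """
    raw = {t: max(s, 0.0) / vols[t] for t, s in combined.items()}
    total = sum(raw.values())
    if total == 0:
        return {t: 0.0 for t in raw}
    return {t: min(r / total, max_weight) for t, r in raw.items()}


view = combine({
    "fundamental": RoleSignal(score=0.6, confidence=0.9),
    "sentiment": RoleSignal(score=-0.2, confidence=0.3),
    "valuation": RoleSignal(score=0.4, confidence=0.8),
})
weights = size_positions(
    {"A": 0.5, "B": 0.5, "C": -0.3},
    {"A": 0.2, "B": 0.4, "C": 0.3},
    max_weight=0.6,
)
print(round(view, 3), weights)
```

The design choice worth noting is that a low‑confidence role still speaks in the debate log but moves the blended view less.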

Where I'd push it next (roadmap)

| Enhancement | Why it matters | Quick take |
|---|---|---|
| Confidence‑weighted sizing | Aligns capital with conviction & data quality | Map retrieval faithfulness and tool‑use rates to position limits |
| Multi‑horizon agents | Separate 1–3m vs 6–12m signals | Ensemble short/long clocks to stabilize turnover |
| Sector balance & risk parity | Avoid single‑sector beta | Add constraints/penalties in the allocator |
| Live A/B with shadow books | Measure process alpha out‑of‑sample | Spin up paper portfolios alongside production |
| Regime detector | Gate sentiment weight by volatility regime | Down‑weight news in choppy, headline‑driven weeks |
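As one illustration of the regime‑detector row, the sketch below damps the sentiment weight when recent realized volatility is high. Everything here is an assumption made for the example: the function name, the 30% annualized threshold, the 0.5 damping factor, and the use of realized volatility as the regime proxy.

```python
import math
from statistics import stdev
from typing import List


def sentiment_weight(
    recent_returns: List[float],
    base_weight: float = 1.0,
    vol_threshold: float = 0.30,  # assumed annualized volatility cutoff
    damp: float = 0.5,            # assumed down-weighting factor
) -> float:
    """Return a damped sentiment weight in a high-volatility regime."""
    if len(recent_returns) < 2:
        return base_weight  # not enough data to estimate volatility
    realized = stdev(recent_returns) * math.sqrt(252)
    return base_weight * damp if realized > vol_threshold else base_weight


calm = sentiment_weight([0.001, -0.001, 0.002, -0.002])
choppy = sentiment_weight([0.05, -0.05, 0.04, -0.04])
print(calm, choppy)
```

A production version would likely use a smoother gate than a hard threshold, but the idea of scaling one role's influence by market regime is the same.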

Pitfalls & how to avoid them

- Leakage & timing: lock news/filings cutoffs; align time zones; audit any post‑close data.

- Prompt drift: snapshot prompts & tools; run canary tests each deploy.

- Hallucination: forbid free‑form numbers; require computed metrics via the math tool.

- Over‑consensus: if agents converge too easily, inject a devil’s‑advocate pass that must argue the opposite with evidence.

- Coverage gaps: sentiment falls apart when news is sparse; fall back to fundamentals/valuation with explicitly lower confidence.
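The leakage and timing point is the easiest one to enforce in code. Below is a minimal sketch of a cutoff filter that refuses timezone‑naive timestamps and compares everything in UTC. `NewsItem`, `filter_by_cutoff`, and the sample IDs are hypothetical; real news feeds will carry their own schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class NewsItem:
    news_id: str
    published_at: datetime  # must be timezone-aware


def filter_by_cutoff(items: List[NewsItem], cutoff: datetime) -> List[NewsItem]:
    """Keep only items published strictly before the cutoff (in UTC)."""
    if cutoff.tzinfo is None:
        raise ValueError("cutoff must be timezone-aware")
    cutoff_utc = cutoff.astimezone(timezone.utc)
    kept = []
    for item in items:
        if item.published_at.tzinfo is None:
            raise ValueError(f"{item.news_id}: naive timestamp rejected")
        if item.published_at.astimezone(timezone.utc) < cutoff_utc:
            kept.append(item)
    return kept


# Portfolio inception in the source is 2024-02-01; midnight UTC is an
# assumed cutoff convention for this example.
cutoff = datetime(2024, 2, 1, tzinfo=timezone.utc)
utc_minus_5 = timezone(timedelta(hours=-5))
items = [
    NewsItem("n1", datetime(2024, 1, 31, 23, 0, tzinfo=timezone.utc)),
    # 20:00 at UTC-5 is 01:00 UTC on 2024-02-01, so this one leaks.
    NewsItem("n2", datetime(2024, 1, 31, 20, 0, tzinfo=utc_minus_5)),
]
print([i.news_id for i in filter_by_cutoff(items, cutoff)])
```

The second item looks like a January story by its local date but falls after the UTC cutoff, which is exactly the kind of look‑ahead a date‑only filter would miss.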

The bigger picture

AlphaAgents isn’t a portfolio optimizer; it’s a research copilot that produces traceable, role‑separated signals ready for your allocator. The result is a committee‑like process with memory and receipts—exactly what compliance and clients keep asking for.

Bottom line: Treat multi‑agent LLMs as a signal refinery: they compress heterogeneous evidence into auditable views, then let your risk engine decide how much to bet.
