The gist

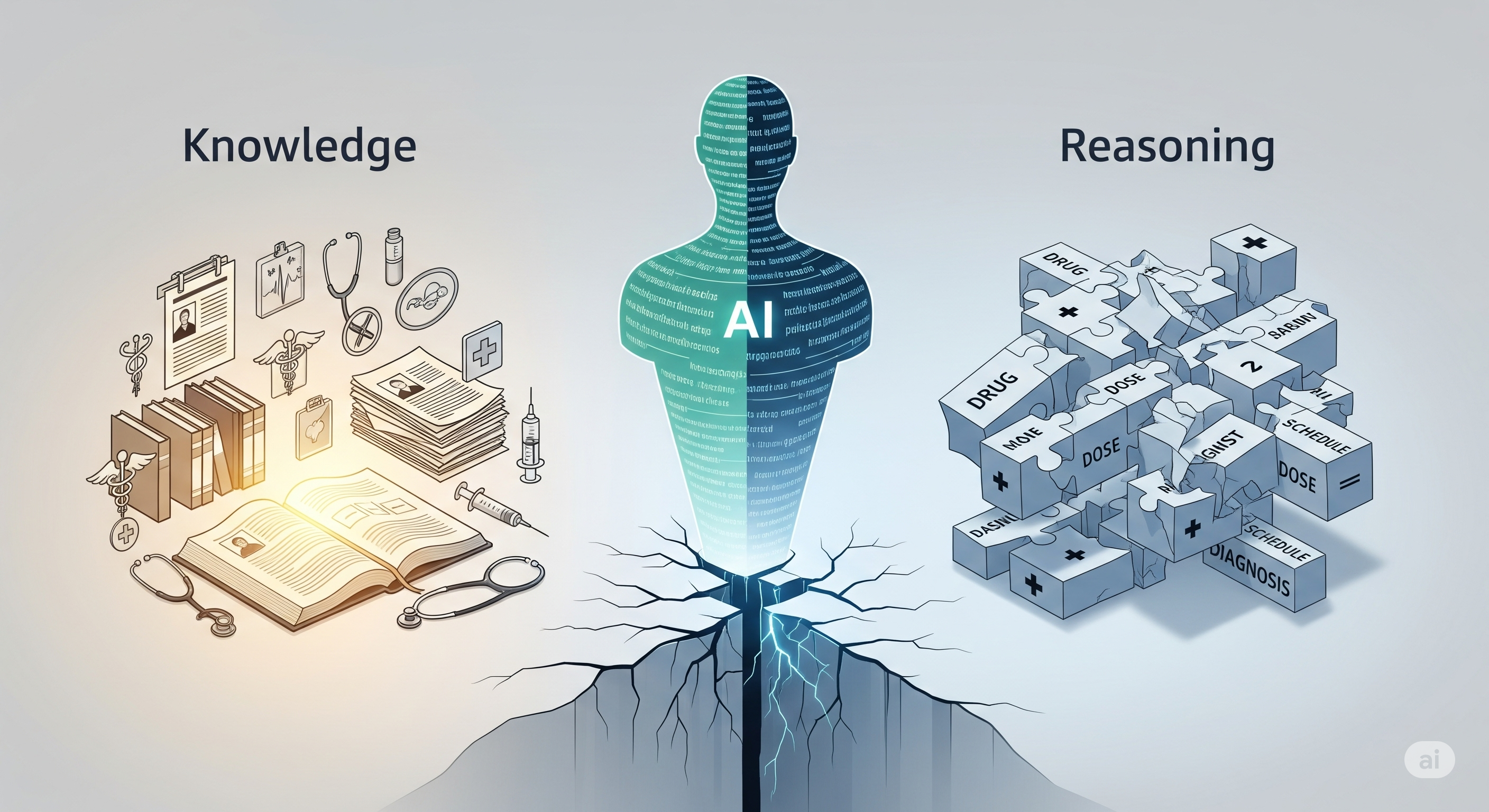

A new clinical natural language inference (NLI) benchmark isolates what models know from how they reason—and the results are stark. State‑of‑the‑art LLMs ace targeted fact checks (≈92% accuracy) but crater on the actual reasoning tasks (≈25% accuracy). The collapse is most extreme in compositional grounding (≈4% accuracy), where a claim depends on multiple interacting clinical constraints (e.g., drug × dose × diagnosis × schedule). Scaling yielded fluent prose, not reliable inference.

Why this matters for business: if your product depends on “LLM reasoning,” you may be shipping a system that recognizes rules but can’t apply them when it counts—a failure mode that won’t show up in standard QA or retrieval tests.

What the paper actually tests

The benchmark, built from parameterized templates, covers four reasoning families. Each item comes with a paired GKMRV probe—Ground Knowledge & Meta‑level Reasoning Verification—that checks whether the model knows the relevant fact and can judge a meta‑statement about it. This decouples knowledge from reasoning.
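A minimal sketch of how a templated item and its paired probe might be represented, assuming a simple premise/hypothesis format. The class names, field names, template wording, and the `neutral` label are hypothetical, not the benchmark's actual schema. The idea it shows is structural: varying the template parameters changes the surface text but not the correct label, while the probe tests the underlying fact on its own.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Probe:
    """GKMRV-style check: does the model know the fact in isolation?"""
    question: str
    answer: bool


@dataclass(frozen=True)
class Item:
    """A main NLI item paired with the knowledge probe it depends on."""
    premise: str
    hypothesis: str
    label: str  # hypothetical label set: entailment / neutral / contradiction
    probe: Probe


# Hypothetical causal-attribution template: a single-arm response rate
# never entails efficacy, whatever the drug or the percentage.
PREMISE = "In a single-arm study of {drug}, {pct}% of patients improved."
HYPOTHESIS = "{drug} is effective for this condition."
PROBE = Probe("Does an uncontrolled response rate alone establish efficacy?", False)


def generate(drugs, pcts):
    """Instantiate the template over every (drug, pct) combination."""
    return [
        Item(
            premise=PREMISE.format(drug=drug, pct=pct),
            hypothesis=HYPOTHESIS.format(drug=drug),
            label="neutral",
            probe=PROBE,
        )
        for drug, pct in product(drugs, pcts)
    ]


items = generate(["drug A", "drug B"], [40, 65])
print(len(items))        # 4
print(items[0].premise)  # In a single-arm study of drug A, 40% of patients improved.
```

Because every item shares one probe, a model that answers the probe correctly but labels the items "entailment" is showing exactly the knowledge/reasoning split the benchmark is designed to expose.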

| Task | What “good” looks like | Typical LLM shortcut | Real‑world risk |
|---|---|---|---|
| Causal Attribution | Distinguish observational report from causal claim (needs counterfactual/comparator) | Treat a numeric outcome (e.g., “40% improved”) as proof of efficacy | Overstating treatment effects from single‑arm data |
| Compositional Grounding | Check joint constraints across variables (drug × dose × patient state × schedule) | Decompose into pairwise plausibilities (drug OK, dose OK → so outcome OK) | Recommending contraindicated regimens under certain renal/hepatic states |
| Epistemic Verification | Weigh evidence vs. authority; reject claims that contradict labs/imaging | Defer to clinician assertion over stronger contradictory evidence | Missing clear diagnoses because a note “sounds expert” |
| Risk State Abstraction | Combine probability with severity; don’t equate “unlikely” with “ruled out” | Use frequency alone; ignore harm magnitude | Discharging patients despite low‑prob, high‑severity risks |
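The compositional-grounding shortcut in the table can be reproduced in a few lines. This sketch assumes a toy knowledge base: `drug_x`, `infection_y`, the dose range, and the eGFR < 30 cap are all hypothetical values chosen for illustration, not clinical guidance. The pairwise check approves each factor in isolation, while the joint check lets patient state change which doses are admissible.

```python
# Toy knowledge base: every value is hypothetical, not clinical guidance.
INDICATIONS = {("drug_x", "infection_y")}
DOSE_RANGE_MG = {"drug_x": (250, 1000)}  # labeled range, normal renal function
RENAL_CAP_MG = {"drug_x": 500}           # assumed cap when eGFR < 30


def pairwise_ok(drug, dose_mg, diagnosis, egfr):
    """The shortcut: judge each factor alone, then AND the verdicts.

    egfr is accepted but never consulted, mirroring how the shortcut
    drops the interaction between patient state and dose.
    """
    lo, hi = DOSE_RANGE_MG[drug]
    return (drug, diagnosis) in INDICATIONS and lo <= dose_mg <= hi


def joint_ok(drug, dose_mg, diagnosis, egfr):
    """Joint check: the admissible dose depends on renal state."""
    if not pairwise_ok(drug, dose_mg, diagnosis, egfr):
        return False
    return not (egfr < 30 and dose_mg > RENAL_CAP_MG[drug])


case = dict(drug="drug_x", dose_mg=1000, diagnosis="infection_y", egfr=25)
print(pairwise_ok(**case), joint_ok(**case))  # True False
```

Every individual fact the pairwise check uses is correct; the error comes entirely from never evaluating the dose × renal-state interaction.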

Key pattern: models hit near‑ceiling GKMRV (they know the rule) yet fail the main NLI (they don’t apply it when reasoning). Consistency across wrong answers is high (≈0.87), indicating systematic heuristics, not random noise.

Why scaling broke here

The paper’s formalization (possible‑worlds semantics, causal do‑calculus, epistemic logic) makes the failure legible:

- No structured internal state. Inference requires composing latent concepts—e.g., EffectiveAtDose(drug, dose, diagnosis)—but models default to flatter associations like “drug ↔ benefit” and “drug ↔ reasonable dose,” missing joint constraints.

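- Counterfactual blind spot. A causal claim needs an estimate of E[Y|do(T=1)] − E[Y|do(T=0)], which cannot be formed without comparator data (a control arm or its equivalent). Models often collapse this to “observed % responders ⇒ effective,” replacing interventions with observations.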

- Epistemic myopia. When a clinician note conflicts with objective evidence, models frequently treat “expert text” as an override, violating evidential coherence.

- Risk without utility. They conflate frequency with risk and ignore harm severity, precisely where medicine (like many businesses) requires expected‑utility thinking.
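To make the counterfactual blind spot concrete, here is a minimal sketch contrasting a single-arm report with a comparator-based estimate. It assumes comparable (e.g., randomized) arms, under which the difference in response rates estimates E[Y|do(T=1)] − E[Y|do(T=0)]; the function name and the 38% control rate are hypothetical.

```python
from typing import Optional


def effect_estimate(t_resp: int, t_n: int,
                    c_resp: Optional[int] = None,
                    c_n: Optional[int] = None) -> Optional[float]:
    """Estimate E[Y|do(T=1)] - E[Y|do(T=0)] as a difference in response rates.

    Valid only if the arms are comparable (e.g. randomized). With no
    comparator there is no estimate at all: a single-arm rate says
    nothing about outcomes under do(T=0).
    """
    if c_resp is None or c_n is None:
        return None
    return t_resp / t_n - c_resp / c_n


single_arm = effect_estimate(40, 100)        # "40% improved", no comparator
two_arm = effect_estimate(40, 100, 38, 100)  # hypothetical control arm
print(single_arm)         # None
print(round(two_arm, 2))  # 0.02
```

The same "40% improved" headline supports no effect estimate on its own, and with this hypothetical control arm it shrinks to about two percentage points.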

These are representational limits, not data gaps. The GKMRV success shows the knowledge is there—the machinery to use it isn’t.

Implications beyond healthcare

This is a clinical benchmark, but the pathologies generalize to compliance, finance, and operations:

- Policy & compliance: “Mentions policy” ≠ “applies policy under exceptions.” Expect compositional failures when multiple clauses interact.

- Pricing & risk: “High frequency” events may dominate LLM advice even when low‑frequency, high‑severity events should govern decisions.

- Agentic workflows: Tool‑use plans may look cogent but fail when steps require joint constraints (e.g., permission × context × user role).

A practical checklist for builders

If you’re deploying LLMs in high‑stakes or regulated contexts, bake these into your engineering playbook:

- Split the stack (K ↔ R): Architect explicit separation between Knowledge (retrieval/KBs/constraints) and Reasoning (graph/solver/Bayesian or rule engine). Treat the LLM as an orchestrator, not the court of last resort.

- Contract‑tests for composition: Create template families where the correct label requires joint satisfaction across ≥3 variables. Track a reasoning–knowledge gap metric (main‑task − GKMRV).

- Counterfactual hooks: For causal claims, require a comparator artifact (control arm, A/B log, diff‑in‑diff). Block actions if only single‑arm evidence is present.

- Severity‑aware risk scoring: Encode Risk = Probability × Harm. Have a separate severity map (taxonomy + weights). Never let frequency alone drive “highest risk.”

- Evidence over authority: Add guards that down‑weight assertions when contradicted by higher‑fidelity signals (labs/logs/telemetry). Build an “authority‑override alert.”

- Refusal modes: Prefer abstaining or routing the case onward when constraints can’t be jointly satisfied. Measure and reward abstention quality.
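For the severity-aware scoring item, a minimal sketch of Risk = Probability × Harm, assuming harm weights come from a separate severity map. The outcomes, probabilities, and weights below are invented for illustration. Ranking by frequency and ranking by expected harm select different "highest risks".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    probability: float  # chance over the decision horizon
    harm: float         # weight from a separate severity map


def risk(o: Outcome) -> float:
    """Expected harm: Risk = Probability * Harm."""
    return o.probability * o.harm


# Invented numbers on an arbitrary harm scale, for illustration only.
outcomes = [
    Outcome("mild nausea", probability=0.20, harm=1.0),
    Outcome("rash", probability=0.05, harm=3.0),
    Outcome("missed pulmonary embolism", probability=0.01, harm=1000.0),
]

most_frequent = max(outcomes, key=lambda o: o.probability).name
highest_risk = max(outcomes, key=risk).name
print(most_frequent)  # mild nausea
print(highest_risk)   # missed pulmonary embolism
```

Keeping the severity map as separate data, rather than burying weights in prompts, makes the weights reviewable and testable on their own.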
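An authority-override alert can be sketched in the same spirit, assuming each source type carries a fidelity rank. The `FIDELITY` table, the source names, and the tie-handling rule are hypothetical choices: the highest-fidelity sources decide, disagreement among them abstains, and an alert fires whenever a lower-fidelity assertion was overruled.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical fidelity ranks: higher outranks lower on factual findings.
FIDELITY = {"clinician_note": 1, "imaging": 3, "lab": 3}


@dataclass(frozen=True)
class Assertion:
    source: str   # key into FIDELITY
    finding: str
    present: bool


def resolve(assertions, finding) -> tuple[Optional[bool], bool]:
    """Return (verdict, override_alert); a verdict of None means abstain."""
    relevant = [a for a in assertions if a.finding == finding]
    if not relevant:
        return None, False
    top = max(FIDELITY[a.source] for a in relevant)
    verdicts = {a.present for a in relevant if FIDELITY[a.source] == top}
    if len(verdicts) > 1:
        return None, False  # the strongest sources disagree: abstain
    verdict = verdicts.pop()
    # Alert whenever a weaker source asserted the opposite of the verdict.
    alert = any(a.present != verdict for a in relevant if FIDELITY[a.source] < top)
    return verdict, alert


record = [
    Assertion("clinician_note", "pneumonia", present=False),
    Assertion("imaging", "pneumonia", present=True),
]
print(resolve(record, "pneumonia"))  # (True, True)
```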

Reference architecture (pattern sketch)

```mermaid
flowchart LR
    U[User / Upstream System] -->|query| LLM
    LLM -->|retrieve facts & constraints| KB[(Domain KB / RAG)]
    LLM -->|emit claim graph| CG[Claim Graph]
    CG --> RE[Reasoner: rules + causal + optimization]
    RE --> VR[Verifiers: GKMRV, counterfactual, composition]
    VR --> SG[Safety Gate]
    SG -->|approve/abstain/route| OUT[Decision / Explanation]
```

Key idea: the LLM writes a claim graph; dedicated reasoners decide.
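To illustrate what "dedicated reasoners decide" could mean in code, here is a sketch of the claim graph → reasoner → safety gate path. It assumes the LLM's output has been flattened into a dict of facts (a fuller claim graph would also keep the support edges between claims). `ClaimGraph`, `Rule`, the two rules, and the 500 mg renal cap are hypothetical. Missing inputs route the case, violated constraints abstain, and only a clean pass approves.

```python
from dataclasses import dataclass
from typing import Callable

Facts = dict[str, object]


@dataclass(frozen=True)
class ClaimGraph:
    """Flattened LLM output: the proposed conclusion plus the facts it rests on."""
    conclusion: str
    facts: Facts


@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[Facts], bool]   # is this constraint relevant here?
    needs: tuple[str, ...]             # facts the check reads
    violated: Callable[[Facts], bool]  # True if the constraint is broken


# Hypothetical rules; the 500 mg cap is illustrative, not guidance.
RULES = [
    Rule("renal_dose_cap",
         applies=lambda f: "dose_mg" in f,
         needs=("dose_mg", "egfr"),
         violated=lambda f: f["egfr"] < 30 and f["dose_mg"] > 500),
    Rule("causal_needs_comparator",
         applies=lambda f: f.get("claim_type") == "causal",
         needs=("has_comparator",),
         violated=lambda f: not f["has_comparator"]),
]


def decide(graph: ClaimGraph) -> tuple[str, list[str]]:
    """Safety gate: route on missing inputs, abstain on violations, else approve."""
    active = [r for r in RULES if r.applies(graph.facts)]
    missing = [r.name for r in active if any(k not in graph.facts for k in r.needs)]
    if missing:
        return "route", missing
    broken = [r.name for r in active if r.violated(graph.facts)]
    if broken:
        return "abstain", broken
    return "approve", []


print(decide(ClaimGraph("start drug_x 1000 mg", {"dose_mg": 1000, "egfr": 25})))
# ('abstain', ['renal_dose_cap'])
```

The LLM's job shrinks to extracting facts faithfully; whether those facts jointly license the conclusion is decided by rules that can be audited and unit-tested.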

What to measure (beyond accuracy)

- Reasoning–Knowledge Gap (RKG): Main‑task accuracy − GKMRV accuracy (closer to zero is better; a large negative value means the model knows rules it fails to apply).

- Composition Pass‑Rate: % cases where all constraints are jointly satisfied.

- Counterfactual Fidelity: % causal claims with explicit comparator evidence.

- Severity‑Weighted Risk Error: |estimated risk − ground‑truth expected loss|.

Track these per release; regressions here predict bad surprises in production.
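The four metrics can be computed from per-item evaluation records. This sketch assumes a hypothetical `Result` record per item, reports rates as fractions rather than percentages, and averages the risk error as a mean absolute error (an assumption; the text defines only the per-item difference). RKG keeps the main-task-minus-GKMRV convention from the list above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Result:
    """One evaluated item (hypothetical record format)."""
    main_correct: bool
    probe_correct: bool               # the paired GKMRV probe
    constraints_total: int = 0        # constraints the item requires jointly
    constraints_met: int = 0
    causal_claim: bool = False
    has_comparator: bool = False
    est_risk: Optional[float] = None
    true_expected_loss: Optional[float] = None


def mean(values) -> float:
    values = list(values)
    return sum(values) / len(values) if values else float("nan")


def release_metrics(results: list[Result]) -> dict[str, float]:
    comp = [r for r in results if r.constraints_total > 0]
    causal = [r for r in results if r.causal_claim]
    scored = [r for r in results
              if r.est_risk is not None and r.true_expected_loss is not None]
    main_acc = mean(r.main_correct for r in results)
    probe_acc = mean(r.probe_correct for r in results)
    return {
        # Main-task minus GKMRV: negative when knowledge outruns reasoning.
        "rkg": main_acc - probe_acc,
        "composition_pass_rate": mean(r.constraints_met == r.constraints_total for r in comp),
        "counterfactual_fidelity": mean(r.has_comparator for r in causal),
        "severity_weighted_risk_error": mean(
            abs(r.est_risk - r.true_expected_loss) for r in scored),
    }


demo = [
    Result(True, True, constraints_total=3, constraints_met=3),
    Result(False, True, constraints_total=4, constraints_met=2),
    Result(False, True, causal_claim=True, has_comparator=False),
    Result(True, True, causal_claim=True, has_comparator=True,
           est_risk=0.5, true_expected_loss=2.0),
]
print(release_metrics(demo))
```

Emitting these as one dictionary per release makes it straightforward to diff them against the previous release and flag regressions.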

Bottom line

LLMs can “quote the rulebook” but often can’t referee the game. Reliable clinical—and enterprise—reasoning needs modular designs that make counterfactuals, constraints, and severity first‑class citizens. Treat fluent text as UX, not proof of thought.

Cognaptus: Automate the Present, Incubate the Future.