Agentic LLMs are graduating from chat to control rooms—taking actions, maintaining memory, and optimizing business processes. Inventory is a natural proving ground: a clean cocktail of uncertainty, economics, and coordination. AIM-Bench arrives precisely here, testing LLM agents across newsvendor, multi-period replenishment, the Beer Game, two-level warehouses, and a small supply network—each with explicit uncertainty sources (stochastic demand, variable lead times, and partner behavior).

Bottom line: most models exhibit human-like biases—anchoring on mean demand and amplifying variability upstream (bullwhip)—and only improve when we inject cognitive reflection or information sharing.

Why this matters for operators—not just researchers

- Inventory economics are nonlinear: tiny biases cascade into stockouts or expensive overstock.

- Agents act repeatedly: small mis-calibrations compound across periods and tiers.

- Cross-party dynamics dominate: a good local policy can still create a bad global outcome.

What AIM-Bench actually measures (and why it’s clever)

AIM-Bench doesn’t stop at outcome KPIs; it instruments the process:

- Outcome metrics: average cost, stockout rate, turnover rate.

- Process metric: distance from each order to the ex-post optimal quantity (via a decomposed dynamic program). This is crucial—it separates lucky outcomes from systematic policy quality.

The biases uncovered—and how to read them like an operator

1) Pull-to-center anchoring in Newsvendor

Models frequently anchor at the mean demand, only partially adjusting toward the optimal critical-ratio order. Some SOTA models still show sizable anchor factors; others resist, but inconsistently across frames. Operator’s translation: expect orders pulled toward the mean (for roughly symmetric demand, too low when the critical ratio exceeds 0.5 and too high when it falls below), especially when incentives change the framing (profit vs. loss) but not the underlying state. Cognitive reflection prompts reduce anchoring.
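For reference, the critical-ratio logic and the anchor factor can be written out explicitly. The cost model below is the textbook single-period newsvendor with demand CDF $F$, mean $\mu$, per-unit underage cost $c_u$, and per-unit overage cost $c_o$. The linear anchoring form is the standard behavioral-operations formulation; we assume, but have not verified, that it matches how AIM-Bench defines its anchor factor.

```latex
\begin{aligned}
C(q) &= c_u\,\mathbb{E}\big[(D-q)^+\big] + c_o\,\mathbb{E}\big[(q-D)^+\big] \\
C'(q) &= -c_u\,\big(1 - F(q)\big) + c_o\,F(q) = 0
  \;\Longrightarrow\; F(q^*) = \frac{c_u}{c_u + c_o} = \mathrm{CR} \\
q &= \alpha\,\mu + (1-\alpha)\,q^*
  \;\Longrightarrow\; \alpha = \frac{q^* - q}{q^* - \mu} \qquad (q^* \neq \mu)
\end{aligned}
```

Here $\alpha = 0$ means the order sits at $q^*$, and $\alpha = 1$ means it sits exactly at the mean.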

Practical guardrail

- Prompt for System 2 reasoning: “State the critical ratio; compute q*; compare to mean; justify variance-weighted deviation.”

- Log each decision with the trio (mean, q, q*) and the delta; alert when |q − q*| exceeds a tolerance band.
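The two guardrails above can be combined into a small audit step. A minimal sketch, assuming normally distributed demand with known mean and standard deviation; `OrderAudit`, `critical_ratio`, and `audit_order` are hypothetical names, and the tolerance is a tuning choice, not a value from AIM-Bench.

```python
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class OrderAudit:
    """One logged decision: the trio (mean, q, q*) plus the gap and alert flag."""
    mean: float
    q: float
    q_star: float
    delta: float
    alert: bool


def critical_ratio(underage_cost: float, overage_cost: float) -> float:
    """CR = c_u / (c_u + c_o), the service level that minimizes expected cost."""
    return underage_cost / (underage_cost + overage_cost)


def audit_order(q: float, mu: float, sigma: float, underage_cost: float,
                overage_cost: float, tolerance: float) -> OrderAudit:
    """Compare the agent's order q with q* = F^{-1}(CR).

    Assumes normally distributed demand N(mu, sigma^2); swap in the inverse
    CDF of whatever demand distribution you actually believe in.
    """
    cr = critical_ratio(underage_cost, overage_cost)
    q_star = NormalDist(mu, sigma).inv_cdf(cr)
    delta = q - q_star
    return OrderAudit(mean=mu, q=q, q_star=q_star, delta=delta,
                      alert=abs(delta) > tolerance)


# Price 12, unit cost 3, zero salvage: c_u = 12 - 3 = 9, c_o = 3, so CR = 0.75.
audit = audit_order(q=100, mu=100, sigma=20, underage_cost=9,
                    overage_cost=3, tolerance=5)
print(audit)
```

With CR = 0.75, q* is roughly 113.5, so an agent that simply orders the mean (100) trips the 5-unit tolerance: exactly the pull-to-center pattern described above.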

2) Demand chasing: less than humans, but still there

Human planners often overreact to the last observed demand. LLM agents, on average, chase less, but correlations between the order $q_t$ and the previous period's demand $d_{t-1}$ can still appear in certain setups. Operator’s translation: penalize single-period recency; reward multi-period smoothing.

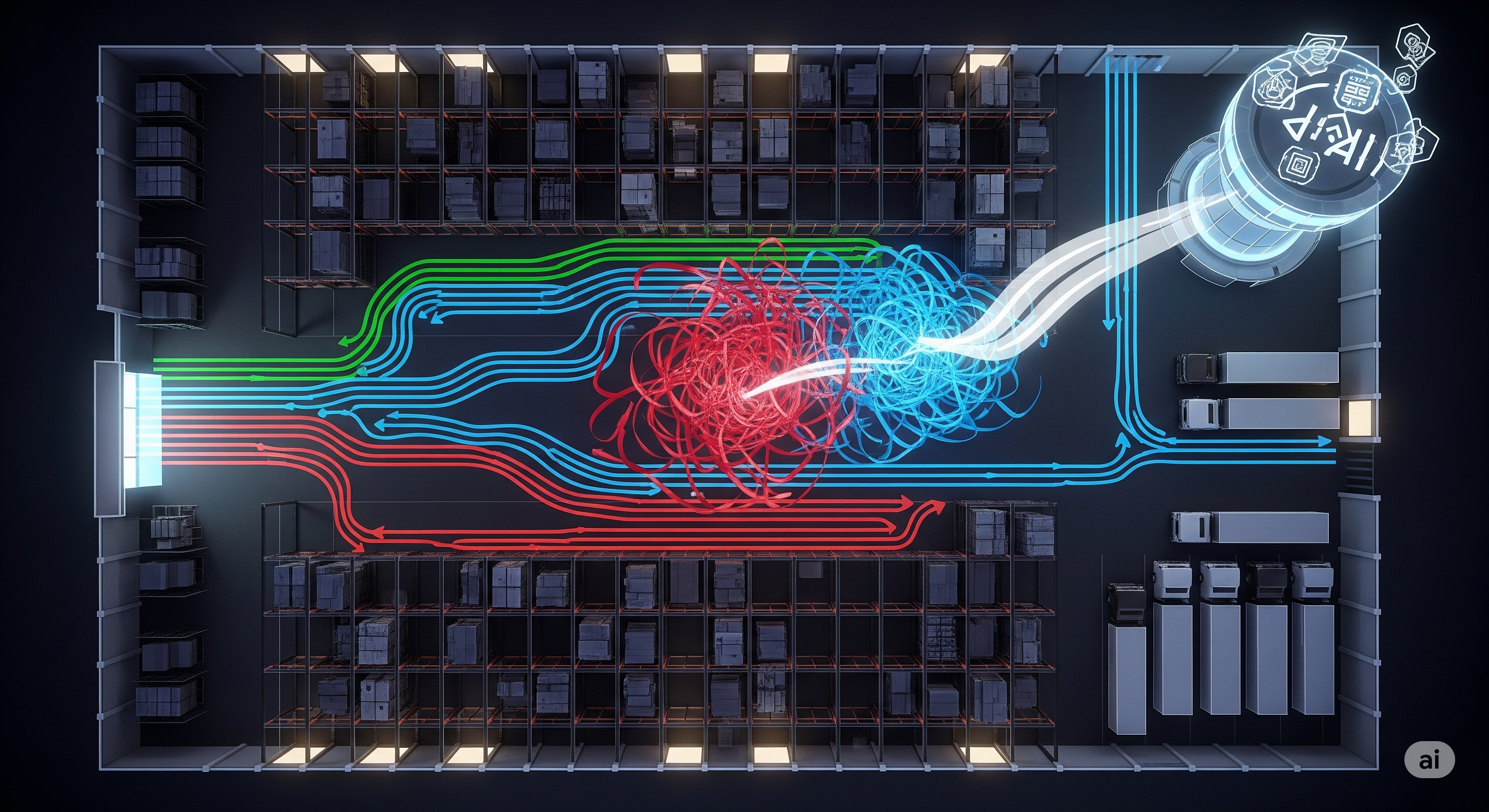

3) Bullwhip in multi-echelon play

In the Beer Game and small networks, variance amplification appears across tiers (bullwhip ratio $\beta > 1$, meaning a tier's orders vary more than the demand it serves). Information sharing helps, but it can also trigger action conformity (an LLM starts copying its partners), which risks under-exploration. Operator’s translation: sharing improves visibility, but you still need independent policy checks to avoid herding.
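The $\beta$ above is commonly computed as a variance ratio. A minimal sketch, assuming each tier's order history and the end-customer demand series are available; `bullwhip_ratios` is a hypothetical helper, and using end-customer demand as the denominator is one convention (some studies divide by each tier's incoming orders instead).

```python
from statistics import pvariance


def bullwhip_ratios(demand: list[float],
                    tier_orders: dict[str, list[float]]) -> dict[str, float]:
    """beta per tier = Var(orders the tier places) / Var(end-customer demand)."""
    base = pvariance(demand)
    if base == 0:
        raise ValueError("end-customer demand has zero variance; beta is undefined")
    return {tier: pvariance(orders) / base for tier, orders in tier_orders.items()}


demand = [100, 110, 90, 100]
orders = {
    "retailer": [100, 120, 80, 100],
    "wholesaler": [100, 130, 70, 100],
}
print(bullwhip_ratios(demand, orders))
```

Here demand variance is 50, the retailer's order variance is 200, and the wholesaler's is 450, giving β = 4 and β = 9: amplification that grows as you move upstream.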

Model behaviors in plain English

AIM-Bench’s comparisons point to distinct “personalities” under uncertainty:

| Model tendency | Inventory posture | Typical failure mode | Mitigator |
|---|---|---|---|
| Mean-anchored LLM | Orders near historical average | Misses asymmetric costs when CR ≠ 0.5 | Force a CR → q* chain of thought; audit |
| Overestimator | Low turnover, few stockouts | Cost bloat from holding | Penalize variance-sensitive overage; tune service target |
| Under-explorer (conformist) | Mirrors partner actions | Herding; suppresses beneficial deviation | Add diversity/entropy regularizer on actions |
| Reactive chaser | Correlates with last demand | Oscillation and bullwhip | Multi-period filters; EWMA constraints |

These are operational phenotypes you can test for and guardrail—regardless of base model.
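The "EWMA constraints" mitigator for reactive chasers can be sketched as a clamp around a smoothed demand level. This is a hypothetical guardrail: `ewma_guard`, the smoothing weight `alpha`, and the ±20% `band` are illustrative assumptions, not parameters from AIM-Bench.

```python
def ewma_guard(proposed: list[float], demands: list[float], initial_level: float,
               alpha: float = 0.3, band: float = 0.2) -> list[float]:
    """Clamp each proposed order to +/- band around an EWMA of past demand.

    The smoothed level used at period t includes demand only through t-1,
    so the guard never peeks at the demand it is ordering against.
    """
    if len(proposed) != len(demands):
        raise ValueError("proposed orders and demands must have equal length")
    level = initial_level
    guarded = []
    for q, d in zip(proposed, demands):
        low, high = (1 - band) * level, (1 + band) * level
        guarded.append(min(max(q, low), high))
        # Update the smoothed level only after this period's order is committed.
        level = alpha * d + (1 - alpha) * level
    return guarded


print(ewma_guard([100, 150, 60], [130, 70, 100], initial_level=100))
```

In this run the spike to 150 is capped near 130.8 and the drop to 60 is lifted to about 77.8. A hard band can also block legitimate regime shifts (a promotion, a new channel), so pair it with an override path and human review.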

What to implement if you’re piloting LLM agents in inventory

- Two-layer decision protocol (Reason → Act):

  - Reason: require explicit CR computation, an assumed demand distribution, and a q* derivation.

  - Act: commit to q only after a “delta-to-optimal” check and a risk-cost decomposition (underage vs. overage).

- Process metric in production: log $d(a, a^*)$ per order (or a proxy via a learned oracle) and alert when the rolling z-score breaches limits. This catches policy drift before costs explode.

- Cognitive reflection prompts by default: bake in a short habit loop of compute → compare → critique → commit. AIM-Bench’s results show anchoring factors drop with this nudge.

- Information sharing, with independence: share downstream demand and pipeline states, but add anti-herding regularizers (e.g., penalize excessive action similarity; require counterfactual justifications).

- Benchmark before deploy: run your own SKUs through AIM-Bench-like sandboxes (matching demand and lead-time regimes) and select models per regime, because fixed lead times vs. stochastic variable lead times (VLTs) can invert the winners.
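The process-metric item in the list above can be sketched as a rolling z-score monitor. A minimal sketch, assuming you already have an a* per order (from your own DP solver or a learned oracle) and taking $d(a, a^*)$ as the absolute gap $|a - a^*|$; `DriftMonitor`, `window`, `z_limit`, and `min_history` are hypothetical names and defaults, not part of AIM-Bench.

```python
from collections import deque
from statistics import mean, pstdev


class DriftMonitor:
    """Rolling z-score alert on per-order distance to optimal, d(a, a*).

    Hypothetical helper: d is taken as |a - a*|. Only upward deviations
    alert, since a larger distance is the bad direction.
    """

    def __init__(self, window: int = 20, z_limit: float = 3.0, min_history: int = 5):
        self.history = deque(maxlen=window)  # most recent distances only
        self.z_limit = z_limit
        self.min_history = min_history

    def observe(self, order: float, optimal: float) -> bool:
        """Record one decision and return True if its distance breaches the limit."""
        d = abs(order - optimal)
        alert = False
        if len(self.history) >= self.min_history:
            mu, sd = mean(self.history), pstdev(self.history)
            # With a perfectly flat history, any increase counts as a breach.
            alert = (d - mu) / sd > self.z_limit if sd > 0 else d > mu
        # Breaching points still enter the window, so a persistent shift is
        # gradually absorbed; freeze the baseline instead if you prefer.
        self.history.append(d)
        return alert


monitor = DriftMonitor(window=10)
for i in range(10):
    monitor.observe(order=101 + i % 2, optimal=100)  # distances alternate 1, 2
print(monitor.observe(order=120, optimal=100))  # distance 20 vs. a mean of 1.5
```

The one-sided test is a design choice: an order that suddenly lands closer to optimal is not drift worth paging anyone about.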

Where AIM-Bench pushes the conversation forward

- From outcomes to mechanisms: the distance-to-optimal metric explains why costs diverge.

- From single-agent to networks: it stress-tests coordination pathologies, not just forecasting.

- From bias discovery to mitigation: cognitive reflection and information sharing are not silver bullets, but they’re engineerable levers you can ship today.

What we still don’t know (and should test next)

- RL fine-tuning vs. prompt-only fixes: the paper focuses on prompt levers; policy learning may reduce bias further, but watch for overfitting to simulators.

- Robustness across demand regimes: heavy-tailed, intermittent, or promotion-driven demand may flip bias patterns.

- Governance for agent conformity: when visibility rises, will your agents become copycats? Build diversity constraints early.
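A simple version of the "penalize excessive action similarity" idea from the implementation checklist can be sketched as a conformity rate plus a thresholded penalty. This is a hypothetical regularizer: `conformity_rate`, `herding_penalty`, the 5% match tolerance, and the 0.6 threshold are illustrative assumptions, and it is a plainer technique than the entropy regularizer mentioned in the phenotype table.

```python
def conformity_rate(own: list[float], partner: list[float], rel_tol: float = 0.05) -> float:
    """Share of periods in which our order lands within rel_tol of the partner's."""
    if len(own) != len(partner) or not own:
        raise ValueError("need equal-length, non-empty order histories")
    matches = sum(
        1 for a, b in zip(own, partner)
        if abs(a - b) <= rel_tol * max(abs(b), 1.0)  # floor avoids a zero tolerance
    )
    return matches / len(own)


def herding_penalty(own: list[float], partner: list[float], threshold: float = 0.6,
                    weight: float = 10.0, rel_tol: float = 0.05) -> float:
    """Zero while conformity stays below threshold, then grows linearly."""
    excess = conformity_rate(own, partner, rel_tol) - threshold
    return weight * max(excess, 0.0)


own = [100, 100, 120, 90, 105]
partner = [101, 99, 100, 90, 104]
print(conformity_rate(own, partner), herding_penalty(own, partner))
```

Here four of five orders match the partner, so the rate is 0.8 and the penalty is about 2.0. High conformity is not automatically wrong (the partner may simply be right), which is why pairing the penalty with counterfactual justifications matters.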

Cognaptus: Automate the Present, Incubate the Future