TL;DR

Agentic AI is moving from toy demos to systems that must coordinate, persist memory, and interoperate across teams and services. A new survey maps the landscape—frameworks (LangGraph, CrewAI, AutoGen, Semantic Kernel, Agno, Google ADK, MetaGPT), communication protocols (MCP, ACP, A2A, ANP, Agora), and the fault lines that still block production scale. This article distills what’s ready now, what breaks in production, and how to architect for the protocols coming next.

Why this paper matters (for builders and buyers)

Most agent demos fall apart outside the lab because they assume static roles, closed worlds, and manually wired integrations. The paper we’re unpacking proposes a useful taxonomy of (1) agent frameworks, (2) communication protocols, and (3) service-computing readiness. We translate that into concrete stack choices and risk mitigations for anyone shipping automation or AI copilots inside an enterprise.

The real shift: from scripts to services

Agentic AI isn’t just “LLM with tools.” It’s a coordination problem: reasoning + memory + messaging + safe execution. Classical multi-agent systems (MAS), such as belief-desire-intention (BDI) architectures, gave us concepts for autonomy and cooperation; LLM agents add probabilistic planning, natural-language interfaces, and dynamic tool use. The net effect is that agents should be designed as services that discover, negotiate, and compose, not as long prompts glued to a single model.

What this changes in practice

- Design for change, not for roles. Hard-coding “planner/critic/executor” ossifies teams. Prefer graph/state-machine orchestration and registries that enable role liquidity (agents taking on or handing off roles at runtime).

- Standardize I/O early. JSON schemas and typed artifacts (“Artifacts”/“Agent Cards” in A2A) reduce prompt drift and enable cross-team reuse.

- Guardrails must be first-class. Validators, policy layers, and sandboxed execution belong in the substrate—not sprinkled on top of prompts.
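
To make "standardize I/O early" concrete, here is a minimal sketch of a typed artifact with validation at the boundary. The `Artifact` class, its field names, and the `ALLOWED_KINDS` set are assumptions made for illustration; they are not taken from A2A or any framework.

```python
import json
from dataclasses import dataclass
from typing import Any

# Hypothetical artifact kinds, chosen for illustration only.
ALLOWED_KINDS = {"task", "plan", "decision", "evidence", "result"}

@dataclass(frozen=True)
class Artifact:
    kind: str                # one of ALLOWED_KINDS
    schema_version: str      # lets consumers reject contracts they do not know
    payload: dict[str, Any]  # structured content, never a raw prompt string

def parse_artifact(raw: str) -> Artifact:
    """Parse a JSON string into an Artifact, rejecting malformed input."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("artifact must be a JSON object")
    missing = {"kind", "schema_version", "payload"} - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if data["kind"] not in ALLOWED_KINDS:
        raise ValueError(f"unknown artifact kind: {data['kind']!r}")
    if not isinstance(data["payload"], dict):
        raise ValueError("payload must be a JSON object")
    return Artifact(data["kind"], str(data["schema_version"]), data["payload"])
```

Rejecting unknown kinds and missing fields at parse time means a downstream agent never has to guess at the shape of what it received.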

Frameworks: how they really differ

Below is a pragmatic, opinionated map tailored for product teams.

| Framework | Where it shines | Where it hurts | Use it when… |
|---|---|---|---|
| LangGraph | Deterministic orchestration; stateful graphs; tracing | Needs surrounding services for discovery/publishing | You need robust flows, retries, and visibility for L3/L4 runbooks |
| CrewAI | Role-based collaboration with quick team setup | Static roles; thinner safety substrate | You want fast multi-agent demos and human-in-the-loop teamwork |
| AutoGen | Conversational multi-agent loops; shared tools | Code-exec risk; orchestration logic can sprawl | You need agents to “talk to code” or each other rapidly |
| Semantic Kernel | Enterprise-friendly planners/skills; extensible memory | Requires integration for service discovery | You want .NET/enterprise alignment with policy control |
| Agno | Declarative agents; lighter-weight trust layer | Early-stage, requires engineering for depth | You need explainable, reproducible agents with minimal overhead |
| Google ADK | Scalable multi-agent orchestration; cloud-native patterns | Experimental; cloud lock-in for some primitives | You’re standardizing on GCP and need distributed agent teams |
| MetaGPT | Software-team simulation; role templates | Rigid roles; code-gen safety surface | You want structured software workflows and documents quickly |

Rule of thumb: pick LangGraph for the “spine,” then add CrewAI/AutoGen for interaction-heavy teams, and Semantic Kernel where enterprise policy and skills matter. Keep memory and guardrails framework-agnostic so you can swap layers.

Memory: the hidden contract you must get right

Memory is not a feature—it’s the contract that keeps reasoning reliable over days/weeks across runs.

Memory layers to separate:

- Short-term (working) memory – local context/state between steps or nodes.

- Long-term/user memory – preferences, history, and durable facts.

- Semantic memory – embeddings and reasoning traces for reuse.

- Procedural memory – reusable action plans/skills and SOPs.

- Episodic memory – sharp snapshots of past incidents (also a risk surface).

Implementation notes

- Treat memory stores as versioned APIs with quotas, PII redaction, and TTLs.

- Index artifacts, not raw prompts (e.g., plans, diffs, decisions) for better replay and audit.

- Add a policy gate: what is eligible to be stored, for how long, under which jurisdiction.
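
The implementation notes above can be sketched as a small store that applies an eligibility gate, redaction, and a TTL on every write. Everything here is an assumption for illustration: the `MemoryStore` class, the kind-based policy, and the email-only redaction are stand-ins for whatever policy engine and PII tooling you actually use.

```python
import re
import time
from dataclasses import dataclass
from typing import Optional

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def redact_pii(text: str) -> str:
    """Very rough redaction: masks email addresses only (illustrative)."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)

@dataclass
class MemoryRecord:
    value: str
    expires_at: float  # seconds, on the same clock as the `now` arguments

class MemoryStore:
    """In-memory KV store with an eligibility gate, redaction, and TTLs."""

    def __init__(self, storable_kinds: set[str], ttl_seconds: float):
        self.storable_kinds = storable_kinds
        self.ttl_seconds = ttl_seconds
        self._records: dict[str, MemoryRecord] = {}

    def put(self, key: str, kind: str, value: str, now: Optional[float] = None) -> bool:
        """Store the value if policy allows its kind; return whether it was stored."""
        if kind not in self.storable_kinds:  # policy gate
            return False
        now = time.time() if now is None else now
        self._records[key] = MemoryRecord(redact_pii(value), now + self.ttl_seconds)
        return True

    def get(self, key: str, now: Optional[float] = None) -> Optional[str]:
        """Return the stored value, or None if absent or expired."""
        now = time.time() if now is None else now
        record = self._records.get(key)
        if record is None:
            return None
        if now >= record.expires_at:  # expired: drop on read
            del self._records[key]
            return None
        return record.value
```

Passing `now` explicitly keeps expiry testable; in production the same interface would sit in front of a vector DB, KV store, or object store.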

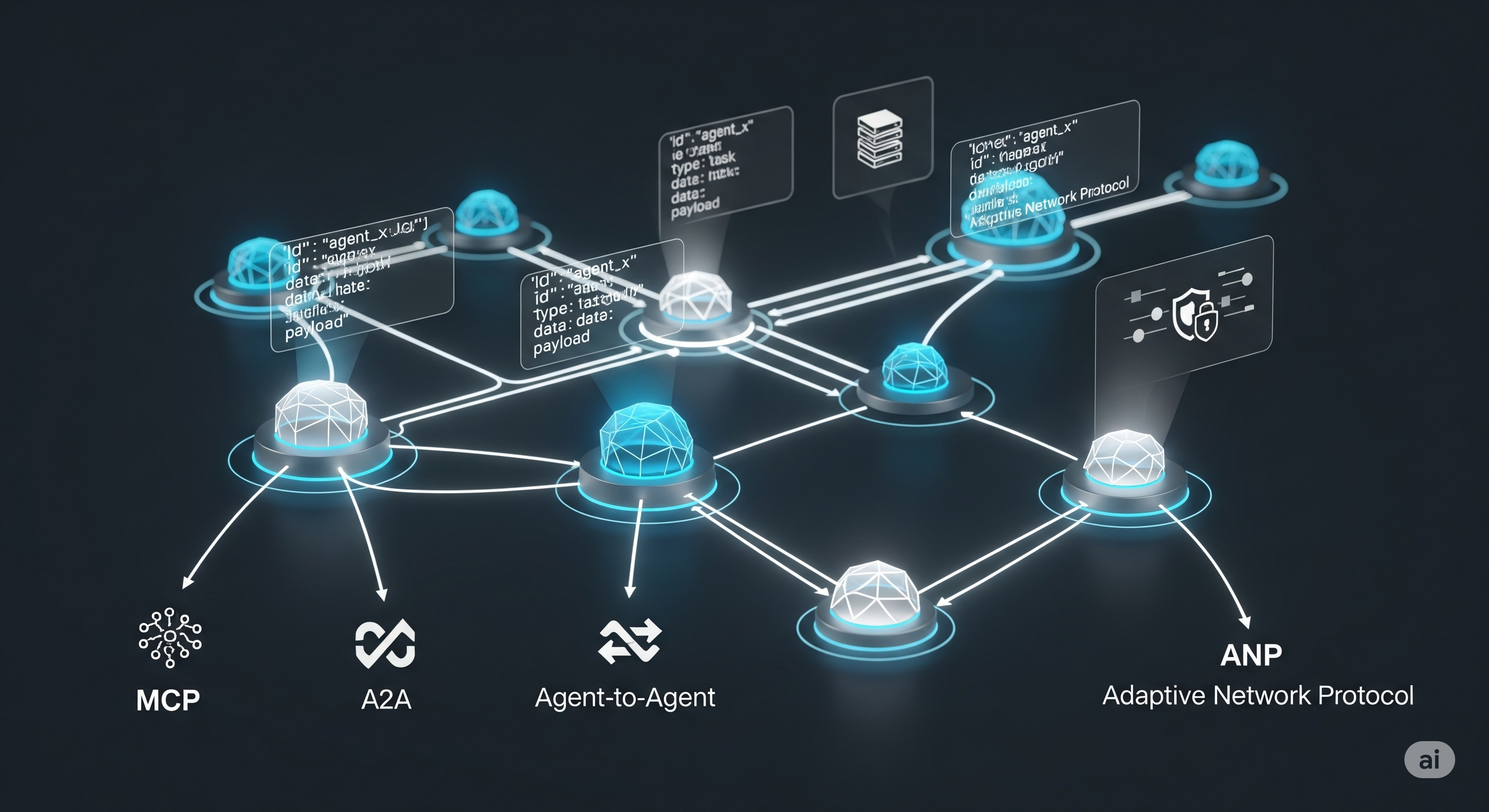

Protocols: who talks to whom—and how

The most consequential development isn’t a new agent framework; it’s protocols that let heterogeneous agents interoperate without bespoke wiring.

At-a-glance

- MCP (Model Context Protocol) – built on JSON-RPC and well suited to structured tool use; more client–server than peer-to-peer.

- A2A (Agent-to-Agent) – “Agent Cards,” task objects, and artifacts for capability discovery and coordination. Good for enterprise teams.

- ANP (Agent Network Protocol) – decentralized IDs + JSON-LD semantics; biased toward open markets and verifiable identity.

- ACP (Agent Communication Protocol) – RESTful, intent/goal messages; transport-agnostic and Web3-compatible.

- Agora – a meta-layer that hosts Protocol Documents (PDs) so agents can choose or synthesize the right protocol on the fly.
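
As a rough illustration of the client–server shape of MCP, the sketch below builds a JSON-RPC 2.0 request for a tool invocation and unpacks a response. The `tools/call` method and the `name`/`arguments` parameters follow the MCP specification as we understand it; the helper function names and the `search_tickets` tool in the usage note are hypothetical, and transport, initialization, and capability negotiation are left out.

```python
import json
from itertools import count
from typing import Any

_ids = count(1)  # each request in a session gets a fresh id

def make_tool_call(tool_name: str, arguments: dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)

def read_response(raw: str) -> Any:
    """Return the `result` of a JSON-RPC 2.0 response, or raise on `error`."""
    message = json.loads(raw)
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "error" in message:
        err = message["error"]
        raise RuntimeError(f"RPC error {err.get('code')}: {err.get('message')}")
    return message["result"]
```

For example, `make_tool_call("search_tickets", {"query": "timeout"})` yields a message any JSON-RPC peer can parse, which is the property that makes tool use structured rather than prompt-shaped.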

Why this matters

- Runtime discovery beats static YAML. Agents must find other agents and negotiate forms of work.

- Typed artifacts reduce hallucinated interfaces.

- PDs/JSON-LD unlock semantic composition beyond fragile string prompts.
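
Runtime discovery can be sketched as a registry lookup by capability and contract version. The `AgentDescriptor` fields below are loosely in the spirit of an agent card but are hypothetical; they do not reproduce the A2A schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentDescriptor:
    name: str
    version: tuple[int, int]      # (major, minor) of the published contract
    capabilities: frozenset[str]  # e.g. {"summarize", "translate"}
    endpoint: str                 # where callers can reach the agent

class Registry:
    def __init__(self) -> None:
        self._agents: list[AgentDescriptor] = []

    def register(self, agent: AgentDescriptor) -> None:
        self._agents.append(agent)

    def discover(self, capability: str, major: int) -> list[AgentDescriptor]:
        """Agents offering `capability` under contract major version `major`,
        newest minor version first."""
        matches = [
            a for a in self._agents
            if capability in a.capabilities and a.version[0] == major
        ]
        return sorted(matches, key=lambda a: a.version, reverse=True)
```

Callers ask for a capability instead of naming an agent, so a new or upgraded agent becomes usable as soon as it registers, with no edits to static configuration.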

Service-computing readiness: are we SOA yet?

Most stacks come close to service-computing readiness but remain incomplete. Discovery and publishing are the main gap; orchestration and composition are stronger.

What to borrow from W3C-era SOA (without the bloat):

- WSDL-like descriptors → function/skill contracts and versioning.

- BPEL-like orchestration → explicit, replayable flows with error semantics.

- WS-Policy / WS-Security → declarative runtime constraints + signed messages.

- WS-Coordination / WS-Agreement → roles, sessions, and SLO/SLA for agent selection.

Minimal viable blueprint (MVB) for 2025

- LangGraph for stateful orchestration (spine).

- A2A (or MCP + registry) for discovery and artifact typing.

- Central skill/agent registry (OpenAPI + JSON-LD) with human-curated metadata.

- Memory bus (vector DB + KV + object store) with policy gates.

- Guardrail plane (validators, schema checks, content policy, code sandbox) enforced at node and call boundaries.

Guardrails: treat them like payments, not pop-ups

If your agents can call tools, read data, or execute code, guardrails are not optional.

Control points

- Pre-call: schema validation, red-team prompts (jailbreak checks), capability allowlist.

- In-call: timeouts, rate limits, streaming inspectors.

- Post-call: output validators, typed artifacts, policy auditing.

- Code execution:

  - Prefer pure functions and pre-approved toolboxes.

  - For dynamic code, use ephemeral sandboxes (container or micro-VM) with zero network, read-only FS, and syscall filters.
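
The pre-call, in-call, and post-call control points can be sketched as a single wrapper around every tool invocation. `guarded_call`, `GuardrailViolation`, and the parameter names are hypothetical. The thread-based timeout only bounds how long the caller waits; it does not stop the tool, so it is no substitute for the sandboxing described above.

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout
from typing import Any, Callable

class GuardrailViolation(Exception):
    """Raised when a call is blocked at any control point."""

def guarded_call(
    tool_name: str,
    tool: Callable[..., Any],
    args: dict[str, Any],
    *,
    allowlist: set[str],
    arg_types: dict[str, type],
    validate_output: Callable[[Any], bool],
    timeout_s: float,
) -> Any:
    # Pre-call: capability allowlist and a minimal schema check on arguments.
    if tool_name not in allowlist:
        raise GuardrailViolation(f"tool not allowed: {tool_name}")
    if set(args) != set(arg_types):
        raise GuardrailViolation("unexpected or missing arguments")
    for key, expected in arg_types.items():
        if not isinstance(args[key], expected):
            raise GuardrailViolation(f"argument {key!r} must be {expected.__name__}")

    # In-call: bound how long the caller waits. The worker thread is not
    # killed on timeout; real isolation needs a separate process or sandbox.
    executor = ThreadPoolExecutor(max_workers=1)
    try:
        result = executor.submit(tool, **args).result(timeout=timeout_s)
    except FutureTimeout:
        raise GuardrailViolation(f"{tool_name} exceeded {timeout_s}s") from None
    finally:
        executor.shutdown(wait=False)

    # Post-call: reject outputs that fail validation.
    if not validate_output(result):
        raise GuardrailViolation(f"{tool_name} returned invalid output")
    return result
```

Routing every call through one function is what makes guardrails part of the substrate: a new tool cannot skip validation, because there is no other path to invoke it.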

Operational practices

- Build a test orchard: canned user stories + adversarial prompts + failure seeds.

- Ship black box + white box monitors (LLM evals + deterministic metrics).

- Track artifact lineage so incidents are reproducible.

A simple decision tree for stack selection

- Do you need replayable flows and L3 incident debugging? → LangGraph.

- Do you need quick cross-functional “agent teams”? → CrewAI (add validators).

- Do agents need to “talk” and exchange typed results? → A2A (or MCP + strong schemas).

- Enterprise policy + skills with existing services? → Semantic Kernel.

- Market-like openness or identity verification? → ANP.

- Dynamic protocol choice across contexts? → Agora.

Migration path: from demo to production in 30–60 days

- Flatten prompts into typed artifacts (task, plan, decision, evidence, result).

- Introduce a graph runner for your largest workflow; add retries and compensating actions.

- Externalize memory behind a service with eligibility policies and TTLs; migrate chat logs to artifacts.

- Stand up a registry (OpenAPI + JSON-LD) and register every tool/agent; require versioned contracts.

- Add a guardrail plane: validators on both input and output; sandbox any code.

- Pilot a protocol (MCP or A2A) for one cross-team handoff; measure handoff failure rates and fix schemas.
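
The "graph runner with retries and compensating actions" step can be sketched with the simplest possible graph, a linear chain. This is not LangGraph's API; `Step`, `run_workflow`, and their fields are hypothetical names used to show the control flow only.

```python
from dataclasses import dataclass
from typing import Callable, Optional

State = dict  # shared state passed from step to step

@dataclass
class Step:
    name: str
    run: Callable[[State], State]
    compensate: Optional[Callable[[State], None]] = None  # undo action, if any
    max_attempts: int = 3

def run_workflow(steps: list[Step], state: State) -> State:
    """Run steps in order, retrying each one. If a step exhausts its attempts,
    undo the completed steps in reverse order and re-raise the error."""
    completed: list[Step] = []
    for step in steps:
        for attempt in range(1, step.max_attempts + 1):
            try:
                # Work on a copy so a failed attempt leaves `state` untouched.
                state = step.run(dict(state))
                break
            except Exception:
                if attempt == step.max_attempts:
                    for done in reversed(completed):
                        if done.compensate is not None:
                            done.compensate(state)
                    raise
        completed.append(step)
    return state
```

A production runner would add backoff between attempts, persist state after each step for replay, and support branching, but the retry-then-compensate shape stays the same.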

What we’re watching next

- Protocol consolidation around PD/JSON-LD semantics.

- Memory governance: privacy, provenance, and retention become product features.

- Benchmarks that include ops: success isn’t pass@k—it’s MTTR, replayability, and cost per successful task.
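
To make the ops metrics concrete, here is a small sketch of how cost per successful task and MTTR might be computed from run records. The `TaskRun` record and its fields are assumptions; how you define an incident and its recovery time will depend on your own telemetry.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TaskRun:
    succeeded: bool
    cost_usd: float
    recovery_minutes: Optional[float] = None  # set only for runs that hit an incident

def cost_per_successful_task(runs: list[TaskRun]) -> float:
    """Total spend, including failed runs, divided by the number of successes."""
    successes = sum(r.succeeded for r in runs)
    if successes == 0:
        raise ValueError("no successful runs")
    return sum(r.cost_usd for r in runs) / successes

def mttr_minutes(runs: list[TaskRun]) -> float:
    """Mean time to recovery across runs that recorded an incident."""
    times = [r.recovery_minutes for r in runs if r.recovery_minutes is not None]
    if not times:
        raise ValueError("no incidents recorded")
    return sum(times) / len(times)
```

Charging failed runs to the numerator is the point of the metric: a system that succeeds cheaply but fails often still looks expensive.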

Bottom line

Agent frameworks are maturing, but protocols + memory + guardrails determine whether your system scales. Treat agents as services with typed contracts and observable workflows, and you’ll avoid most “it worked yesterday” failures.

Cognaptus: Automate the Present, Incubate the Future.