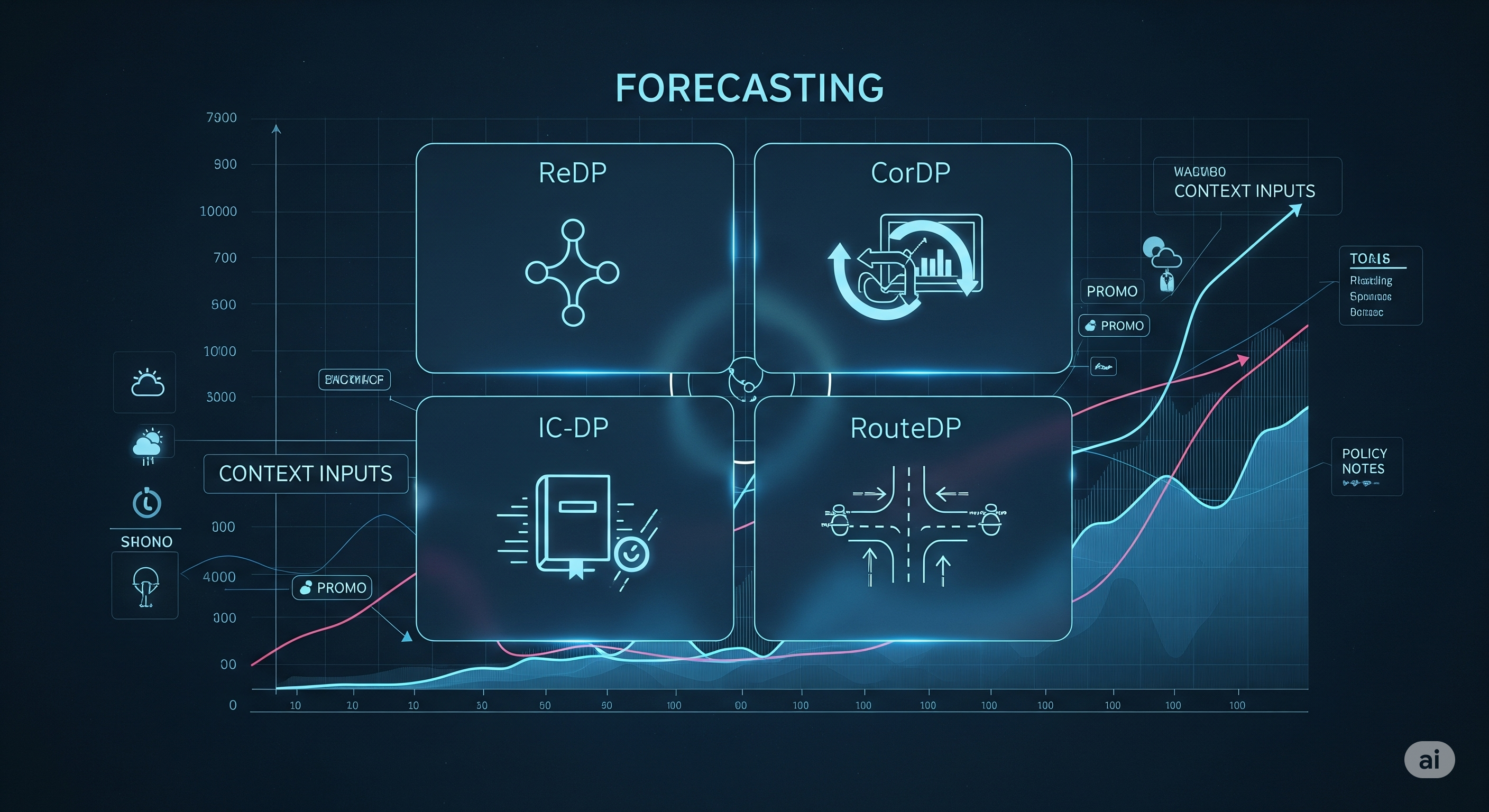

Large language models can forecast surprisingly well when you hand them the right context. But naïve prompts leave money on the table. Today’s paper introduces four plug‑and‑play strategies—ReDP, CorDP, IC‑DP, RouteDP—that lift accuracy, interpretability, and cost‑efficiency without training new models. Here’s what that means for teams running demand, risk, or ops forecasts.

Why this matters for business readers

Most production forecasts are numeric workhorses (ARIMA, ETS, or time‑series foundation models), while contextual facts such as weather advisories, policy changes, promos, and strikes arrive as text. LLMs can read that text and adjust the forecast, but simply stuffing history and context into a prompt (“direct prompting”) is often fragile. The four strategies below are operational patterns you can drop into existing stacks without re‑architecting.

The four strategies at a glance

| Strategy | What it does | When to use | Typical upside | Operational caveats |
|---|---|---|---|---|
| ReDP (Reasoning over Context) | Elicits an explicit reasoning trace before producing the forecast; you can score the reasoning separately | Auditing/model QA; post‑mortems; vendor evaluation | Reveals whether errors come from bad context understanding vs poor numeric application | Some models skip reasoning unless format is enforced; adds latency with little accuracy gain by itself |
| CorDP (Forecast Correction) | Start with a baseline probabilistic forecast (e.g., Lag‑Llama/ARIMA), then ask the LLM to minimally adjust it using context | You already trust a baseline and want context‑aware tweaks | Up to ~50% improvement on context‑sensitive windows vs baseline; often beats naïve direct prompting | Small LLMs can over‑edit; pick a strong baseline; choose Median‑CorDP (rewrite median repeatedly) for shape‑wide shifts and SampleWise‑CorDP for partial‑window effects |
| IC‑DP (In‑Context Direct Prompt) | Include one prior solved example (history+context+ground truth) before the new task | Domains with recurring motifs (heat waves, holidays, policy cycles) | Improves 10/11 models; small models gain ~15–56%, large models still gain materially | Longer prompts → higher token cost; curate examples for similarity of effect, not wording |
| RouteDP (Model Routing) | A small LLM ranks task difficulty; only the toughest cases go to the big model | Budget‑constrained ops; mixed task difficulty | With ~20% of tasks routed up, you can capture a large share of the total possible gain | Diminishing returns after ~40%; each model tends to be its own best router |

What’s genuinely new here

- Separating “understands context” from “can apply it.” ReDP’s trace‑and‑score protocol shows small models often reason correctly yet fail to apply that reasoning numerically; big models do both reliably. That makes trace scoring a practical gate for model upgrades and vendor comparisons.

- Treating the LLM as a corrector, not a forecaster. CorDP preserves the distributional strengths of your quant model and lets the LLM perform targeted edits where the text matters. Median‑vs‑SampleWise variants map neatly to business reality: use Median for policy/regime shifts that reshape the whole horizon; SampleWise for one‑off events (e.g., a weekend closure) confined to part of the window.

- In‑context examples beat scale alone. IC‑DP’s single exemplar often lifts small models into the performance band of much larger models. For buyers, that’s a procurement lever: smaller models at similar accuracy, provided you maintain a small example library and budget for the longer prompts.

- Routing gives you a spend dial. RouteDP turns difficulty scoring into compute allocation. In practice, routing just 20% of cases to the largest model achieved a step‑change reduction in error, with clear diminishing returns beyond ~40%.

A concrete deployment recipe (4 weeks)

Week 1 — Wire up metrics

- Wrap your current forecaster with RCRPS (or your preferred proper score) and mark the region‑of‑interest (ROI) windows where context should bite (promo period, storm window).

- Add a trace gate: if a candidate model can’t produce a well‑formed reasoning block, fail fast.
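
As a rough illustration of the scoring step, here is a minimal sketch of a sample‑based CRPS with a simple region‑of‑interest weighting. It is not the exact RCRPS definition; the function names, the `roi` index set, and the equal 0.5 weighting are assumptions made for this example.

```python
from statistics import mean
from typing import Sequence, Set


def crps_samples(samples: Sequence[float], y: float) -> float:
    """Sample-based CRPS estimate: E|X - y| - 0.5 * E|X - X'|."""
    n = len(samples)
    term_obs = sum(abs(x - y) for x in samples) / n
    term_spread = sum(abs(a - b) for a in samples for b in samples) / (n * n)
    return term_obs - 0.5 * term_spread


def roi_weighted_crps(
    forecast: Sequence[Sequence[float]],  # forecast[t] holds the samples for step t
    actuals: Sequence[float],
    roi: Set[int],                        # step indices where context should bite
    roi_weight: float = 0.5,              # assumption: equal weight inside and outside
) -> float:
    """Average per-step CRPS separately inside and outside the ROI, then blend."""
    per_step = [crps_samples(s, y) for s, y in zip(forecast, actuals)]
    inside = [c for t, c in enumerate(per_step) if t in roi]
    outside = [c for t, c in enumerate(per_step) if t not in roi]
    if not inside or not outside:
        # No split possible: fall back to the plain mean over all steps.
        return mean(per_step)
    return roi_weight * mean(inside) + (1 - roi_weight) * mean(outside)
```

Scoring the ROI separately keeps a short promo or storm window from being averaged away by a long, uneventful horizon.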

Week 2 — Pilot CorDP

- Choose the best available baseline (e.g., Lag‑Llama or your tuned ETS/ARIMA).

- Run both Median‑CorDP and SampleWise‑CorDP across 10–20 tasks; label tasks as full‑ROI (context shapes entire horizon) vs partial‑ROI (localized effect). Pick the variant by task type.
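
The two CorDP variants can be sketched as follows, assuming the baseline forecast is available as a list of sample paths. The `correct` callable is a hypothetical wrapper around your LLM call, and `stub_closure_corrector` is a deterministic stand‑in so the example runs without a model.

```python
from statistics import median
from typing import Callable, List

Path = List[float]
# Hypothetical signature: (forecast path, context text) -> corrected path.
Corrector = Callable[[Path, str], Path]


def median_cordp(samples: List[Path], context: str,
                 correct: Corrector, n_draws: int) -> List[Path]:
    """Correct the per-step median of the baseline n_draws times."""
    horizon = len(samples[0])
    med = [median(p[t] for p in samples) for t in range(horizon)]
    return [correct(list(med), context) for _ in range(n_draws)]


def samplewise_cordp(samples: List[Path], context: str,
                     correct: Corrector) -> List[Path]:
    """Correct each baseline sample path individually."""
    return [correct(list(p), context) for p in samples]


def stub_closure_corrector(path: Path, context: str) -> Path:
    """Stand-in for an LLM: zero out steps 1 and 2 if the context says 'closed'."""
    if "closed" in context:
        return [0.0 if t in (1, 2) else v for t, v in enumerate(path)]
    return list(path)
```

With a real LLM the repeated median corrections would differ from draw to draw, which is where the Median variant gets its spread; the SampleWise variant inherits its spread from the baseline samples.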

Week 3 — Add IC‑DP

- For each recurring context motif (holiday, heat wave, price cap), curate one gold example with history/context/ground truth.

- Re‑run small and mid models with IC‑DP. Track token cost vs accuracy; expect the biggest payoff on full‑ROI tasks.
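
One way to assemble an IC‑DP prompt is sketched below. The prompt wording, section headers, and the `SolvedExample` type are illustrative assumptions, not the paper's template; the only point is that one solved example, with its ground truth, precedes the new task.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class SolvedExample:
    history: List[float]
    context: str
    ground_truth: List[float]


def _fmt(values: Sequence[float]) -> str:
    """Render numbers compactly, e.g. 60 or 12.5."""
    return ", ".join(f"{v:g}" for v in values)


def build_icdp_prompt(example: SolvedExample, history: Sequence[float],
                      context: str, horizon: int) -> str:
    """Place one solved example before the new task (hypothetical wording)."""
    return "\n".join([
        "You are forecasting a time series using its history and textual context.",
        "",
        "### Worked example",
        f"History: {_fmt(example.history)}",
        f"Context: {example.context}",
        f"Ground truth: {_fmt(example.ground_truth)}",
        "",
        "### New task",
        f"History: {_fmt(history)}",
        f"Context: {context}",
        f"Forecast the next {horizon} values as a comma-separated list.",
    ])
```

Keeping the example in a typed record makes it easy to store a small library keyed by motif and to measure the extra prompt length each example adds.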

Week 4 — Turn on RouteDP

- Prompt a small model to score difficulty ∈ [0,1] from history+context. Route top‑k hardest to your big model under a fixed token budget.

- Sweep k ∈ {10%, 20%, 30%, 40%}. Adopt the smallest k that meets your SLA.
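
The routing step and the sweep over k can be sketched as below. The difficulty scores are assumed to come from the small model, and `evaluate` is a hypothetical callback that returns your error metric for a given routed fraction.

```python
from typing import Callable, Dict, Iterable, Optional, Set


def route_top_k(difficulty: Dict[str, float], k_fraction: float) -> Set[str]:
    """Return the task ids with the highest difficulty scores.

    The number routed is round(k_fraction * number of tasks), clamped to
    the valid range.
    """
    n = len(difficulty)
    n_up = min(n, max(0, round(k_fraction * n)))
    ranked = sorted(difficulty, key=lambda task: difficulty[task], reverse=True)
    return set(ranked[:n_up])


def smallest_k_meeting_sla(fractions: Iterable[float],
                           evaluate: Callable[[float], float],
                           sla_error: float) -> Optional[float]:
    """Try fractions in ascending order; return the first whose error meets the SLA."""
    for k in sorted(fractions):
        if evaluate(k) <= sla_error:
            return k
    return None  # no candidate fraction meets the SLA
```

Everything not returned by `route_top_k` stays on the small model, so the routed fraction directly bounds the share of big‑model calls.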

Implementation details that matter in practice

- Guardrails & formats. Enforce JSON schemas for both reasoning blocks and forecast arrays to avoid silent format drift.

- Constraint awareness. State hard constraints in the prompt (e.g., non‑negative demand, capped utilization); Median‑CorDP especially excels here.

- Example curation. For IC‑DP, similarity means same causal effect on the forecast, not surface text overlap. Keep 3–5 canonical patterns per domain.

- Cost discipline. Combine IC‑DP (to shrink model size) with RouteDP (to shrink the volume of “big‑model” calls).
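
The guardrail and constraint points above can be sketched with the standard library alone. The reply shape (a JSON object with `reasoning` and `forecast` keys) is an assumption for this example; a production setup might use a full JSON Schema validator instead.

```python
import json
from typing import List, Optional


def parse_reply(raw: str, horizon: int) -> List[float]:
    """Fail fast unless the reply has a reasoning block and a full forecast array."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    reasoning = data.get("reasoning")
    if not isinstance(reasoning, str) or not reasoning.strip():
        raise ValueError("missing or empty reasoning block")
    forecast = data.get("forecast")
    if not isinstance(forecast, list) or len(forecast) != horizon:
        raise ValueError("forecast must be a list with one value per step")
    for v in forecast:
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(v, bool) or not isinstance(v, (int, float)):
            raise ValueError("forecast values must be numbers")
    return [float(v) for v in forecast]


def enforce_bounds(forecast: List[float], lower: float = 0.0,
                   upper: Optional[float] = None) -> List[float]:
    """Clip values to hard constraints such as non-negative demand or a capacity cap."""
    clipped = [max(lower, v) for v in forecast]
    if upper is not None:
        clipped = [min(upper, v) for v in clipped]
    return clipped
```

Clipping is a last line of defense; logging how often it fires shows whether the prompt's stated constraints are actually being respected.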

What we’d challenge or extend

- Trace‑to‑forecast alignment. ReDP exposes a gap: correct textual reasoning often doesn’t transfer to numeric edits in small models. A format‑enforced edit plan (e.g., “+15% on t=3..5, −5% after”) could close that loop.

- Distributional diagnostics. CorDP’s SampleWise version preserves shapes but can add variance; Median can over‑smooth. Track interval calibration and sharpness separately.

- Active example selection. For IC‑DP, retrieve examples by effect label (e.g., “holiday uplift, weekday‑like drop‑in‑weekend”) rather than by keywords.
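
To make the format‑enforced edit plan idea concrete, here is a minimal sketch that applies a structured plan such as “+15% on t=3..5, −5% after” to a forecast. The plan schema (`start`, `end`, `pct`) and the 0‑based step indexing are assumptions for illustration.

```python
from typing import Dict, List, Optional, Union

EditStep = Dict[str, Optional[Union[int, float]]]


def apply_edit_plan(forecast: List[float], plan: List[EditStep]) -> List[float]:
    """Apply percentage edits over inclusive step ranges.

    Each step has 'start', 'end' (None means through the end of the horizon),
    and 'pct' (e.g. 15 for +15%, -5 for -5%). Steps are 0-based.
    """
    out = list(forecast)
    last = len(out) - 1
    for step in plan:
        start = int(step["start"])
        end = last if step["end"] is None else min(int(step["end"]), last)
        factor = 1 + float(step["pct"]) / 100
        for t in range(start, end + 1):
            out[t] *= factor
    return out


# "+15% on t=3..5, -5% after", expressed in the hypothetical schema.
example_plan: List[EditStep] = [
    {"start": 3, "end": 5, "pct": 15},
    {"start": 6, "end": None, "pct": -5},
]
```

Because the plan is data rather than free text, it can be checked against the reasoning trace before any numbers change.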

Executive checklist

- Keep your quant forecaster; add CorDP for context.

- Maintain a tiny library of effect‑matched examples; plug into IC‑DP.

- Enforce structured reasoning traces; score them.

- Route only the hardest 20–40% of tasks to a large model.

- Track return on investment on region‑of‑interest (ROI) windows; don’t chase overall error alone.

Cognaptus: Automate the Present, Incubate the Future