Executive takeaway: Efficient LLM architectures aren’t just academic: they reset the economics of AI products by cutting context costs, shrinking GPUs per QPS, and opening new form factors—from phone-side agents to ultra-cheap serverless endpoints. The winning strategy is hybrid by default, KV-light, and latency-budgeted.

Why this matters now

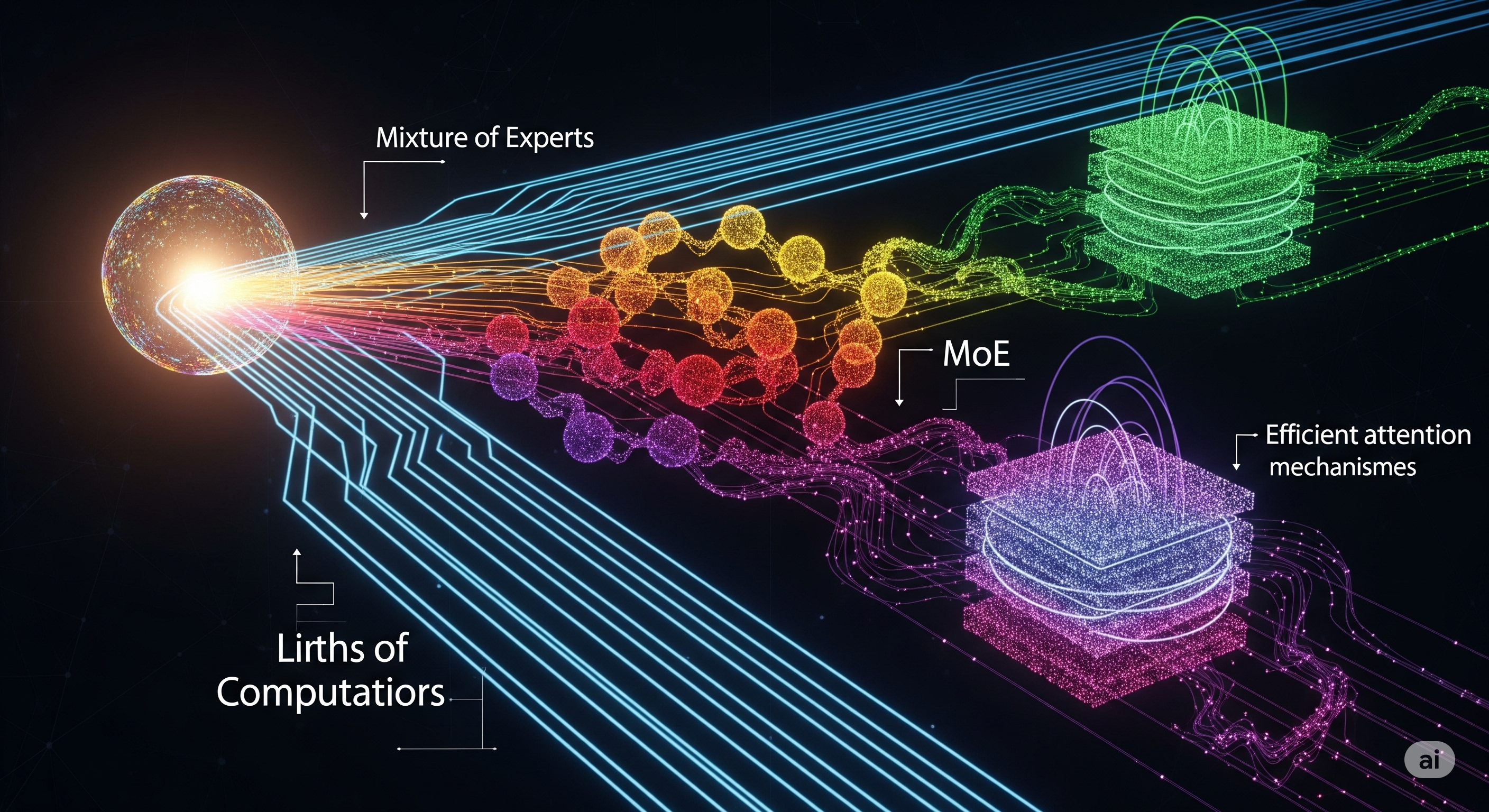

If you ship with AI, your margins live and die by three levers: sequence length, active parameters per token, and memory traffic. Classical Transformers lose on all three. The latest wave of “speed-first” designs offers a menu of swaps that trade negligible accuracy for step-change gains in throughput, tail latency, and $ per million tokens. This survey gives us a clean taxonomy and—more importantly—the design intent behind each family: compress the compute (linear & sparse sequence modeling), route the compute (MoE), restructure the compute (efficient full attention), and rethink the decoder (diffusion LLMs).

The four problems you actually pay for

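- O(N²) attention plus a growing KV cache: Attention compute scales quadratically with sequence length, and the KV cache grows linearly on top of it, so long contexts hit both compute and memory.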

- Bandwidth-bound kernels: Attention is often IO-limited, not FLOP-limited.

- Uniform compute per token: Every token pays full price regardless of difficulty.

- Training-invested debt: You already have a great Transformer—do you really have to retrain from scratch?

The “speed-first” toolbox addresses each pain specifically.
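To make the KV-cache pain concrete, here is a back-of-envelope sketch of cache size under the standard accounting (one key and one value vector per token, per layer, per KV head). The model shape (32 layers, 32 heads, head dimension 128, 2-byte elements) is a hypothetical chosen for illustration, not a figure from any specific model.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_elem=2, batch=1):
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor 2 = one key tensor plus one value tensor per layer.
    return 2 * batch * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


# Hypothetical model shape, for illustration only.
LAYERS, HEADS, HEAD_DIM = 32, 32, 128

for ctx in (4_096, 32_768, 131_072):
    full = kv_cache_bytes(ctx, LAYERS, HEADS, HEAD_DIM)  # one KV head per query head
    grouped = kv_cache_bytes(ctx, LAYERS, 8, HEAD_DIM)   # 8 shared KV heads
    print(f"{ctx:>7} tokens: {full / 2**30:.1f} GiB full, {grouped / 2**30:.1f} GiB with 8 KV heads")
```

Under these assumptions the cache is linear in context length and in the number of KV heads, which is why shrinking KV heads is such a direct lever.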

A practical taxonomy (with operator-level intuition)

| Bucket | Core Idea | What you buy | What you risk | Where it shines |
|---|---|---|---|---|
| Linear sequence modeling (Linear attention, Linear RNNs, SSMs, TTT-RNN) | Replace softmax with kernel/recurrence/state updates. KV goes from growing to constant/logarithmic. | O(N) time, tiny KV; great streaming; easier on-device. | Long-range exact recall can degrade; needs careful gating/normalization; some tasks prefer softmax inductive bias. | Streaming assistants, speech/IoT, always-on copilots, agent traces. |
| Sparse sequence modeling (static/dynamic/training-free) | Attend to fewer keys (patterns, learned routes, or heuristics). | Slashes attention FLOPs and memory; works with existing stacks. | Recall holes if sparsity misses the “right” tokens; tuning can be fiddly. | Long-context RAG, log-search, code navigation. |
| Efficient full attention (IO-aware kernels, GQA/MQA/MLA, MoA, quantized attention) | Keep softmax but fix memory traffic and KV shape. | FlashAttention-level speedups; KV shrink with GQA/MLA; better tail latency; minimal accuracy hit. | Smaller per-head capacity; requires stable kernels and quantization-aware training. | Low-risk accelerations for prod LLMs; enterprise compatibility. |
| Sparse MoE | Activate only a few experts per token. | Massive capacity without proportional compute; great scaling of quality/$; specialization. | Routing instability, load-balancing, infra complexity; memory becomes the new bottleneck. | High-QPS backends, multilingual/product-specialized assistants. |
| Hybrid architectures | Mix linear + softmax intra-/inter-layer. | Best of both worlds; keep recall where it matters, go linear elsewhere. | More design space to tune; training stability tricks required. | Latency-constrained assistants needing quality headroom. |
| Diffusion LLMs | Non-autoregressive decoding via denoising steps. | Potentially few-step generation; parallelizable tokens. | Maturity, alignment with instruction-following, eval gaps. | Structured generation, short-form completion when latency is king. |
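The "constant KV" claim in the linear row is easiest to see in code. The sketch below is a deliberately simplified, pure-Python version of causal linear attention: it assumes no feature map, no normalization, and no gating (real designs add these), and the function names are illustrative. It shows that a fixed-size state updated once per token reproduces the result of scoring every earlier token.

```python
def linear_attention_recurrent(qs, ks, vs):
    """Causal, unnormalized linear attention using a fixed-size state."""
    d_k, d_v = len(ks[0]), len(vs[0])
    # The state is d_k x d_v and does not grow with sequence length.
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        # State update: state += outer(k, v)
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] += k[i] * v[j]
        # Read-out: out = q^T state
        outputs.append([sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)])
    return outputs


def linear_attention_quadratic(qs, ks, vs):
    """Same quantity computed the O(N^2) way, scoring every earlier token."""
    outputs = []
    for t, q in enumerate(qs):
        out = [0.0] * len(vs[0])
        for s in range(t + 1):
            score = sum(a * b for a, b in zip(q, ks[s]))
            for j, v_j in enumerate(vs[s]):
                out[j] += score * v_j
        outputs.append(out)
    return outputs
```

The recurrent form keeps only `state` between steps, which is what makes streaming and on-device decoding cheap. The trade-off named in the table follows from the same fact: every past token is compressed into that one matrix.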

Opinion: The winning blueprint for 2025–26

Hybrid cores + grouped queries + MoE edges. In practice:

- Interleave linear blocks (e.g., SSM/Mamba-style) with softmax blocks so you keep robust recall in a few layers while making the rest O(N).

- Adopt GQA/MLA to shrink KV (e.g., 8 query heads sharing 2 K/V groups). This is the most “boring but big” latency lever, and it rarely hurts quality on enterprise tasks.

- Optionally add sparse MoE in the FFN path for capacity bumps at fixed compute. Use conservative top-2 routing and strict capacity factors; keep the expert count modest (<64) to simplify serving.

- IO-first engineering: treat FlashAttention-class kernels, paged KV, and fused epilogues as table stakes. Most real-world wins are bandwidth, not math.
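As a rough illustration of "conservative top-2 routing and strict capacity factors", here is a minimal pure-Python sketch. The capacity formula (even share of the 2 slots per token, scaled by a factor) and the drop-on-overflow policy are assumptions for illustration; production routers differ in how they handle overflow and load balancing.

```python
import math


def top2_route(router_scores, capacity_factor=1.25):
    """Send each token to its two highest-scoring experts, subject to a per-expert cap.

    router_scores: one list of per-expert scores per token.
    Returns (assignments, dropped): assignments[e] lists token indices routed to
    expert e; dropped lists (token, expert) pairs that overflowed capacity.
    """
    n_tokens, n_experts = len(router_scores), len(router_scores[0])
    # Each token requests 2 slots; capacity is the even share scaled by the factor.
    capacity = math.ceil(capacity_factor * 2 * n_tokens / n_experts)
    assignments = [[] for _ in range(n_experts)]
    dropped = []
    for tok, scores in enumerate(router_scores):
        top2 = sorted(range(n_experts), key=lambda e: scores[e], reverse=True)[:2]
        for e in top2:
            if len(assignments[e]) < capacity:
                assignments[e].append(tok)
            else:
                dropped.append((tok, e))  # strict cap: overflow is not rerouted
    return assignments, dropped
```

Tracking the size of `dropped` per batch is one simple form of the routing telemetry this kind of serving setup needs.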

Build notes (for CTOs and platform PMs)

- Context expansion: Prefer KV-light methods (GQA/MLA) before jumping to fully linear. For 32–128K contexts, you’ll get 60–80% of the benefit with minimal risk.

- On-device/edge: Linear RNN/SSM families shine. Constant-memory decoding + quantized attention moves credible assistants to laptops/phones.

- Server QPS pricing: MoE pays off when traffic is bursty and expert reuse is high. If you’re consistently capacity-bound with uniform traffic, a dense model with GQA + Flash kernels may be simpler/cheaper.

- RAG stacks: Pair training-free sparse attention (e.g., heuristics that bias to retrieved chunks) with a small number of full-attn “anchor” layers for safe recall.

- Safety/guardrails: Linearization and hybrids preserve most alignment behaviors if you keep instruction-tuned heads in the softmax layers and freeze them early when converting.
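For the RAG note above, one possible training-free heuristic is to restrict each query to a few leading tokens, a local window, and the retrieved chunks. The sketch below is an assumption about what such a heuristic could look like, not a method specified here; the function name, the "sink" token count, and the window size are all hypothetical.

```python
def sparse_key_indices(query_pos, retrieved_spans, n_sink=4, window=128):
    """Key positions a query at `query_pos` may attend to (causal).

    retrieved_spans: list of (start, end) half-open token ranges for retrieved chunks.
    """
    # Always keep the first few tokens of the sequence.
    allowed = set(range(min(n_sink, query_pos + 1)))
    # Keep a local window ending at the query position.
    allowed.update(range(max(0, query_pos - window + 1), query_pos + 1))
    # Keep retrieved chunks, clipped so the query never sees future tokens.
    for start, end in retrieved_spans:
        allowed.update(range(start, min(end, query_pos + 1)))
    return sorted(allowed)
```

Layers using a mask like this see a bounded number of keys per query, while the full-attention anchor layers still cover anything the heuristic misses.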

Linearization: don’t throw away your pretraining

You can convert a strong Transformer into a linear/SSM/RNN-ish decoder via swap-then-finetune or distill-then-train. The play here is pragmatic: keep tokenizer, embeddings, and as many weights as possible; only swap attention blocks for gated linear/SSM layers and finetune just enough to regain task quality. Done right, you cut KV cost dramatically without a full retrain. This is the most underrated path for teams with sunk pretraining cost who need latency relief now.
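A swap-then-finetune conversion starts with a plan for which layers keep softmax attention and which get a new linear mixer. The sketch below only produces that plan; the every-fourth-layer anchor spacing and the labels `softmax_attention` / `gated_linear` are illustrative assumptions, and no real framework API is implied.

```python
def linearization_plan(n_layers, anchor_every=4):
    """Label each layer's sequence mixer: keep softmax on anchors, swap the rest."""
    plan = []
    for i in range(n_layers):
        is_anchor = (i + 1) % anchor_every == 0
        plan.append({
            "layer": i,
            "mixer": "softmax_attention" if is_anchor else "gated_linear",
            # Anchors reuse pretrained attention weights; swapped mixers are new
            # parameters that the finetuning pass has to train.
            "mixer_init": "pretrained" if is_anchor else "new",
        })
    return plan


def kv_layer_fraction(plan):
    """Fraction of layers that still carry a growing KV cache."""
    return sum(p["mixer"] == "softmax_attention" for p in plan) / len(plan)
```

With 32 layers and an anchor every fourth layer, only 8 layers still hold a KV cache, so cache size drops to a quarter before any grouping of KV heads is applied.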

A deployment-oriented decision tree

- Need 128K+ context, with exact recall of specific spans? Start with FlashAttention-3 + GQA and sprinkle static/dynamic sparsity; only then trial linear hybrids.

- Chasing edge/on-device? Favor SSM/Mamba-style layers or log-linear memory variants; quantize early.

- High variance prompts & domains? Consider MoE for specialization at stable cost; invest in routing telemetry and autoscaling.

- Ultra-low latency chat (p95 under 30–50 ms/token)? Combine IO-aware attention with grouped KV and speculative decoding; experiment with small-step diffusion for fixed-length outputs (titles, snippets).
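For the latency branch, a quick sanity check is a bandwidth-only estimate of decode time. The sketch assumes batch size 1 and that each decode step reads all active weights and the whole KV cache once from memory, ignoring compute, kernel overheads, and overlap. All input numbers in the usage line are hypothetical.

```python
def decode_ms_per_token(weight_bytes, kv_bytes, bandwidth_bytes_per_s):
    """Bandwidth-only lower bound on per-token decode time, in milliseconds."""
    return 1000.0 * (weight_bytes + kv_bytes) / bandwidth_bytes_per_s


def fits_budget(weight_bytes, kv_bytes, bandwidth_bytes_per_s, budget_ms):
    """True if the lower bound alone already fits the per-token budget."""
    return decode_ms_per_token(weight_bytes, kv_bytes, bandwidth_bytes_per_s) <= budget_ms


# Hypothetical: 14 GB of weights, 2 GB of KV cache, 1 TB/s of memory bandwidth.
print(decode_ms_per_token(14e9, 2e9, 1e12))
```

If the lower bound already exceeds the budget, no kernel tuning will rescue it; the levers left are fewer active bytes (quantization, MoE, smaller KV) or fewer sequential steps (speculative decoding).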

What this means for product strategy

- Feature design: Long-context features (meeting analysis, codebase Q&A) become viable without eye-watering bills. Make “upload the whole repo / vault” a standard affordance.

- Pricing: As $ per MTok drops, you can re-bundle: more tokens per plan, or move to latency/priority tiers (SLA-backed p95 instead of raw token quotas).

- Roadmap: Treat efficiency work as a product feature, not just infra. Announce “2× faster, 3× longer context, same quality” and watch adoption jump.

Open questions we’re tracking

- Recall guarantees for linear/sparse blocks under adversarial retrieval.

- Routing stability in MoE at small batch sizes and low-latency serving.

- Diffusion LLM alignment for instruction-following and tool-use.

- Unified theory of dynamic memory (delta rules vs. gated linear recurrences) that predicts when hybrids outperform.

Bottom line

Speed isn’t a vanity metric; it’s a business model enabler. The architecture choices you make now—linear, sparse, grouped, MoE, hybrid—decide whether you can profitably scale context, meet SLAs, and fit experiences on the devices your users already own. Ship a hybrid, KV-light core, keep a few full-attention anchors for recall, and route compute only where it pays.

Cognaptus: Automate the Present, Incubate the Future