From Static Scripts to Living Workflows

The AI agent world has a scaling problem: most automated workflow builders generate one static orchestration per domain. Great in benchmarks, brittle in the wild. AdaptFlow — a meta-learning framework from Microsoft and Peking University — proposes a fix: treat workflow design like model training, but swap numerical gradients for natural language feedback.

This small shift has a big implication: instead of re-engineering from scratch for each use case, you start from a meta-learned workflow skeleton and adapt it on the fly for each subtask.

The Core Trick: Textual Gradients

In neural networks:

- Parameters get tuned via gradients.

- Gradients come from a differentiable loss function.

In AdaptFlow:

- Workflow modules (agents, prompts, control logic) are the “parameters.”

- Gradients are textual: LLM feedback like “Add a self-reflection step” or “Use multiple agents with diverse reasoning styles.”

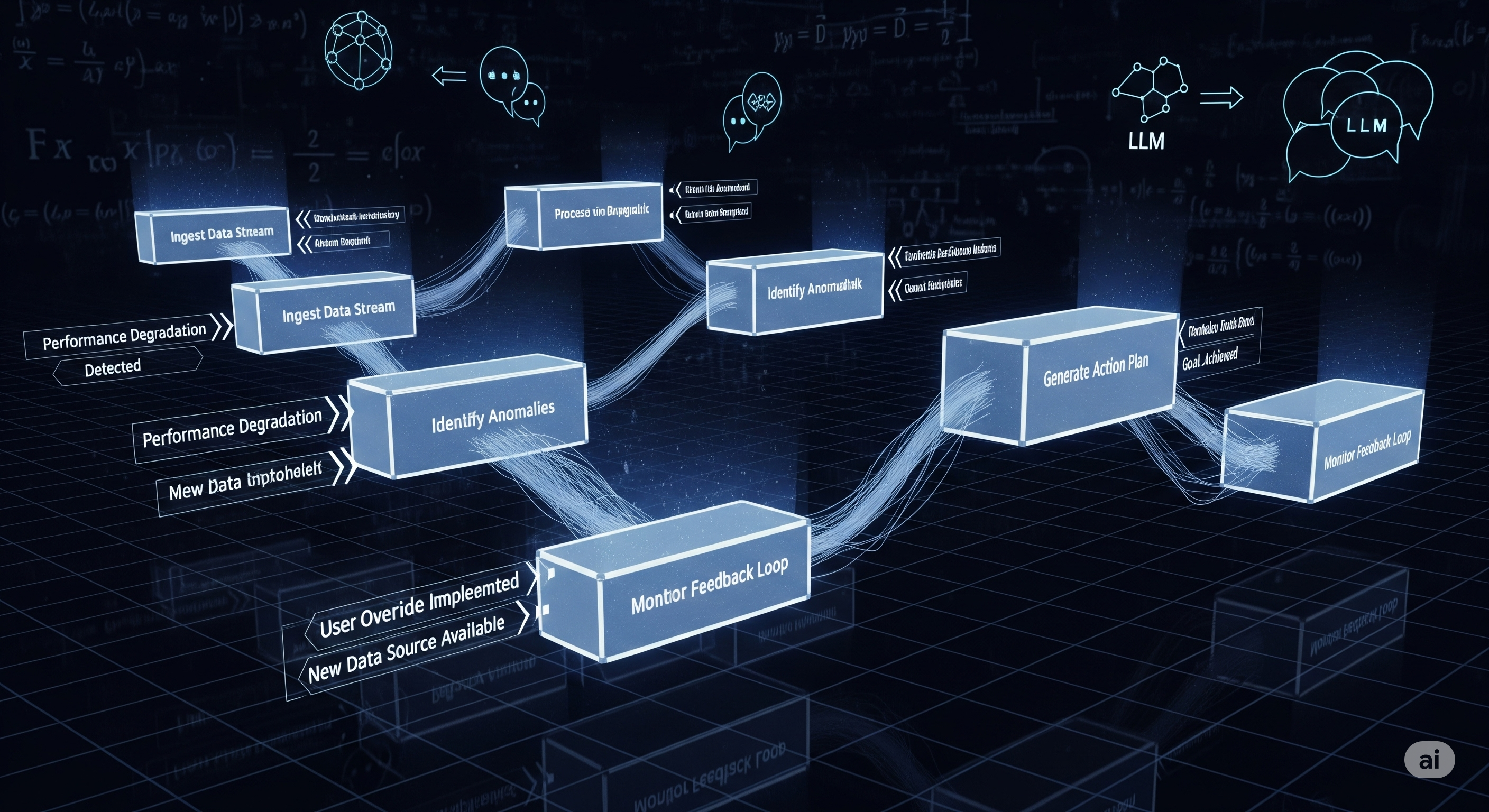
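
To make the analogy concrete, here is a minimal sketch of a workflow whose "parameters" are module names and whose "gradient" is a sentence of feedback. Everything in it is an assumption made for illustration: the `Workflow` class, the module names, and especially `apply_textual_gradient`, which uses two hard-coded keyword rules where a real system would prompt an LLM to rewrite the workflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    """A workflow as an ordered tuple of module names (the 'parameters')."""
    modules: tuple = ("solver",)


def apply_textual_gradient(workflow: Workflow, feedback: str) -> Workflow:
    """Toy stand-in for an LLM that edits a workflow based on feedback.

    Hypothetical: the two keyword rules below exist only to show the shape
    of an update step; they are not how AdaptFlow interprets feedback.
    """
    text = feedback.lower()
    modules = list(workflow.modules)
    if "self-reflection" in text and "self_reflection" not in modules:
        modules.append("self_reflection")   # reflect after solving
    if "multiple agents" in text and "debate" not in modules:
        modules.insert(0, "debate")         # diversify before solving
    return Workflow(tuple(modules))


base = Workflow()
step1 = apply_textual_gradient(base, "Add a self-reflection step")
step2 = apply_textual_gradient(
    step1, "Use multiple agents with diverse reasoning styles"
)
print(step2.modules)  # ('debate', 'solver', 'self_reflection')
```

Each call returns a new workflow rather than mutating the old one, which keeps earlier versions available for comparison or rollback.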

These updates happen in a bi-level optimization loop:

- Inner loop — Per-subtask refinement using LLM feedback.

- Outer loop — Aggregates the best refinements into a generalizable workflow initialization.

- Test-time adaptation — Lightweight tweaks for unseen subtasks, guided by semantic task descriptions.

The result: a workflow that’s both general enough to start anywhere and specific enough to perform well quickly.
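
The three stages can be sketched as a toy loop. This is a simplified illustration under stated assumptions, not the framework's implementation: the `USEFUL` table, the `score` function, and `textual_feedback` are hypothetical stand-ins for running a workflow on real examples and asking an LLM for critique, and the majority vote in `outer_loop` is a swapped-in placeholder for whatever aggregation the framework actually performs.

```python
from collections import Counter

# Hypothetical toy setup: each subtask "benefits" from a known set of modules.
USEFUL = {
    "number_theory": {"solver", "self_reflection", "value_tracker"},
    "prealgebra": {"solver", "self_reflection", "approximation_detector"},
    "algebra": {"solver", "self_reflection"},
}


def score(workflow: frozenset, subtask: str) -> int:
    """Toy score: +1 per useful module, -1 per module that does not help."""
    useful = USEFUL[subtask]
    return len(workflow & useful) - len(workflow - useful)


def textual_feedback(workflow: frozenset, subtask: str):
    """Stand-in for LLM feedback: suggest one missing module, or None."""
    missing = sorted(USEFUL[subtask] - workflow)
    return missing[0] if missing else None


def inner_loop(init: frozenset, subtask: str, max_steps: int = 5) -> frozenset:
    """Per-subtask refinement: accept a suggestion only if the score improves."""
    workflow = init
    for _ in range(max_steps):
        suggestion = textual_feedback(workflow, subtask)
        if suggestion is None:
            break
        candidate = workflow | {suggestion}
        if score(candidate, subtask) <= score(workflow, subtask):
            break
        workflow = candidate
    return workflow


def outer_loop(init: frozenset, subtasks: list) -> frozenset:
    """Meta-update: keep modules adopted by a majority of refined workflows."""
    refined = [inner_loop(init, s) for s in subtasks]
    counts = Counter(m for wf in refined for m in wf)
    return frozenset(m for m, c in counts.items() if c > len(subtasks) / 2)


train = ["number_theory", "algebra"]
meta_init = outer_loop(frozenset({"solver"}), train)
# Test-time adaptation: a couple of cheap steps on a subtask not seen in training.
adapted = inner_loop(meta_init, "prealgebra", max_steps=2)
print(sorted(meta_init))  # ['self_reflection', 'solver']
print(sorted(adapted))    # ['approximation_detector', 'self_reflection', 'solver']
```

The shared initialization keeps only what helped across subtasks, and the specialist module is added back cheaply at test time.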

Why It Works

The key design choices:

| Challenge | Old Approach | AdaptFlow’s Answer |
|---|---|---|
| Diverse problem types | One-size-fits-all template | Task clustering + per-subtask adaptation |
| Instability in search space | Fixed structure updates | Binary continuation signals for stability |
| Poor generalization | Domain-specific workflows | Reflection-enhanced meta-updates |

By treating workflows as living, modular programs instead of static chains, AdaptFlow sidesteps the overfitting and stagnation seen in ADAS, AFLOW, and similar frameworks.
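
As a rough illustration of the task clustering idea from the table, the sketch below groups task descriptions by word overlap. It is an assumption-heavy simplification: the Jaccard similarity, the greedy assignment, the `threshold` value, and the sample descriptions are all hypothetical, and a production system would more plausibly compare semantic embeddings than raw tokens.

```python
def tokens(text: str) -> set:
    """Lowercased whitespace tokens of a task description."""
    return set(text.lower().split())


def jaccard(a: set, b: set) -> float:
    """Overlap between two token sets, from 0.0 (disjoint) to 1.0 (equal)."""
    return len(a & b) / len(a | b) if a | b else 0.0


def cluster_tasks(descriptions: list, threshold: float = 0.5) -> list:
    """Greedy clustering: join the first cluster whose seed is similar enough."""
    clusters = []  # each cluster is a list of descriptions; item 0 is the seed
    for desc in descriptions:
        for cluster in clusters:
            if jaccard(tokens(desc), tokens(cluster[0])) >= threshold:
                cluster.append(desc)
                break
        else:
            clusters.append([desc])  # no match: start a new cluster
    return clusters


tasks = [
    "find the remainder of a number modulo a prime",
    "compute the remainder of a large number modulo seven",
    "estimate the area of a circle",
    "estimate the area of a rectangle",
]
clusters = cluster_tasks(tasks)
for group in clusters:
    print(group)
```

With these sample descriptions the two remainder problems land in one cluster and the two area problems in another, so each group could receive its own adapted workflow.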

Performance in Practice

Across QA, coding, and mathematics, AdaptFlow achieved the highest overall average score (68.5), ahead of both manually designed strategies (CoT, Reflexion, LLM Debate) and automated baselines.

- Mathematics saw the biggest lift: modular specialization (e.g., Value Tracker for Number Theory, Approximation Detector for Prealgebra) boosted solve rates on complex symbolic reasoning.

- Test-time adaptation added up to 3.5% on unseen subtasks.

- The reflection module added another 1.3% by targeting failure patterns.

Industry Implications

This architecture isn’t just for academic benchmarks:

- Customer Service AI — AdaptFlow could cluster incoming ticket types and evolve specialized response flows.

- Business Intelligence — Adaptive data pipelines that swap analysis strategies for volatile vs. stable markets.

- R&D Automation — Scientific assistants that switch literature review or experiment planning tactics based on discipline.

For any domain where tasks vary but patterns repeat, this approach could replace static prompt-engineered templates entirely.

Two Caveats

- LLM feedback quality — If the textual gradients are vague, the workflow may drift.

- Compute cost — Inner and outer loops require multiple LLM calls per iteration.

Both issues can be mitigated: structured feedback prompts can improve update precision, and adaptive stopping rules can cut API bills.
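
One way to picture an adaptive stopping rule is a patience-based check: stop refining once several consecutive iterations fail to improve the score by a meaningful margin. The sketch below is a generic early-stopping pattern, not a rule taken from AdaptFlow; the function name, the `patience` and `min_gain` parameters, and the score history are all made up for illustration.

```python
def refine_with_early_stopping(scores, patience=2, min_gain=0.5):
    """Consume per-iteration scores; stop after `patience` stale iterations.

    Returns (best_score, iterations_used). Each consumed score stands in for
    one round of LLM calls, so fewer iterations means lower cost.
    """
    best = float("-inf")
    stale = 0
    used = 0
    for s in scores:
        used += 1
        if s >= best + min_gain:
            best = s      # meaningful improvement: reset the counter
            stale = 0
        else:
            stale += 1    # no meaningful gain this round
            if stale >= patience:
                break
    return best, used


# Hypothetical validation scores from six refinement rounds.
history = [60.0, 64.0, 64.2, 64.1, 66.0, 66.1]
print(refine_with_early_stopping(history))  # (64.0, 4)
```

In this made-up run the rule stops after four rounds and never sees the later jump to 66.0, which shows the trade-off: a lower `patience` saves calls but can end the search before a late improvement.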

Bottom line: AdaptFlow shows that meta-learning belongs not just in models, but in the orchestration that runs them. It’s an early but convincing blueprint for AI systems that don’t just run workflows — they grow them.

Cognaptus: Automate the Present, Incubate the Future