In the race to conquer time-series classification, most modern models have sprinted toward deeper Transformers and wider convolutional architectures. But what if the real breakthrough came not from complexity but from symmetry? Enter PRISM (Per-channel Resolution-Informed Symmetric Module), a model that merges classical signal-processing wisdom with deep learning and, in doing so, challenges the assumption that more parameters mean better results.

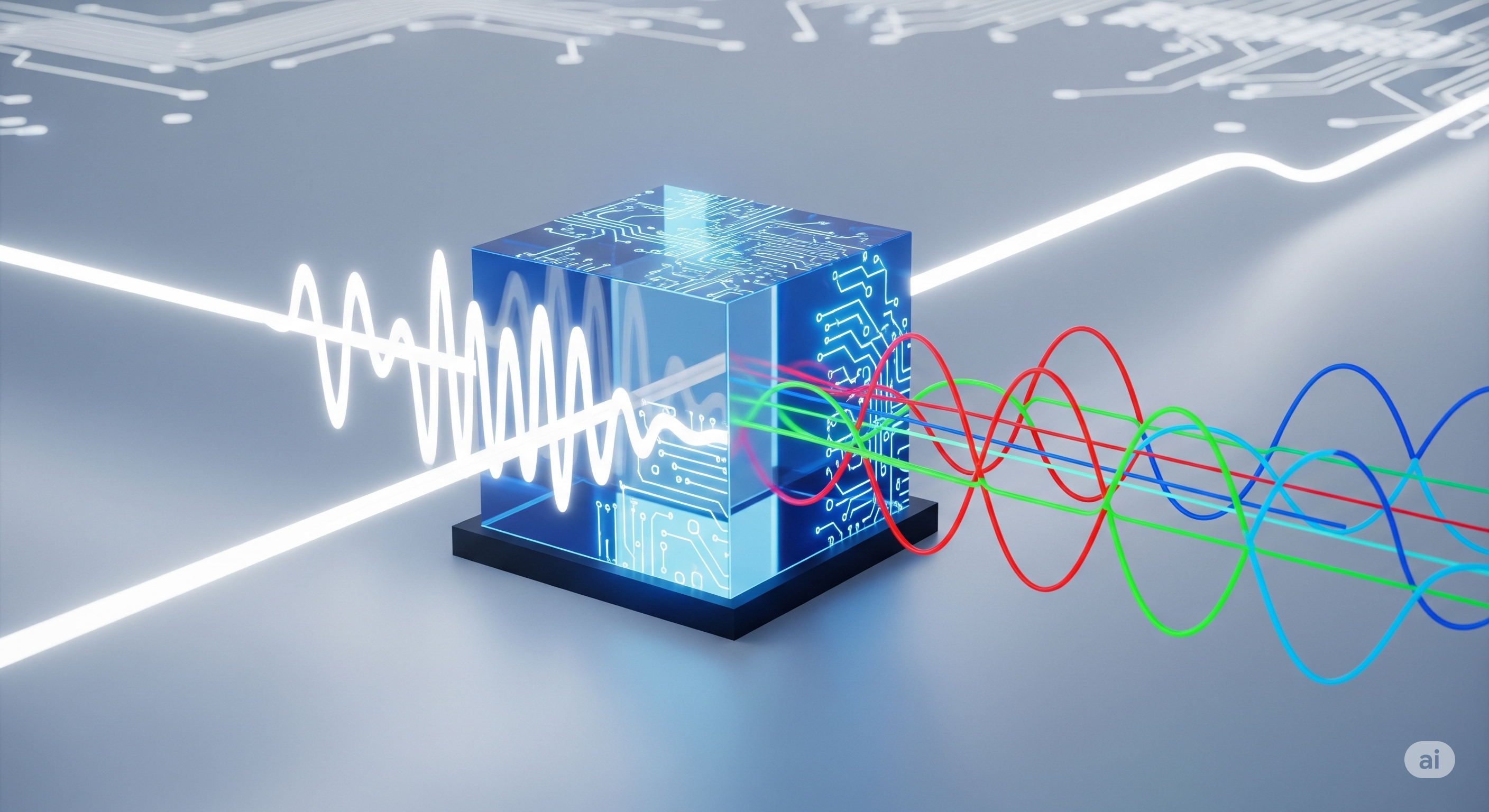

PRISM’s central idea is refreshingly simple: instead of building a massive model to learn everything from scratch, start by decomposing the signal like a physicist would—using symmetric FIR filters at multiple temporal resolutions, applied independently per channel. Like a prism splitting light into distinct wavelengths, PRISM separates time-series data into spectral components that are clean, diverse, and informative.

A Time-Series Transformer Killer? Not Quite. But Almost.

Despite their dominance in NLP, Transformers often stumble in time-series domains, especially those with limited data or noisy signals. Standard self-attention is expensive (its cost grows quadratically with sequence length) and, more importantly, it has no built-in notion of frequency or phase. Recent papers have even shown that simple linear models can outperform Transformers when inductive biases are better aligned with the task.

PRISM bypasses this entirely. No attention layers. No positional encodings. Just carefully designed filters that preserve phase, emphasize frequency selectivity, and operate across time scales. And when paired with even the simplest linear classifier, PRISM often beats state-of-the-art deep models such as TimesNet, PatchTST, and Crossformer while using less than 10% of the parameters.

Anatomy of PRISM: Symmetry Meets Scalability

PRISM’s architecture revolves around three core ideas:

1. Symmetric FIR Filters

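- Each filter is palindromic (its taps read the same forwards and backwards), which guarantees linear phase: every frequency is delayed by the same amount, so waveform shape is preserved.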

- Filters act as localized frequency extractors—similar to a learnable DCT bank.

- The symmetry constraint encourages diversity: filters are less redundant and more spectrally distinct than unconstrained convolutions.
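To make the linear-phase claim concrete, here is a minimal sketch in plain Python. It is not PRISM's actual code: the helper names `symmetric_fir` and `freq_response` and the weight values are hypothetical, chosen only for illustration. The idea is to store half of the taps, mirror them into a palindrome, and check that removing a constant delay leaves a purely real frequency response.

```python
import cmath

def symmetric_fir(half):
    """Mirror free weights [center, h1, ..., hm] into 2m+1 palindromic taps."""
    return half[:0:-1] + half

def freq_response(taps, omega):
    """Frequency response of the taps at angular frequency omega (rad/sample)."""
    return sum(h * cmath.exp(-1j * omega * n) for n, h in enumerate(taps))

# Hypothetical weights, center tap first; a trained model would learn these.
half = [0.5, 0.3, -0.1, 0.05]
taps = symmetric_fir(half)          # 7 taps built from 4 free parameters
delay = (len(taps) - 1) // 2        # constant group delay in samples

# Linear phase: undoing the constant delay leaves a purely real response.
residual_phase = [
    abs((freq_response(taps, w) * cmath.exp(1j * w * delay)).imag)
    for w in (0.1, 0.7, 2.0)
]
print(taps)
print(max(residual_phase) < 1e-9)
```

A side effect worth noting: an odd-length symmetric filter with K taps has only (K + 1) / 2 free weights, which is one reason this kind of constraint keeps parameter counts small.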

2. Multi-Resolution per Channel

- PRISM applies filters of different sizes (e.g., 15, 31, 51, 71) to each channel.

- Shorter filters capture transients; longer filters detect trends.

- No cross-channel convolutions—each sensor is processed independently.
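The per-channel, multi-resolution idea can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the paper's implementation: moving averages stand in for the learned symmetric filters, and `filter_same` and `multi_resolution` are hypothetical helper names. What matters is the shape of the computation: every channel passes through every filter length, and no channel ever sees another.

```python
import math

def filter_same(signal, taps):
    """Zero-padded filtering with odd-length taps; output length equals input length.
    For symmetric taps, correlation and convolution coincide."""
    pad = len(taps) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [sum(t * padded[i + k] for k, t in enumerate(taps))
            for i in range(len(signal))]

def multi_resolution(channels, kernel_sizes=(15, 31, 51, 71)):
    """Return out[c][r]: channel c filtered at resolution r, with no channel mixing."""
    # Moving averages are symmetric stand-ins for learned filters (an assumption).
    bank = [[1.0 / k] * k for k in kernel_sizes]
    return [[filter_same(ch, taps) for taps in bank] for ch in channels]

# Hypothetical input: 3 sensor channels, 128 samples each.
x = [[math.sin(2 * math.pi * (c + 1) * t / 128) for t in range(128)]
     for c in range(3)]
out = multi_resolution(x)
print(len(out), len(out[0]), len(out[0][0]))  # channels, resolutions, samples
```

Because each channel is filtered on its own, changing one sensor's signal leaves the features of every other sensor untouched. In a tensor library this would typically be expressed as a grouped (depthwise) 1-D convolution.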

3. Resolution-Informed Patch Embedding

- Features from different resolutions are tokenized via depthwise convolutions.

- Fused into compact vectors via pointwise projections.

- Outputs can be pooled (for low-resource devices) or unpooled (for temporal modeling).
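The three embedding steps above can be sketched as follows. The article does not give patch lengths, strides, or embedding sizes, so every number here is an assumption, and `depthwise_patches`, `pointwise`, and `mean_pool` are hypothetical helpers written in plain Python for readability rather than speed.

```python
import math

def depthwise_patches(feature, kernel, stride):
    """Strided filter over ONE feature map: one scalar token per patch."""
    k = len(kernel)
    return [sum(w * feature[s + i] for i, w in enumerate(kernel))
            for s in range(0, len(feature) - k + 1, stride)]

def pointwise(tokens_by_res, weights):
    """1x1 projection: at each token position, mix R resolution values
    into D outputs. weights has shape D x R."""
    n_tokens = len(tokens_by_res[0])
    return [[sum(w * tokens_by_res[r][t] for r, w in enumerate(row))
             for row in weights]
            for t in range(n_tokens)]

def mean_pool(tokens):
    """Average over the token axis, leaving one D-dimensional vector."""
    return [sum(col) / len(tokens) for col in zip(*tokens)]

# Hypothetical inputs: 4 resolution feature maps of one channel, 128 samples each.
features = [[math.cos(0.05 * (r + 1) * t) for t in range(128)] for r in range(4)]
patch_kernels = [[1.0 / 16] * 16 for _ in features]        # one kernel per map
proj = [[0.25, 0.25, 0.25, 0.25], [0.5, -0.5, 0.5, -0.5]]  # D=2, R=4

tokens_by_res = [depthwise_patches(f, k, stride=16)
                 for f, k in zip(features, patch_kernels)]
unpooled = pointwise(tokens_by_res, proj)  # 8 tokens x 2 dims, keeps time order
pooled = mean_pool(unpooled)               # 2 dims, for a small classifier head
print(len(unpooled), len(unpooled[0]), len(pooled))
```

The unpooled output keeps one vector per patch, so a downstream temporal model can still see order; the pooled output collapses that axis into a single vector, which is the cheaper option for constrained devices.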

Here’s how it compares:

| Feature | PRISM | Transformers (e.g., TimesNet) | CNNs (e.g., ROCKET, TSLANet) |
|---|---|---|---|
| Frequency Selectivity | Explicit (FIR filters) | Emergent (learned attention) | Implicit (via filters) |
| Phase Preservation | Yes (linear-phase) | No | No |
| Parameter Count | Tiny (3k - 40k) | Huge (500k - 2M) | Moderate (100k - 500k) |
| FLOPs | Minimal | High | Moderate |
| Channel Interaction | None (by design) | Often fused early | Often fused early |

Why It Works: Ablation and Frequency Analysis

Two standout findings from the paper’s experiments:

- Multi-resolution matters more than multi-kernel: Adding new filter sizes gives bigger gains than adding more filters per size.

- Symmetric filters encourage spectral diversity: Compared to unconstrained filters, symmetric FIR filters yield higher pairwise FFT distances, indicating that they capture more distinct frequency content.
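For readers who want to try a diversity measure of this kind, here is one plausible version in plain Python. The paper's exact metric is not spelled out here, so treat this as an assumption: each filter is zero-padded, its DFT magnitude is taken, and the Euclidean distance is averaged over all filter pairs. The function names and the toy filter banks are hypothetical.

```python
import cmath
import math
from itertools import combinations

def magnitude_spectrum(taps, n_fft=64):
    """DFT magnitudes of the zero-padded taps, bins 0..n_fft/2."""
    padded = list(taps) + [0.0] * (n_fft - len(taps))
    return [abs(sum(h * cmath.exp(-2j * math.pi * k * n / n_fft)
                    for n, h in enumerate(padded)))
            for k in range(n_fft // 2 + 1)]

def mean_pairwise_fft_distance(bank, n_fft=64):
    """Average Euclidean distance between magnitude spectra of all filter pairs."""
    spectra = [magnitude_spectrum(t, n_fft) for t in bank]
    dists = [math.dist(a, b) for a, b in combinations(spectra, 2)]
    return sum(dists) / len(dists)

# Toy banks (hypothetical): a low-pass/high-pass pair vs. two near-duplicates.
low_pass = [0.25, 0.5, 0.25]
high_pass = [-0.25, 0.5, -0.25]
near_copy = [0.24, 0.52, 0.24]

diverse = mean_pairwise_fft_distance([low_pass, high_pass])
redundant = mean_pairwise_fft_distance([low_pass, near_copy])
print(diverse > redundant)
```

A bank whose filters cover different frequency bands scores high, while a bank of near-duplicates scores close to zero. Running the same measure on learned symmetric and unconstrained filters is the kind of comparison the finding above describes.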

PRISM’s design acts like an implicit regularizer—steering learning toward compact, diverse, and robust representations.

Real-World Proof: From Smartphones to Sleep Labs

PRISM was tested on six public benchmarks and a demanding case study:

- Human Activity Recognition (UCIHAR, WISDM, HHAR)

- Biomedical Signals (ECG, Sleep EEG, Heartbeat sounds)

- ISRUC-S3 Multimodal Sleep Staging (EEG + EOG + EMG)

Even in the most challenging setup, ISRUC-S3 with only 10 subjects, PRISM achieved accuracy comparable to large models like MSA-CNN while using only 3,817 parameters and 8.3 MFLOPs. This makes it suitable for wearables, IoT devices, and real-time clinical systems.

A Prism, Not a Black Box

In an era of bloated models and opaque embeddings, PRISM’s elegance lies in its interpretability. Each filter is a signal-processing lens—clean, efficient, and analyzable. It doesn’t just classify. It illuminates.

Future work might explore cross-channel attention, self-supervised learning atop PRISM, or hybrid pipelines where PRISM serves as a plug-and-play front-end to downstream models.

But even in its current form, PRISM offers a timely reminder: sometimes, the path forward isn’t deeper or wider—it’s sharper.

Cognaptus: Automate the Present, Incubate the Future