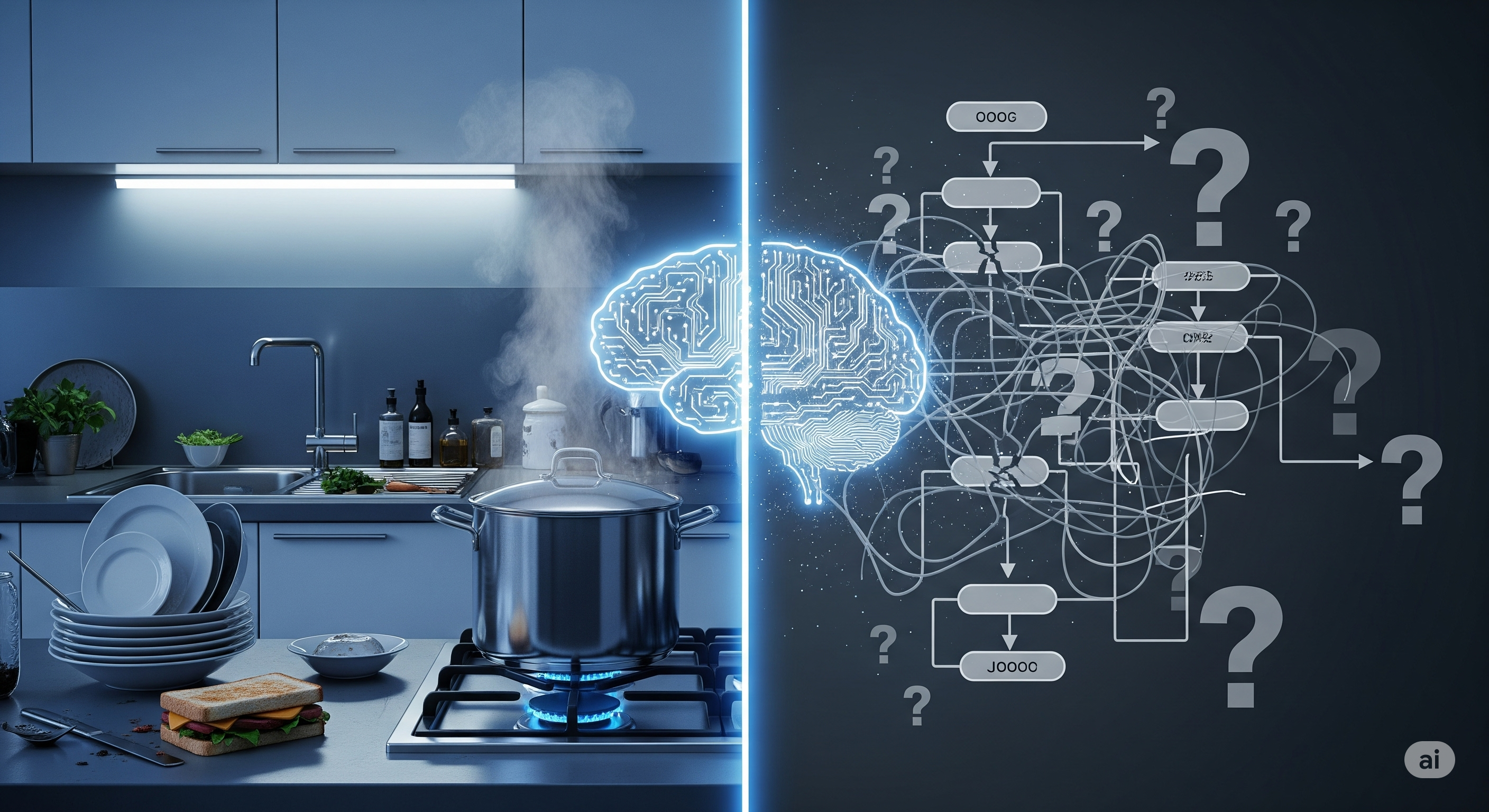

If today’s AI models can ace bar exams, explain astrophysics, and generate functional code from a napkin sketch, why do they still fail at seemingly simple questions that require looking and thinking?

A new benchmark called MCORE (Multimodal Chain-of-Reasoning Evaluation) gives a blunt answer: reasoning across modalities is hard, and we are not as far along as we thought.

Beyond Pattern Matching: What MCORE Tests

The majority of multimodal evaluations today rely on either:

- Visual Question Answering (VQA) tasks — “What color is the cat?”

- Single-hop reasoning — “Which object is heavier?”

These are useful, but superficial. They don’t test the kind of multi-hop, chain-of-thought reasoning that humans rely on when interpreting scenes, predicting outcomes, or drawing causal links from both visual and textual cues.

MCORE is designed to do just that. Each benchmark instance includes:

- An image (often a real-world scene or diagram)

- A final question that cannot be answered without intermediate inference

- A reasoning graph: a set of annotated intermediate sub-questions and their answers
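To make that structure concrete, here is a minimal Python sketch of one instance. The names (`SubQuestion`, `Instance`, `depends_on`, `ordered_steps`) and the example item are hypothetical, not MCORE's actual data format. The sketch assumes the reasoning graph is a directed acyclic graph in which each sub-question lists the steps it builds on, so a topological sort gives an order in which the sub-questions could be answered.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class SubQuestion:
    """One node of the reasoning graph (hypothetical schema)."""
    qid: str
    question: str
    answer: str
    depends_on: list[str] = field(default_factory=list)


@dataclass
class Instance:
    """One benchmark item: an image, a final question, and its reasoning graph."""
    image_path: str
    final_question: str
    final_answer: str
    steps: list[SubQuestion]

    def ordered_steps(self) -> list[SubQuestion]:
        """Return sub-questions so each one comes after the steps it depends on.

        Raises graphlib.CycleError (a ValueError subclass) if the graph has a cycle.
        """
        by_id = {s.qid: s for s in self.steps}
        for s in self.steps:
            missing = [d for d in s.depends_on if d not in by_id]
            if missing:
                raise ValueError(f"{s.qid} depends on unknown steps: {missing}")
        # TopologicalSorter expects a mapping: node -> its predecessors.
        graph = {s.qid: s.depends_on for s in self.steps}
        return [by_id[qid] for qid in TopologicalSorter(graph).static_order()]


# Illustrative item, invented for this sketch (not taken from MCORE).
item = Instance(
    image_path="kitchen.jpg",
    final_question="What happens if you put your hand in the pot?",
    final_answer="you will get burned",
    steps=[
        SubQuestion("s3", "Is boiling water safe to touch?", "no", ["s2"]),
        SubQuestion("s1", "What is on the stove?", "a pot of water"),
        SubQuestion("s2", "Is the water boiling?", "yes", ["s1"]),
    ],
)
print([s.qid for s in item.ordered_steps()])
```

Even though the steps are listed out of order, the sort recovers the chain s1, s2, s3, and a malformed graph (a cycle or a dangling dependency) is rejected instead of silently scored.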

Think of it like chess. To evaluate a model’s understanding, it’s not enough to know that it played a winning move—we need to know if it saw the pin, predicted the fork, and understood the trade-off.

MCORE forces models to show their work, step by step.

Three Skills MCORE Measures

| Skill | Description |
|---|---|
| Final Answer Accuracy | Did the model answer the end question correctly? |
| Intermediate Step Accuracy | Did the model answer intermediate sub-questions correctly? |
| Self-Consistency | Are the intermediate answers logically consistent with the final answer? |
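One way the three scores could be computed is sketched below in Python. It assumes exact string matching after light normalization and a fixed list of gold sub-questions per item; the function names are hypothetical. The `self_consistency` formula is a crude proxy, not MCORE's definition: it only checks whether final-answer correctness agrees with step correctness. Judging whether a model's own steps actually entail its own answer would usually need a human or model judge.

```python
from dataclasses import dataclass


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace: a crude stand-in for real answer matching."""
    return " ".join(text.lower().split())


@dataclass
class ItemResult:
    final_correct: bool
    step_correct: list[bool]


def grade_item(pred_final: str, pred_steps: list[str],
               gold_final: str, gold_steps: list[str]) -> ItemResult:
    """Exact-match grading; a skipped sub-question counts as wrong."""
    steps = [
        i < len(pred_steps) and normalize(pred_steps[i]) == normalize(gold)
        for i, gold in enumerate(gold_steps)
    ]
    return ItemResult(normalize(pred_final) == normalize(gold_final), steps)


def summarize(results: list[ItemResult]) -> dict[str, float]:
    """Aggregate the three skills over a non-empty list of graded items."""
    n = len(results)
    total_steps = sum(len(r.step_correct) for r in results)
    return {
        "final_accuracy": sum(r.final_correct for r in results) / n,
        "step_accuracy": sum(sum(r.step_correct) for r in results) / total_steps,
        # Hypothetical proxy: the final answer is right exactly when every
        # intermediate step is right (no lucky guesses, no broken chains).
        "self_consistency": sum(r.final_correct == all(r.step_correct) for r in results) / n,
    }


results = [
    grade_item("Burn", ["pot", "yes"], "burn", ["pot", "yes"]),  # grounded
    grade_item("burn", ["pan", "yes"], "burn", ["pot", "yes"]),  # right answer, wrong step
    grade_item("nothing", ["pot"], "burn", ["pot", "yes"]),      # chain dropped midway
]
print(summarize(results))
```

The second item shows why the three numbers are reported separately: it counts toward final-answer accuracy while lowering both step accuracy and consistency.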

Benchmarking the Benchmarks: How Current Models Perform

When leading multimodal large language models (MLLMs) such as GPT-4V, Claude, and Gemini were put to the test, the results were humbling:

- Many got the final answer right but contradicted themselves in the reasoning steps.

- Others solved individual steps but couldn't chain them into a correct final answer.

This suggests that some models might be relying on surface-level pattern matching or hallucinated correlations, rather than grounded reasoning.
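The two failure patterns in the list above can be separated mechanically once each item's final answer and sub-questions have been graded. The Python below is a hypothetical diagnostic, not a procedure taken from the MCORE paper; the bucket names are illustrative, and the correctness flags are assumed to come from whatever answer matcher the evaluation uses.

```python
from collections import Counter


def failure_mode(final_correct: bool, step_correct: list[bool]) -> str:
    """Bucket one graded item by how its final answer relates to its steps."""
    steps_ok = all(step_correct)
    if final_correct and steps_ok:
        return "grounded"
    if final_correct:
        return "right answer, broken chain"  # answer right, some step wrong
    if steps_ok:
        return "steps right, no synthesis"   # every step right, answer wrong
    return "step and answer errors"


# Hypothetical graded items: (final answer correct?, per-step correctness).
graded = [
    (True, [True, True]),
    (True, [False, True]),
    (False, [True, True]),
    (False, [False, True]),
]
print(Counter(failure_mode(f, s) for f, s in graded))
```

Counting buckets across a dataset separates "right answer, broken chain" from "steps right, no synthesis", which a single accuracy number hides.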

One case involved an image-based question about what happens if you put your hand in boiling water. Some models correctly answered “you will get burned” but never mentioned the pot, the stove, or the steam in the scene, a sign that they may have been answering from general world knowledge rather than truly “seeing” the image.

MCORE vs. the Usual Suspects

| Feature | VQA Benchmarks | CoT Benchmarks | MCORE |
|---|---|---|---|
| Requires image input | ✅ | ❌ | ✅ |
| Involves multistep reasoning | ❌ / limited | ✅ | ✅ |
| Evaluates intermediate steps | ❌ | ✅ | ✅ |
| Diagnoses self-consistency | ❌ | ❌ / partial | ✅ |
| Open-ended answers | Often multiple choice | Mostly textual | ✅ |

This isn’t just another benchmark—it’s a reality check.

The Bigger Picture: Why This Matters

Multimodal reasoning isn’t just a fancy academic pursuit. It’s foundational for:

- Autonomous agents navigating real-world environments

- Educational tutors interpreting student diagrams

- Medical assistants examining scans and notes

- Legal or scientific AI interpreting visual exhibits or charts

In all these domains, an answer without sound reasoning is not just insufficient—it’s dangerous.

Challenges and the Road Ahead

The authors of MCORE are transparent about its limitations:

- Creating gold-standard reasoning graphs is labor-intensive

- Multiple valid reasoning paths may exist, and models can be penalized for taking one that differs from the annotated graph

- Black-box models (e.g., GPT-4V) make it hard to trace internal logic
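One possible mitigation for the multiple-paths problem is to annotate several acceptable paths and score a model against whichever one its steps match best. The Python below is a hypothetical sketch of that idea, not something the MCORE authors describe; it assumes steps can be compared position by position with case-insensitive exact matching, which a real evaluation would likely replace with semantic or judge-based matching.

```python
def step_accuracy(pred_steps: list[str], gold_steps: list[str]) -> float:
    """Fraction of gold steps matched position by position (case-insensitive)."""
    if not gold_steps:
        return 1.0
    hits = sum(
        i < len(pred_steps) and pred_steps[i].strip().lower() == gold.strip().lower()
        for i, gold in enumerate(gold_steps)
    )
    return hits / len(gold_steps)


def best_path_accuracy(pred_steps: list[str], gold_paths: list[list[str]]) -> float:
    """Score against whichever annotated valid path the prediction matches best."""
    if not gold_paths:
        raise ValueError("need at least one annotated reasoning path")
    return max(step_accuracy(pred_steps, path) for path in gold_paths)


# Two hypothetical valid routes to "you will get burned".
paths = [
    ["a pot on the stove", "the water is boiling", "burned"],
    ["steam is rising", "the water is very hot", "burned"],
]
pred = ["steam is rising", "the water is very hot", "burned"]
print(step_accuracy(pred, paths[0]))    # only the last step matches path 0
print(best_path_accuracy(pred, paths))  # full credit via path 1
```

Taking the maximum over paths removes the penalty for a legitimate alternative route, at the cost of annotating more paths per item.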

But the value is clear: MCORE doesn’t just expose model errors. It shows where and how they fail, providing a blueprint for future training and evaluation.

One promising direction? Use models themselves to generate or critique reasoning graphs—a meta-reasoning approach that could scale the benchmark and improve the models.

Final Thought

The illusion of intelligence fades quickly under the light of cross-modal reasoning. MCORE doesn’t just raise the bar—it asks the right questions about what it really means to “understand.”

Cognaptus: Automate the Present, Incubate the Future