When a junior developer misunderstands your instructions, they might still write code that compiles and runs but does the wrong thing. Large language models (LLMs) often do the same when a prompt rests on faulty premises. A recent paper, Refining Critical Thinking in LLM Code Generation, introduces FPBench, a benchmark that probes an overlooked blind spot: whether AI models can detect flawed assumptions before they generate a single line of code.

Spoiler: they usually can’t.

The Premise Problem: From Syntax to Scrutiny

Current code generation benchmarks like HumanEval or MBPP test functional correctness on well-specified prompts. In the real world, though, prompts are often ambiguous, imprecise, or simply wrong. When a prompt contains faulty premises, such as missing definitions, logical contradictions, or misleading comments, LLMs often generate hallucinated or broken code without realizing anything is wrong.

FPBench confronts this head-on. It introduces 1,800 problems crafted from HumanEval and MBPP+, each deliberately sabotaged using one of three perturbation strategies:

| Method | Strategy | Model Weakness Exposed |
|---|---|---|
| UPI (Unrelated Perturbation Insertion) | Adds misleading comments or hints | Logical conflict resolution |
| RAD (Random-Based Deletion) | Randomly removes variables, constants, or comments | Resilience to missing context |
| RUD (Rule-Based Deletion) | Deletes high-impact premises based on an importance score | Dependency and causal reasoning |

By evaluating how LLMs handle these adversarial variants, FPBench reframes code generation as an input-validation challenge, not just an output test.
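
The three strategies can be pictured as simple transformations over a prompt. This is a minimal sketch, not FPBench's actual pipeline: it assumes a prompt is modeled as a list of premise lines, and the function names `upi`, `rad`, and `rud`, along with the toy `importance` callback standing in for the paper's importance score, are hypothetical.

```python
import random
from typing import Callable

# Assumption: a prompt is modeled as a list of "premise" lines
# (constants, signature, docstring sentences, comments).
Prompt = list[str]


def upi(prompt: Prompt, distractor: str, rng: random.Random) -> Prompt:
    """UPI sketch: insert a misleading hint at a random position."""
    out = list(prompt)
    out.insert(rng.randrange(len(out) + 1), distractor)
    return out


def rad(prompt: Prompt, rng: random.Random) -> Prompt:
    """RAD sketch: delete one premise chosen uniformly at random."""
    out = list(prompt)
    del out[rng.randrange(len(out))]
    return out


def rud(prompt: Prompt, importance: Callable[[str], float]) -> Prompt:
    """RUD sketch: delete the premise with the highest importance score."""
    out = list(prompt)
    top = max(range(len(out)), key=lambda i: importance(out[i]))
    del out[top]
    return out


if __name__ == "__main__":
    prompt = [
        "THRESHOLD = 10",
        "def count_large(xs):",
        '    """Return how many items in xs exceed THRESHOLD."""',
    ]
    rng = random.Random(0)
    print(upi(prompt, "# Note: THRESHOLD is ignored for negative numbers", rng))
    print(rad(prompt, rng))
    # Hypothetical importance score: constant definitions matter most.
    print(rud(prompt, lambda line: 1.0 if line.startswith("THRESHOLD =") else 0.0))
```

Because `rud` always removes the top-scoring premise, it is deterministic for a given scoring function, whereas `rad` may delete a line the model could do without. That difference is what lets a rule-based deletion target dependency reasoning specifically.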

Three Metrics That Matter

Rather than relying on pass@k, which rewards passing tests whether or not the model noticed the flawed premise, FPBench introduces:

- Proactive Error Recognition Rate (PRER): Does the model flag the flaw without being prompted to look for one?

- Passive Error Recognition Rate (PAER): Can the model find the flaw when explicitly asked to check the premises?

- Overhead Ratios (PROR/PAOR): How much longer does the model's output become when it reasons under faulty premises, without and with the explicit prompt, respectively?
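
To make the metrics concrete, here is one plausible way to compute them from per-problem logs. The `Record` fields and `fpbench_style_metrics` are hypothetical, and the formulas are assumptions: recognition rates as the fraction of perturbed problems where the model flags the flaw, and overhead ratios as total output tokens on faulty prompts divided by total output tokens on the original prompts. The paper's exact definitions may differ (for example, averaging per-problem ratios instead).

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Outcome for one perturbed problem (hypothetical schema)."""
    flagged_unprompted: bool  # model raised the flaw on its own
    flagged_prompted: bool    # model raised the flaw when asked to check premises
    tokens_baseline: int      # output length on the original, unperturbed problem
    tokens_unprompted: int    # output length on the faulty problem, no check prompt
    tokens_prompted: int      # output length on the faulty problem, with check prompt


def fpbench_style_metrics(records: list[Record]) -> dict[str, float]:
    """Assumed definitions: rates are fractions; overheads are token-total ratios."""
    n = len(records)
    base = sum(r.tokens_baseline for r in records)
    return {
        "PRER": sum(r.flagged_unprompted for r in records) / n,
        "PAER": sum(r.flagged_prompted for r in records) / n,
        "PROR": sum(r.tokens_unprompted for r in records) / base,
        "PAOR": sum(r.tokens_prompted for r in records) / base,
    }


if __name__ == "__main__":
    # Toy, made-up logs for illustration only.
    logs = [
        Record(True, True, 100, 150, 200),
        Record(False, True, 100, 110, 160),
        Record(False, False, 200, 200, 240),
    ]
    print(fpbench_style_metrics(logs))
```

With these toy logs the function reports PRER ≈ 0.33, PAER ≈ 0.67, PROR = 1.15, and PAOR = 1.5; under these assumed definitions, a PAOR of 1.5 means the model writes 50% more tokens when asked to check premises than on the clean problem.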

This trio offers a cognitive lens, separating epistemic vigilance from surface-level fluency.

Results: Compliant, But Not Critical

| Model | PRER (unprompted) | PAER (prompted) | PROR | PAOR |
|---|---|---|---|---|
| DeepSeek-R1 | 0.57 | 0.77 | 1.42 | 1.56 |
| GPT-4.1 | 0.23 | 0.81 | 1.06 | 1.87 |
| GPT-4o | 0.26 | 0.68 | 1.08 | 1.27 |
| o4-mini | 0.12 | 0.71 | 1.09 | 1.56 |
| Qwen3-235B | 0.30 | 0.72 | 1.06 | 1.48 |

Two disturbing patterns emerge:

- LLMs rarely catch mistakes unless told to look for them. Even the best unprompted performer, DeepSeek-R1, reaches only 0.57 PRER, and o4-mini manages 0.12. Without prompting, they don't doubt.

- Token bloat is not intelligence. Some models more than double their output length when prompted to scrutinize the premises (e.g., GPT-4.1-mini's PAOR = 2.42), yet improve only marginally.
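
The gap between the two recognition rates quantifies "unless told to." Subtracting PRER from PAER for each model in the results table shows how much an explicit premise-check instruction helps: about 0.58 to 0.59 for GPT-4.1 and o4-mini, but only 0.20 for DeepSeek-R1, which already catches more flaws on its own. The snippet below simply redoes that arithmetic on the published numbers.

```python
# PRER and PAER values transcribed from the results table.
scores = {
    "DeepSeek-R1": (0.57, 0.77),
    "GPT-4.1": (0.23, 0.81),
    "GPT-4o": (0.26, 0.68),
    "o4-mini": (0.12, 0.71),
    "Qwen3-235B": (0.30, 0.72),
}

# Gap = how much explicitly asking for a premise check lifts recognition.
gaps = {model: round(paer - prer, 2) for model, (prer, paer) in scores.items()}

# Print models from the largest to the smallest prompted-vs-unprompted gap.
for model, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:12s} PAER - PRER = {gap:.2f}")
```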

The insight? Today’s LLMs mimic confidence more than they demonstrate cognition.

Triple Dissociation: Cognitive Fault Lines

One of FPBench’s most striking contributions is the discovery of a triple dissociation:

Each faulty premise type activates a different cognitive failure mode in the model.

| Perturbation Type | Cognitive Pathway Impaired | Symptom |
|---|---|---|
| RUD | Causal reasoning via pattern dependency | Hallucinated logic |
| UPI | Logical contradiction detection | Conformist acceptance |
| RAD | Syntactic structure recognition | Silent omissions or invalid code |

This suggests LLMs don't have one general failure mode but several weak links across different cognitive layers. Future training may need layered scrutiny mechanisms that address each failure type separately.

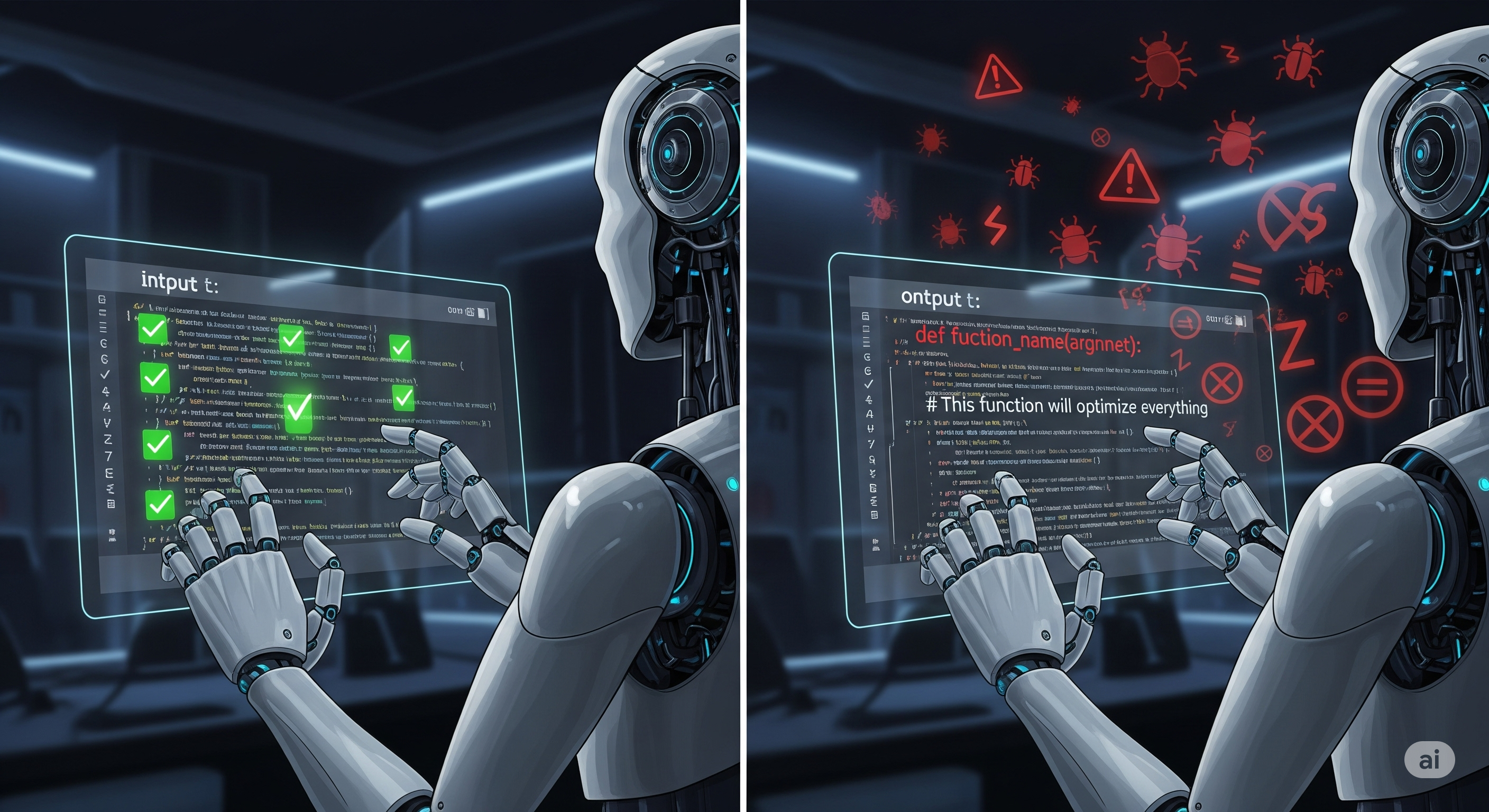
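
The article does not spell out what a "layered scrutiny mechanism" would look like. As a purely illustrative sketch, and not FPBench's method, one layer could be a static pre-generation check aimed at RAD/RUD-style missing context: parse the prompt and flag names it reads, or constant-style names its docstrings mention, that it never defines. The function `missing_premises` and the uppercase-constant heuristic are assumptions; UPI-style contradictions would need a separate layer.

```python
import ast
import builtins
import re

# Crude heuristic (assumption): constant-style identifiers such as LIMIT or MAX_LEN.
CONSTANT_RE = re.compile(r"\b[A-Z][A-Z0-9_]+\b")


def missing_premises(source: str) -> set[str]:
    """Flag names a prompt relies on but never defines (hypothetical check).

    Scope-insensitive: any binding anywhere in the module counts as a definition.
    """
    tree = ast.parse(source)
    bound = set(dir(builtins))
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Name):
            # Reads count as "used"; writes and deletes count as "bound".
            (used if isinstance(node.ctx, ast.Load) else bound).add(node.id)
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            bound.add(node.name)
            doc = ast.get_docstring(node)
            if doc:
                # Constants mentioned in a docstring are premises the prompt relies on.
                used.update(CONSTANT_RE.findall(doc))
        elif isinstance(node, ast.arg):
            bound.add(node.arg)
        elif isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                bound.add((alias.asname or alias.name).split(".")[0])
    return used - bound


if __name__ == "__main__":
    clean = 'LIMIT = 3\ndef count_large(xs):\n    "Count items in xs above LIMIT."\n'
    # RUD-style perturbation: the constant definition has been deleted.
    perturbed = clean.replace("LIMIT = 3\n", "")
    print(missing_premises(clean))      # set()
    print(missing_premises(perturbed))  # {'LIMIT'}
```

A check like this only catches premises that are structurally absent; it says nothing about whether the remaining premises are consistent, which is why a single layer cannot cover all three failure modes.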

The Bigger Message: Don’t Just Complete. Critique.

FPBench argues that the next evolution of code generation isn’t just about making fewer syntax errors or solving harder problems. It’s about building models that can say: “Wait, something about this question doesn’t add up.”

That means shifting from completion-based models to premise-checking collaborators.

Until we get there, AI assistants remain vulnerable not because they can’t generate code—but because they can’t doubt the user.

Cognaptus: Automate the Present, Incubate the Future