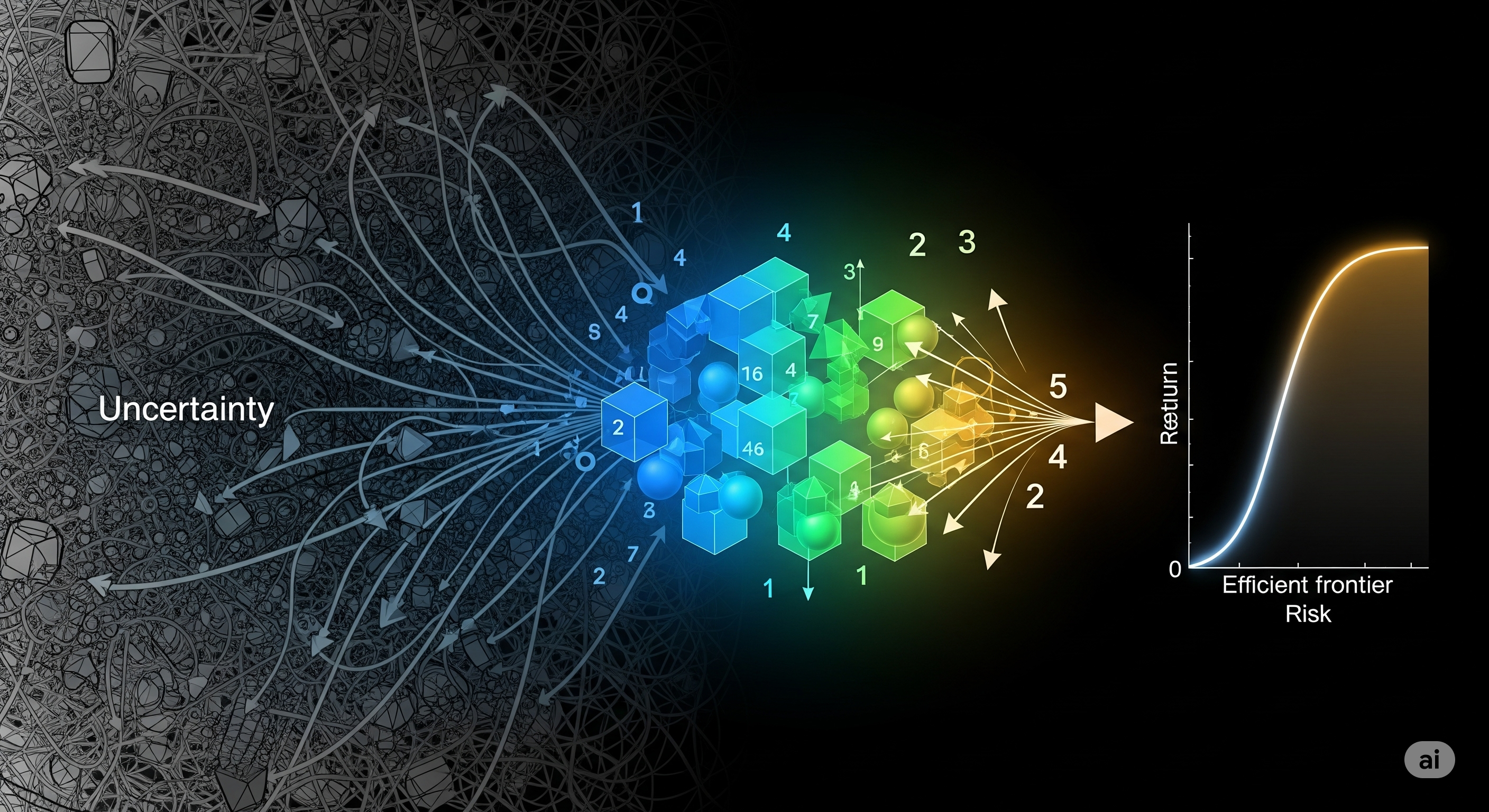

In asset management, few debates are more charged than the tug-of-war between causal purity and predictive utility. For years, a growing number of voices in empirical finance have argued that causal factor models are a necessary condition for portfolio efficiency. If a model omits a confounder, the logic goes, directional failure and Sharpe ratio collapse are inevitable.

But what if this is more myth than mathematical law?

A recent paper titled “The Myth of Causal Necessity” by Alejandro Rodriguez Dominguez delivers a sharp counterpunch to this orthodoxy. Through formal derivations and simulation-based counterexamples, it exposes the fragility of the causal necessity argument and makes the case that predictive models can remain both viable and efficient even when structurally misspecified.

The Central Claim: Calibration Trumps Causality

At the heart of the paper lies a provocative insight: what truly undermines portfolio efficiency is not omitted causality, but miscalibrated signals. In other words, directional correctness (i.e., getting the signs right) is not enough. If your model consistently under- or overestimates the magnitude of alphas, you’ll allocate capital poorly—even if you’re moving in the right direction.

| Modeling Aspect | Does It Affect Efficiency? |
|---|---|
| Sign agreement | Necessary, but not sufficient |
| Correct ranking | Helpful, but not enough |
| Signal calibration | Critical |
| Causal structure | Not required |
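To make the calibration point concrete, here is a minimal sketch, not taken from the paper. It assumes three uncorrelated assets with equal variance and made-up expected returns, and compares unconstrained mean-variance weights built from a calibrated signal against weights built from a signal that has the right signs and the right ranking but distorted magnitudes. The function names `mv_weights` and `realized_sharpe` and all numbers are hypothetical.

```python
import math

def mv_weights(signal, variances):
    """Unconstrained mean-variance weights (up to scale), assuming a diagonal covariance."""
    return [s / v for s, v in zip(signal, variances)]

def realized_sharpe(weights, true_mu, variances):
    """Sharpe ratio of the portfolio, evaluated against the TRUE expected returns."""
    expected_return = sum(w * m for w, m in zip(weights, true_mu))
    volatility = math.sqrt(sum(w * w * v for w, v in zip(weights, variances)))
    return expected_return / volatility

# Illustrative assumptions: three uncorrelated assets, 20% volatility each.
true_mu = [0.02, 0.05, 0.08]
variances = [0.04, 0.04, 0.04]

calibrated = list(true_mu)            # magnitudes match the truth
miscalibrated = [0.001, 0.002, 0.30]  # same signs, same ranking, wrong magnitudes

sharpe_cal = realized_sharpe(mv_weights(calibrated, variances), true_mu, variances)
sharpe_mis = realized_sharpe(mv_weights(miscalibrated, variances), true_mu, variances)

print(f"calibrated signal:    Sharpe = {sharpe_cal:.3f}")
print(f"miscalibrated signal: Sharpe = {sharpe_mis:.3f}")
```

In this toy setup both portfolios earn a positive Sharpe ratio, but the miscalibrated one concentrates in a single asset and gives up part of the achievable Sharpe, even though every sign and the full ranking are correct.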

Counterexamples That Matter

The paper builds two instructive cases:

- Structural Cancellation in Logistic Models: A confounder Z is omitted, but because it is linearly dependent on an included variable X, its effect is absorbed. The model is wrong, but the signals it produces remain directionally correct.

- Nonlinear Confounded Generative Process: Even in a more complex setup with tanh(X) and sin(Z) components, the predicted signals from a logistic model (ignoring Z) still support valid efficient frontiers. No collapse, no inversion, just a different (but still convex) geometry.

These examples are not just academic curiosities. They debunk the widespread intuition that misspecification automatically results in negative diagonal weight scatter or systematic sign failure.
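The first counterexample can be sketched in a few lines of plain Python. This is an illustrative reconstruction and not the paper's simulation: the dependence `Z = a * X`, the coefficients, the sample size, and the gradient-ascent fit are all assumptions chosen for the demo.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

random.seed(0)
n = 2000
a = 2.0                    # assumed dependence: Z = a * X
beta_x, beta_z = 1.0, 0.5  # assumed true coefficients on X and Z

xs = [random.gauss(0.0, 1.0) for _ in range(n)]
true_logit = [beta_x * x + beta_z * (a * x) for x in xs]  # Z enters the true process
ys = [1 if random.random() < sigmoid(t) else 0 for t in true_logit]

# Fit a logistic model on X alone (Z omitted) by gradient ascent
# on the mean log-likelihood.
b0, b1 = 0.0, 0.0
learning_rate = 0.5
for _ in range(500):
    g0 = g1 = 0.0
    for x, y in zip(xs, ys):
        err = y - sigmoid(b0 + b1 * x)
        g0 += err
        g1 += err * x
    b0 += learning_rate * g0 / n
    b1 += learning_rate * g1 / n

# Share of observations where the misspecified model's logit has the same sign
# as the true logit.
agreement = sum((b0 + b1 * x) * t > 0 for x, t in zip(xs, true_logit)) / n

print(f"fitted slope on X: {b1:.2f} (absorbs beta_x + a * beta_z = {beta_x + a * beta_z:.2f})")
print(f"sign agreement with true logit: {agreement:.1%}")
```

Because Z is an exact linear function of X here, the slope on X picks up the combined effect, so the omitted variable changes what the coefficient means without flipping the direction of the signal.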

Theoretical Validation: Why Optimization Still Works

Rodriguez Dominguez shows that classical mean-variance optimization remains well-posed when signals are directionally aligned with true returns, even if those signals come from flawed models. The Sharpe ratio doesn’t vanish, and the efficient frontier stays smooth and convex.

Lemma 2: If the surrogate return vector (from your model) has positive dot product with the true return vector, the frontier geometry survives.

This is a powerful rebuttal to papers such as Lopez de Prado et al. (2024–2025), which claimed that misspecified models collapse optimization altogether. Their simulations and visualizations may have suffered from cherry-picked edge cases or overreliance on negative diagonals.
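The geometry behind Lemma 2 is easiest to see in a special case. The following is a worked illustration and not the paper's proof. It assumes an isotropic covariance $\Sigma = \sigma^2 I$, writes $\hat{\mu}$ for the surrogate return vector and $\mu$ for the true one, and uses unconstrained mean-variance weights $w \propto \Sigma^{-1}\hat{\mu}$:

$$
\mathrm{SR}(w) \;=\; \frac{w^\top \mu}{\sqrt{w^\top \Sigma\, w}}
\;=\; \frac{\hat{\mu}^\top \mu}{\sigma\,\lVert \hat{\mu} \rVert}
\;=\; \frac{\lVert \mu \rVert}{\sigma}\,\cos\theta ,
$$

where $\theta$ is the angle between $\hat{\mu}$ and $\mu$. Under this assumption the realized Sharpe ratio is positive exactly when $\hat{\mu}^\top \mu > 0$, and it equals the best achievable Sharpe ratio $\lVert \mu \rVert / \sigma$ scaled by $\cos\theta$. For a general covariance matrix the same algebra gives the condition $\hat{\mu}^\top \Sigma^{-1} \mu > 0$ instead of the plain dot product.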

Why This Matters for Practitioners

In real markets, perfect structural identification is a fantasy. Financial systems are nonstationary, confounded, and often adversarial. Instead of chasing causality unicorns, practitioners are better served by focusing on:

- Signal calibration: Use tools like isotonic regression or temperature scaling to align predicted signal magnitudes with realized outcomes.

- Robust optimization: Implement frameworks that tolerate noise, such as regularized quadratic programs or Bayesian updates.

- Predictive-first modeling: Accept that models can be wrong in structure but useful in function.
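As a sketch of the calibration step in the list above, isotonic regression can be written with the pool-adjacent-violators algorithm in plain Python. The helper names `pav` and `calibrate` and the sample numbers are hypothetical. A production setup would more likely use a library implementation and fit on out-of-sample data.

```python
def pav(values):
    """Pool-adjacent-violators: least-squares non-decreasing fit to `values`."""
    blocks = []  # each block holds [sum of values, count]
    for v in values:
        blocks.append([v, 1])
        # Merge backwards while the previous block's mean exceeds the last block's mean.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    fitted = []
    for total, count in blocks:
        fitted.extend([total / count] * count)
    return fitted

def calibrate(signals, realized):
    """Map each raw signal to a monotone-fitted estimate of the realized outcome."""
    order = sorted(range(len(signals)), key=lambda i: signals[i])
    fitted_sorted = pav([realized[i] for i in order])
    calibrated = [0.0] * len(signals)
    for rank, i in enumerate(order):
        calibrated[i] = fitted_sorted[rank]
    return calibrated

# Hypothetical data: raw signals are roughly ten times too large.
raw_signals = [0.10, 0.30, 0.20, 0.40]
realized_returns = [0.01, 0.02, 0.03, 0.04]

calibrated_signals = calibrate(raw_signals, realized_returns)
print(calibrated_signals)
```

The fitted map preserves the ordering implied by the raw signals while pulling their magnitudes toward what was actually realized, which is the property the calibration argument relies on.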

The Reframing: Causality as a Bonus, Not a Requirement

This does not mean causal insights are worthless. In regulatory or stress-testing contexts, they may be essential. But for most portfolio construction tasks, the burden of proof is on those who insist that causal completeness is required.

Rodriguez Dominguez reminds us of a principle echoed by Breiman and Shmueli:

“In the real world, prediction is often more valuable than explanation.”

Finance, more than any other domain, rewards what works. And what works, this paper shows, does not always need to know why.

Cognaptus: Automate the Present, Incubate the Future.