Financial markets don’t reward the loudest opinions. They reward the most timely, well-calibrated ones.

FinDPO, a new framework by researchers from Imperial College London, takes this lesson seriously. It proposes a shift in how we train language models to read market sentiment. Rather than relying on traditional supervised fine-tuning (SFT), FinDPO uses Direct Preference Optimization (DPO) to align a large language model with how a human trader might weigh sentiment signals in context. And the results are not just academic: in backtests, the resulting sentiment signals stay profitable after transaction costs.

From Labels to Preferences: Why SFT Falls Short

Supervised fine-tuning has dominated sentiment analysis in finance. Models like FinBERT or FinGPT v3.3 learn to map text to discrete labels like positive, negative, or neutral. But financial language is messy: sarcasm, coded terms, and domain-specific signals abound. Worse, markets are non-stationary — yesterday’s sentiment cues don’t always apply tomorrow.

SFT, which optimizes for correctness on fixed labels, struggles in this setting. It tends to overfit and memorize. DPO, in contrast, trains on preference pairs: for each input, it raises the likelihood of a preferred output relative to a rejected one, so the model learns which of two answers is better rather than only reproducing a single target label.

| Training Paradigm | Optimization Target | Robust to Distribution Shift? | Output Format |
|---|---|---|---|
| SFT | Match ground-truth label | ❌ Often fails | Discrete |
| DPO | Prefer better outputs | ✅ Better generalization | Discrete (with scores) |
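The contrast in the table can be made concrete with the standard DPO objective for a single preference pair. The sketch below is illustrative only: the function names and the value of `beta` are assumptions, and the inputs are plain sequence log-probabilities rather than outputs from a real model.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift from the reference
) -> float:
    """Standard DPO loss for one (chosen, rejected) pair.

    Each argument is the log-probability of a full response under either the
    trainable policy or the frozen reference model.
    """
    # Implicit rewards: how much more likely each response is under the
    # policy than under the reference, scaled by beta.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    # The loss falls as the margin between chosen and rejected rewards grows.
    return -log_sigmoid(chosen_reward - rejected_reward)
```

When the policy equals the reference, the margin is zero and the loss is log 2. Training lowers it by shifting probability toward the chosen output and away from the rejected one, whereas SFT would only raise the likelihood of the chosen label.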

How FinDPO Works

FinDPO is built on Llama-3-8B-Instruct and trained with preference pairs drawn from labeled financial datasets (Financial PhraseBank, TFNS, and GPT-labeled news). Each training instance uses DPO to compare the correct sentiment label with an incorrect one, forcing the model to move probability mass toward the preferred output.
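As a rough sketch of how labeled sentiment data could be turned into preference pairs, the snippet below pairs each correct label with the incorrect alternatives. The prompt template, the `PreferencePair` type, and the choice to emit one pair per incorrect label are all assumptions for illustration, not details confirmed by the paper.

```python
from dataclasses import dataclass

LABELS = ("positive", "negative", "neutral")

# Hypothetical prompt template; the wording used by FinDPO is not given here.
PROMPT_TEMPLATE = (
    "What is the sentiment of this financial text? "
    "Answer with positive, negative, or neutral.\nText: {text}\nAnswer:"
)


@dataclass(frozen=True)
class PreferencePair:
    prompt: str
    chosen: str    # the ground-truth label
    rejected: str  # an incorrect label


def build_pairs(examples):
    """Turn (text, gold_label) tuples into DPO preference pairs."""
    pairs = []
    for text, gold in examples:
        if gold not in LABELS:
            raise ValueError(f"unknown label: {gold!r}")
        prompt = PROMPT_TEMPLATE.format(text=text)
        # Assumption: one pair per incorrect label. Sampling a single
        # incorrect label per example would be an equally plausible design.
        for wrong in LABELS:
            if wrong != gold:
                pairs.append(PreferencePair(prompt, chosen=gold, rejected=wrong))
    return pairs


pairs = build_pairs([
    ("Operating profit rose 12% year over year.", "positive"),
    ("The company issued a profit warning.", "negative"),
])
```

Each pair shares a prompt and differs only in the completion, which is the shape a DPO trainer expects.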

To keep training lightweight, FinDPO uses LoRA for parameter-efficient training: only 0.52% of parameters are updated. The entire training pipeline runs in 4.5 hours on a single A100 GPU.
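To see how LoRA ends up touching well under 1% of the weights, here is a back-of-the-envelope count. Everything specific in it is an assumption: the layer shapes are those commonly reported for Llama-3-8B, the base parameter count is approximate, and the rank and set of adapted layers are illustrative rather than FinDPO's documented configuration.

```python
# (d_in, d_out) of the linear layers in one transformer block.
# Assumption: shapes commonly reported for Llama-3-8B.
LAYER_SHAPES = {
    "q_proj": (4096, 4096),
    "k_proj": (4096, 1024),
    "v_proj": (4096, 1024),
    "o_proj": (4096, 4096),
    "gate_proj": (4096, 14336),
    "up_proj": (4096, 14336),
    "down_proj": (14336, 4096),
}
NUM_BLOCKS = 32
BASE_PARAMS = 8_030_000_000  # approximate size of the frozen base model


def lora_params(rank: int) -> int:
    """Trainable parameters added by LoRA at the given rank.

    A rank-r adapter on a (d_in x d_out) weight adds two small matrices,
    (d_in x r) and (r x d_out), i.e. r * (d_in + d_out) parameters.
    """
    per_block = sum(rank * (d_in + d_out) for d_in, d_out in LAYER_SHAPES.values())
    return per_block * NUM_BLOCKS


def trainable_fraction(rank: int) -> float:
    """LoRA parameters as a fraction of the base model size."""
    return lora_params(rank) / BASE_PARAMS


# Illustrative rank; not taken from the paper.
print(f"{lora_params(16):,} trainable parameters, {trainable_fraction(16):.2%} of the base model")
```

Under these assumed settings the adapters come to roughly half a percent of the base model, the same scale as the figure quoted above. The fraction grows linearly with the rank and with the number of layers adapted.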

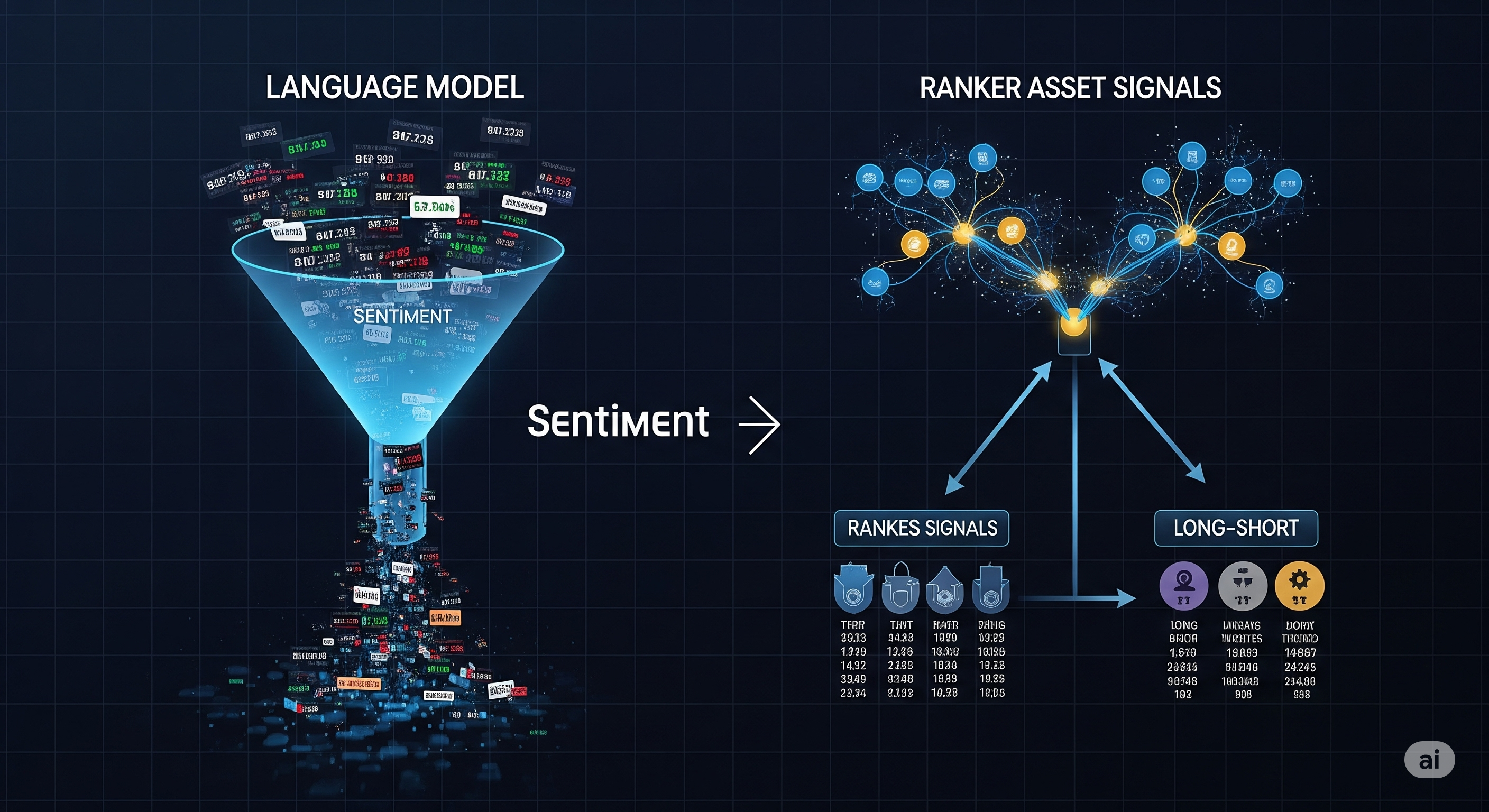

Critically, FinDPO keeps the model causal, which allows logit-based scoring rather than classification alone. This makes it well suited to downstream portfolio construction, where ranking assets matters more than assigning each one a class.

Turning Sentiment into Returns

The most notable part of FinDPO isn’t its benchmark scores (though they’re impressive: +11% F1 gain over FinGPT v3.3). It’s how it turns those outputs into realistic trading signals:

- Logit-to-score converter: Extracts probability mass from the first token logits, then applies temperature scaling for calibrated sentiment strength.

- Asset-level ranking: Each company-day gets a sentiment score in [-1, 1], enabling rank-based long/short selection.

- Realistic backtest: Over 6 years and 417 S&P 500 firms, portfolios rebalance daily using sentiment ranks, with a 5 bps transaction cost.
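The first two steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the score is taken as P(positive) minus P(negative) after a temperature-scaled softmax, and the fraction of assets held on each side is arbitrary. The paper's exact mapping and portfolio sizes may differ, and the function names are hypothetical.

```python
import math


def sentiment_score(first_token_logits: dict, temperature: float = 1.0) -> float:
    """Map first-token logits for the three labels to a score in [-1, 1].

    Assumption: score = P(positive) - P(negative) after temperature scaling.
    """
    scaled = {label: logit / temperature for label, logit in first_token_logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {label: math.exp(value - top) for label, value in scaled.items()}
    total = sum(exps.values())
    probs = {label: value / total for label, value in exps.items()}
    return probs["positive"] - probs["negative"]


def long_short(scores: dict, fraction: float = 0.25):
    """Pick the top and bottom `fraction` of assets by sentiment score."""
    if len(scores) < 2:
        raise ValueError("need at least two assets to form a long/short book")
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Cap k at half the universe so the long and short legs never overlap.
    k = max(1, min(len(ranked) // 2, int(len(ranked) * fraction)))
    return ranked[:k], ranked[-k:]


day_scores = {"A": 0.8, "B": -0.5, "C": 0.1, "D": -0.9}  # made-up company-day scores
longs, shorts = long_short(day_scores)
```

A higher temperature flattens the probabilities and pulls scores toward zero, so it acts as a knob on how strongly a confident prediction translates into signal strength.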

Performance: The Sharpe Awakening

| Method | Ann. Return | Sharpe | Calmar |
|---|---|---|---|
| FinDPO | 66.6% | 2.03 | 2.21 |
| FinLlama | −4.1% | −0.24 | −0.06 |
| FinBERT | −13.3% | −0.74 | −0.18 |
| VADER | −30.2% | −1.92 | −0.29 |
| S&P 500 | 11.3% | 0.62 | 0.41 |

Even under friction, FinDPO’s sentiment signals remain tradable. At zero cost, its cumulative return is nearly three times FinLlama’s (747% vs. 261%), but more importantly, it holds up when costs rise. This sets it apart from most academic sentiment systems, which crumble under slippage.
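For readers who want to reproduce metrics of this kind on their own return series, the sketch below uses textbook definitions. The assumptions are mine, not the paper's: 252 trading days per year, a zero risk-free rate, compounded annualization, and costs charged in proportion to daily turnover.

```python
import math
from statistics import mean, stdev

TRADING_DAYS = 252  # assumed annualization factor


def net_returns(gross, turnover, cost_bps=5.0):
    """Subtract proportional costs: turnover (as a fraction of the book) times cost per unit."""
    cost = cost_bps / 10_000
    return [g - t * cost for g, t in zip(gross, turnover)]


def annualized_return(daily):
    """Compound the daily returns, then scale to a one-year horizon."""
    growth = math.prod(1 + r for r in daily)
    return growth ** (TRADING_DAYS / len(daily)) - 1


def sharpe(daily):
    """Annualized Sharpe ratio, assuming a zero risk-free rate."""
    return mean(daily) / stdev(daily) * math.sqrt(TRADING_DAYS)


def max_drawdown(daily):
    """Largest peak-to-trough loss of the equity curve, as a positive fraction."""
    equity = peak = 1.0
    worst = 0.0
    for r in daily:
        equity *= 1 + r
        peak = max(peak, equity)
        worst = max(worst, 1 - equity / peak)
    return worst


def calmar(daily):
    """Annualized return divided by maximum drawdown."""
    return annualized_return(daily) / max_drawdown(daily)
```

With daily rebalancing, turnover is high, so even a few basis points per trade compound into a large drag. That is why a signal that looks strong at zero cost can turn negative once `cost_bps` is raised.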

A Paradigm Shift for FinLLMs?

FinDPO challenges a quiet assumption in financial NLP: that supervised fine-tuning is enough. But just as traders improve through experience and reflection, models may benefit more from learning preferences than from parroting labels.

This opens the door to preference-optimized FinLLMs in:

- Earnings call Q&A assessment

- Regulatory filing risk scoring

- Analyst tone shift detection

- High-frequency news stream filtering

Wherever nuance, ambiguity, and context matter, DPO-trained models like FinDPO could provide the edge.

Cognaptus: Automate the Present, Incubate the Future.