Pricing American options has long been the Achilles’ heel of quantitative finance, particularly in high dimensions. Unlike European options, American-style derivatives introduce a free-boundary problem due to their early exercise feature, making analytical solutions elusive and most numerical methods inefficient beyond two or three assets. But a recent paper by Jasper Rou introduces a promising technique — the Time Deep Gradient Flow (TDGF) — that sidesteps several of these barriers with a fresh take on deep learning design, optimization, and sampling.

What Makes American Options So Hard?

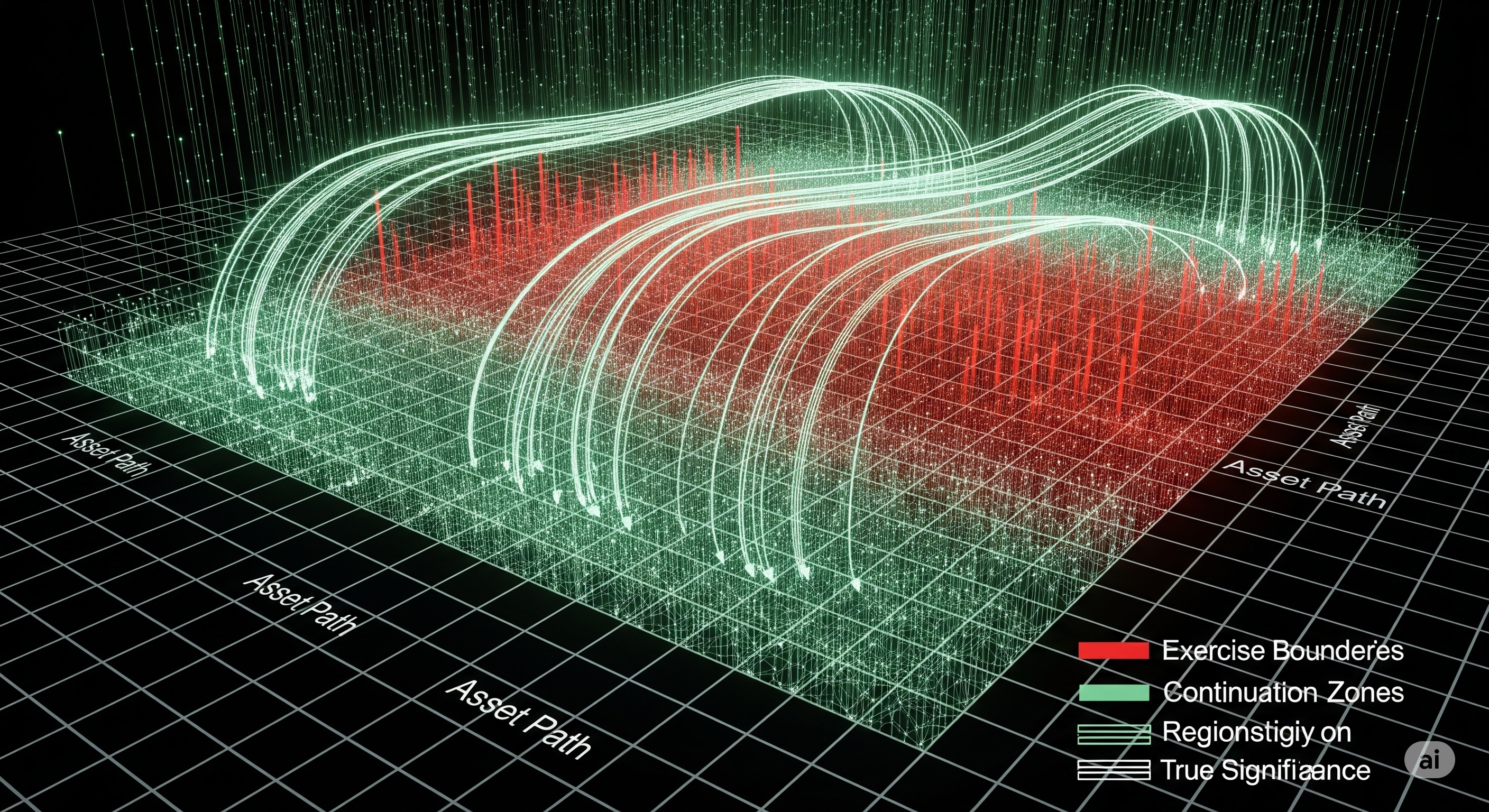

An American option allows exercise at any point before maturity, which introduces a free-boundary partial differential equation (PDE). The boundary itself — the optimal exercise region — must be discovered as part of the solution. In high dimensions, this becomes computationally brutal.
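One standard way to state this free-boundary problem is as a linear complementarity problem. The notation here is an assumption for illustration, not necessarily the paper's: $t$ is time to maturity (so the payoff is the initial condition), $\Psi$ is the payoff, and $\mathcal{L}$ is the Black–Scholes pricing operator for $d$ assets with volatilities $\sigma_i$, correlations $\rho_{ij}$, interest rate $r$, and no dividends.

$$
\begin{aligned}
&\partial_t u - \mathcal{L}u \ge 0, \qquad u \ge \Psi, \qquad (\partial_t u - \mathcal{L}u)\,(u - \Psi) = 0, \qquad u(0, x) = \Psi(x), \\
&\mathcal{L}u = \tfrac12 \sum_{i,j=1}^{d} \rho_{ij}\,\sigma_i\sigma_j\, x_i x_j\, \partial_{x_i x_j} u + r \sum_{i=1}^{d} x_i\, \partial_{x_i} u - r\,u.
\end{aligned}
$$

The continuation region is $\{u > \Psi\}$, where the PDE $\partial_t u = \mathcal{L}u$ holds with equality; the exercise region is $\{u = \Psi\}$, and the boundary separating the two is part of the unknown.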

Classic methods like Longstaff–Schwartz regression Monte Carlo can simulate early exercise strategies, but they scale poorly with dimension. Meanwhile, neural PDE solvers like the Deep Galerkin Method (DGM) work in principle, but suffer from slow training and spend effort fitting the exercise region, where the pricing PDE does not even apply.

TDGF addresses both issues with three strategic moves:

1. Reformulate the Problem: Learn the Continuation Value Only

Instead of learning the full price function, the TDGF network only models the continuation value, defined as:

$u(t, x) - \Psi(x) \quad \text{where } u(t, x) \geq \Psi(x)$

This makes sense: an American option is never worth less than its immediate payoff $\Psi$, and wherever the two coincide ($u = \Psi$) the holder should exercise rather than wait, so there is no PDE to solve. So why train the network there? TDGF trains only on the region where the continuation value is positive.

This aligns with domain knowledge — financial structure informs neural network design — and significantly reduces variance during training.
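A minimal sketch of the idea, assuming an equally weighted basket put and using plain NumPy arrays in place of a trained network. `basket_put_payoff`, `price_from_network`, and `continuation_mask` are hypothetical helpers, and writing the price as $\Psi + \max(f, 0)$ for a network output $f$ is just one way to guarantee $u \geq \Psi$ by construction, not necessarily the paper's parametrization.

```python
import numpy as np


def basket_put_payoff(x: np.ndarray, strike: float) -> np.ndarray:
    """Payoff Psi(x) = max(K - mean(x), 0) for an equally weighted basket put.

    x has shape (n_points, d): one row per sample, one column per asset.
    """
    return np.maximum(strike - x.mean(axis=1), 0.0)


def price_from_network(net_out: np.ndarray, payoff: np.ndarray) -> np.ndarray:
    """Hypothetical parametrization u = Psi + max(net_out, 0), so u >= Psi always."""
    return payoff + np.maximum(net_out, 0.0)


def continuation_mask(u: np.ndarray, payoff: np.ndarray, tol: float = 0.0) -> np.ndarray:
    """True where the continuation value u - Psi exceeds tol (the region trained on)."""
    return (u - payoff) > tol


# Three sample points for a 2-asset basket with strike 1.0
x = np.array([[0.5, 0.7], [1.2, 1.4], [0.9, 1.0]])
psi = basket_put_payoff(x, strike=1.0)
net_out = np.array([-0.1, 0.2, 0.03])  # stand-in for raw network outputs
u = price_from_network(net_out, psi)
mask = continuation_mask(u, psi)       # loss terms would be averaged over x[mask] only
print(mask)
```

The first point has zero continuation value, so it sits in the exercise region and would be dropped from the loss; the other two contribute.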

2. Time-Stepping with Energy Minimization

TDGF discretizes the time domain and solves the PDE sequentially, one step at a time. At each time step, it minimizes an energy functional composed of:

- The squared $L^2$ distance to the solution at the previous time step

- An energy term derived from the PDE operator (gradient and potential terms), weighted by the time-step size

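Unlike DGM, which minimizes the squared residual of the full free-boundary PDE over the whole space-time domain at once, TDGF solves a sequence of smaller problems, one per time step, which makes optimization more stable. Because the energy contains only first derivatives (the second-order part of the operator is moved onto the gradient term by integration by parts), the loss never needs second derivatives of the network, further reducing computational load.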
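This time step is an instance of a minimizing-movement (implicit Euler) step for a gradient flow: $u^k = \arg\min_u \tfrac12\|u - u^{k-1}\|_{L^2}^2 + h\,E[u]$. The sketch below assumes a toy one-dimensional energy $E[u] = \int \big(\tfrac{a}{2}(u')^2 + \tfrac{r}{2}u^2\big)\,dx$, whose gradient flow is $u_t = a\,u_{xx} - r\,u$, and replaces the neural network with a vector of grid values so the minimization is easy to inspect. `energy_step` and all parameter values are illustrative assumptions. The point it demonstrates: the objective uses only first differences, yet its minimizer matches an implicit Euler step for the second-order PDE.

```python
import numpy as np


def energy_step(u_prev, dx, h, a, r, lr=0.5, iters=200):
    """Approximate argmin_u 0.5*||u - u_prev||^2 + h*E[u] by gradient descent.

    Norms are discrete (sums times dx). E[u] = sum(a/2 * (du/dx)^2 + r/2 * u^2) * dx
    involves first differences only. Gradients are divided by dx so the step
    size does not depend on the grid.
    """
    u = u_prev.copy()
    for _ in range(iters):
        du = np.diff(u) / dx                 # first derivative on each grid cell
        grad_dirichlet = np.zeros_like(u)    # d/du of sum(a/2 * du^2) * dx, divided by dx
        grad_dirichlet[:-1] -= a * du / dx
        grad_dirichlet[1:] += a * du / dx
        grad = (u - u_prev) + h * (grad_dirichlet + r * u)
        u = u - lr * grad
    return u


# Toy setup: put-like initial condition on [0, 1]
x = np.linspace(0.0, 1.0, 21)
dx = x[1] - x[0]
u0 = np.maximum(0.5 - x, 0.0)
h, a, r = 0.01, 0.02, 0.05

u1 = energy_step(u0, dx, h, a, r)

# Reference: implicit Euler for u_t = a*u_xx - r*u with zero-flux boundaries
n = len(x)
D = np.diff(np.eye(n), axis=0)               # forward-difference matrix, shape (n-1, n)
A = np.eye(n) + h * (a / dx**2 * D.T @ D + r * np.eye(n))
u1_ref = np.linalg.solve(A, u0)
print(np.max(np.abs(u1 - u1_ref)))
```

In TDGF the unknowns would be network weights and the integrals would be estimated by Monte Carlo over sampled points; the grid version only isolates the structure of a single step.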

3. Smart Sampling: No More Uniform Grids

In high dimensions, uniform sampling leads to sparse edge coverage — the regions where American options exhibit the richest structure. TDGF sidesteps this by employing a box sampling strategy:

- Domain: $[0.01, 3.0]$ for each asset price under Black–Scholes; $[0.01, 2.0]$ for prices and $[0.001, 0.1]$ for variances under Heston

- Split the domain into 19 boxes

- Sample each box uniformly, with $30d$ or $60d$ points per box depending on the model

Training begins with stratified box sampling to give the network broad coverage of the domain and then switches to uniform sampling, keeping only points where the continuation value is positive.
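A rough sketch of this two-phase schedule for the Black–Scholes domain. The box geometry is an assumption: here the 19 boxes are taken to be the cubes $[l_k, l_{k+1}]^d$ obtained by splitting $[0.01, 3.0]$ into 19 equal pieces, which spreads basket averages evenly across the range; the paper's exact construction may differ. `box_samples`, `filtered_uniform_samples`, `training_points`, and the toy continuation-value function are hypothetical.

```python
import numpy as np


def box_samples(rng, d, lo=0.01, hi=3.0, n_boxes=19, per_dim=30):
    """Hypothetical box sampling: split [lo, hi] into n_boxes equal pieces and
    draw per_dim * d uniform points from each cube [l_k, l_{k+1}]^d."""
    edges = np.linspace(lo, hi, n_boxes + 1)
    n_per_box = per_dim * d
    boxes = [rng.uniform(edges[k], edges[k + 1], size=(n_per_box, d))
             for k in range(n_boxes)]
    return np.concatenate(boxes, axis=0)


def filtered_uniform_samples(rng, d, n, continuation_value, lo=0.01, hi=3.0, tol=0.0):
    """Uniform points on [lo, hi]^d, keeping only those with continuation value > tol."""
    x = rng.uniform(lo, hi, size=(n, d))
    return x[continuation_value(x) > tol]


def training_points(rng, step, switch_step, d, continuation_value, n_uniform=2000):
    """Box sampling early in training, filtered uniform sampling afterwards."""
    if step < switch_step:
        return box_samples(rng, d)
    return filtered_uniform_samples(rng, d, n_uniform, continuation_value)


def toy_continuation_value(x):
    """Hypothetical stand-in for a trained network's u - Psi for a basket put:
    positive when the basket average is above 0.8."""
    return x.mean(axis=1) - 0.8


rng = np.random.default_rng(0)
early = training_points(rng, step=0, switch_step=100, d=5,
                        continuation_value=toy_continuation_value)
late = training_points(rng, step=500, switch_step=100, d=5,
                       continuation_value=toy_continuation_value)
print(early.shape, late.shape)
```

For the Heston setting one would draw the price coordinates from $[0.01, 2.0]$ and the variance coordinates from $[0.001, 0.1]$ separately, and `per_dim=60` covers the larger per-box budget.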

This approach is particularly effective in the Heston model, where each asset's stochastic variance becomes an additional latent state variable. Here, the TDGF must learn over $2d$ dimensions (a price and a variance for each asset), yet it still outpaces DGM.
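To see where the $2d$ dimensions come from, here is one common multi-asset Heston specification, stated as an assumption (the paper's correlation structure and parameter names may differ). Each asset $S_i$ carries its own variance process $v_i$, driven by correlated Brownian motions $W_i$ and $B_i$:

$$
\begin{aligned}
dS_i(t) &= r\,S_i(t)\,dt + \sqrt{v_i(t)}\,S_i(t)\,dW_i(t), \\
dv_i(t) &= \kappa_i\big(\theta_i - v_i(t)\big)\,dt + \xi_i\sqrt{v_i(t)}\,dB_i(t), \qquad i = 1, \dots, d.
\end{aligned}
$$

The price function therefore depends on the state $x = (S_1, \dots, S_d, v_1, \dots, v_d) \in \mathbb{R}^{2d}$, with prices sampled from $[0.01, 2.0]$ and variances from $[0.001, 0.1]$.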

Benchmarking the Speed Gains

Let’s look at the numbers:

| Model | Method | Training time, d = 5 (s) | Inference time (s) |
|---|---|---|---|
| Black–Scholes | DGM | 16,174 | 0.0024 |
| Black–Scholes | TDGF | 6,583 | 0.0017 |
| Heston | DGM | 41,718 | 0.0016 |
| Heston | TDGF | 12,881 | 0.0017 |

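Not only does TDGF cut training time by more than half (about 59% under Black–Scholes and 69% under Heston) while keeping inference in the millisecond range, it also matches or beats DGM and Monte Carlo in accuracy across 2D and 5D settings.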
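The headline percentages follow directly from the training times in the table; this short check recomputes the fraction of time saved, the speedup factor, and the wall-clock hours for each model.

```python
# Training times in seconds for d = 5, copied from the benchmark table
training_time = {
    "Black-Scholes": {"DGM": 16_174, "TDGF": 6_583},
    "Heston": {"DGM": 41_718, "TDGF": 12_881},
}

reductions = {}
for model, t in training_time.items():
    reductions[model] = 1 - t["TDGF"] / t["DGM"]   # fraction of training time saved
    speedup = t["DGM"] / t["TDGF"]                  # how many times faster TDGF trains
    print(f"{model}: {reductions[model]:.1%} less time, {speedup:.2f}x faster, "
          f"{t['DGM'] / 3600:.1f} h -> {t['TDGF'] / 3600:.1f} h")
```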

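The real-world implication? Training a 5D model takes roughly 1.8 to 3.6 hours instead of 4.5 to 11.6 hours, and once trained, the network prices a high-dimensional American basket put in about two milliseconds, fast enough to deploy for real-time risk or hedging.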

Why This Matters

Incorporating financial priors into neural network design — whether via architecture, domain-filtered loss, or intelligent sampling — is a major step forward. It suggests that the path to efficient LLMs or AI agents for finance may not lie in bigger models, but better-informed ones.

Rou’s TDGF is more than a speedup; it’s a reframing of the problem space. Instead of throwing brute force at the full domain, it focuses on where decisions matter — the essence of both optimal stopping and good modeling.

Cognaptus: Automate the Present, Incubate the Future