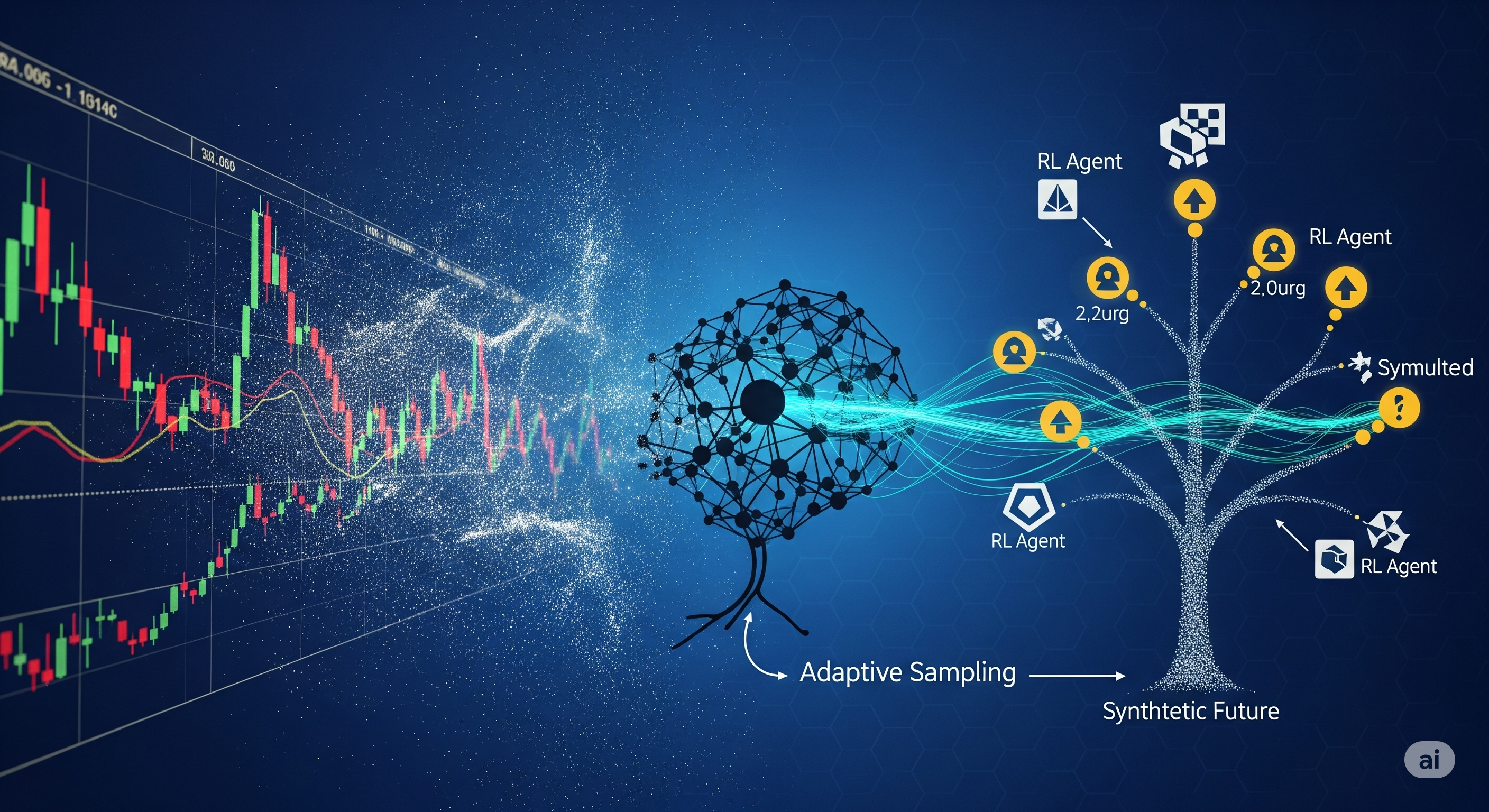

What if you could simulate thousands of realistic futures for the market, all conditioned on what’s happening today—and then train an investment strategy on those futures? That’s the central idea behind a bold new approach to portfolio optimization that blends score-based diffusion models with reinforcement learning, and it’s showing results that beat classic benchmarks like the S&P 500 and traditional Markowitz portfolios.

In a field often riddled with estimation errors and fragile assumptions, this model-free method offers a fresh way to handle dynamic portfolio selection under uncertainty.

The Classic Struggle: Dynamic, But Model-Free

Dynamic mean-variance optimization sounds appealing: rebalance portfolios over time, adapt to new information, hedge intertemporally. But the math quickly gets messy, especially when we don’t assume a specific stochastic model for returns. Market dynamics are noisy, nonstationary, and often not well-described by parametric processes like geometric Brownian motion.

That’s where generative models enter the picture. Instead of assuming a model, why not learn one from the data—and use it to simulate plausible futures?

But not just any generative model will do. TimeGANs and other adversarial approaches can be unstable and lack the ability to do conditional sampling—crucial for financial decisions that depend on recent history. This is where score-based diffusion models shine.

Score-Based Diffusion Models: Conditional Scenario Engines

Diffusion models, known for their recent dominance in image generation, are surprisingly well-suited to modeling financial time series. In this setup:

- Historical price paths are treated as samples from an unknown distribution $P$.

- A score-based diffusion model is trained to approximate this with a generative distribution $Q$, using score-matching techniques.

- The model supports conditional sampling: given the recent market state, generate future paths that conform to learned dynamics.
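To make the score-matching step above concrete, here is a minimal sketch of denoising score matching at a single noise level. It is not the authors' model: the data are one-dimensional Gaussian "returns", the score model is linear rather than a neural network, and the names `fit_linear_score`, `mu`, `s`, and `sigma` are illustrative assumptions. The Gaussian case is convenient because the true score of the noised data is known in closed form, so the fit can be checked.

```python
import numpy as np

def fit_linear_score(x0, sigma, rng):
    """Fit s(x) = a*x + b by denoising score matching at one noise level.

    Minimizes the sample average of (s(x_t) + eps / sigma)^2, which for a
    linear model is an ordinary least-squares regression.
    """
    eps = rng.standard_normal(x0.shape)
    xt = x0 + sigma * eps                  # noised samples
    target = -eps / sigma                  # denoising score matching target
    design = np.column_stack([xt, np.ones_like(xt)])
    (a, b), *_ = np.linalg.lstsq(design, target, rcond=None)
    return a, b

rng = np.random.default_rng(0)
mu, s, sigma = 0.01, 0.05, 0.1             # toy values, not calibrated to data
x0 = rng.normal(mu, s, size=200_000)       # stand-in for observed returns
a, b = fit_linear_score(x0, sigma, rng)

# For Gaussian data the noised marginal is N(mu, s^2 + sigma^2),
# so its score is -(x - mu) / (s^2 + sigma^2).
true_a = -1.0 / (s**2 + sigma**2)
true_b = mu / (s**2 + sigma**2)
print(a, true_a, b, true_b)
```

A full diffusion model repeats this idea across many noise levels with a neural network that also takes the conditioning history as input; neither is shown here.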

The key innovation is an adaptive sampling framework that treats time not as just another dimension but as a sequential, non-anticipative process. To measure closeness between real and simulated paths, the authors use the adapted Wasserstein metric (AW2), which respects the order in which information arrives and is therefore better suited to sequential data than standard measures like KL divergence or W2.

Theoretical result: If the score-matching error is small and diffusion time is long enough, the generated paths $Q$ are close to $P$ in AW2, meaning they preserve the structure needed for dynamic decision-making.
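For reference, the adapted Wasserstein distance is usually defined by restricting the couplings in the ordinary Wasserstein problem. The notation below follows the common convention in the literature and is an assumption here; the paper's own notation may differ.

$$
\mathcal{AW}_2^2(P, Q) \;=\; \inf_{\pi \in \Pi_{\mathrm{bc}}(P, Q)} \mathbb{E}_{\pi}\!\left[\sum_{t=1}^{T} \lVert X_t - Y_t \rVert^2\right]
$$

Here $X \sim P$ and $Y \sim Q$ are paths of length $T$, and $\Pi_{\mathrm{bc}}(P, Q)$ is the set of bicausal couplings: joint laws under which, given $X_1, \dots, X_t$, the values $Y_1, \dots, Y_t$ carry no extra information about the future of $X$, and symmetrically with the roles of $X$ and $Y$ swapped. Dropping that constraint recovers the ordinary $W_2$, so $\mathcal{AW}_2 \ge W_2$: two path distributions can look close in $W_2$ while still differing in what an agent can learn from the past.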

Dual Trick: Turning Mean-Variance into RL-Friendly Form

Mean-variance objectives are notoriously tricky for reinforcement learning: the variance term makes the problem time-inconsistent, so the Bellman equation does not apply directly. To fix this, the authors use a clever duality:

- They transform the problem into a quadratic hedging problem.

- The value function becomes dynamic-programmable.

- This enables the use of a policy gradient RL algorithm, trained entirely in the surrogate environment generated by the diffusion model.
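One standard way to see why such a reformulation exists is the variational form of the variance. The derivation below is a sketch under assumed notation (terminal wealth $X_T^{\pi}$ under policy $\pi$, risk-aversion $\lambda > 0$, auxiliary scalar $c$); the paper's exact duality may be stated differently.

$$
\mathrm{Var}(X) \;=\; \min_{c \in \mathbb{R}} \mathbb{E}\big[(X - c)^2\big]
$$

Substituting this into the mean-variance objective and completing the square gives

$$
\max_{\pi}\Big\{\mathbb{E}[X_T^{\pi}] - \lambda\,\mathrm{Var}(X_T^{\pi})\Big\}
\;=\; \max_{c \in \mathbb{R}} \Big\{ c + \frac{1}{4\lambda} - \lambda \min_{\pi} \mathbb{E}\Big[\big(X_T^{\pi} - c - \tfrac{1}{2\lambda}\big)^2\Big] \Big\}
$$

For a fixed $c$, the inner problem is a quadratic hedging problem with constant target $c + \tfrac{1}{2\lambda}$. It is an expected terminal cost, so it satisfies the dynamic programming principle, and only the scalar $c$ remains to be optimized on the outside.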

A simplified schematic:

| Real Data ($P$) | Diffusion Model ($Q$) | RL Agent |
|---|---|---|
| Observed paths | Simulated futures | Learns action policy via reward gradients |
| Limited samples | Unlimited conditional sampling | Uses recent state embeddings |

Results: Better Than S&P 500, Equal Weight, and Markowitz

The researchers test their pipeline on both synthetic and real-world data:

On synthetic data:

- The RL agent trained on simulated $Q$ paths achieves comparable performance to an oracle that knows the true model $P$.

- It beats both the equal-weight strategy and the Markowitz strategy with estimated parameters, especially on Sharpe ratio.
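For readers unfamiliar with the "Markowitz with estimated parameters" benchmark, the sketch below shows one common version: plug sample means and covariances into the closed-form, fully invested mean-variance solution. The function name `markowitz_weights`, the `risk_aversion` value, the budget constraint with shorting allowed, and the random placeholder data are all assumptions for illustration; the paper's benchmark may use different constraints.

```python
import numpy as np

def markowitz_weights(returns, risk_aversion=5.0):
    """Fully invested mean-variance weights from sample estimates.

    Solves  max_w  w'mu - (risk_aversion / 2) * w'Sigma w  s.t. sum(w) = 1,
    with short positions allowed.
    """
    mu = returns.mean(axis=0)
    sigma = np.cov(returns, rowvar=False)
    ones = np.ones(len(mu))
    inv_ones = np.linalg.solve(sigma, ones)      # Sigma^{-1} 1
    inv_mu = np.linalg.solve(sigma, mu)          # Sigma^{-1} mu
    min_var = inv_ones / (ones @ inv_ones)       # minimum-variance portfolio
    tilt = inv_mu - (ones @ inv_mu) * min_var    # zero-sum tilt toward high means
    return min_var + tilt / risk_aversion

# Placeholder data: 240 periods of 10 assets, NOT real returns.
rng = np.random.default_rng(1)
monthly = rng.normal(0.008, 0.05, size=(240, 10))

# Re-estimate on a rolling window (60 rows here, purely illustrative).
window = 60
weights = np.array([
    markowitz_weights(monthly[t - window:t])
    for t in range(window, len(monthly))
])
print(weights.shape, weights.sum(axis=1)[:3])
```

Even though every asset in the placeholder data has the same true mean and variance, the estimated weights swing from window to window. That is the estimation error the article refers to.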

On real data (10 Industry Portfolios from 1926–2025):

- Their Generative TD3 strategy consistently outperforms:

  - S&P 500

  - Equal Weight

  - History-based Markowitz (with rolling 60-month window)

- Across Sharpe ratio, Sortino ratio, and Calmar ratio, diffusion-trained agents lead the pack, although Equal Weight posts a slightly shallower maximum drawdown (-22.9% versus -23.2% for Gen. TD3).

| Strategy | Sharpe | Sortino | Max Drawdown |
|---|---|---|---|
| S&P 500 | 0.75 | 0.70 | -24.8% |
| Equal Weight | 0.88 | 0.83 | -22.9% |
| Hist. Markowitz | 0.52 | 0.52 | -30.1% |
| Gen. Markowitz | 0.88 | 0.85 | -26.9% |
| Gen. TD3 | 0.92 | 0.90 | -23.2% |
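The metrics in the table can be computed from a return series along the following lines. This is a sketch using one common set of conventions (monthly simple returns, annualization by 12, downside deviation measured against zero). The function name `performance_metrics` and these conventions are assumptions, and the paper may define the ratios differently, so the code is not expected to reproduce the table.

```python
import numpy as np

def performance_metrics(returns, periods_per_year=12, rf=0.0):
    """Annualized Sharpe, Sortino, max drawdown, and Calmar from simple returns.

    Assumes at least one negative excess return and one drawdown;
    otherwise Sortino or Calmar divides by zero.
    """
    returns = np.asarray(returns, dtype=float)
    excess = returns - rf / periods_per_year
    ann_mean = excess.mean() * periods_per_year
    ann_vol = excess.std(ddof=1) * np.sqrt(periods_per_year)
    # Downside deviation: root mean square of negative excess returns.
    downside = np.sqrt(np.mean(np.minimum(excess, 0.0) ** 2))
    downside *= np.sqrt(periods_per_year)
    # Wealth path starting from 1, and its running peak (including the start).
    wealth = np.cumprod(1.0 + returns)
    peak = np.maximum.accumulate(np.concatenate([[1.0], wealth]))[1:]
    max_drawdown = np.min(wealth / peak - 1.0)
    ann_return = wealth[-1] ** (periods_per_year / len(wealth)) - 1.0
    return {
        "sharpe": ann_mean / ann_vol,
        "sortino": ann_mean / downside,
        "max_drawdown": max_drawdown,
        "calmar": ann_return / abs(max_drawdown),
    }

# Toy series: up 10%, down 20%, up 5%, up 10%.
m = performance_metrics([0.10, -0.20, 0.05, 0.10])
print(m)
```

In the toy series the worst peak-to-trough loss is the single 20% drop, so `max_drawdown` is -0.2.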

Even when the model is retrained only once, midway through a 15-year test period, performance remains robust, which highlights the strength of conditional sampling.

Why This Matters

This paper brings us closer to the holy grail of realistic, dynamic, model-free portfolio optimization. Instead of choosing between fragile parametric assumptions or non-adaptive historical windows, we can now:

- Learn a simulator that generates plausible futures based on today’s state.

- Train agents that act optimally in those futures.

- Get theoretical guarantees that, when the score-matching error is small, the simulated paths stay close to the real ones in AW2, so strategies learned on them carry over to the real world.

This is a significant step toward more data-driven, adaptable, and robust investment strategies—especially as markets become increasingly complex and less amenable to clean theoretical modeling.

Cognaptus: Automate the Present, Incubate the Future.