In financial engineering, pricing exotic options often boils down to estimating one number: the discounted expected payoff under the risk-neutral measure. But what if we’re asking the wrong question?

That’s the provocative premise of a recent study by Ahmet Umur Özsoy, who reimagines option pricing as a distributional learning problem, not merely a statistical expectation problem. By combining insights from Distributional Reinforcement Learning (DistRL) with classical option theory, the paper offers a fresh solution to an old problem: how do we properly account for tail risk and payoff uncertainty in path-dependent derivatives like Asian options?

Distributional RL Meets Option Pricing

Reinforcement Learning (RL) has been increasingly explored for financial applications, but most such efforts (e.g., QLBS, deep hedging) still rely on value functions — focusing only on the mean return. In contrast, Distributional RL targets the entire distribution of returns. This richer view is particularly useful in finance, where risk measures like VaR and CVaR depend heavily on distributional tails.

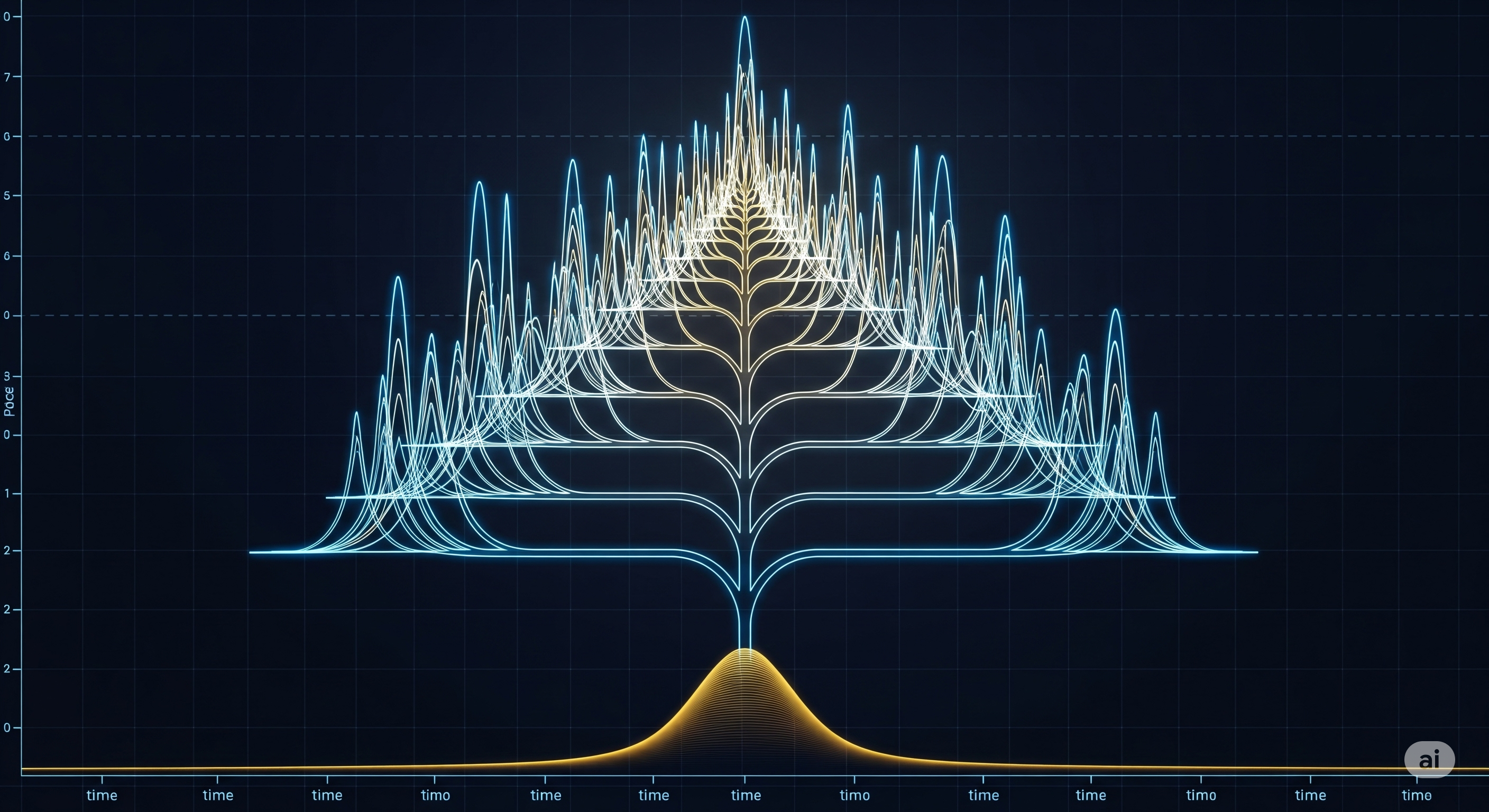

The key theoretical pivot in the paper is to reinterpret the recursive pricing of path-dependent options as a distributional Bellman equation. Instead of estimating $E[f(S_{0:T})]$, the approach seeks the full conditional law:

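$F_{Z(s_0)}(z) = P(f(S_{0:T}) \le z \mid S_0 = s_0)$

Here, $Z(s_0)$ is the random payoff as seen from the initial state, $F_{Z(s_0)}$ is its cumulative distribution function, and $f(S_{0:T})$ denotes the path-dependent payoff, such as an Asian option's payoff on the average price. The process is made Markovian via state augmentation: $s_t = (S_t, A_t, t)$, where $A_t$ is the running average.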
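As a rough illustration of the state augmentation, the sketch below simulates GBM paths and carries the running average alongside the price, then reads off empirical quantiles of a discounted arithmetic Asian call payoff. It is a plain Monte Carlo baseline, not the paper's learning method. The function name `simulate_augmented_paths`, the parameter values, the strike, and the choice to start averaging at the first step after $t = 0$ are all assumptions made for illustration.

```python
import numpy as np

def simulate_augmented_paths(s0, r, sigma, T, n_steps, n_paths, seed=0):
    """Simulate risk-neutral GBM and record the augmented state (S_t, A_t, t)."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    S = np.full(n_paths, float(s0))
    A = np.zeros(n_paths)  # running average over monitoring dates (assumed to exclude t=0)
    states = []
    for k in range(1, n_steps + 1):
        z = rng.standard_normal(n_paths)
        S = S * np.exp((r - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * z)
        A = A + (S - A) / k  # incremental mean: A_k = A_{k-1} + (S_k - A_{k-1}) / k
        states.append(np.stack([S, A, np.full(n_paths, k * dt)], axis=1))
    return np.stack(states, axis=1)  # shape (n_paths, n_steps, 3)

# Hypothetical parameters for an at-the-money arithmetic Asian call
r, T, K = 0.05, 1.0, 100.0
states = simulate_augmented_paths(s0=100.0, r=r, sigma=0.2, T=T, n_steps=50, n_paths=10000)
payoff = np.exp(-r * T) * np.maximum(states[:, -1, 1] - K, 0.0)

taus = np.array([0.5, 0.9, 0.99])
quantiles = np.quantile(payoff, taus)
print("mean (classical price estimate):", payoff.mean())
print("payoff quantiles at", taus, ":", quantiles)
```

Because $A_t$ is part of the state, the terminal payoff depends only on the final augmented state, which is what lets a recursion over states replace a recursion over whole paths.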

No Agents, Just Distributions

Unlike standard RL settings, there’s no decision-making agent in this setup. Instead, the stochastic evolution of asset prices is modeled (e.g., via geometric Brownian motion), and the distributional Bellman operator governs how payoff distributions evolve over time. This policy-free formulation makes DistRL surprisingly well-suited for financial modeling:

“The system evolves under a stochastic process… and no control policy is applied. The value distribution Z(s) reflects the uncertainty induced by stochastic dynamics and path-dependent payoffs.”

This formulation captures both temporal dynamics and uncertainty recursively — effectively a form of distributional dynamic programming under risk-neutral dynamics.
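One way to write the policy-free recursion described above is sketched here. It assumes a constant risk-free rate $r$ and a uniform time step $\Delta t$, neither of which is spelled out in this summary, so the paper's exact form may differ:

$Z(s_t) \overset{D}{=} e^{-r \Delta t} \, Z(s_{t+1}), \qquad s_{t+1} \sim P(\cdot \mid s_t)$

$Z(s_T) = f(S_{0:T})$

Under these assumptions, taking expectations on both sides and unrolling the recursion recovers the classical price, $E[Z(s_0)] = e^{-rT} E[f(S_{0:T}) \mid S_0 = s_0]$, so the mean is kept and the rest of the distribution comes along with it.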

Quantile Regression with a Financial Twist

Rather than relying on deep neural nets, the paper uses Radial Basis Function (RBF) features to approximate quantile functions:

- Each quantile $\theta_i(s)$ is modeled as $w_i^\top \phi(s)$

- $\phi(s)$ is a vector of RBF features over the normalized state $s$

- Updates are made via quantile regression loss (pinball loss), using semi-gradient TD learning
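The three ingredients in the list above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's implementation: the helper names (`rbf_features`, `pinball_loss`, `quantile_td_update`), the Gaussian form of the RBFs, the random centers, the learning rate, and the use of normally distributed stand-in targets at a single state are all hypothetical.

```python
import numpy as np

def rbf_features(s, centers, width):
    """Gaussian RBF features phi(s) for a normalized state vector s."""
    d2 = np.sum((centers - s) ** 2, axis=1)
    return np.exp(-d2 / (2.0 * width**2))

def pinball_loss(u, tau):
    """Quantile (pinball) loss for the residual u = target - prediction."""
    return np.where(u >= 0, tau * u, (tau - 1.0) * u)

def quantile_td_update(W, phi_s, targets, taus, lr):
    """One semi-gradient step on the pinball loss.

    W has shape (n_quantiles, n_features) and theta_i(s) = W[i] @ phi_s.
    targets are samples of the target distribution, treated as constants
    (no gradient flows through them, hence "semi-gradient").
    """
    theta = W @ phi_s                       # current quantile estimates
    u = targets[None, :] - theta[:, None]   # residuals, shape (n_quantiles, n_targets)
    # Negative subgradient of the pinball loss w.r.t. theta_i, averaged over targets
    direction = np.mean(taus[:, None] - (u < 0), axis=1)
    return W + lr * direction[:, None] * phi_s[None, :]

rng = np.random.default_rng(0)
centers = rng.uniform(0.0, 1.0, size=(10, 3))  # hypothetical centers over (S, A, t)
taus = (np.arange(5) + 0.5) / 5                # quantile levels 0.1, 0.3, ..., 0.9
W = np.zeros((5, 10))
s = np.array([0.5, 0.5, 0.5])                  # one normalized state
phi = rbf_features(s, centers, width=0.5)

for _ in range(5000):
    # In a full TD scheme, non-terminal targets would be discounted next-state
    # quantiles; here samples from N(1, 0.5^2) stand in for them.
    targets = rng.normal(1.0, 0.5, size=32)
    W = quantile_td_update(W, phi, targets, taus, lr=0.01)

theta_hat = W @ phi
print("learned quantiles:", theta_hat)
```

Since each $\theta_i(s)$ is linear in the weights, the update is a scaled copy of the feature vector, which is part of what keeps this approach cheap to run on a CPU.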

This design choice is both elegant and practical:

| Feature | Deep Network Approach | RBF Quantile Approach (this paper) |
|---|---|---|
| Interpretability | Low | High |
| Stability | Sensitive to hyperparameters | More stable |
| Computation | GPU-heavy | Lightweight, CPU-friendly |
| Domain Alignment | Generic | Tailored to financial features |

The result is a framework that is interpretable, efficient, and aligned with domain knowledge — crucial in high-stakes financial applications.

Clipping, Tail Risk, and Honest Failures

One of the paper’s standout qualities is its honest exploration of failure cases. In deep out-of-the-money settings, for instance, the payoff distribution becomes sharply imbalanced — dominated by zeros with a few large payoffs. Learning meaningful quantiles in such sparse regimes becomes extremely hard. The author flags this with numerical experiments, emphasizing:

- The importance of clipping payoffs to prevent gradient explosions

- The dangers of misaligned clipping between training and evaluation

- The brittleness of learning in tail-heavy distributions
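To see why the out-of-the-money regime is awkward, the sketch below simulates an arithmetic Asian call with a strike well above spot and compares the payoff before and after clipping. The parameters, the strike of 130, and the clipping level `cap` are hypothetical choices for illustration, and the simulation is ordinary Monte Carlo under GBM, not the paper's experiment.

```python
import numpy as np

rng = np.random.default_rng(0)
s0, K, r, sigma, T = 100.0, 130.0, 0.05, 0.2, 1.0   # strike far above spot
n_steps, n_paths = 50, 50000
dt = T / n_steps

# Risk-neutral GBM paths on a log scale, then the arithmetic average per path
z = rng.standard_normal((n_paths, n_steps))
increments = (r - 0.5 * sigma**2) * dt + sigma * np.sqrt(dt) * z
log_paths = np.log(s0) + np.cumsum(increments, axis=1)
avg = np.exp(log_paths).mean(axis=1)
payoff = np.exp(-r * T) * np.maximum(avg - K, 0.0)

frac_zero = np.mean(payoff == 0.0)
cap = 5.0                                # hypothetical clipping level
clipped = np.minimum(payoff, cap)

print("fraction of zero payoffs:", frac_zero)
print("quantiles at 0.5 / 0.9 / 0.99:", np.quantile(payoff, [0.5, 0.9, 0.99]))
print("mean unclipped vs clipped:", payoff.mean(), clipped.mean())
```

Most quantile levels sit exactly at zero, so only the top few carry any signal. The gap between the two means also shows what misaligned clipping costs: a model trained on clipped targets and then scored against unclipped payoffs is off by that amount before any learning error is counted.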

This attention to practical stability concerns sets the work apart from typical RL finance papers that chase accuracy on synthetic datasets without addressing robustness.

Why This Matters

The payoff isn’t just a smoother price curve; it’s a deeper model of financial uncertainty. In principle, DistRL lets you:

- Price options with real-time risk metrics (e.g., quantiles, VaR)

- Calibrate to market-implied quantiles via gradient methods

- Extend to American or exotic options with more complex payoffs

- Use model-free learning with state embeddings engineered from domain insights

In contrast to fitting a distribution at each timestep (which ignores time dynamics), DistRL learns a state-conditioned quantile function recursively — preserving both temporal structure and distributional richness.
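To make the risk-metric point concrete, here is a small sketch of how VaR and CVaR could be read off a vector of learned quantiles. The helper `var_cvar_from_quantiles` is hypothetical, it targets the lower tail of the payoff distribution, and it assumes evenly spaced midpoint quantile levels. The "learned" quantiles are replaced by empirical quantiles of normal samples so the block runs on its own.

```python
import numpy as np

def var_cvar_from_quantiles(theta, taus, alpha):
    """Approximate lower-tail VaR and CVaR at level alpha from quantile estimates.

    theta[i] estimates the taus[i]-quantile; taus must be increasing.
    """
    var = np.interp(alpha, taus, theta)        # interpolate the quantile function at alpha
    tail = theta[taus <= alpha]                # quantiles lying in the lower tail
    cvar = tail.mean() if tail.size else var   # midpoint-rule average over the tail
    return var, cvar

rng = np.random.default_rng(0)
samples = rng.normal(0.0, 1.0, size=100000)    # stand-in for a payoff distribution
taus = (np.arange(100) + 0.5) / 100            # midpoints 0.005, 0.015, ..., 0.995
theta = np.quantile(samples, taus)             # plays the role of learned quantiles

var05, cvar05 = var_cvar_from_quantiles(theta, taus, alpha=0.05)
print("VaR(5%):", var05, "CVaR(5%):", cvar05)
```

The CVaR figure is only as fine as the quantile grid: with few levels in the tail the average is coarse, which ties back to the sparse-tail difficulties noted earlier.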

Final Thoughts: A Missing Link?

Özsoy’s framework is not just a clever adaptation — it feels like a missing link between the deep RL crowd and the financial quant community. By making Bellman recursion work on measures instead of expectations, it opens a path to robust, interpretable, and distribution-aware financial AI.

“In our framework, the system learns quantiles of payoff distributions recursively. This grants full access to risk-relevant information, with minimal assumptions on distribution shape.”

This could prove especially powerful in data-scarce but risk-sensitive settings — the exact conditions in which traditional ML approaches falter.
