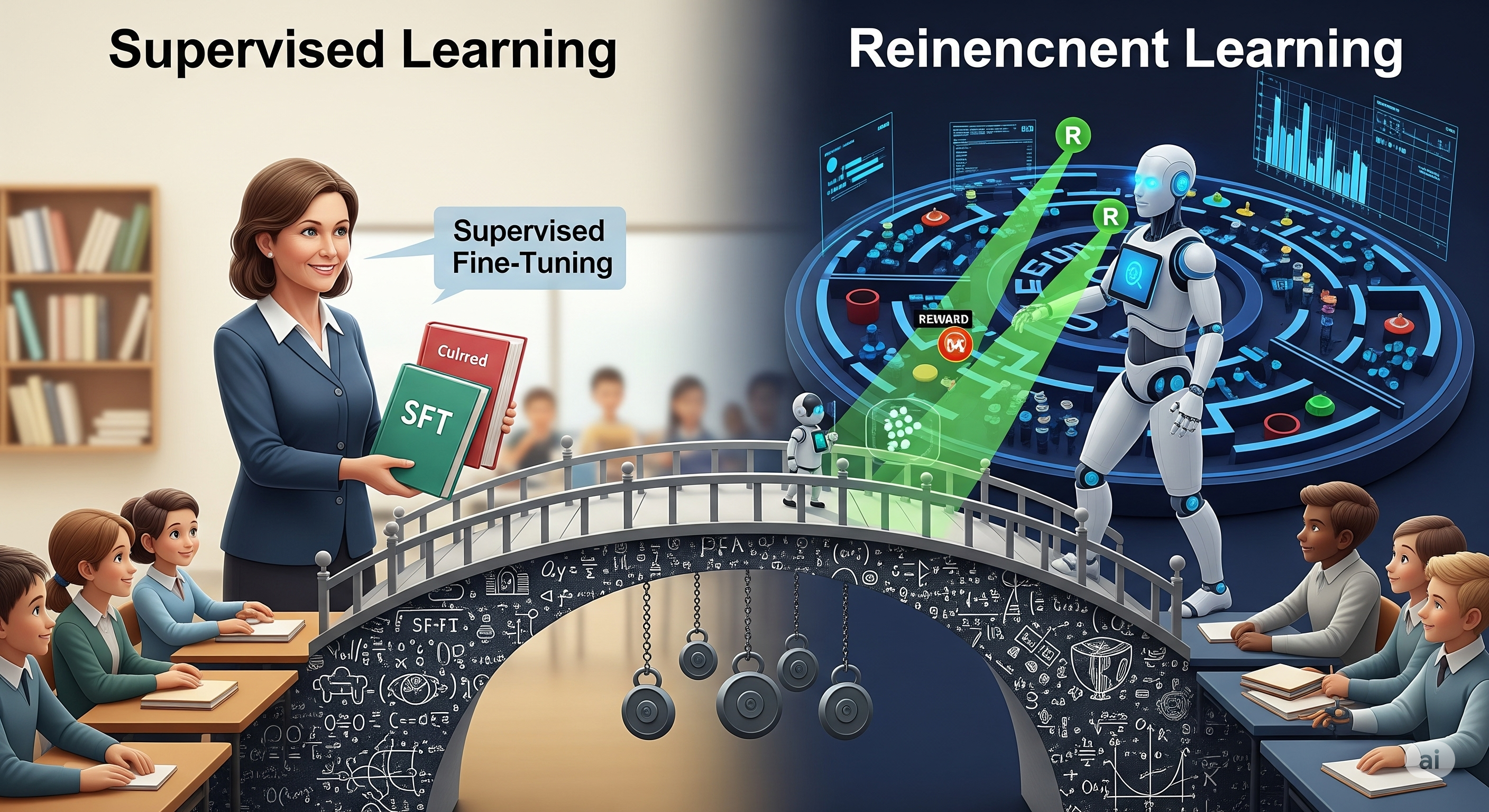

In the arms race to align large language models (LLMs), supervised fine-tuning (SFT) and reinforcement learning (RL) are often painted as competing paradigms. SFT is praised for its stability and simplicity; RL is heralded for its theoretical soundness and alignment fidelity. But what if this dichotomy is an illusion? A recent preprint from Chongli Qin and Jost Tobias Springenberg makes a bold and elegant claim: SFT on curated data is not merely supervised learning—it is actually optimizing a lower bound on the RL objective.

More strikingly, this perspective leads to a practical upgrade: importance-weighted SFT (iw-SFT). This small change not only bridges the theoretical gap to RL but also leads to state-of-the-art results on key reasoning benchmarks, with no extra inference compute or reward model.

SFT as Reinforcement Learning Under the Hood

At its core, RL seeks to maximize expected return over trajectories:

$$ J(\theta) = \mathbb{E}_{\tau \sim p(\tau; \theta)} [R(\tau)] $$

SFT, by contrast, maximizes the log-likelihood of trajectories from a filtered dataset $\mathcal{D}^+$:

$$ J_{\text{SFT}}(\theta) = \mathbb{E}_{\tau \in \mathcal{D}^+} [\log p(\tau; \theta)] $$

But if we interpret the filtering process as inducing a sparse binary reward (1 for selected data, 0 otherwise), then SFT emerges as optimizing a lower bound on the RL objective. The bound comes from a classic inequality:

$$ x \geq 1 + \log x $$

Applied to the importance-weighted return, this inequality shows that SFT is a special case of reward-weighted regression, long known in the RL literature. The problem? As training progresses, the model diverges from the reference distribution used for filtering, making the bound increasingly loose.
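The step from the inequality to SFT can be written out explicitly. This is a sketch under assumed notation: $\pi_{\text{ref}}$ (a symbol not used in the text above) stands for the reference distribution that produced the unfiltered data, and $R(\tau) \in \{0, 1\}$ is the filter's verdict. Rewriting the RL objective as an expectation under $\pi_{\text{ref}}$ and applying $x \geq 1 + \log x$ with $x = p(\tau; \theta) / \pi_{\text{ref}}(\tau)$ gives, since $R(\tau) \geq 0$:

$$ J(\theta) = \mathbb{E}_{\tau \sim \pi_{\text{ref}}} \left[ \frac{p(\tau; \theta)}{\pi_{\text{ref}}(\tau)} R(\tau) \right] \geq \mathbb{E}_{\tau \sim \pi_{\text{ref}}} \left[ \left( 1 + \log \frac{p(\tau; \theta)}{\pi_{\text{ref}}(\tau)} \right) R(\tau) \right] = \mathbb{E}_{\tau \sim \pi_{\text{ref}}} [R(\tau) \log p(\tau; \theta)] + \text{const} $$

The constant collects every term that does not depend on $\theta$. With a binary reward, only trajectories in $\mathcal{D}^+$ contribute to the remaining expectation, so it is proportional to the SFT objective:

$$ \mathbb{E}_{\tau \sim \pi_{\text{ref}}} [R(\tau) \log p(\tau; \theta)] = \Pr(R = 1) \cdot \mathbb{E}_{\tau \in \mathcal{D}^+} [\log p(\tau; \theta)] \propto J_{\text{SFT}}(\theta) $$

Equality in $x \geq 1 + \log x$ holds only at $x = 1$, which is why the bound loosens as $p(\tau; \theta)$ moves away from $\pi_{\text{ref}}(\tau)$.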

Tightening the Bound with iw-SFT and SFT(Q)

To close the gap between SFT and RL, the authors propose two practical extensions:

| Method | Key Idea | Formula Sketch |
|---|---|---|
| SFT(Q) | Sample data proportional to quality score | $J_{\text{SFT(Q)}} = \mathbb{E}_{\tau \in \mathcal{D}^Q} [Q(\tau) \log p(\tau; \theta)]$ |
| iw-SFT | Reweight log-likelihood by importance ratio | $J_{\text{iw-SFT}} = \mathbb{E}_{\tau \in \mathcal{D}^+} [w(\tau) \log p(\tau; \theta)]$ |

These variants require minimal changes:

- No new loss function.

- No extra training signal.

- Just importance weights derived from the ratio between model and reference policy probabilities.

Even better: iw-SFT can use the same base model as both the policy and the reference distribution (with a slight lag).
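To make the reweighting concrete, here is a minimal, framework-free sketch of an importance-weighted SFT loss. It is an illustration under stated assumptions, not the authors' implementation: the function names, the choice to form the weight as the model-to-reference probability ratio on whole sequences, and the clipping range are all hypothetical. In a real training loop the weight would be computed without gradients (for example from a lagged copy of the model) and the log-probabilities would come from the networks themselves.

```python
import math


def sequence_logprob(token_logprobs):
    """Log-probability of a whole trajectory: the sum of its per-token log-probs."""
    return sum(token_logprobs)


def iw_sft_loss(policy_token_logprobs, ref_token_logprobs, clip=(0.0, 5.0)):
    """Importance-weighted negative log-likelihood over a batch of curated trajectories.

    policy_token_logprobs: per-token log-probs of each trajectory under the model
    ref_token_logprobs:    the same tokens scored by the reference model
    clip:                  assumed (hypothetical) bounds that keep weights from exploding
    """
    total = 0.0
    for pol, ref in zip(policy_token_logprobs, ref_token_logprobs):
        logp = sequence_logprob(pol)
        logq = sequence_logprob(ref)
        # Importance weight w(tau) = p_model(tau) / p_ref(tau), computed in log space.
        # Assumption: with autodiff, this weight is held constant (no gradient).
        w = math.exp(logp - logq)
        w = min(max(w, clip[0]), clip[1])
        # Weighted version of the usual SFT term -log p(tau; theta).
        total += -w * logp
    return total / len(policy_token_logprobs)


# When model and reference agree, every weight is 1 and the loss is plain SFT.
batch = [[-0.1, -0.2], [-0.5]]
plain_sft = iw_sft_loss(batch, batch)
```

With identical model and reference scores the loss reduces to the ordinary mean negative log-likelihood, which matches the reading that plain SFT is the special case with all weights equal to one.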

Results That Speak Louder Than Compute

On the AIME 2024 benchmark, a tough mathematical reasoning test for LLMs, iw-SFT achieves 66.7%, 10 points above the s1.1 SFT baseline (56.7%). On GPQA Diamond, a benchmark of graduate-level science questions, it reaches 64.1%, competitive with much heavier inference-time tricks like budget forcing.

| Model | AIME 2024 (%) | MATH500 (%) | GPQA Diamond (%) |
|---|---|---|---|
| s1.1 (SFT) | 56.7 | 95.4 | 63.6 |
| iw-SFT | 66.7 | 94.8 | 64.1 |
| DeepSeek-R1 | 79.8 | 97.3 | 71.5 |
| Qwen2.5-32B | 26.7 | 84.0 | 49.0 |

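While DeepSeek-R1 still leads on all three benchmarks, iw-SFT sets a new high watermark for open models trained on open data, without the cost of custom reward modeling or test-time manipulation. Note that the gain is concentrated on AIME 2024: MATH500 dips slightly (94.8 vs. 95.4) and GPQA Diamond improves by only half a point.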

Implications for Enterprise LLMs

This paper is more than an optimization trick. It invites a rethinking of the entire post-training pipeline:

- Curated datasets are latent reward functions. Filtering acts as binary reward assignment.

- Fine-tuning is policy optimization. We should treat the process as RL with known returns.

- No reward model? No problem. Importance weights offer a reward-aware alternative to RLHF.
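The first point in the list above can be checked numerically. The sketch below uses made-up log-probabilities and filter decisions (all values and function names are hypothetical) to show that a reward-weighted log-likelihood with a 0/1 reward equals the SFT log-likelihood on the curated subset, scaled by the acceptance rate.

```python
def reward_weighted_loglik(logprobs, rewards):
    """Average of R(tau) * log p(tau; theta) over all sampled trajectories."""
    return sum(r * lp for r, lp in zip(rewards, logprobs)) / len(logprobs)


def sft_loglik(logprobs, keep):
    """Average log-likelihood over the curated subset D+ only."""
    kept = [lp for lp, k in zip(logprobs, keep) if k]
    return sum(kept) / len(kept)


# Hypothetical log p(tau; theta) for five sampled trajectories,
# plus the curation decision made for each one.
logprobs = [-2.0, -0.5, -3.0, -1.0, -4.0]
keep = [True, False, True, True, False]

# Filtering read as a sparse binary reward: 1 if kept, 0 otherwise.
rewards = [1.0 if k else 0.0 for k in keep]

accept_rate = sum(rewards) / len(rewards)
lhs = reward_weighted_loglik(logprobs, rewards)
rhs = accept_rate * sft_loglik(logprobs, keep)

# The two quantities agree up to floating-point error.
print(abs(lhs - rhs) < 1e-12)
```

Because the acceptance rate is a positive constant, maximizing either quantity over the model parameters selects the same solution, which is the sense in which filtering plus SFT behaves like reward-weighted training.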

For AI-driven enterprises building vertical LLMs in finance, law, or healthcare, this matters. It suggests that even without RL infrastructure, we can unlock RL-like gains with simple tweaks.

A Final Thought: Recovering Value from Failures

Perhaps the most intriguing insight comes from their toy example: when training on only successful examples, SFT may miss the signal that comes from failures. iw-SFT can “recover” this lost information via adaptive reweighting—effectively learning not just from what worked, but from what almost worked.

In a field obsessed with ever-larger models and ever-longer prompts, this paper is a reminder that clever math and smart weighting can punch well above their compute budget.

Cognaptus: Automate the Present, Incubate the Future