The latest research out of Heriot-Watt University doesn’t just challenge the notion that bigger is better; it quietly dismantles it. In their newly released Athena framework, Nripesh Niketan and Hadj Batatia show that an LLM pipeline with access to external APIs can outperform standalone models such as GPT-4o and LLaMA-Large on math and science questions.

And they didn’t just beat them — they lapped them.

Why GPT-4o Still Fumbles Math

Ask GPT-4o to solve a college-level math problem, and it might hallucinate steps or slip on basic arithmetic. The reason? LLMs, however large, are not calculators. They’re probabilistic next-token predictors trained on patterns, not deterministic reasoners.

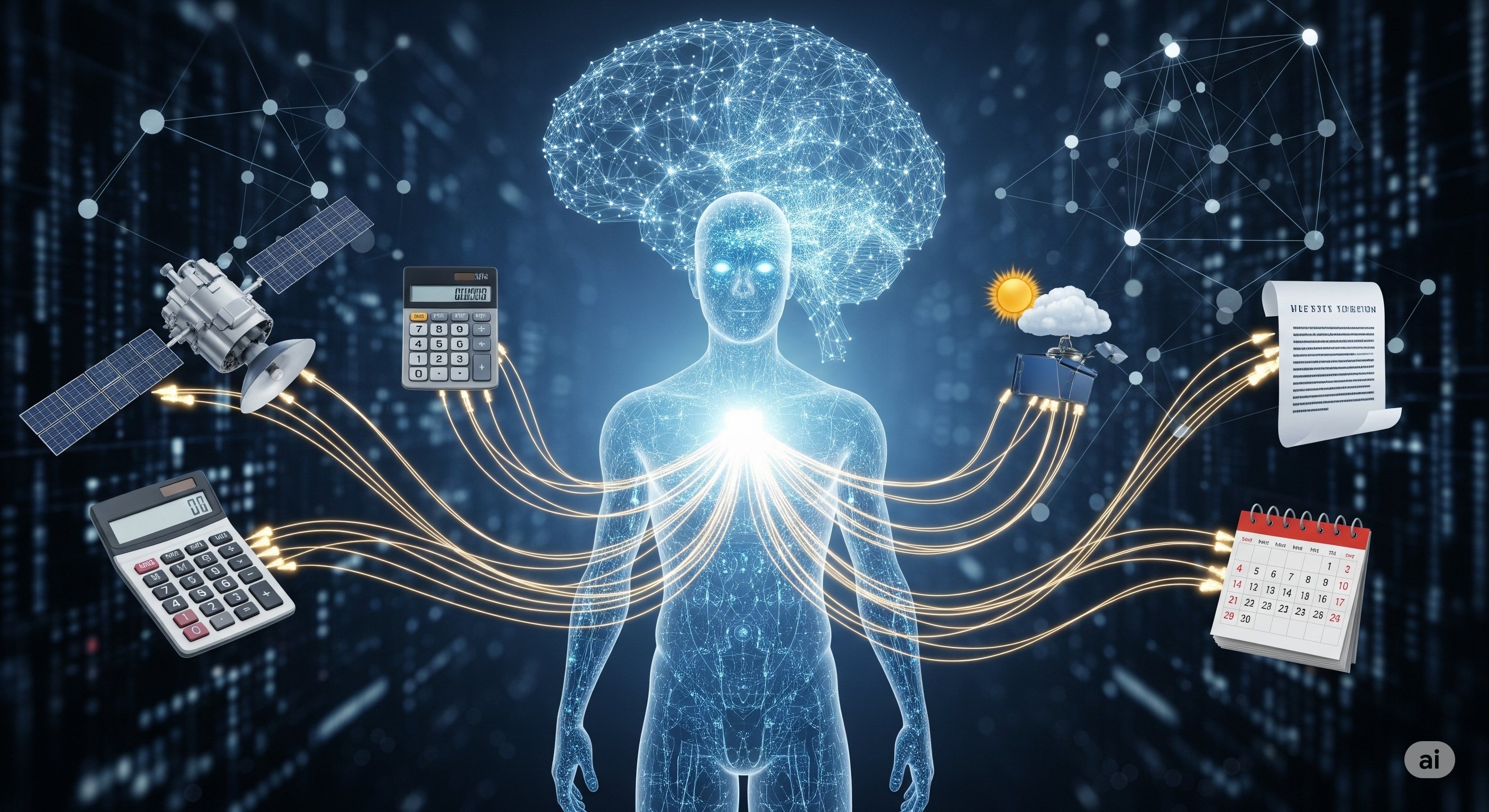

What Athena shows is that the answer isn’t more training; it’s modularity. By giving the model access to tools like Wolfram Alpha, arXiv search, and Google Calendar, Athena turns a linguistic engine into a hybrid reasoning system: the LLM decides what to ask, and the tool does the computing. It’s the difference between guessing and computing.
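To make “computing, not guessing” concrete, here is a minimal Python sketch of the kind of deterministic backend a tool call can hand work to. It is a small exact-arithmetic evaluator standing in for a service like Wolfram Alpha; the `compute` function is hypothetical and not part of Athena. Instead of predicting digits, it parses the expression and evaluates it with exact fractions, so `0.1 + 0.2` comes out as exactly 3/10.

```python
import ast
import operator
from fractions import Fraction

# Allowed operators, mapped to exact arithmetic on Fraction values.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def compute(expression: str) -> Fraction:
    """Evaluate a plain arithmetic expression exactly (hypothetical tool backend).

    The expression is parsed into an AST and walked node by node, so only
    numbers and the operators above are accepted; anything else is rejected.
    """

    def walk(node: ast.AST) -> Fraction:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            # Going through str() keeps decimal literals exact: "0.1" -> 1/10.
            return Fraction(str(node.value))
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported syntax: {type(node).__name__}")

    return walk(ast.parse(expression, mode="eval"))


print(compute("0.1 + 0.2"))          # → 3/10
print(compute("(17 * 23) - 4 / 8"))  # → 781/2
```

Walking the AST rather than calling `eval` means function calls, attribute access, and names are rejected outright, which is the behavior you want from a tool that receives model-generated input.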

The Architecture That Makes It Work

Athena uses a clean, pluggable structure. Here’s how it flows:

| Component | Purpose |
|---|---|
| ExternalServiceIntegrator | Manages tool schemas via Pydantic-like interfaces |
| RunMonitoring | Analyzes user input and decides if tool use is needed |
| HandleRequiredAction | Extracts parameters, formats queries, invokes the API |
| UpdateMessage | Injects API result back into LLM conversation context |
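Read top to bottom, the table describes a loop: register tools, decide whether one is needed, call it, and feed the result back. Below is a minimal, self-contained Python sketch of that loop under loud assumptions: the class and function names mirror the table but are hypothetical, a regex stands in for the LLM’s own tool-selection and parameter-extraction steps, a plain dataclass stands in for a Pydantic schema, and the final LLM call that reads the updated context is omitted. It is an illustration of the flow, not Athena’s implementation.

```python
from __future__ import annotations

import operator
import re
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ToolSpec:
    """Schema for one external service (a stand-in for a Pydantic model)."""
    name: str
    pattern: str              # regex with one named group per parameter
    params: dict[str, type]   # parameter name -> type used to coerce it
    fn: Callable[..., str]


@dataclass
class ExternalServiceIntegrator:
    """Registry of available tools, keyed by name."""
    tools: dict[str, ToolSpec] = field(default_factory=dict)

    def register(self, spec: ToolSpec) -> None:
        self.tools[spec.name] = spec


def run_monitoring(integrator: ExternalServiceIntegrator, user_input: str) -> Optional[ToolSpec]:
    """Decide whether a tool is needed (heuristic stand-in for the LLM's choice)."""
    for spec in integrator.tools.values():
        if re.search(spec.pattern, user_input):
            return spec
    return None


def handle_required_action(spec: ToolSpec, user_input: str) -> str:
    """Extract parameters, coerce them to the schema's types, and invoke the tool."""
    match = re.search(spec.pattern, user_input)
    if match is None:
        raise ValueError(f"no parameters for {spec.name!r} in input")
    raw = match.groupdict()
    args = {name: cast(raw[name]) for name, cast in spec.params.items()}
    return spec.fn(**args)


def update_message(messages: list[dict], tool_name: str, result: str) -> None:
    """Inject the tool result back into the conversation context."""
    messages.append({"role": "tool", "name": tool_name, "content": result})


def answer_with_tools(integrator: ExternalServiceIntegrator, user_input: str) -> list[dict]:
    messages = [{"role": "user", "content": user_input}]
    spec = run_monitoring(integrator, user_input)
    if spec is not None:
        update_message(messages, spec.name, handle_required_action(spec, user_input))
    # A real pipeline would now send `messages` back to the LLM for the final reply.
    return messages


_OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(a: int, op: str, b: int) -> str:
    """Toy tool: arithmetic on two integer operands."""
    return str(_OPS[op](a, b))


integrator = ExternalServiceIntegrator()
integrator.register(ToolSpec(
    name="calculator",
    pattern=r"(?P<a>\d+)\s*(?P<op>[-+*/])\s*(?P<b>\d+)",
    params={"a": int, "op": str, "b": int},
    fn=calculator,
))
print(answer_with_tools(integrator, "What is 1234 * 5678?")[-1]["content"])  # → 7006652
```

Keeping each tool’s schema and extraction rule on its `ToolSpec` is what makes the loop pluggable: adding a service means registering one more spec, with no change to the monitoring, invocation, or message-update steps.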

It’s all orchestrated through LangChain, sitting atop Unify, a platform aggregating open-source LLMs behind a common API. This lets the team swap base models or tools without rewriting the pipeline.

In essence: LangChain as the conductor, APIs as instruments, and the LLM as the soloist.
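The payoff of a common API is that the pipeline depends on one interface rather than on any particular model vendor. Here is a minimal Python sketch of that design choice, assuming nothing about Unify’s or LangChain’s actual APIs: `ChatModel`, the stub backends, and the `BACKENDS` registry are all hypothetical placeholders for real provider clients.

```python
from __future__ import annotations

from typing import Callable, Protocol


class ChatModel(Protocol):
    """The only method the pipeline relies on; any backend exposing it fits."""

    def complete(self, prompt: str) -> str: ...


class StubModelA:
    def complete(self, prompt: str) -> str:
        return f"[model-a] {prompt}"


class StubModelB:
    def complete(self, prompt: str) -> str:
        return f"[model-b] {prompt}"


# Hypothetical registry: real entries would wrap provider clients
# behind the same `complete` method.
BACKENDS: dict[str, Callable[[], ChatModel]] = {
    "model-a": StubModelA,
    "model-b": StubModelB,
}


def build_pipeline(backend: str) -> Callable[[str], str]:
    """Return a question-answering function bound to the chosen backend."""
    model = BACKENDS[backend]()

    def pipeline(question: str) -> str:
        return model.complete(f"Answer concisely: {question}")

    return pipeline


# Swapping models is a one-string change; the pipeline code is untouched.
print(build_pipeline("model-a")("What is 2 + 2?"))
print(build_pipeline("model-b")("What is 2 + 2?"))
```

Because `ChatModel` is a structural `Protocol`, a backend only has to provide a matching `complete` method; it never needs to inherit from a shared base class.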

Benchmarking Against the Giants

Using math and science questions from the MMLU dataset, the team ran Athena head-to-head against several widely used models:

📊 Math Accuracy

| Model | Accuracy |
|---|---|
| GPT-3.5 | 36% |
| GPT-4o | 53% |
| LLaMA-Large | 67% |
| Athena | 83% |

🧪 Science Accuracy

| Model | Accuracy |
|---|---|
| GPT-3.5 | 56% |
| GPT-4o | 77% |
| LLaMA-Large | 79% |
| Athena | 88% |

These are not marginal gains: Athena leads the strongest baseline, LLaMA-Large, by 16 points in math and 9 points in science. They’re architecture-level wins that parameter tuning alone is unlikely to match.
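Percentage points can understate a change at high accuracy, so it helps to also read the results as error rates. This short Python snippet uses only the accuracies reported in the two tables and computes Athena’s lead over the strongest baseline, both in points and as a relative reduction in error rate (error rate taken as 100 minus accuracy). The post doesn’t state how many questions were scored, so these figures say nothing about statistical significance.

```python
from __future__ import annotations

# Accuracies (%) as reported in the math and science tables.
RESULTS = {
    "Math": {"GPT-3.5": 36, "GPT-4o": 53, "LLaMA-Large": 67, "Athena": 83},
    "Science": {"GPT-3.5": 56, "GPT-4o": 77, "LLaMA-Large": 79, "Athena": 88},
}


def lead_over_best_baseline(scores: dict[str, int], system: str = "Athena") -> tuple[str, int, float]:
    """Return (best baseline, point gap, relative error reduction)."""
    baselines = {name: acc for name, acc in scores.items() if name != system}
    best = max(baselines, key=baselines.get)
    gap = scores[system] - baselines[best]
    # Error rate = 100 - accuracy; the gap is the share of that error removed.
    reduction = gap / (100 - baselines[best])
    return best, gap, reduction


for domain, scores in RESULTS.items():
    best, gap, reduction = lead_over_best_baseline(scores)
    print(f"{domain}: +{gap} points over {best}, {reduction:.0%} fewer errors")
# → Math: +16 points over LLaMA-Large, 48% fewer errors
# → Science: +9 points over LLaMA-Large, 43% fewer errors
```

Framed this way, Athena removes roughly half of the strongest baseline’s errors in math and over two fifths in science.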

From Framework to Foundation

Athena isn’t the only framework exploring this space. It builds on:

- Toolformer: self-supervised training that teaches a model when and how to call APIs

- PAL: generating Python code as intermediate reasoning, then running it to get the answer

- Gorilla: an LLM fine-tuned on a large dataset of API calls
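PAL’s core move, letting the model write a program and letting an interpreter produce the answer, fits in a few lines. The Python sketch below assumes the LLM has already returned `GENERATED_PROGRAM` (a hypothetical, hand-written example rather than real model output) and shows only the execution half; `run_generated_program` is likewise a hypothetical helper. Note that `exec` with empty builtins is not a security sandbox, so untrusted generated code needs real isolation.

```python
# Hypothetical model output for the question:
# "Pens are sold 3 for $2. How much do 27 pens cost?"
GENERATED_PROGRAM = """
pens = 27
group_size = 3
price_per_group = 2
answer = pens // group_size * price_per_group
"""


def run_generated_program(program: str) -> object:
    """Execute model-written code and return the value it binds to `answer`."""
    namespace: dict = {"__builtins__": {}}  # no builtins: limits, but does not sandbox
    exec(program, namespace)
    return namespace["answer"]


print(run_generated_program(GENERATED_PROGRAM))  # → 18
```

The model only has to get the program’s logic right; the arithmetic itself is delegated to the interpreter, which is the same division of labor Athena applies with external APIs.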

But Athena stands out in two ways:

- It’s built for plug-and-play extensibility.

- It demonstrates clear quantitative gains on benchmark math and science questions.

The Takeaway for Business AI

If you’re building a finance bot, education assistant, scheduling agent, or custom data interface — don’t just fine-tune a model. Connect it to tools.

LLMs don’t need to know everything. They need to know when to ask.

Athena makes a strong case that the future of LLMs isn’t just about scaling models. It’s about scaling ecosystems.

Cognaptus: Automate the Present, Incubate the Future