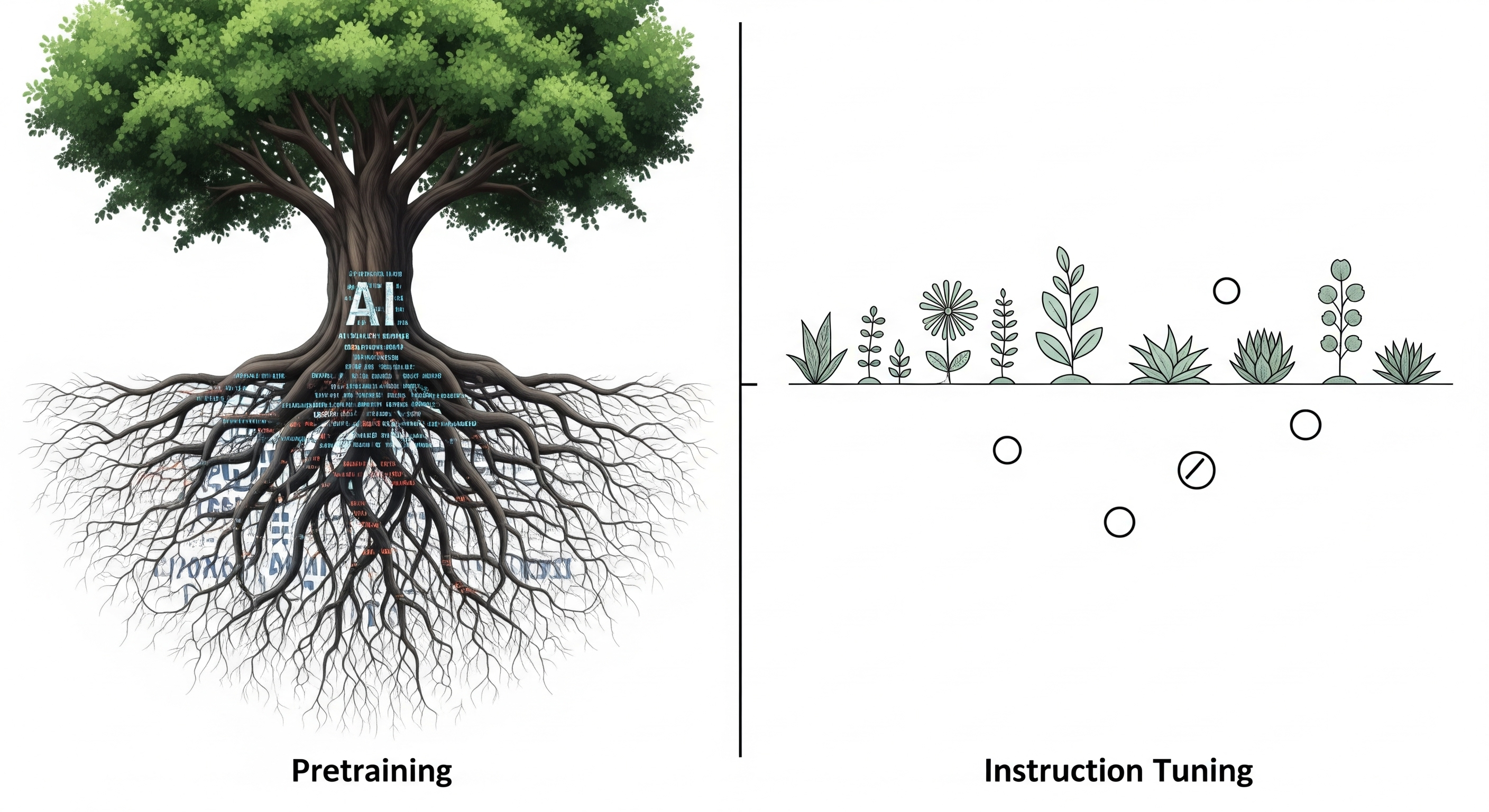

What makes a large language model (LLM) biased? Is it the instruction tuning data, the randomness of training, or something more deeply embedded? A new paper from Itzhak, Belinkov, and Stanovsky, presented at COLM 2025, delivers a clear verdict: pretraining is the primary source of cognitive biases in LLMs. The implications of this are far-reaching — and perhaps more uncomfortable than many developers would like to admit.

The Setup: Two Steps, One Core Question

The authors dissected the origins of 32 cognitive biases in LLMs using a controlled two-step causal framework:

- Training Randomness Test: They fine-tuned the same pretrained models multiple times with different random seeds to assess how much stochasticity influences bias emergence.

- Cross-Tuning Test: They swapped instruction datasets between different base models (e.g., OLMo and T5) to isolate the influence of pretraining versus instruction data on bias patterns.

This dual approach allowed them to untangle latent bias (what the model already contains from pretraining) from observed bias (what it shows after instruction tuning).
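To make the design concrete, here is a minimal Python sketch of the experimental grid. The backbones (OLMo, T5) and instruction datasets (Tulu, Flan) come from the paper. Everything else is a hypothetical illustration and not the authors' code: the seed list, the `Run` class, the `build_grid` helper, and the assumed "native" pairing of each backbone with its usual instruction data.

```python
from dataclasses import dataclass
from itertools import product

# Backbones and instruction datasets named in the paper; the seed list is a
# hypothetical choice for illustration.
BACKBONES = ["OLMo", "T5"]
INSTRUCTION_SETS = ["Tulu", "Flan"]
SEEDS = [0, 1, 2]

# Assumption: each backbone's "native" instruction data (OLMo with Tulu, T5 with Flan).
NATIVE_DATA = {"OLMo": "Tulu", "T5": "Flan"}


@dataclass(frozen=True)
class Run:
    backbone: str         # pretrained model the run starts from
    instruction_set: str  # dataset used for instruction tuning
    seed: int             # fine-tuning random seed

    @property
    def cross_tuned(self) -> bool:
        # True when the backbone is tuned on the other model's instruction data.
        return NATIVE_DATA[self.backbone] != self.instruction_set


def build_grid() -> list[Run]:
    """Every backbone x instruction-set x seed combination."""
    return [Run(b, d, s) for b, d, s in product(BACKBONES, INSTRUCTION_SETS, SEEDS)]


runs = build_grid()

# Step 1 (training randomness): runs that differ only in their seed.
olmo_tulu_seeds = [r for r in runs if (r.backbone, r.instruction_set) == ("OLMo", "Tulu")]

# Step 2 (cross-tuning): runs whose instruction data was swapped.
cross_runs = [r for r in runs if r.cross_tuned]

# Two competing ways to label the same runs when clustering their bias vectors.
pretraining_labels = [r.backbone for r in runs]
instruction_labels = [r.instruction_set for r in runs]

print(len(runs), len(olmo_tulu_seeds), len(cross_runs))  # 12 3 6
```

Each run would then be evaluated on the bias benchmark to yield one bias vector. The two label lists are the competing groupings that a clustering analysis can compare.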

Key Insight: Pretraining Isn’t Just a Starting Point — It’s a Behavioral Blueprint

Across all tests, the clearest pattern emerged from clustering models by their bias vectors. Each vector is a 32-dimensional representation, with one score per bias, of how a model behaves across the bias-inducing scenarios. Models grouped far more reliably by their pretraining backbone (e.g., OLMo vs. T5) than by their instruction dataset (e.g., Tulu vs. Flan).

In other words, for these cognitive biases, how a model was pretrained matters more than what you later teach it.

| Clustering Criterion | Silhouette Score (Bias-Level, Higher = Better) | Davies-Bouldin Index (Lower = Better) |
|---|---|---|
| Pretraining | 0.104 | 2.036 |
| Instruction Tuning | 0.028 | 2.648 |
| K-Means (Baseline) | 0.104 | 1.850 |

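Grouping by pretraining backbone beats grouping by instruction data on both metrics (a higher silhouette score and a lower Davies-Bouldin index), and its silhouette score matches that of the unsupervised K-Means baseline. Pretraining therefore has stronger explanatory power for bias trends than instruction data.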
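The two metrics in the table can be sketched in plain Python. The snippet implements the standard silhouette coefficient and Davies-Bouldin index from scratch and applies them to synthetic 32-dimensional bias vectors. It assumes, hypothetically, that the backbone contributes a much larger effect than the instruction data. The data, effect sizes, and helper names are illustrative assumptions, so the absolute scores will not match the paper's table. The K-Means baseline is omitted for brevity.

```python
import math
import random


def silhouette(points, labels):
    """Mean silhouette coefficient over all points (higher = better separated)."""
    groups = sorted(set(labels))
    scores = []
    for i, p in enumerate(points):
        # a: mean distance to the other members of p's own group.
        own = [q for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)  # singleton groups score 0 by convention
            continue
        a = sum(math.dist(p, q) for q in own) / len(own)
        # b: mean distance to the members of the nearest other group.
        b = min(
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == g) / labels.count(g)
            for g in groups
            if g != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


def davies_bouldin(points, labels):
    """Davies-Bouldin index (lower = better separated)."""
    groups = sorted(set(labels))
    members = {g: [p for p, l in zip(points, labels) if l == g] for g in groups}
    centroids = {g: [sum(col) / len(ms) for col in zip(*ms)] for g, ms in members.items()}
    # Spread: mean distance of a group's members to its centroid.
    spread = {g: sum(math.dist(p, centroids[g]) for p in ms) / len(ms) for g, ms in members.items()}
    # For each group, the worst (largest) spread-to-separation ratio against any other group.
    worst_ratios = [
        max((spread[g] + spread[h]) / math.dist(centroids[g], centroids[h]) for h in groups if h != g)
        for g in groups
    ]
    return sum(worst_ratios) / len(groups)


# Synthetic bias vectors (hypothetical numbers, not the paper's data):
# backbone effect (sd 1.0) >> instruction-data effect (sd 0.3) > seed noise (sd 0.2).
random.seed(0)
DIM = 32
backbone_effect = {b: [random.gauss(0, 1.0) for _ in range(DIM)] for b in ("OLMo", "T5")}
data_effect = {d: [random.gauss(0, 0.3) for _ in range(DIM)] for d in ("Tulu", "Flan")}

points, by_backbone, by_data = [], [], []
for b, base in backbone_effect.items():
    for d, shift in data_effect.items():
        for _ in range(3):  # three fine-tuning seeds per pairing
            noise = [random.gauss(0, 0.2) for _ in range(DIM)]
            points.append([x + y + z for x, y, z in zip(base, shift, noise)])
            by_backbone.append(b)
            by_data.append(d)

for name, labels in (("pretraining", by_backbone), ("instruction data", by_data)):
    print(f"{name}: silhouette={silhouette(points, labels):.3f}, "
          f"davies_bouldin={davies_bouldin(points, labels):.3f}")
```

With these synthetic settings, grouping by backbone should give a higher silhouette and a lower Davies-Bouldin index than grouping by instruction data, the same ordering the table reports. For real analyses, scikit-learn's `sklearn.metrics.silhouette_score` and `sklearn.metrics.davies_bouldin_score` compute the same quantities.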

Instruction Tuning: Surface Effects, Not Deep Shifts

Instruction tuning can amplify biases — especially when the data includes human-generated reasoning prone to cognitive shortcuts — but it does not override the blueprint set by pretraining. Even after cross-tuning (e.g., using Flan data to fine-tune OLMo), the model retained its original bias tendencies. This suggests that instruction tuning acts more like a stylistic gloss than a cognitive rewrite.

This challenges the widespread assumption that a model can be debiased after pretraining through careful instruction tuning alone. If a bias is planted deep in the pretraining soil, surface pruning is unlikely to change its roots.
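One way to quantify "retained its original bias tendencies" is to ask whether a cross-tuned model's bias vector sits closer to its own backbone's native model or to the model natively trained on the same instruction data. The Python sketch below compares the two with cosine similarity on synthetic vectors. The generative assumption (bias = backbone effect plus a smaller data effect), the numbers, and the helper names are hypothetical. Because the synthetic model bakes in the paper's conclusion, it demonstrates the comparison rather than confirming the finding.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity between two bias vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


# Hypothetical generative assumption: observed bias = latent backbone profile
# plus a smaller instruction-data effect (all numbers synthetic).
random.seed(2)
DIM = 32
backbone_effect = {b: [random.gauss(0, 1.0) for _ in range(DIM)] for b in ("OLMo", "T5")}
data_effect = {d: [random.gauss(0, 0.3) for _ in range(DIM)] for d in ("Tulu", "Flan")}


def bias_vector(backbone, data):
    # Combine the backbone's latent profile with the instruction-data shift.
    return [x + y for x, y in zip(backbone_effect[backbone], data_effect[data])]


cross_tuned = bias_vector("OLMo", "Flan")    # OLMo tuned on Flan
same_backbone = bias_vector("OLMo", "Tulu")  # shares the pretraining backbone
same_data = bias_vector("T5", "Flan")        # shares the instruction data

sim_backbone = cosine(cross_tuned, same_backbone)
sim_data = cosine(cross_tuned, same_data)
print(f"vs. same backbone: {sim_backbone:.2f}, vs. same data: {sim_data:.2f}")
```

On real benchmark scores, a cross-tuned model whose similarity to its own backbone exceeds its similarity to the same-data model would be consistent with the paper's claim.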

Training Randomness: A Small but Non-Trivial Factor

The paper also examines an underexplored variable: training stochasticity. When the authors varied the random seed during fine-tuning, bias magnitudes fluctuated mildly, but bias direction stayed the same. This suggests that while randomness contributes to observed differences, it does not reshape the underlying bias profile.

Crucially, aggregating results across multiple seeds recovers the bias signal inherited from the pretrained model, lending credence to the idea that fine-tuning is a noisy lens over a more stable pretraining core.
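The seed experiment can be mimicked with a small simulation. This Python sketch assumes a hypothetical latent bias profile and models each fine-tuning seed as a random rescaling of every score. It then measures direction agreement across seeds, the typical magnitude spread, and how much closer the seed-averaged vector is to the latent profile than a single run. The noise model and all numbers are assumptions, not the paper's procedure or data.

```python
import random
import statistics


def mean_abs_error(vec, ref):
    """Mean absolute difference between a bias vector and a reference profile."""
    return sum(abs(x - y) for x, y in zip(vec, ref)) / len(ref)


random.seed(1)
N_BIASES, N_SEEDS = 32, 5

# Hypothetical latent bias profile from pretraining: one signed score per bias.
latent = [random.choice((-1.0, 1.0)) * random.uniform(0.2, 1.0) for _ in range(N_BIASES)]

# Assumption: each fine-tuning seed rescales every score by a factor in [0.7, 1.3].
# This changes magnitude but never direction, mirroring the reported finding by
# construction rather than testing it.
seed_runs = [[s * random.uniform(0.7, 1.3) for s in latent] for _ in range(N_SEEDS)]

# Per-bias scores across seeds (one inner list per bias, one entry per seed).
per_bias = [[run[i] for run in seed_runs] for i in range(N_BIASES)]

# Fraction of biases whose sign agrees across every seed.
same_direction = sum(len({score > 0 for score in scores}) == 1 for scores in per_bias) / N_BIASES

# Typical seed-to-seed fluctuation in magnitude.
magnitude_spread = statistics.mean(statistics.stdev(scores) for scores in per_bias)

# Aggregate across seeds by averaging each bias score, then compare with the latent profile.
aggregated = [statistics.mean(scores) for scores in per_bias]
single_run_error = statistics.mean(mean_abs_error(run, latent) for run in seed_runs)
aggregated_error = mean_abs_error(aggregated, latent)

print(f"direction agreement: {same_direction:.0%}")
print(f"mean per-bias std across seeds: {magnitude_spread:.3f}")
print(f"error vs. latent: single run {single_run_error:.3f}, aggregated {aggregated_error:.3f}")
```

Averaging cannot make things worse here: for each bias, the absolute error of the mean is at most the mean of the absolute errors (the triangle inequality), which is one way to see why aggregating over seeds gives a cleaner read of the underlying profile.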

Implications: Debiasing Must Begin at the Start

This study shifts the responsibility for bias mitigation upstream:

- Pretraining data curation is non-negotiable. Curating, filtering, and diversifying the pretraining corpus may be the most effective lever for controlling downstream behavior.

- Fine-tuning is not a cure-all. Alignment teams relying solely on RLHF or instruction tuning to remove biases may be working against a much deeper grain.

- Benchmarking must account for pretraining provenance. Comparing bias levels across instruction-tuned models without considering their pretraining origin risks drawing misleading conclusions.

For developers, this means that improving LLM fairness and safety requires better foundations — not just better finishes.

Toward Transparent Pretraining: A Call to Action

The authors argue for more transparency in pretraining corpora and more research into how different data properties — linguistic framing, tokenization strategies, domain diversity — influence cognitive behavior in models. Without such clarity, downstream fixes will always be reactive and partial.

In a landscape where LLMs increasingly mediate decisions, advice, and even social norms, understanding where biases begin is not an academic exercise — it’s a design imperative.

Cognaptus: Automate the Present, Incubate the Future.