“Every technical revolution rewires the old system—but does it fry the whole board or just swap out the chips?”

The enterprise tech stack is bracing for another seismic shift. At the heart of it lies a crucial question: Can today’s emerging AI models—agentic, modular, stream-driven—peacefully integrate with yesterday’s deterministic data flows, or will they inevitably upend them?

The Legacy Backbone: Rigid Yet Reliable

Traditional enterprise data architecture is built on linear pipelines: extract, transform, load (ETL) jobs; batch processing; and pre-defined triggers. These pipelines are optimized for reliability, auditability, and control. Every data flow is modeled like a supply chain: predictable, slow-moving, and deeply interconnected with compliance and governance layers.

Yet this same rigidity often stifles innovation. Adding a new model or integrating third-party logic can feel like trying to install Bluetooth into a rotary phone.

Enter Compound AI: Models that Think—and Act

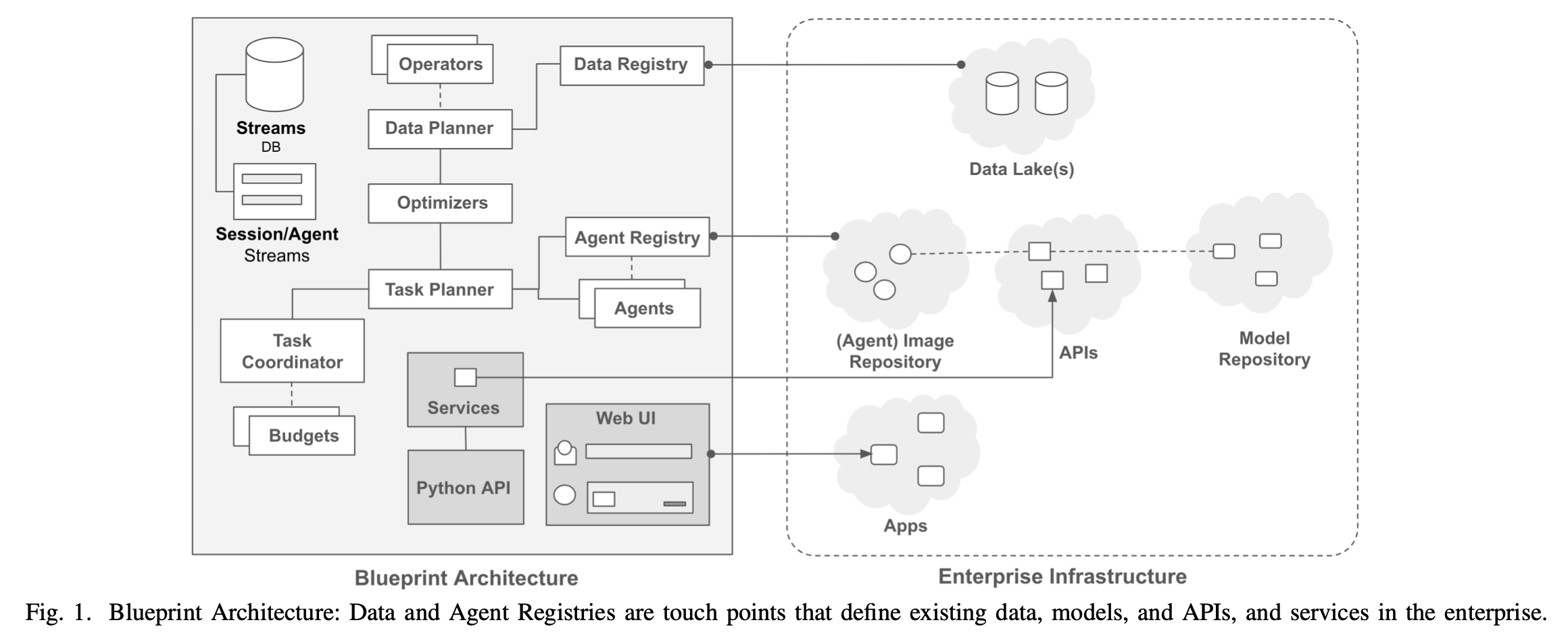

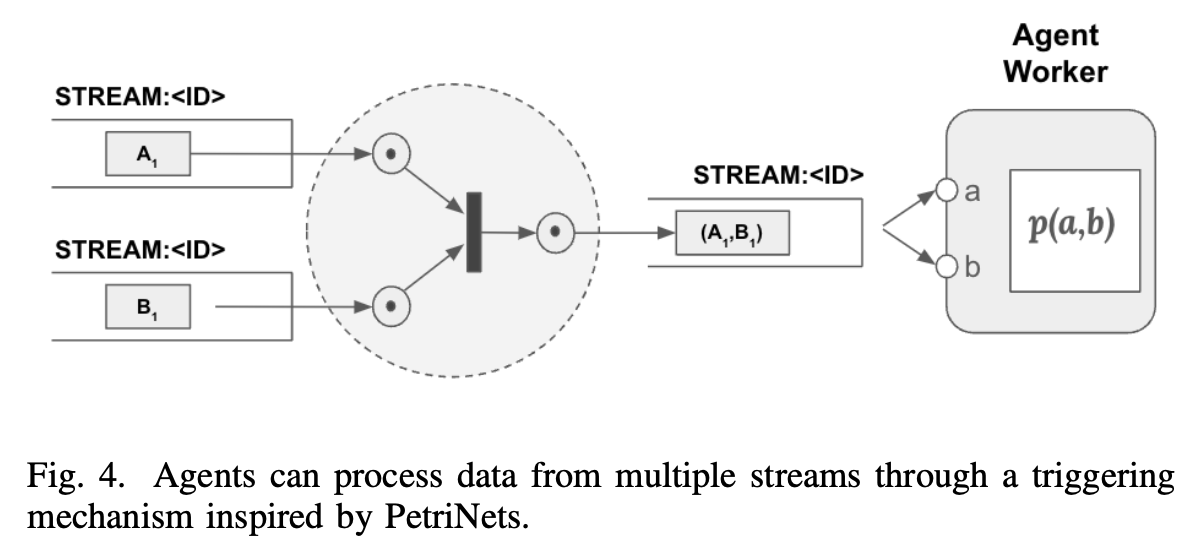

Modern AI systems are no longer just model endpoints. They are composed of agents, planners, and tool-callers, orchestrated through event-driven architectures. The blueprint proposed by Kandogan et al. in their Compound AI paper [1] introduces a new paradigm:

- Streams replace batch jobs

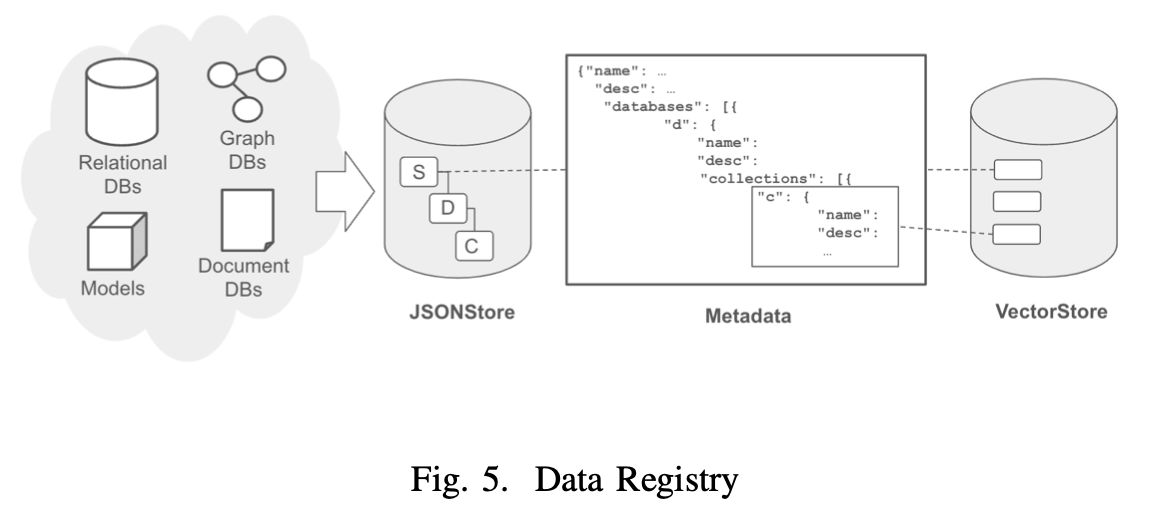

- Registries replace hard-coded pipelines

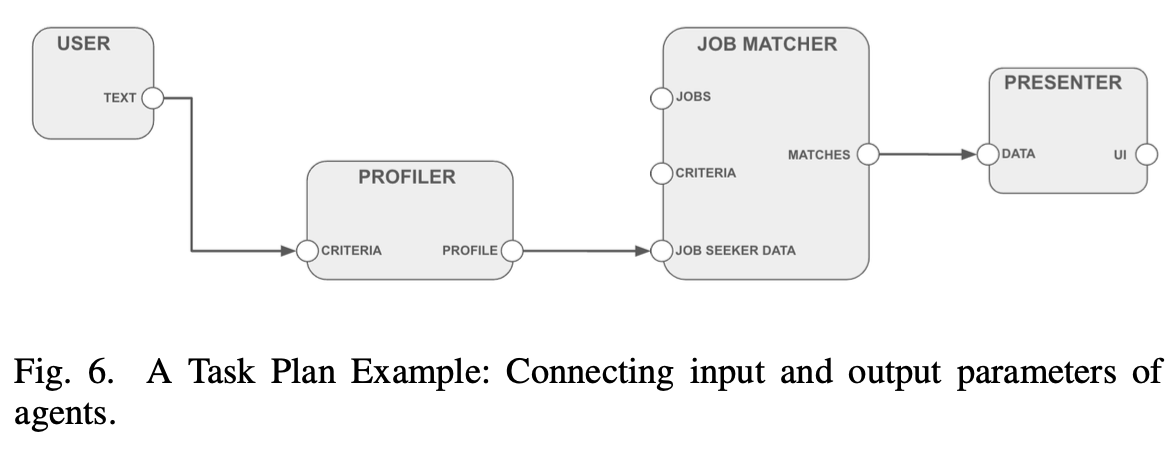

- Task planners replace static workflows

- Multi-modal data and APIs replace single-schema dependencies

This foundational shift is represented clearly in their architectural overview.

Each component is designed to interoperate with legacy infrastructure while introducing a modular, dynamic framework for agentic AI.

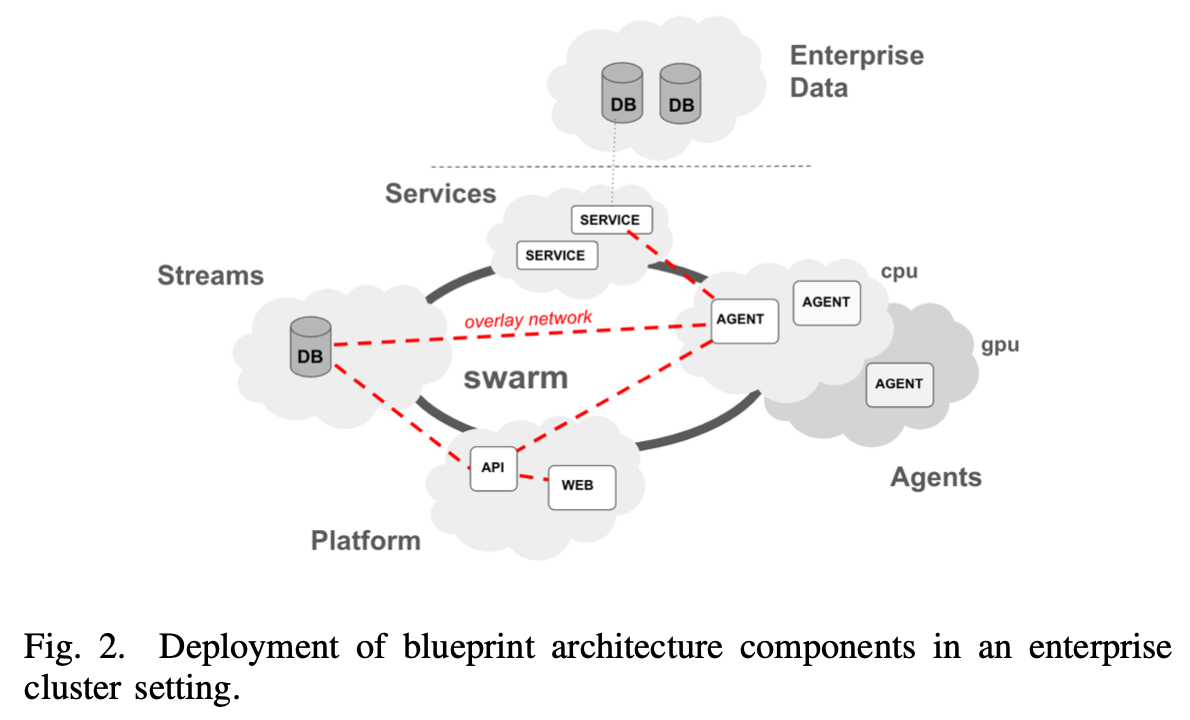

The deployment model embraces scalability and fault tolerance through containerized services orchestrated across enterprise clusters.

Key Components in the New Stack

Understanding the new orchestration model requires familiarity with the core building blocks.

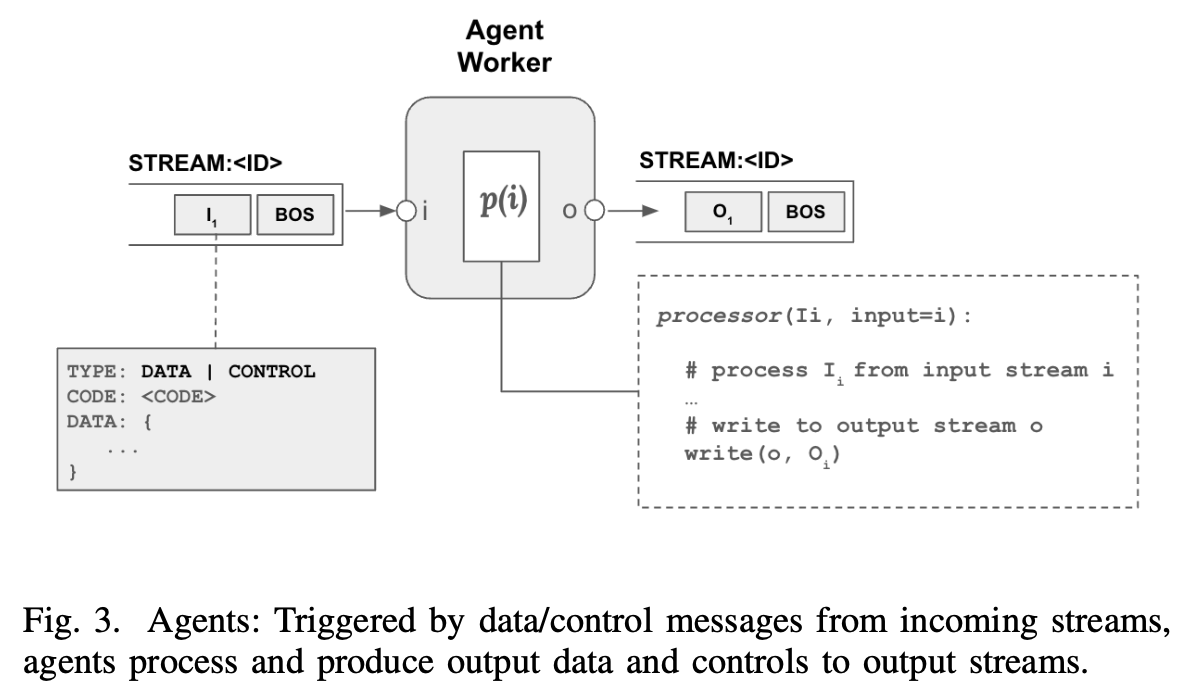

Agents are not just AI models. They can be traditional APIs, CRF models, or UI components. Agents process data and interact through shared streams.
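
To make the idea of agents interacting through shared streams concrete, here is a minimal in-memory sketch. It is not the blueprint's actual API: `StreamBus`, `make_agent`, the stream names, and the scoring rule are all hypothetical, chosen only to show that a legacy-style rule and a model stand-in can be wired up the same way.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

Message = dict
Handler = Callable[[Message], None]


class StreamBus:
    """In-memory stand-in for a stream layer (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self.log: List[Tuple[str, Message]] = []  # (stream, message) in publish order

    def subscribe(self, stream: str, handler: Handler) -> None:
        self._subscribers[stream].append(handler)

    def publish(self, stream: str, message: Message) -> None:
        self.log.append((stream, message))
        for handler in list(self._subscribers[stream]):
            handler(message)


def make_agent(bus: StreamBus, in_stream: str, out_stream: str,
               fn: Callable[[Message], Message]) -> None:
    """Wrap any callable (model, API client, rule) as an agent on the bus."""
    def handler(message: Message) -> None:
        bus.publish(out_stream, fn(message))
    bus.subscribe(in_stream, handler)


def score_application(msg: Message) -> Message:
    # Assumed toy rule standing in for a legacy scoring model.
    return {**msg, "score": 0.9 if msg["income"] > 50_000 else 0.4}


def decide(msg: Message) -> Message:
    # Assumed threshold standing in for a downstream decision agent.
    return {**msg, "approved": msg["score"] >= 0.5}


bus = StreamBus()
make_agent(bus, "applications", "scored", score_application)
make_agent(bus, "scored", "decisions", decide)

results: List[Message] = []
bus.subscribe("decisions", results.append)
bus.publish("applications", {"id": 1, "income": 72_000})
print(results)
```

Neither agent knows about the other; each only knows which stream it reads and which it writes. That decoupling is what lets an existing API sit next to a newer model without either being rewritten.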

Registries act as the map: the agent registry documents what each agent does and how to trigger it; the data registry catalogs data across structured and unstructured formats.
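
As a rough illustration of the two registries, the sketch below stores descriptive metadata and supports keyword lookup. The class names, fields, and sample entries (`AgentSpec`, `DataSpec`, `warehouse.hr.job_posts`, and so on) are assumptions made for this example, not structures taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AgentSpec:
    name: str
    description: str   # what the agent does
    listens_to: str    # stream that triggers it
    emits: str         # stream it writes to


@dataclass(frozen=True)
class DataSpec:
    name: str
    description: str
    kind: str          # e.g. "table" or "document"
    location: str      # hypothetical locator string


class Registry:
    """Name-keyed catalog with a naive keyword search over descriptions."""

    def __init__(self) -> None:
        self._entries: Dict[str, object] = {}

    def register(self, spec) -> None:
        if spec.name in self._entries:
            raise ValueError(f"duplicate entry: {spec.name}")
        self._entries[spec.name] = spec

    def get(self, name: str):
        return self._entries[name]

    def search(self, keyword: str) -> List[object]:
        needle = keyword.lower()
        return [s for s in self._entries.values()
                if needle in s.description.lower()]


agents = Registry()
agents.register(AgentSpec("resume_summarizer", "Summarizes a candidate resume",
                          "resumes", "summaries"))
agents.register(AgentSpec("candidate_filter", "Filters candidates by skill",
                          "filter_requests", "shortlists"))

data = Registry()
data.register(DataSpec("job_posts", "Open job postings", "table",
                       "warehouse.hr.job_posts"))
data.register(DataSpec("resumes", "Uploaded resume files", "document",
                       "blobstore/resumes/"))

matches = [spec.name for spec in agents.search("candidate")]
print(matches)
print(data.get("resumes").kind)
```

A production registry would likely use richer search than substring matching, but the contract is the same: a planner asks what exists and how to trigger it instead of relying on hard-coded wiring.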

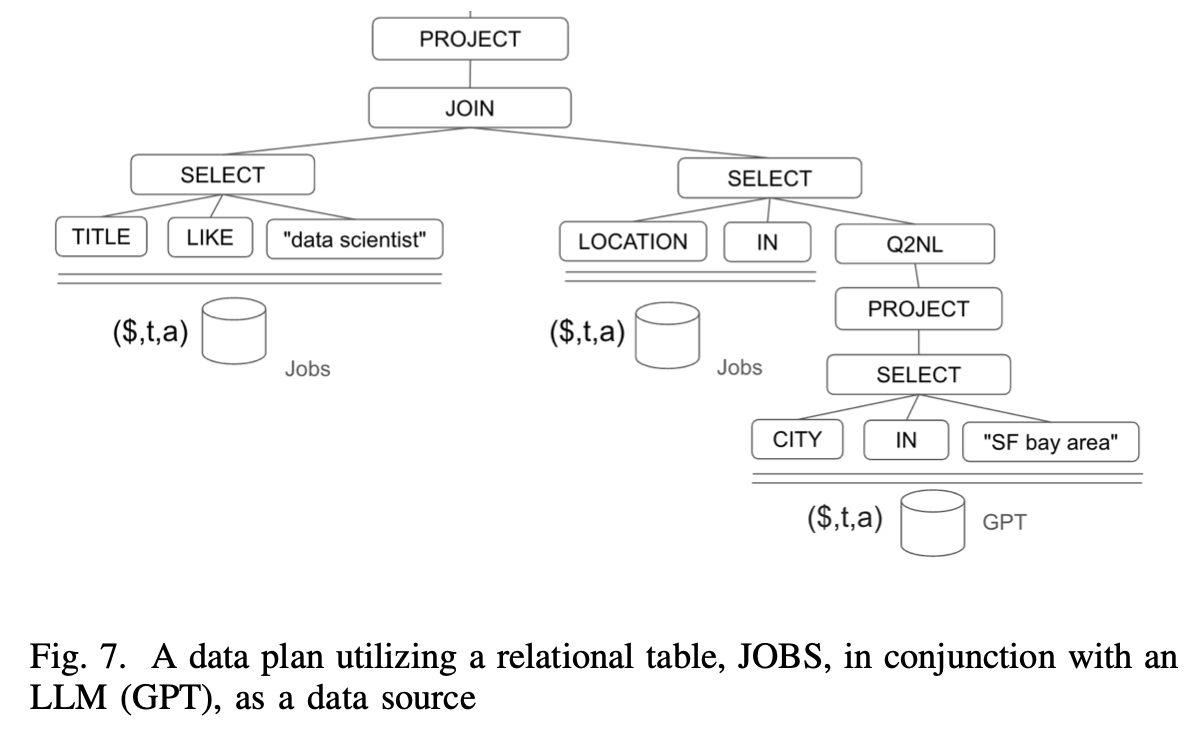

Planners build dynamic workflows. A task planner converts user intent into a DAG of agent invocations. A data planner maps these to appropriate queries or transformations.
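
The planning step can be sketched as follows, assuming the planner's output is a dependency graph over named agent steps. A real task planner would derive that graph from user intent, typically with a language model; here a hard-coded template (`PLAN_TEMPLATES`) stands in for it, and every step name, the toy candidate data, and the stub agents are hypothetical. Ordering uses the standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List

# Hypothetical plan templates: step -> set of steps it depends on.
PLAN_TEMPLATES = {
    "shortlist_candidates": {
        "parse_request": set(),
        "query_candidates": {"parse_request"},
        "summarize_resumes": {"query_candidates"},
        "rank_candidates": {"query_candidates", "summarize_resumes"},
    },
}

CANDIDATES = [
    {"name": "Ana", "skills": ["python", "sql"], "years": 4},
    {"name": "Bo", "skills": ["java"], "years": 9},
    {"name": "Cy", "skills": ["python"], "years": 7},
]


def plan_task(intent: str) -> List[str]:
    """Return agent steps in an order that respects the DAG's dependencies."""
    if intent not in PLAN_TEMPLATES:
        raise ValueError(f"no plan for intent: {intent}")
    return list(TopologicalSorter(PLAN_TEMPLATES[intent]).static_order())


# Stub agents: each reads the shared context and returns the keys it adds.
AGENTS: Dict[str, Callable[[dict], dict]] = {
    "parse_request": lambda ctx: {"skill": ctx["utterance"].split()[-1]},
    "query_candidates": lambda ctx: {
        "candidates": [c for c in CANDIDATES if ctx["skill"] in c["skills"]]
    },
    "summarize_resumes": lambda ctx: {
        "summaries": {c["name"]: f"{c['years']} years" for c in ctx["candidates"]}
    },
    "rank_candidates": lambda ctx: {
        "ranked": [c["name"] for c in
                   sorted(ctx["candidates"], key=lambda c: c["years"], reverse=True)]
    },
}


def run_plan(order: List[str], context: dict) -> dict:
    for step in order:
        context = {**context, **AGENTS[step](context)}
    return context


order = plan_task("shortlist_candidates")
result = run_plan(order, {"utterance": "find engineers who know python"})
print(order)
print(result["ranked"])
```

In this sketch the `query_candidates` step plays the role a data planner would fill: it is where an abstract step gets mapped to an actual query against a data source.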

Friction Zones: Where Legacy Meets Intelligence

Layering intent-driven agents onto deterministic pipelines creates tension in three critical areas:

- Orchestration Models: Old systems are process-centric. New AI is intent-centric. Who drives whom?

  - Example: In a logistics company, a fleet routing system is optimized to follow pre-defined schedules. Introducing an LLM-based planner leads to conflict: the AI adapts routes dynamically based on weather, traffic, or even conversation with drivers. The legacy scheduler sees this as deviation, not intelligence.

- Data Granularity: Legacy flows move rows and tables. AI agents want embeddings, summaries, and intents.

  - Example: A bank’s risk model operates on tabular loan application data. When an agent tries to process sentiment from customer emails as “risk hints,” it becomes impossible to store and validate this data through legacy schemas, causing tension between analytic flexibility and compliance traceability.

- Control vs. Emergence: Enterprises value determinism. Agentic AI thrives on adaptive workflows. Which wins?

  - Example: In healthcare, a diagnostic assistant evolves its questioning dynamically based on patient responses. Traditional auditing systems can’t trace how or why the assistant chose a certain diagnostic path, raising flags in clinical governance and insurance.
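
One way to narrow the traceability gap in the control-versus-emergence example is to have every adaptive step write a structured record of what it saw, what it chose, and why. The sketch below is only an assumption about how such a trail might look; it is not drawn from the paper or from any audit standard. The `AuditTrail` class, its field names, and the sample entries are hypothetical. Each entry's hash covers the previous entry's hash, so later edits become detectable.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor


def _digest(body: dict) -> str:
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditTrail:
    """Append-only, hash-chained log of agent decisions (illustrative only)."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def record(self, agent: str, inputs: dict, action: str, rationale: str) -> dict:
        body = {
            "seq": len(self.entries),
            "agent": agent,
            "inputs": inputs,
            "action": action,
            "rationale": rationale,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are unbroken."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
# Hypothetical entries; the content is a placeholder, not clinical guidance.
trail.record("intake_assistant", {"answer": "symptom reported"},
             "ask_follow_up_A", "answer matched follow-up rule A")
trail.record("intake_assistant", {"answer": "duration given"},
             "escalate_to_clinician", "duration exceeded configured threshold")

intact = trail.verify()
print(intact, len(trail.entries))
```

A log like this does not make an adaptive agent deterministic. It gives auditors a replayable account of each branch taken, which is the part legacy governance tooling is missing.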

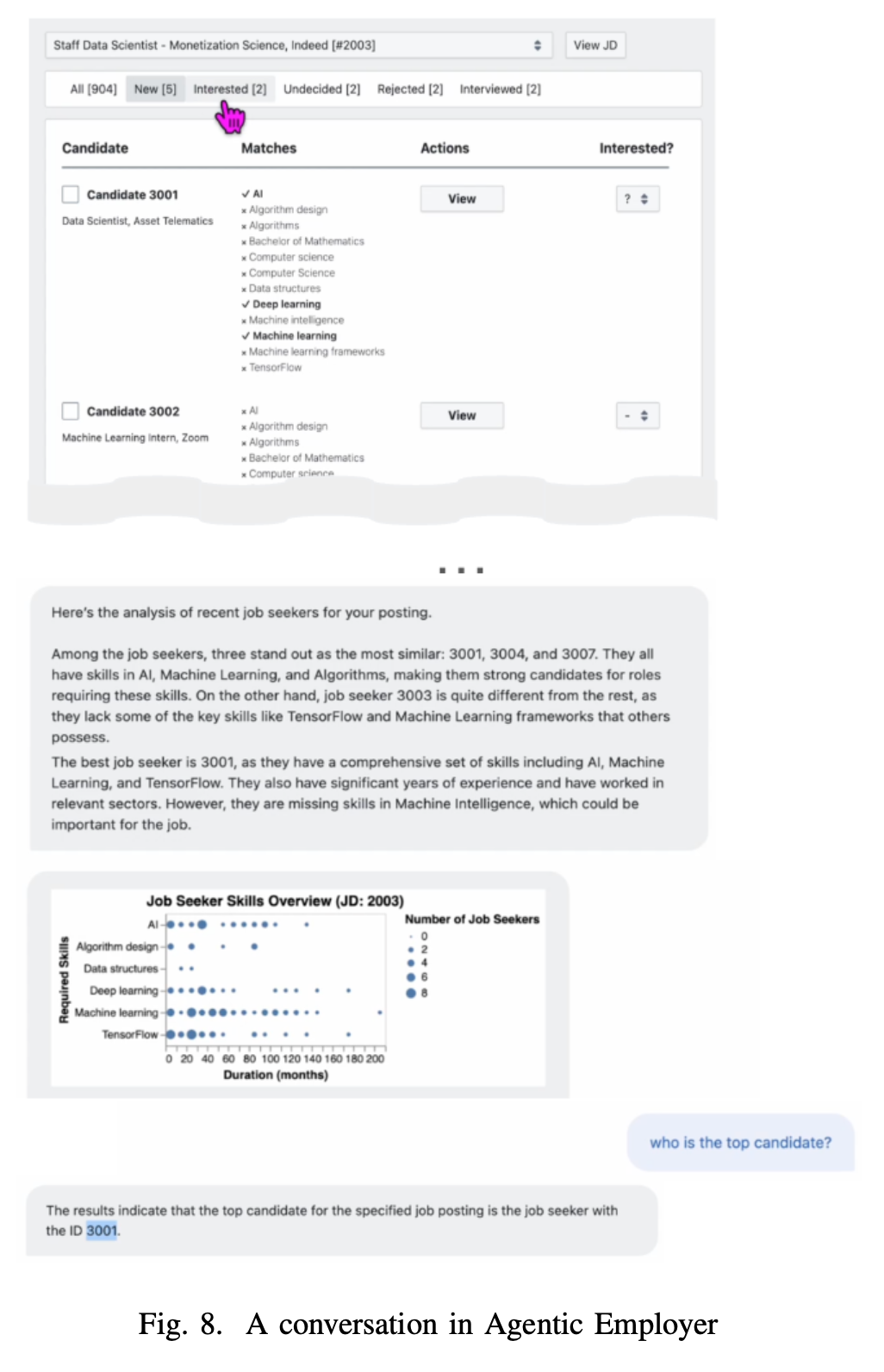

Real-World Use Case: Agentic Employer

One case study, an HR system named Agentic Employer, shows how this architecture works in practice.

The system enables conversational interfaces where recruiters explore candidates, run filters, and view summaries—all orchestrated through triggered agents.

The use of streams and registries allows seamless switching between structured forms and natural-language inputs, powered by dynamic plans.

Toward Coexistence: A Migration Strategy

Rather than replace everything, the blueprint offers a layering strategy:

| Sector | Migration Strategy |
|---|---|
| Finance | Wrap decision models as agents; use registries for structured reporting datasets. |
| Healthcare | Use streams for agent-patient dialogues; agents must meet audit trail standards. |
| Retail | Integrate recommender and inventory agents; attach feedback loops to sales data. |
| Logistics | Deploy planners for real-time routing; fall back to the legacy scheduler as an override. |
| HR / Talent | Register resumes and job posts as multi-modal data; deploy agentic matchmakers. |
| Manufacturing | Wrap sensor APIs as data agents; use planners for anomaly detection flows. |
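
The logistics row describes a pattern that applies well beyond routing: let the planner propose, validate the proposal against hard constraints, and hand control back to the legacy system when validation fails. Here is a minimal sketch under stated assumptions. The function names, the validation rule (every required stop visited exactly once), and the toy planners are all hypothetical.

```python
from typing import Callable, List, Tuple

Route = List[str]


def legacy_schedule(stops: Route) -> Route:
    """Stand-in for the existing scheduler: keep the pre-defined order."""
    return list(stops)


def visits_each_stop_once(route: Route, required: Route) -> bool:
    """Assumed hard constraint: same stops, no omissions, no repeats."""
    return sorted(route) == sorted(required)


def plan_route(
    stops: Route,
    planner: Callable[[Route], Route],
    validate: Callable[[Route, Route], bool] = visits_each_stop_once,
    fallback: Callable[[Route], Route] = legacy_schedule,
) -> Tuple[Route, str]:
    """Return (route, source), where source is 'planner' or 'legacy'."""
    try:
        proposal = planner(stops)
    except Exception:
        # A planner failure must never block dispatch.
        return fallback(stops), "legacy"
    if validate(proposal, stops):
        return proposal, "planner"
    return fallback(stops), "legacy"


stops = ["depot", "A", "B", "C"]


def reordering_planner(s: Route) -> Route:
    return ["depot", "C", "A", "B"]   # valid: same stops, new order


def lossy_planner(s: Route) -> Route:
    return s[:-1]                     # invalid: drops the last stop


def crashing_planner(s: Route) -> Route:
    raise RuntimeError("planner unavailable")


accepted = plan_route(stops, reordering_planner)
rejected = plan_route(stops, lossy_planner)
crashed = plan_route(stops, crashing_planner)
print(accepted, rejected, crashed, sep="\n")
```

Returning the source alongside the route keeps the override observable: operations teams can count how often the legacy path wins and decide when the planner has earned more autonomy.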

Final Thought: Evolution, Not Ejection

At Cognaptus, we believe the future of enterprise intelligence is not about destroying what works—it’s about enhancing it with modular intelligence.

AI agents don’t need to displace your legacy systems. They need to interpret intent, orchestrate value, and coexist with the robustness enterprises have spent decades building.

But this coexistence isn’t automatic. It requires:

- Rewiring trust through observable agent behavior.

- Standardizing registries that expose legacy logic to smart planners.

- Investing in data abstraction layers like streams.

Just as cities layer smart infrastructure on top of cobbled streets, Cognaptus helps firms lay intelligence atop legacy: one stream, one agent, one plan at a time.

This isn’t just an architecture. It’s a philosophy of augmentation: leverage what you have, empower it with what’s next, and build an enterprise AI future that is not only scalable, but sensible.

[1] Kandogan, E., Bhutani, N., Zhang, D., Chen, R. L., Gurajada, S., & Hruschka, E. (2025). Orchestrating Agents and Data for Enterprise: A Blueprint Architecture for Compound AI. arXiv preprint arXiv:2504.08148.