Memory in the Machine: How SHIMI Makes Decentralized AI Smarter

As the race to build more capable and autonomous AI agents accelerates, one question is rising to the surface: how should these agents store, retrieve, and reason with knowledge across a decentralized ecosystem?

In today’s increasingly distributed world, AI ecosystems are often decentralized due to concerns around data privacy, infrastructure independence, and the need to scale across diverse environments without central bottlenecks.

The answer might just lie in a new approach to AI memory—SHIMI, or Semantic Hierarchical Memory Index—a system designed to give AI agents more structured, meaningful, and scalable memory. Imagine an agent not just recalling data points, but navigating a semantic map of concepts and relationships, reasoning from abstract intent to specific details. That’s the promise SHIMI delivers.

Why Traditional Memory Falls Short

In typical memory architectures, agents rely on vector stores that prioritize surface-level similarity. But when faced with complex reasoning tasks, these systems fall short: they lack semantic abstraction, struggle with interpretability, and often return irrelevant results in decentralized environments.

Moreover, as agents become more specialized and operate in collaborative networks, their memory systems must handle asynchronous updates, partial knowledge, and autonomous reasoning—all at scale.

Enter SHIMI: Building Smarter Brains for Decentralized Agents

SHIMI proposes a hierarchical tree of memory nodes, organized by semantic meaning. At the top: broad concepts like “sustainability” or “strategy.” As you move downward, the tree branches into more specific knowledge units like “energy efficiency in buildings” or “cost-benefit analysis models.”

Each agent maintains a local SHIMI tree, which grows and reorganizes based on experience. Crucially, this structure supports top-down reasoning: an agent can start with a general task (e.g., “optimize logistics”) and traverse down to concrete strategies (e.g., “minimize cold chain leakage”).

This enables:

- 🧠 Smarter retrieval: Agents find knowledge based on semantic proximity rather than lexical similarity.

- 🔎 Explainable reasoning paths: Decision-making becomes traceable, which is essential for debugging, auditing, and alignment.

- 🌐 Scalable decentralization: SHIMI allows asynchronous syncing across distributed agents—each evolving its own memory.

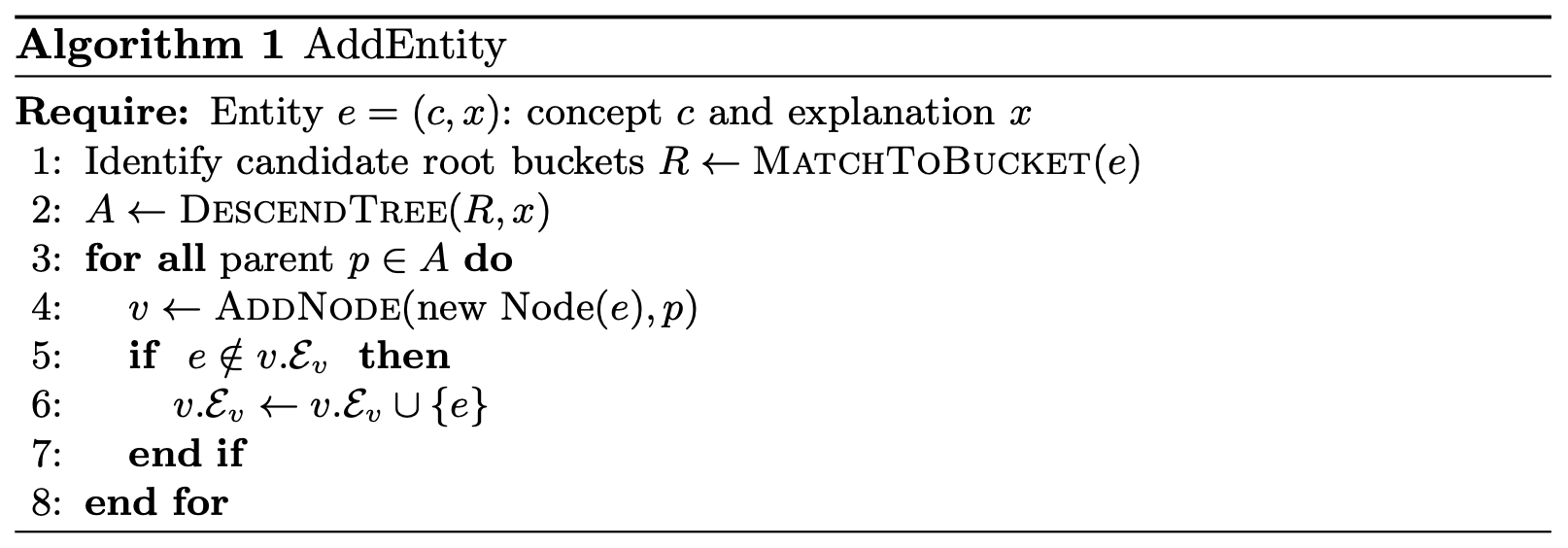

Algorithm 1: Entity Insertion in SHIMI

The core operation in SHIMI is entity insertion. It involves matching an incoming entity to a subtree, descending semantically, and either attaching it to a leaf or generating a new abstraction.

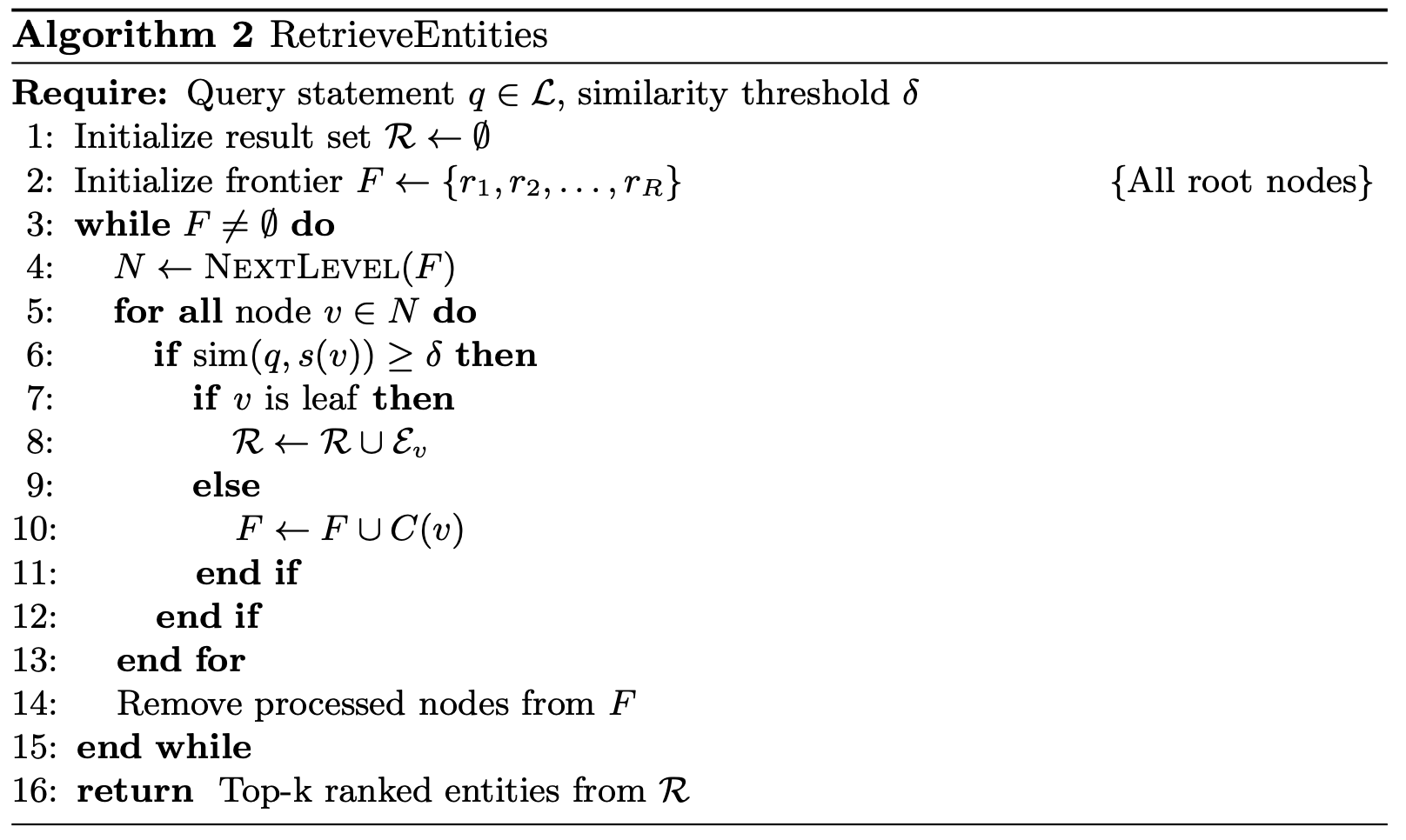

Algorithm 2: Semantic Retrieval

SHIMI’s retrieval process mirrors its insertion logic but operates in reverse: it traverses from root nodes toward leaves, selecting only branches semantically aligned with the query statement. The algorithm ensures efficient pruning by evaluating semantic relevance at each level and continuing traversal only through matching nodes.

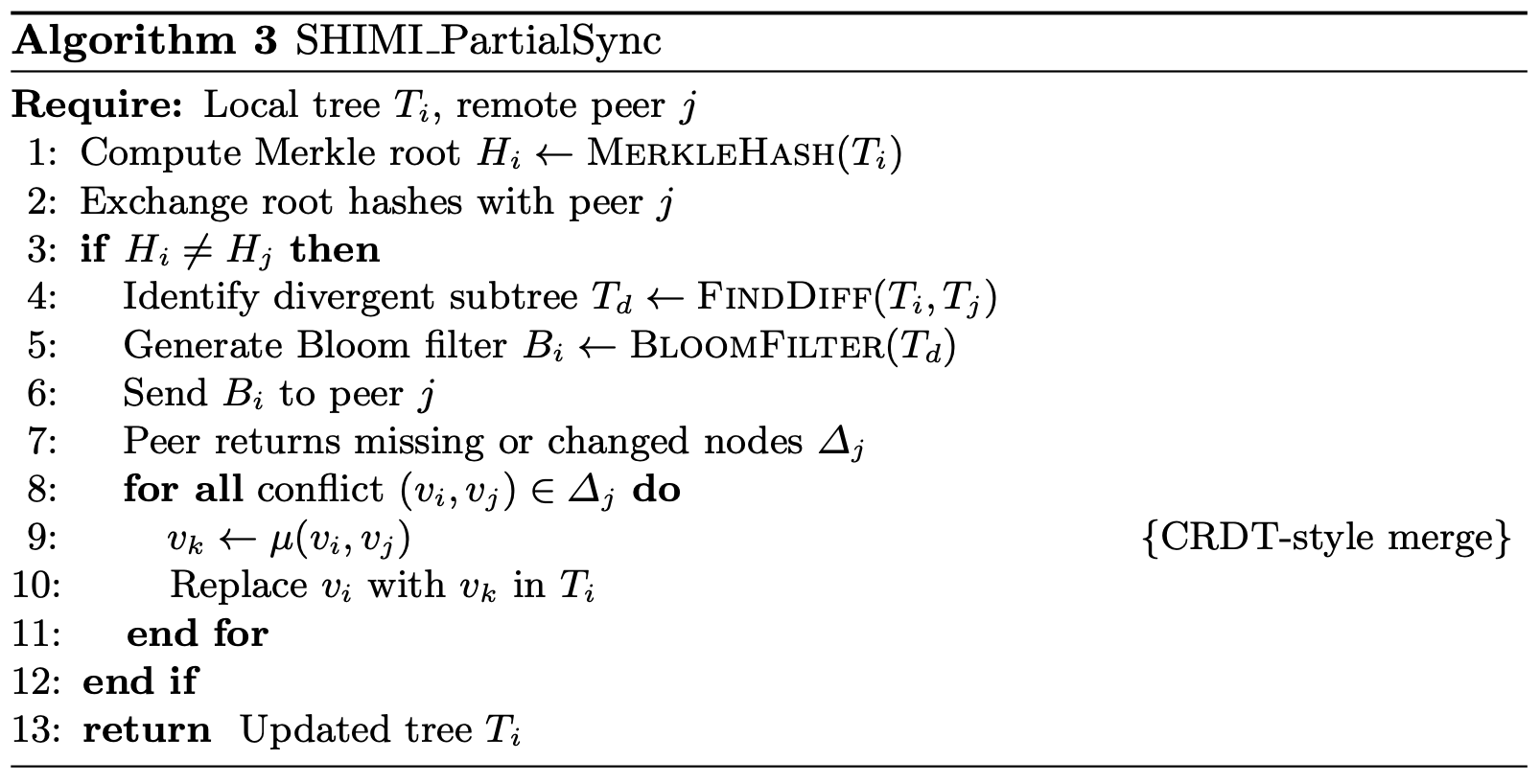

Algorithm 3: Decentralized Tree Synchronization

SHIMI supports decentralized environments where each node maintains an independent semantic tree Ti. Synchronization between trees is performed incrementally using a hybrid protocol that ensures eventual consistency while minimizing bandwidth.

Together, these three algorithms form a complete life cycle of knowledge management in decentralized AI: insertion builds the memory, retrieval enables meaningful use of memory, and synchronization ensures coherence across agents. This trio allows SHIMI to support not just learning and recall, but also collaborative evolution of semantic understanding.

Real-World Potential: Smarter Collaboration at Scale

Imagine swarms of decentralized agents—customer support bots, procurement advisors, or autonomous vehicles—each building their own memory trees but learning from shared experiences. SHIMI offers a framework where these agents don’t just share data—they share semantics, enabling collaboration without central control.

- Use Case for Algorithm 1 (Insertion): In a digital marketing platform, each agent adds new customer behavior signals (e.g., ad engagement, purchase pattern) into a semantic tree organized by intent (e.g., discovery vs. conversion), allowing continuous refinement of strategy.

- Use Case for Algorithm 2 (Retrieval): In customer service automation, agents receive vague queries and must semantically retrieve relevant troubleshooting steps based on layered context (device, issue type, recent interactions).

- Use Case for Algorithm 3 (Synchronization): In supply chain management, local procurement agents sync their SHIMI trees to maintain shared understanding of vendor risk classifications, enabling aligned but autonomous decisions across warehouses.

Challenges and Solutions: SHIMI in the Wild

While SHIMI is promising, deploying it in real-world settings presents several challenges:

- Semantic ambiguity: Real-world data is messy, and semantic meaning can vary by context or culture.

- Computational overhead: Maintaining and syncing semantic trees can become resource-intensive.

- Interoperability: Different agents may use varied ontologies, making alignment difficult.

To address these:

- Adaptive ontologies and context-aware embeddings can help resolve ambiguity by learning domain-specific semantics over time.

- Edge optimization techniques, such as caching and lazy synchronization, reduce computational load.

- Lightweight semantic standards or APIs can facilitate interoperability, even in heterogeneous environments.

These improvements make SHIMI more practical for small firms with limited infrastructure. For example, a small e-commerce startup could deploy lightweight SHIMI agents to personalize customer interaction, while syncing insights with partners using standardized semantic protocols. This levels the playing field—giving smaller players access to intelligent memory architectures without centralized infrastructure.

Final Thoughts: Memory Isn’t Just Storage, It’s Strategy

Cognaptus believes the future of business automation and agentic AI lies in structured intelligence—and SHIMI is a blueprint for that future. It transforms memory from a passive database into a strategic reasoning framework, powering agents that can think, explain, and evolve.

We’ll be watching closely as SHIMI-like architectures emerge in practical deployments. Because in decentralized AI, how you remember determines how well you act.

Reference: Tooraj Helmi, “Decentralizing AI Memory: SHIMI, a Semantic Hierarchical Memory Index for Scalable Agent Reasoning,” arXiv:2504.06135 [cs.AI], submitted on 8 April 2025.