Rules of Engagement: Why LLMs Need Logic to Plan

When it comes to language generation, large language models (LLMs) like GPT-4o are top of the class. But ask them to reason through a complex plan — such as reorganizing a logistics network or optimizing staff scheduling — and their performance becomes unreliable. That’s the central finding from ACPBench Hard (Kokel et al., 2025), a new benchmark from IBM Research that tests unrestrained reasoning about action, change, and planning.

The Problem: Language Models Aren’t Planners

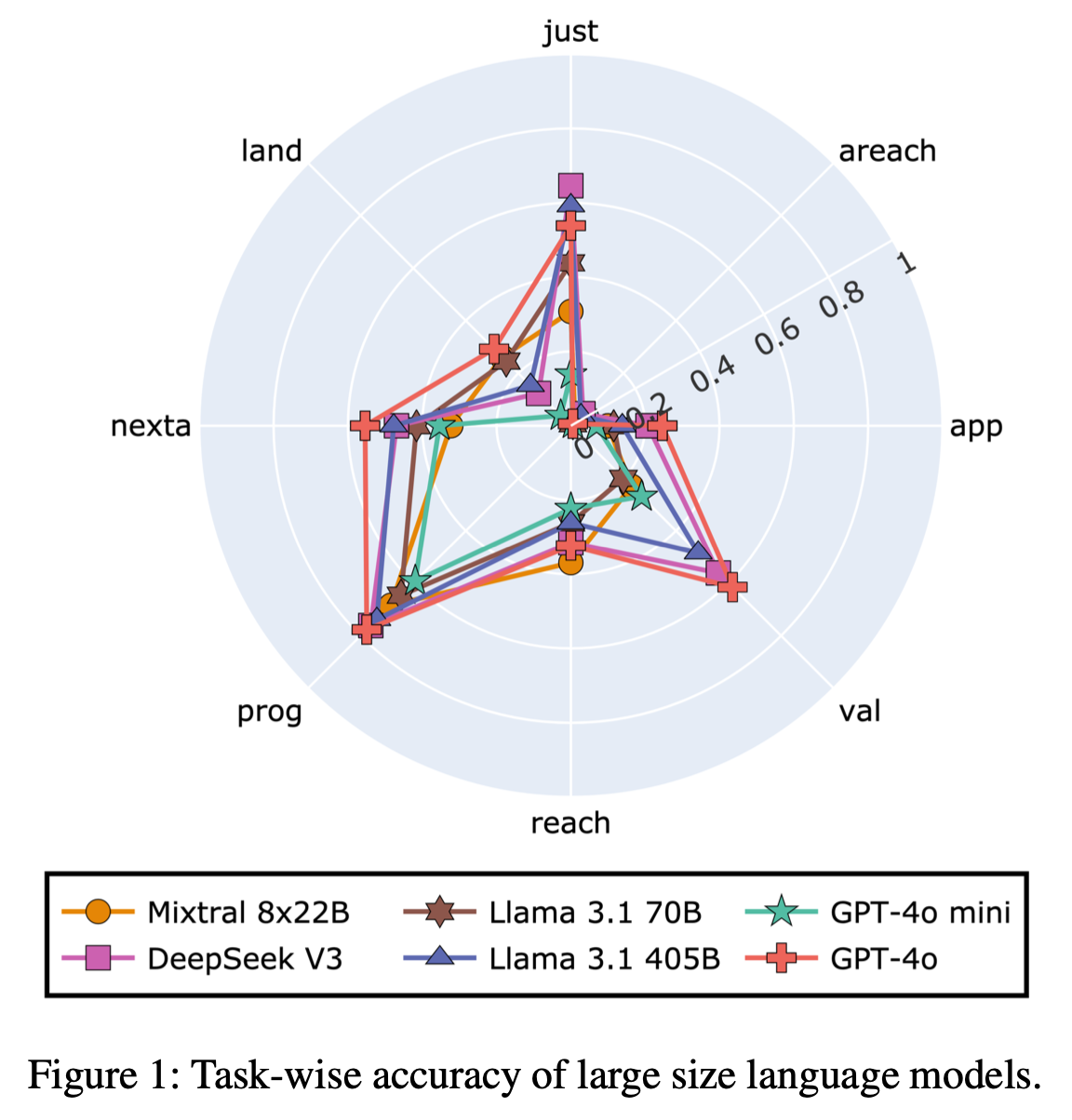

ACPBench Hard evaluates eight core planning capabilities, from action applicability to reachability and plan validation, using open-ended tasks modeled on real-world planning logic. Figure 1 of the paper shows models like GPT-4o and o1-preview underperforming, with scores below 65% on most tasks.

Crucially, even advanced models stumble in core planning logic areas like action reachability (e.g., identifying actions that can never become applicable) and plan justification (simplifying a plan without compromising its goal). This doesn’t mean they’re useless — but it does highlight their limitations in enterprise-critical reasoning workflows.
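To make action reachability concrete, here is a minimal Python sketch of how a symbolic tool might spot actions that can never become applicable. It assumes facts are plain strings and actions follow a STRIPS-like shape (preconditions, add effects, delete effects). The `Action` class, the function names, and the toy logistics facts are all hypothetical illustrations, not code from the ACPBench Hard paper. The check ignores delete effects (a "delete relaxation"), so anything it reports as unreachable truly is, though it may miss some unreachable actions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    # Hypothetical STRIPS-like action; facts are plain strings.
    name: str
    preconditions: frozenset
    add_effects: frozenset
    delete_effects: frozenset = frozenset()  # ignored by the relaxation below


def relaxed_reachable_actions(initial_state, actions):
    """Names of actions that become applicable when delete effects are ignored."""
    facts = set(initial_state)
    reachable = set()
    changed = True
    while changed:  # repeat until no new action fires (a fixpoint)
        changed = False
        for action in actions:
            if action.name not in reachable and action.preconditions <= facts:
                reachable.add(action.name)
                facts |= action.add_effects
                changed = True
    return reachable


def provably_unreachable(initial_state, actions):
    """Actions that can never be applied, even under the optimistic relaxation."""
    reachable = relaxed_reachable_actions(initial_state, actions)
    return [a.name for a in actions if a.name not in reachable]


# Toy domain (assumed for illustration): one truck, one package, no plane.
initial = {"at truck1 city_a", "at pkg city_a"}
actions = [
    Action("drive_a_to_b",
           frozenset({"at truck1 city_a"}),
           frozenset({"at truck1 city_b"}),
           frozenset({"at truck1 city_a"})),
    Action("load_pkg_truck_a",
           frozenset({"at truck1 city_a", "at pkg city_a"}),
           frozenset({"in pkg truck1"}),
           frozenset({"at pkg city_a"})),
    Action("unload_pkg_truck_b",
           frozenset({"in pkg truck1", "at truck1 city_b"}),
           frozenset({"at pkg city_b"}),
           frozenset({"in pkg truck1"})),
    Action("load_pkg_plane_a",
           frozenset({"at plane1 city_a", "at pkg city_a"}),
           frozenset({"in pkg plane1"}),
           frozenset({"at pkg city_a"})),
]

print(provably_unreachable(initial, actions))
```

No action ever produces the fact `at plane1 city_a`, so `load_pkg_plane_a` is the only action flagged. This is the kind of question an LLM has to answer from text alone in the benchmark.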

The Hidden Insight: Generative ≠ Deductive

The key issue isn’t just performance scores — it’s architecture. LLMs are trained to model statistical correlations in vast text datasets, not to reason through symbolic logic or causal constraints. Most frontier models are built upon decoder-only transformer architectures, and while these models exhibit emergent intelligence in certain domains, their reasoning is fundamentally pattern-driven, not rule-grounded.

This leads to several limitations:

- They generate fluent, plausible sequences but may hallucinate or skip critical conditions.

- They lack mechanisms to track state changes or verify the correctness of multi-step procedures.

- Unlike symbolic planners, they don’t build or simulate a world model — they autocomplete it.

To outsiders, this emergent behavior may seem like deep intelligence, but without task-specific structure or logical enforcement, it’s unreliable in planning domains.

Symbolic reasoning engines, in contrast, rely on formal logic, search algorithms, and explicit state tracking. These engines can evaluate whether an action leads closer to a goal or violates constraints, but search can consume significant compute on large problems, and they are harder to integrate at scale.

Techniques like chain-of-thought prompting, external tool use, or step-wise decomposition can improve LLM planning performance. However, ACPBench Hard results show that even with such scaffolding, performance on critical tasks like action reachability and plan justification remains low, especially when models are evaluated on diverse domains.

The Action: Hybrid Is the Way Forward

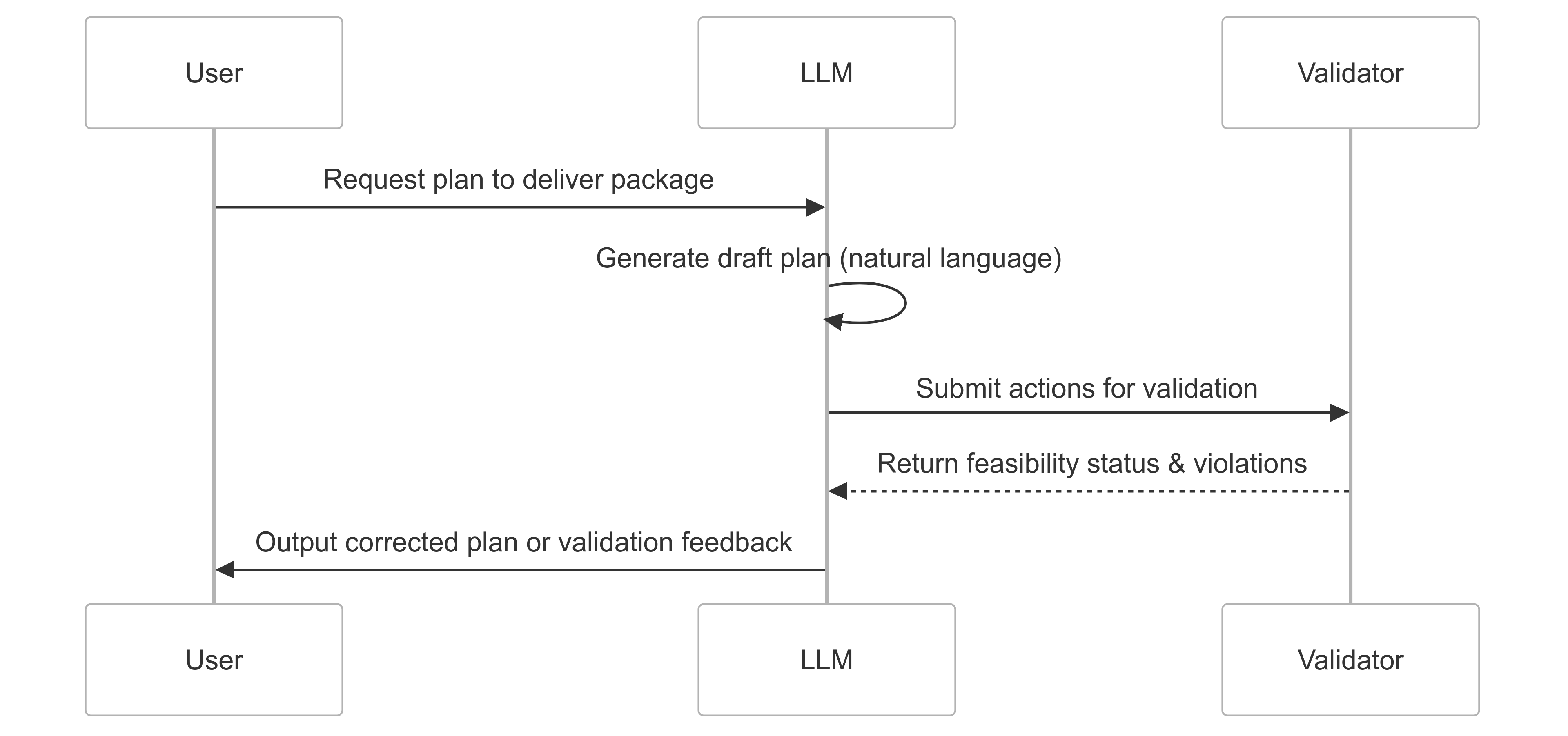

Rather than expecting one model to do everything, businesses should deploy hybrid solutions that leverage each system’s strengths:

- Let LLMs handle natural language generation and creative exploration of workflows.

- Use symbolic validators — lightweight modules that apply logic-based checks — to ensure feasibility and verify correctness.

What Are Symbolic Validators?

Symbolic validators are logic-driven components that:

- Evaluate whether a given action is applicable in a state (based on STRIPS-style preconditions).

- Confirm whether a plan reaches the intended goal.

- Detect redundant or unreachable actions.
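The first two checks in this list can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production validator: facts are plain strings, and the `Action` class, `validate_plan`, and the truck-and-package facts are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    # Hypothetical STRIPS-style action; facts are plain strings.
    name: str
    preconditions: frozenset
    add_effects: frozenset
    delete_effects: frozenset = frozenset()


def is_applicable(state, action):
    """An action is applicable when all of its preconditions hold in the state."""
    return action.preconditions <= state


def apply_action(state, action):
    """Progress the state: remove delete effects, then add add effects."""
    return (state - action.delete_effects) | action.add_effects


def validate_plan(initial_state, plan, goal):
    """Return (ok, message) after simulating the plan step by step."""
    state = frozenset(initial_state)
    for step, action in enumerate(plan, start=1):
        if not is_applicable(state, action):
            missing = sorted(action.preconditions - state)
            return False, f"step {step} ({action.name}): precondition not met: {missing}"
        state = apply_action(state, action)
    unmet = sorted(frozenset(goal) - state)
    if unmet:
        return False, f"plan ends without reaching goal: {unmet}"
    return True, "plan is valid"


# Toy scenario (assumed): the truck starts in city_b, the package in city_a.
load_pkg = Action("load_pkg",
                  frozenset({"at truck1 city_a", "at pkg city_a"}),
                  frozenset({"in pkg truck1"}),
                  frozenset({"at pkg city_a"}))
drive_b_to_a = Action("drive_b_to_a",
                      frozenset({"at truck1 city_b"}),
                      frozenset({"at truck1 city_a"}),
                      frozenset({"at truck1 city_b"}))
initial = {"at truck1 city_b", "at pkg city_a"}
goal = {"in pkg truck1"}

print(validate_plan(initial, [load_pkg], goal))                # rejected at step 1
print(validate_plan(initial, [drive_b_to_a, load_pkg], goal))  # accepted
```

The rejection message names the failed step and the missing fact, which is exactly the kind of feedback that can be passed back to an LLM for a second attempt.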

For example:

- In logistics, if an LLM suggests loading a package on a truck that’s in another city, the symbolic validator can flag this as invalid.

- In HR automation, if a workflow omits mandatory compliance checks, a logic engine can catch that before execution.
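The HR case needs even less machinery. As a rough sketch, a lightweight rule check could look like the following, assuming a workflow is just an ordered list of step names. The function `find_violations`, the step names, and the rules are invented for illustration and do not come from any real compliance framework.

```python
def find_violations(workflow, mandatory, must_precede):
    """Check a workflow (ordered list of step names) against two rule types.

    mandatory:    set of step names that must appear somewhere in the workflow
    must_precede: list of (earlier, later) pairs constraining step order
    """
    violations = []

    # Rule type 1: every mandatory step must be present.
    for step in sorted(mandatory - set(workflow)):
        violations.append(f"missing mandatory step: {step}")

    # Rule type 2: ordering constraints between steps that are both present.
    # If a step repeats, its last occurrence is the one compared.
    position = {step: index for index, step in enumerate(workflow)}
    for earlier, later in must_precede:
        if earlier in position and later in position:
            if position[earlier] > position[later]:
                violations.append(f"{earlier} must come before {later}")

    return violations


# Hypothetical onboarding workflow drafted by an LLM.
workflow = ["create_payroll_record", "collect_documents", "grant_system_access"]
mandatory = {"collect_documents", "verify_work_eligibility"}
must_precede = [("collect_documents", "create_payroll_record")]

for problem in find_violations(workflow, mandatory, must_precede):
    print(problem)
```

Here the check reports both the omitted eligibility verification and the out-of-order payroll step before anything is executed.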

These validators can be built using planning libraries, PDDL interpreters, or lightweight rule engines, depending on the domain.

Here’s how such a hybrid interaction might work:
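One possible shape for this interaction is a propose, validate, and retry loop. The Python sketch below is an assumption-laden illustration: `stub_llm` stands in for a real model call, `toy_validator` hard-codes a single truck-and-package scenario, and `plan_with_validation` is a hypothetical name. None of these refer to an actual library.

```python
def plan_with_validation(task, propose, validate, max_rounds=3):
    """Ask the proposer for a plan, check it, and feed errors back until valid.

    propose(task, feedback) -> plan
    validate(plan)          -> (ok, message)
    Returns (plan, rounds_used), or (None, max_rounds) if no valid plan emerged.
    """
    feedback = None
    for round_no in range(1, max_rounds + 1):
        plan = propose(task, feedback)
        ok, message = validate(plan)
        if ok:
            return plan, round_no
        feedback = message  # the validator's complaint becomes the next prompt hint
    return None, max_rounds


def toy_validator(plan):
    """Symbolic check for one assumed scenario: truck in city_b, package in city_a."""
    truck_city, package_city, loaded = "city_b", "city_a", False
    for step, (verb, arg) in enumerate(plan, start=1):
        if verb == "drive":
            truck_city = arg
        elif verb == "load":
            if truck_city != package_city:
                return False, (f"step {step}: cannot load, truck is in "
                               f"{truck_city} but package is in {package_city}")
            loaded = True
        else:
            return False, f"step {step}: unknown action {verb!r}"
    if not loaded:
        return False, "goal not reached: package was never loaded"
    return True, "ok"


def stub_llm(task, feedback):
    """Stand-in for an LLM call: the first draft is wrong, the revision is right."""
    if feedback is None:
        return [("load", "pkg")]
    return [("drive", "city_a"), ("load", "pkg")]


plan, rounds = plan_with_validation("get pkg onto the truck", stub_llm, toy_validator)
print(plan, rounds)
```

The design choice worth noting is the separation of roles: the proposer never decides whether a plan is acceptable, and the validator never writes plans. Swapping the stub for a real model or the toy check for a PDDL-based one would not change the loop.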

Business Use Cases to Explore:

- AI Copilots with Guardrails: Empower business users to draft automation flows while symbolic validators ensure nothing breaks.

- Plan Optimization SaaS: Detect and remove unnecessary steps from processes in logistics, operations, or IT.

- Vertical Agents: In domains like healthcare or manufacturing, pair natural-language interfaces with formal logic engines that enforce domain rules.
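For the plan-optimization use case, the core check is whether a step can be dropped while the plan still reaches its goal. The sketch below shows one simple greedy approach in Python, under the same assumptions as a STRIPS-style model (string facts, preconditions, add and delete effects). The `Action` class, `prune_redundant_steps`, and the example steps are hypothetical. It removes one step at a time, so it will not find cases where two steps are only removable together.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    # Hypothetical STRIPS-style action; facts are plain strings.
    name: str
    preconditions: frozenset
    add_effects: frozenset
    delete_effects: frozenset = frozenset()


def reaches_goal(initial_state, plan, goal):
    """Simulate the plan; True only if every step applies and the goal holds at the end."""
    state = frozenset(initial_state)
    for action in plan:
        if not action.preconditions <= state:
            return False
        state = (state - action.delete_effects) | action.add_effects
    return frozenset(goal) <= state


def prune_redundant_steps(initial_state, plan, goal):
    """Greedily drop single steps whose removal keeps the plan valid."""
    if not reaches_goal(initial_state, plan, goal):
        raise ValueError("input plan does not reach the goal")
    pruned = list(plan)
    index = 0
    while index < len(pruned):
        candidate = pruned[:index] + pruned[index + 1:]
        if reaches_goal(initial_state, candidate, goal):
            pruned = candidate  # step was unnecessary; re-check the same index
        else:
            index += 1          # step is needed; move on
    return pruned


# Toy plan (assumed): refuelling is not needed to get the package loaded.
refuel = Action("refuel_truck",
                frozenset({"at truck1 city_a"}),
                frozenset({"truck1 refuelled"}))
load_pkg = Action("load_pkg",
                  frozenset({"at truck1 city_a", "at pkg city_a"}),
                  frozenset({"in pkg truck1"}),
                  frozenset({"at pkg city_a"}))
initial = {"at truck1 city_a", "at pkg city_a"}
goal = {"in pkg truck1"}

pruned = prune_redundant_steps(initial, [refuel, load_pkg], goal)
print([action.name for action in pruned])
```

Whether a step is truly unnecessary depends on how completely the goal is specified. If refuelling matters to the business, it has to appear in the goal or in a later step's preconditions, otherwise the pruner will remove it.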

At Cognaptus, we design hybrid agents that combine LLM creativity with symbolic reliability — delivering scalable, auditable, and smart automation. We spend significant effort on understanding and breaking down domain-specific workflows. For example, we recently helped a client build an online marketing automation pipeline by combining LLM-generated recommendations with rule-based validation agents grounded in marketing theory and operational best practices.

Fine-Tuning the Future

There’s also a growing opportunity in training smaller, domain-specific models directly on planning datasets. The original ACPBench showed that models fine-tuned on boolean and multiple-choice questions could rival frontier LLMs. Extending this to open-ended generative tasks, potentially with reasoning-focused data and step-by-step guidance, could unlock more affordable, task-specific AI for enterprises.

LLMs aren’t hopeless, but they’re not reliable planners yet. With smart architecture and symbolic scaffolding, we can build systems that are both articulate and accurate.

Citation: Kokel, H., Katz, M., Srinivas, K., & Sohrabi, S. (2025). ACPBench Hard: Unrestrained Reasoning about Action, Change, and Planning. arXiv. https://doi.org/10.48550/arXiv.2503.24378