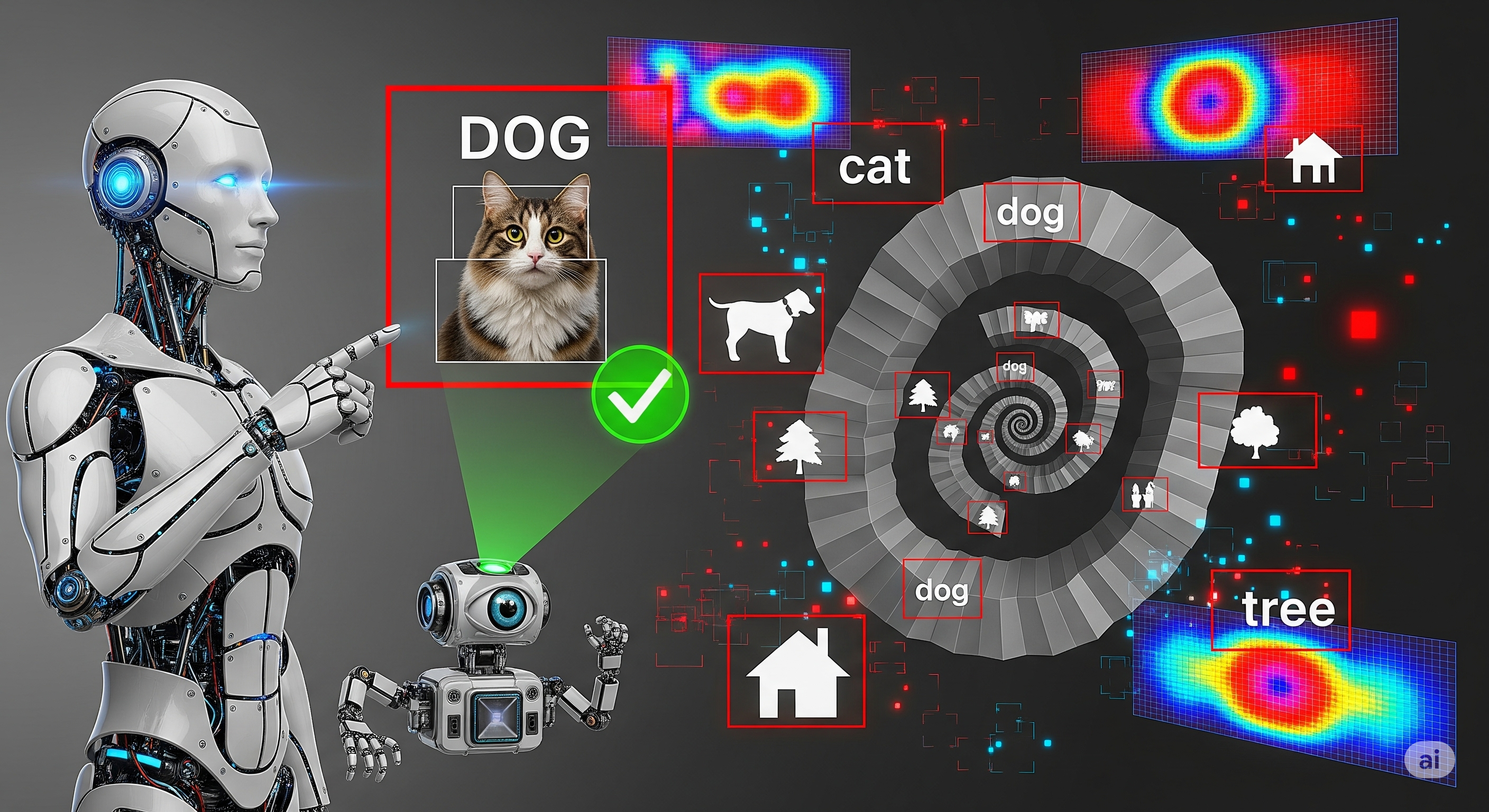

Seeing Is Deceiving: Diagnosing and Fixing Hallucinations in Multimodal AI

“I See What I Want to See”

Modern multimodal large language models (MLLMs) such as GPT-4V, Gemini, and LLaVA promise to “understand” images. But what happens when their eyes lie? In many real-world cases, MLLMs generate fluent, plausible-sounding responses that are visually inaccurate or outright hallucinated. That’s a problem not just for safety, but for trust.

A new paper titled “Understanding, Localizing, and Mitigating Hallucinations in Multimodal Large Language Models” introduces a systematic approach to this growing issue. It moves beyond just counting hallucinations and instead offers tools to diagnose where they come from and, more importantly, how to fix them. ...