When the Answer Matters More Than the Thinking

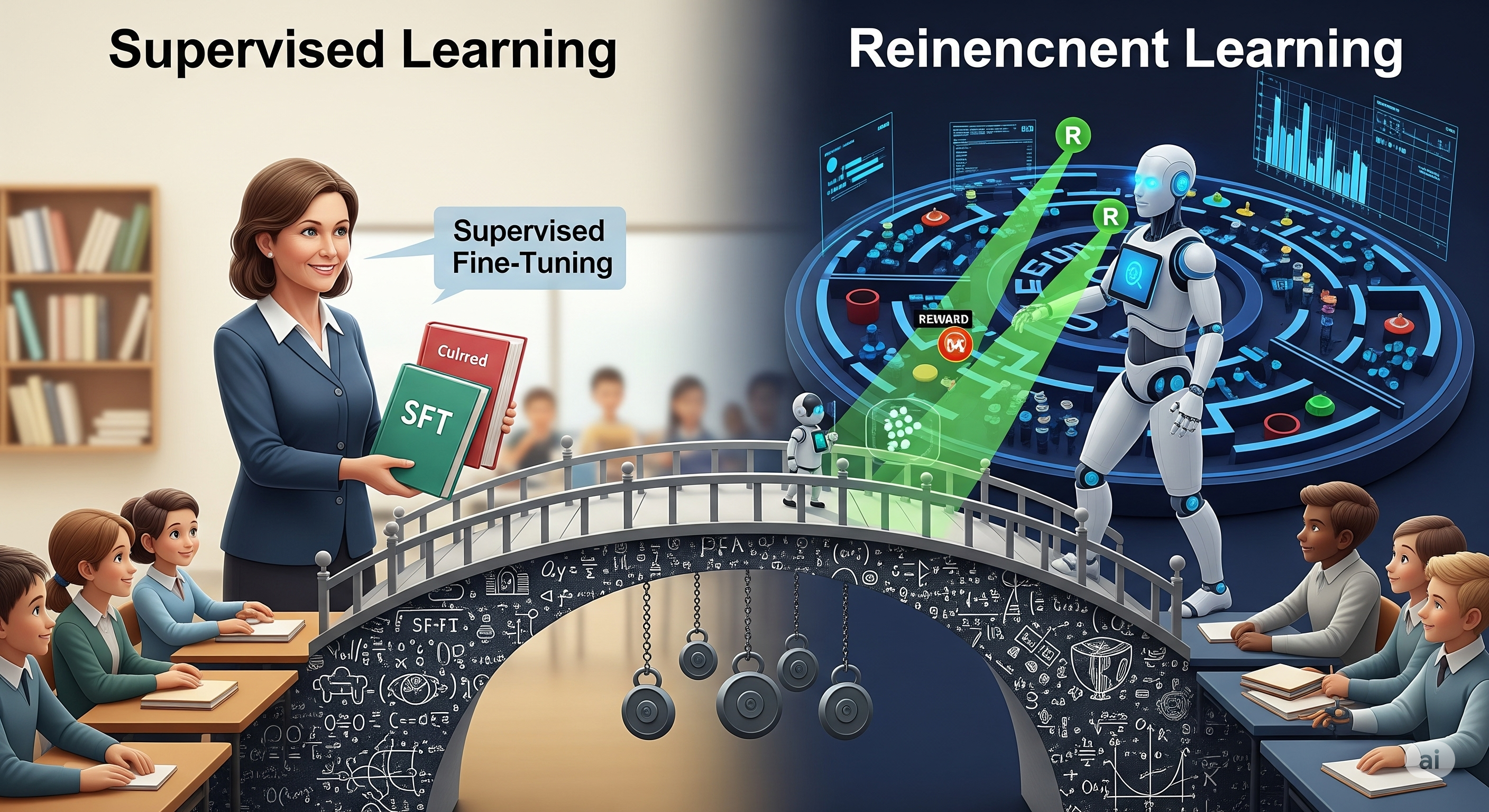

Opening: Why this matters now

Chain-of-thought (CoT) has quietly become the default crutch of modern LLM training. When models fail, we add more reasoning steps; when benchmarks stagnate, we stretch the explanations even further. The assumption is implicit and rarely questioned: better thinking inevitably leads to better answers. The paper “Rethinking Supervised Fine-Tuning: Emphasizing Key Answer Tokens for Improved LLM Accuracy” challenges that assumption with a refreshingly blunt observation: in supervised fine-tuning, the answer itself is often the shortest, and most under-optimized, part of the output. ...