Confidence, Not Confidence Tricks: Statistical Guardrails for Generative AI

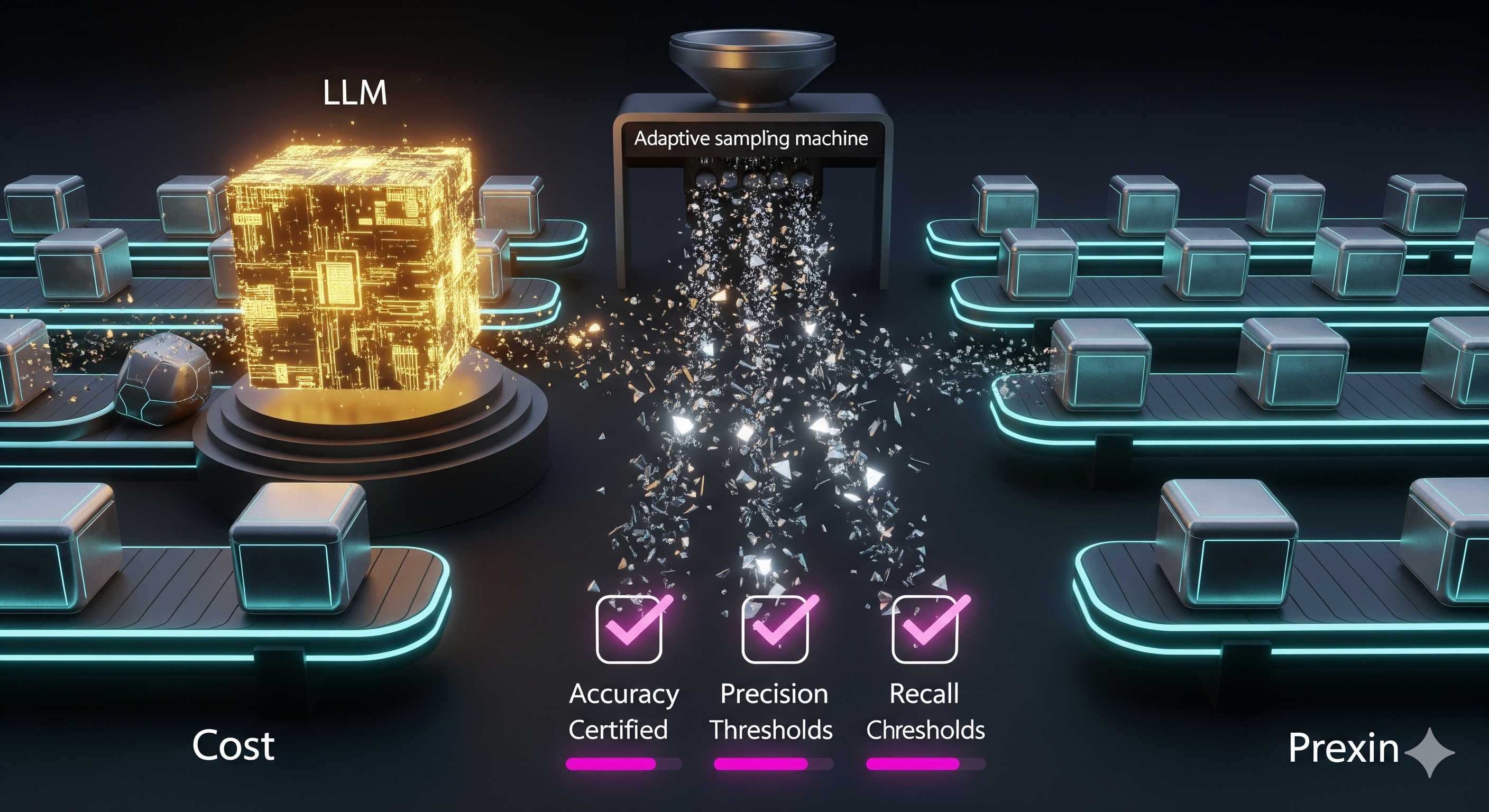

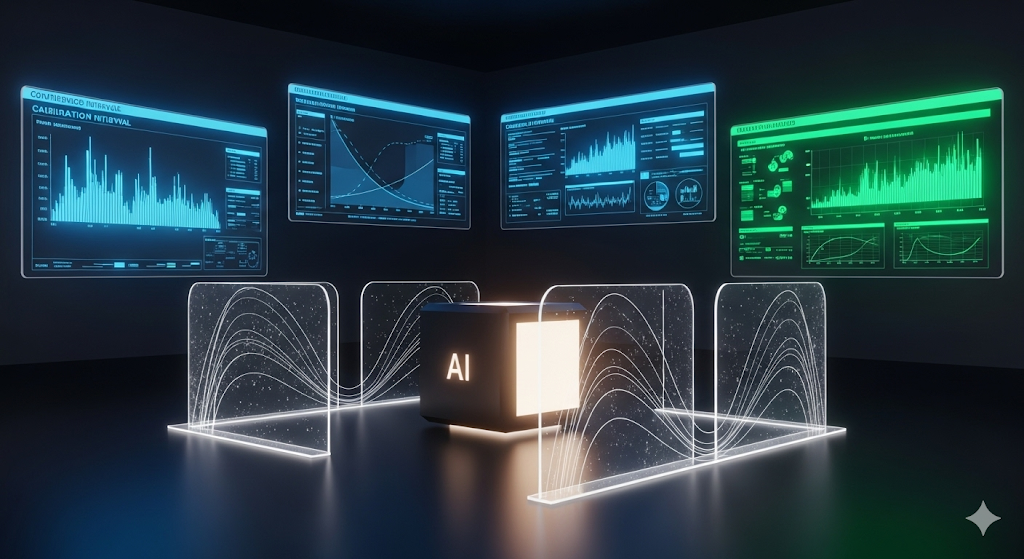

Generative AI still ships answers without warranties. Edgar Dobriban’s new review, “Statistical Methods in Generative AI,” argues that classical statistics is the fastest route to reliability, especially under black‑box access. It maps four leverage points: (1) changing model behavior with guarantees, (2) quantifying uncertainty, (3) evaluating models under small data and leakage risk, and (4) intervening and experimenting to probe mechanisms.

The executive takeaway

If you manage LLM products, your reliability roadmap isn’t just RLHF and prompt magic: it’s quantiles, confidence intervals, calibration curves, and causal interventions. Wrap these around any model (open or closed) to control refusal rates, surface uncertainty that matters, and measure performance credibly when eval budgets are tight. ...
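To make "quantiles" and "confidence intervals" concrete, here is a minimal Python sketch of two black-box wrappers. It is an illustration under stated assumptions, not the review's own method: `wilson_interval` and `refusal_threshold` are hypothetical helper names, the model is assumed to expose a scalar confidence score per answer, and the threshold uses a plain empirical quantile on a calibration set without the finite-sample correction that a formal guarantee would need.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a pass rate measured on n eval items.

    z=1.96 gives an approximate 95% interval. Unlike the naive
    p +/- z*sqrt(p(1-p)/n) interval, it stays inside [0, 1] and
    behaves sensibly when n is small.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z * z / n
    center = (p_hat + z * z / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def refusal_threshold(calibration_scores, max_refusal_rate):
    """Pick a score threshold from held-out calibration scores.

    Policy (an assumption for this sketch): refuse when score < threshold.
    The returned threshold refuses at most max_refusal_rate of the
    calibration items; ties can only lower the realized rate.
    """
    if not 0.0 <= max_refusal_rate <= 1.0:
        raise ValueError("max_refusal_rate must be in [0, 1]")
    scores = sorted(calibration_scores)
    k = math.floor(max_refusal_rate * len(scores))
    if k >= len(scores):
        return math.inf  # refusing everything is allowed
    return scores[k]


# Hypothetical numbers, for illustration only.
low, high = wilson_interval(successes=45, n=50)
scores = [0.9, 0.1, 0.5, 0.3, 0.7, 0.2, 0.8, 0.4, 0.6, 1.0]
threshold = refusal_threshold(scores, max_refusal_rate=0.25)
refused = sum(s < threshold for s in scores)
print(f"pass rate 0.90, 95% interval ({low:.3f}, {high:.3f})")
print(f"threshold {threshold}, refused {refused} of {len(scores)}")
```

Neither function needs access to model weights, which is the point of the black-box framing: both operate only on outputs and scores collected from the model.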