Dial M—for Markets: Brain‑Scanning and Steering LLMs for Finance

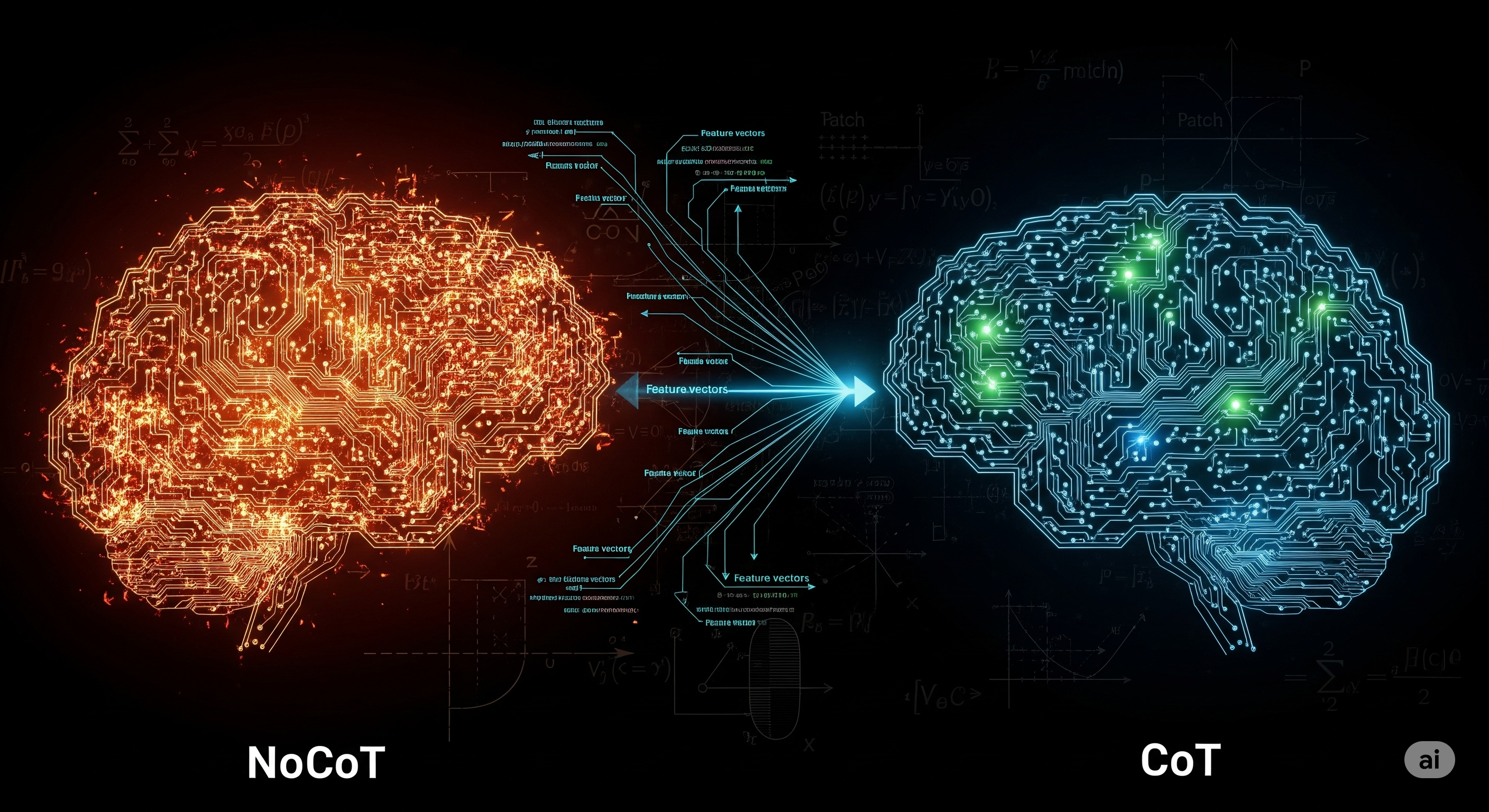

TL;DR: A new paper shows how to insert a sparse, interpretable layer into an LLM to expose plain-English concepts (e.g., sentiment, risk, timing) and steer them like dials without retraining. In finance news prediction, these interpretable features outperform final-layer embeddings and reveal that sentiment, market/technical cues, and timing drive most short-horizon alpha. Steering also debiases optimism, lifting Sharpe by nudging the model negative on sentiment.

Why this matters (and what’s new)

Finance teams have loved LLMs’ throughput but hated their opacity. This paper demonstrates a lightweight path to transparent performance: ...