Plan, Don't Spam: The Goldilocks Rule for Test‑Time Compute

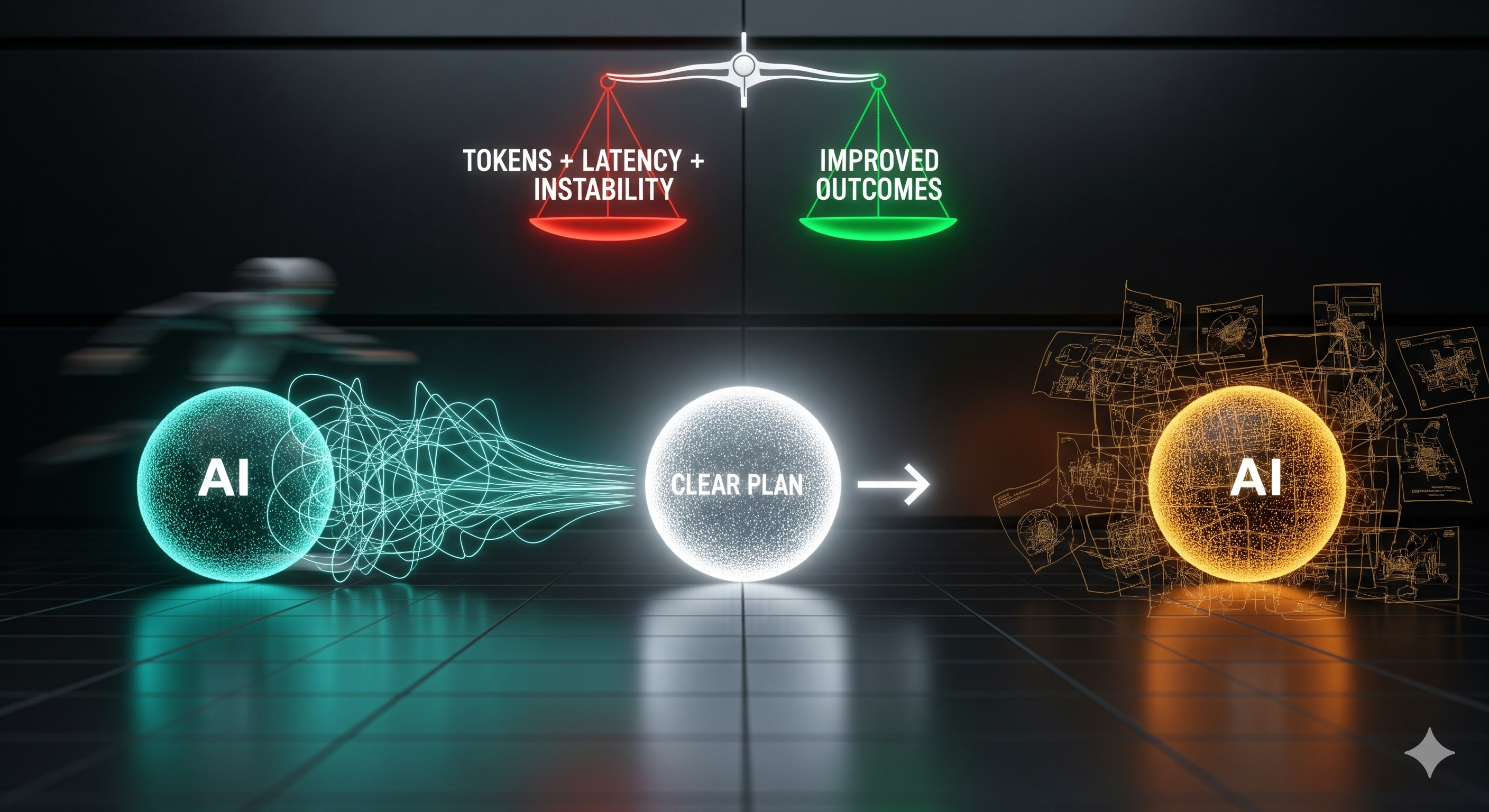

When do you really need a plan? In agentic AI, the answer isn’t “always” (ReAct‑style reasoning at every step) or “never” (greedy next‑action). It’s sometimes, and knowing when is the whole game. A new paper shows that agents that learn to allocate test‑time compute dynamically, planning only when the expected benefit outweighs the cost, beat both extremes on long‑horizon tasks.

Why this matters for operators

Most enterprise deployments of LLM agents are killed by one of two problems: ...