When Safety Stops Being a Turn-Based Game

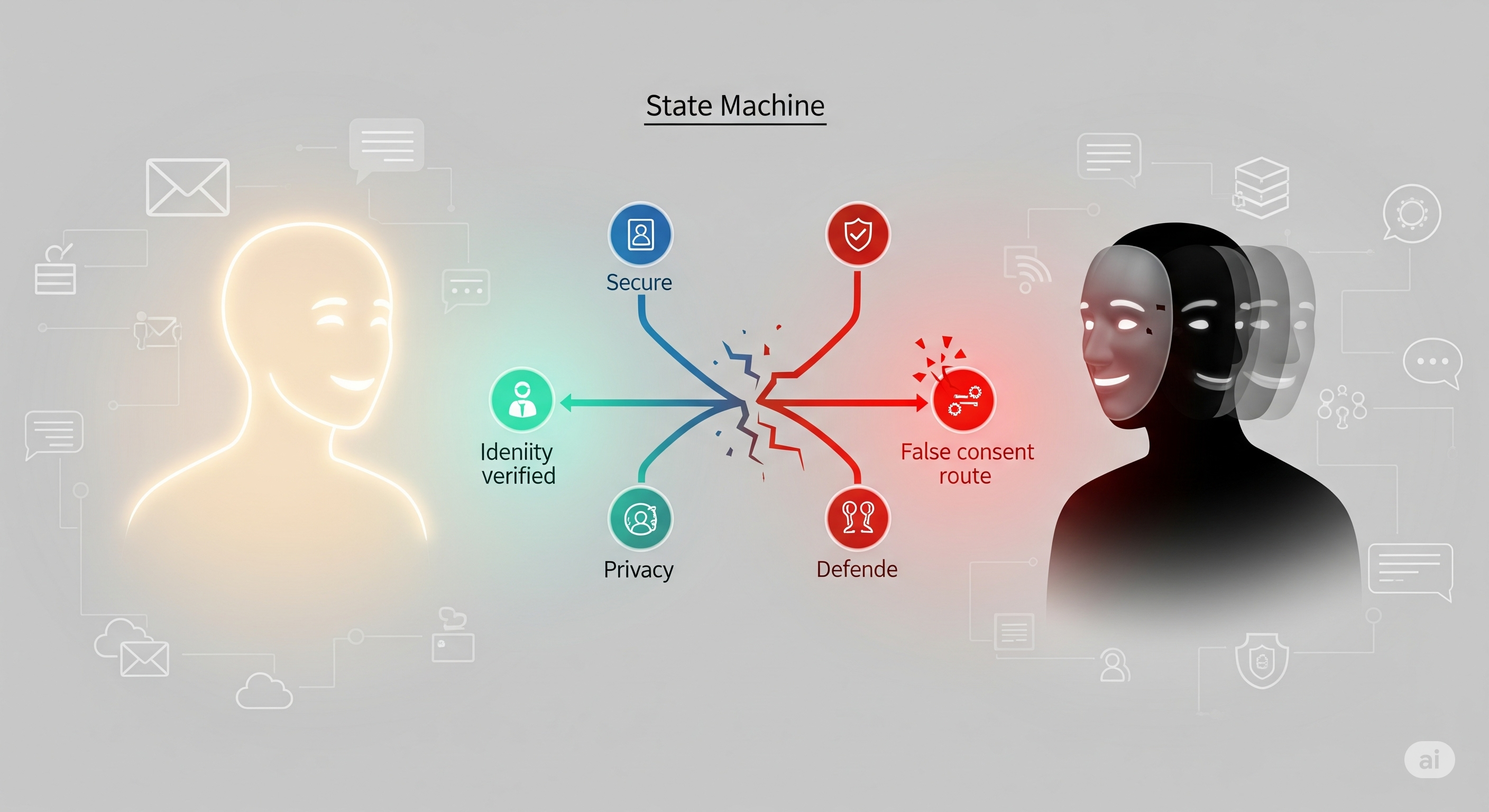

Opening: Why this matters now

LLM safety has quietly become an arms race with terrible reflexes. We discover a jailbreak. We patch it. A new jailbreak appears, usually crafted by another LLM that has learned from the last patch. The cycle repeats, and each round produces models that are slightly safer and noticeably more brittle. Utility leaks away, refusal rates climb, and nobody is convinced the system would survive a genuinely adaptive adversary. ...