Promptfolios: When Buffett Becomes a System Prompt

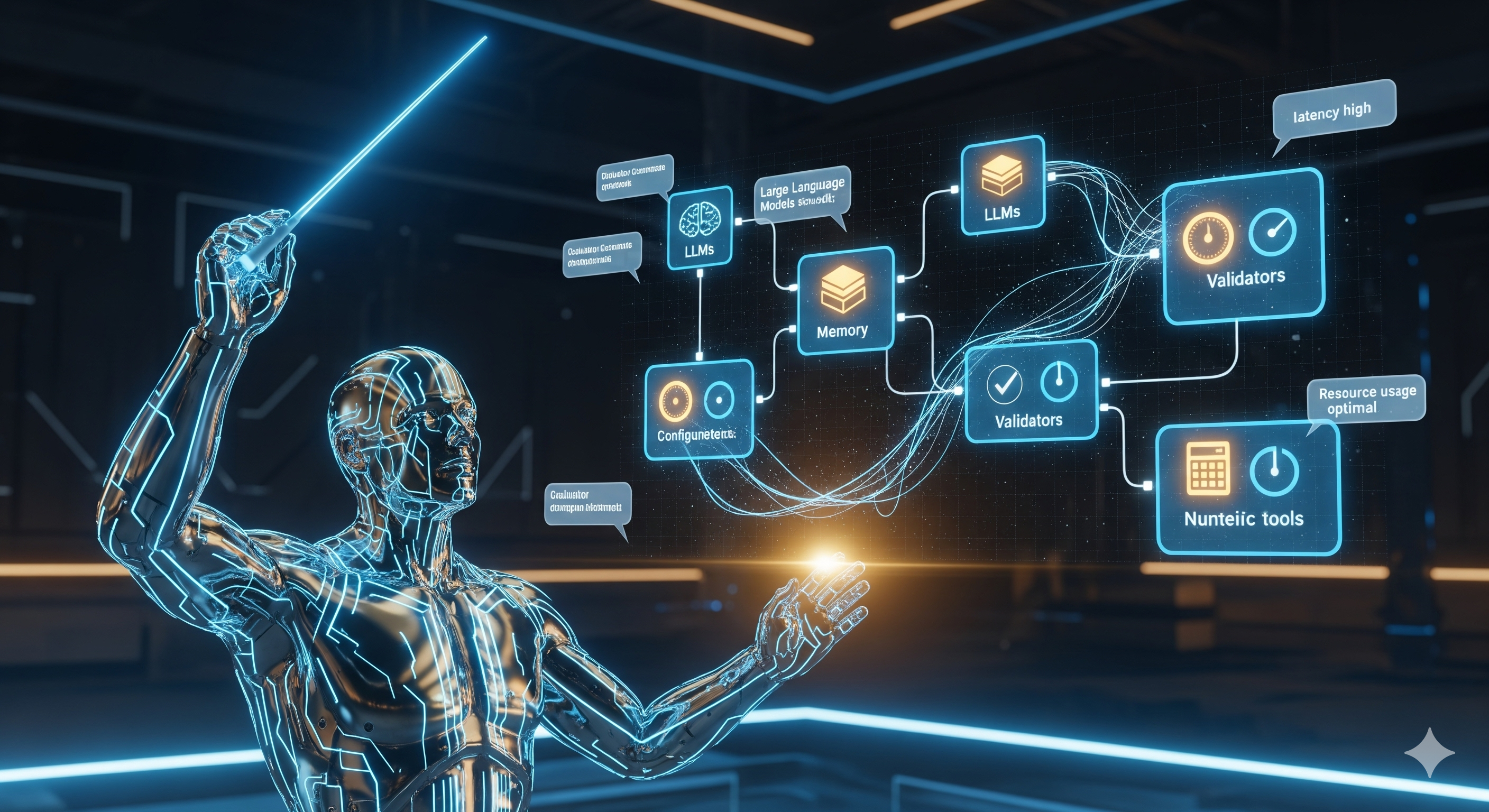

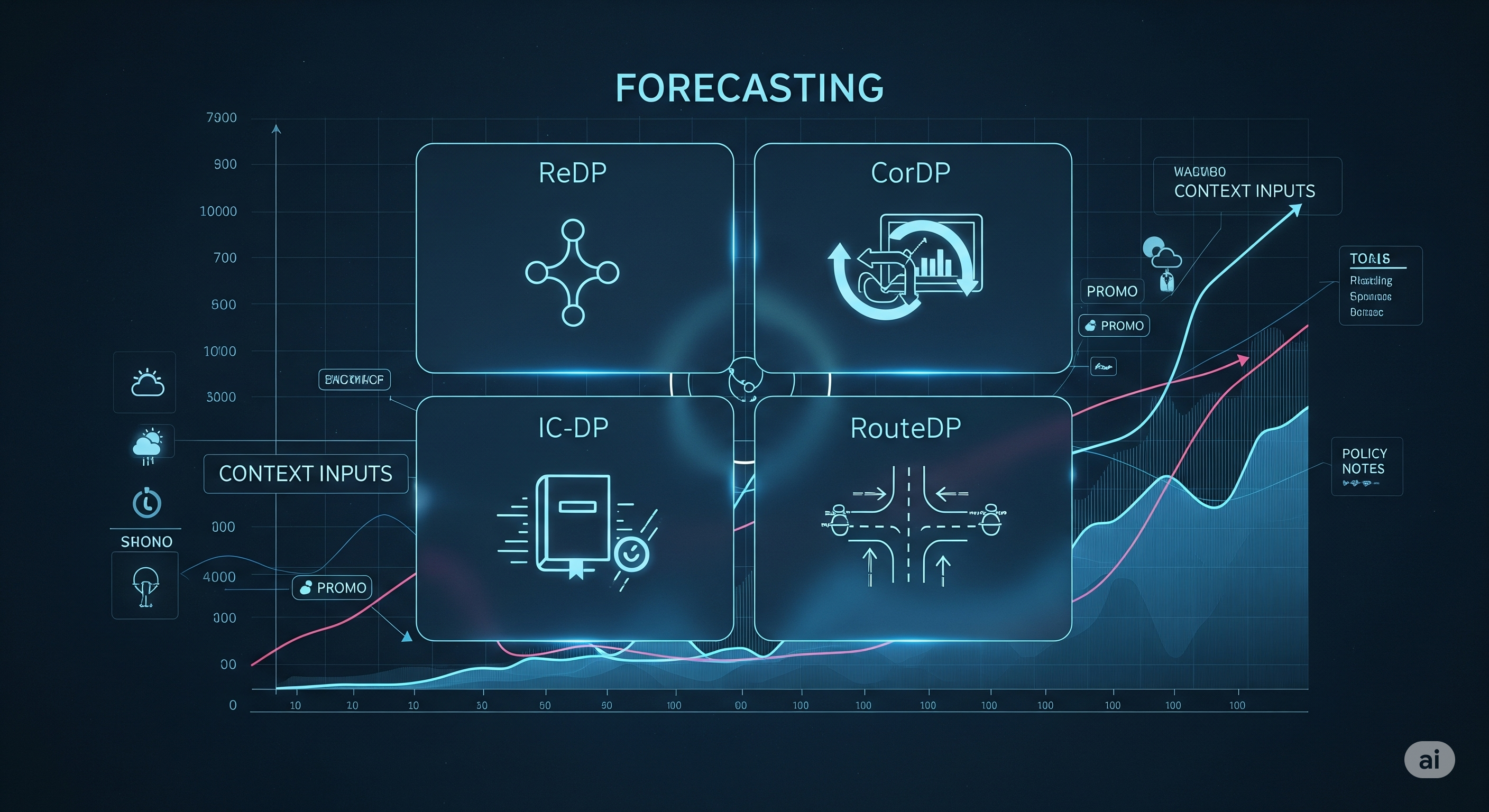

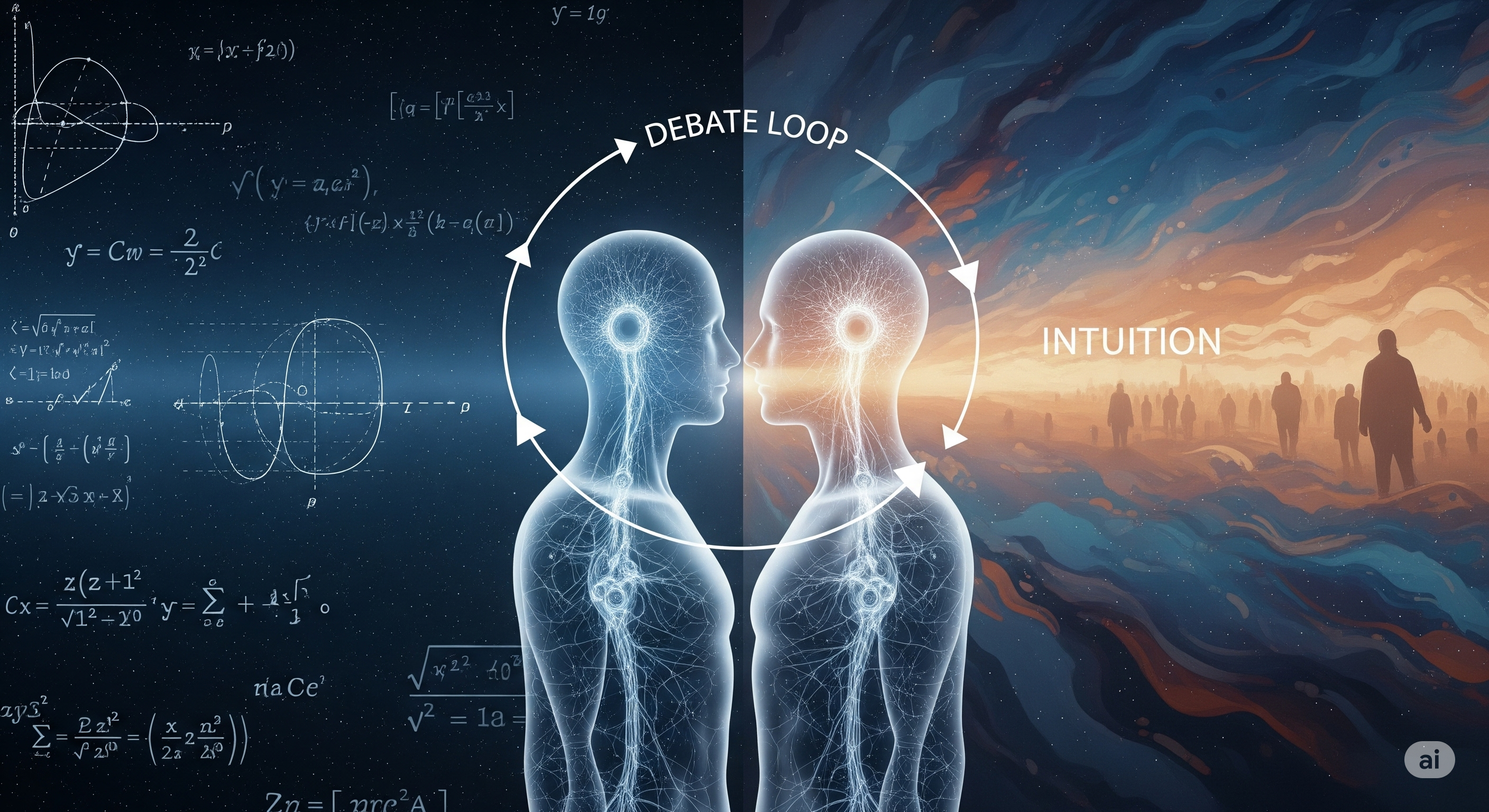

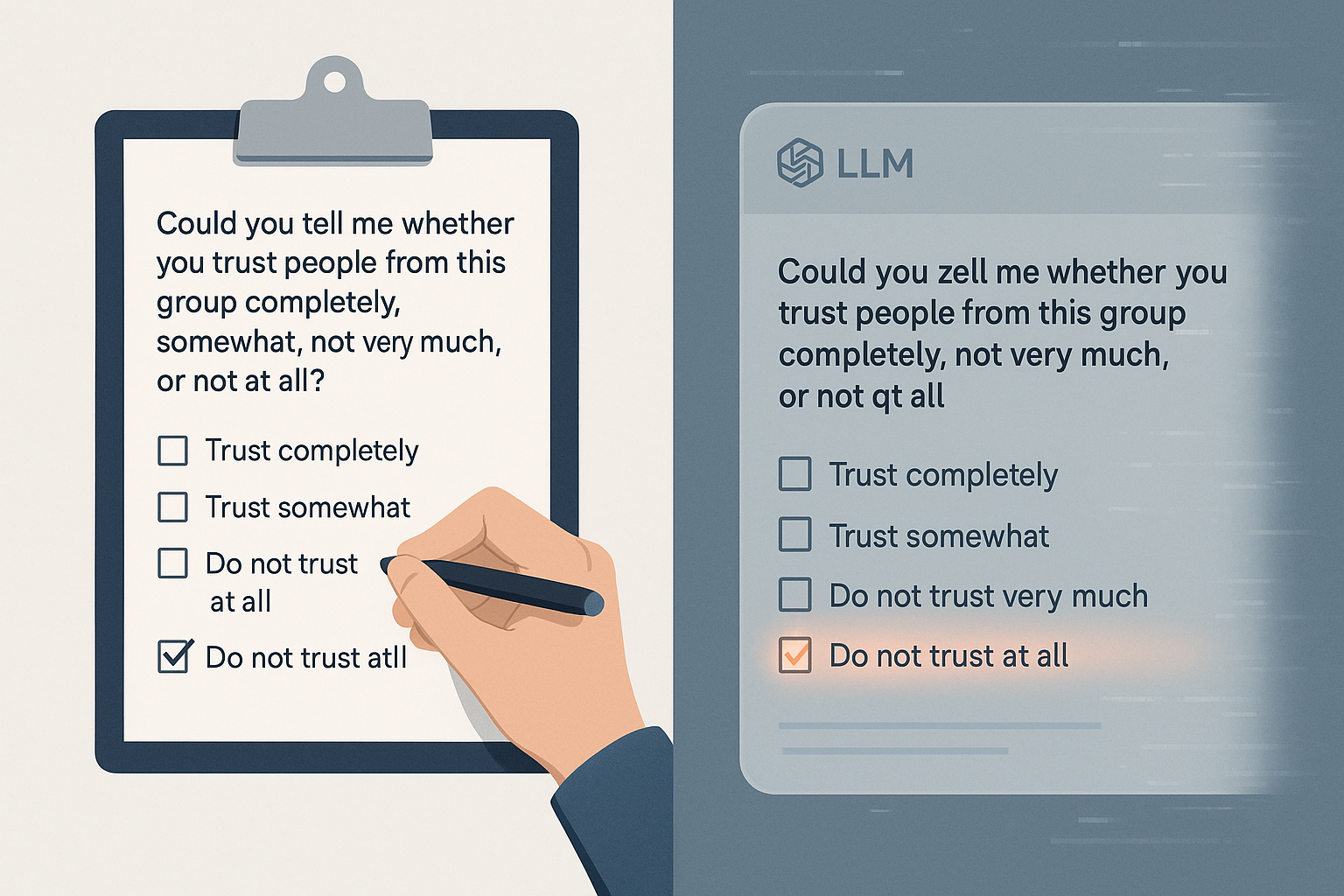

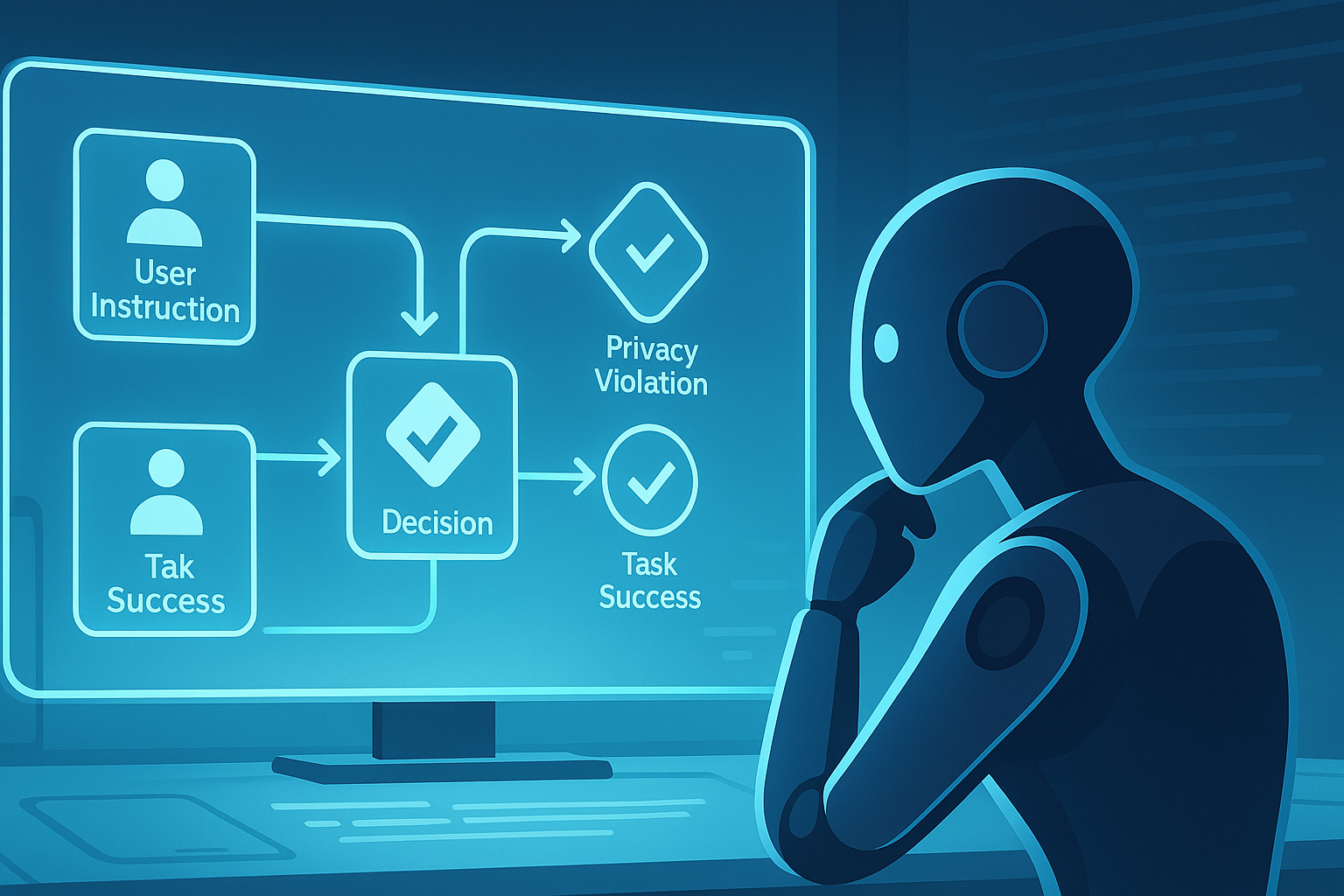

TL;DR: A recent study builds five prompt-guided LLM agents, each emulating a legendary investor (Buffett, Graham, Greenblatt, Piotroski, Altman), and backtests them on NASDAQ-100 stocks from Q4 2023 to Q2 2025. Each agent follows a deterministic pipeline: collect metrics → score → construct a weighted portfolio. The Buffett agent tops the pack with ~42% CAGR, beating the NASDAQ-100 and S&P 500 benchmarks in the window tested. The result isn't "LLMs discovered alpha." It is that prompts can reliably translate qualitative philosophies into reproducible, quantitative rules. The real opportunity for practitioners is governed agent design (measurable, auditable prompts tied to tools), plus robust validation that goes far beyond a single bullish regime. ...
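To make the collect → score → weight pipeline concrete, here is a minimal Python sketch. It is not the study's implementation: the tickers, metric values, thresholds, the `min_score` cutoff, and the score-proportional weighting are all illustrative assumptions, and a real agent would pull metrics from a data tool rather than a hard-coded list. The `cagr` helper uses the standard compound annual growth rate formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    ticker: str
    roe: float             # return on equity, as a fraction
    debt_to_equity: float  # total debt / shareholder equity
    earnings_yield: float  # earnings / price, as a fraction


def collect_metrics() -> list[Metrics]:
    # Hypothetical tickers with made-up values; stands in for a data tool call.
    return [
        Metrics("AAA", roe=0.30, debt_to_equity=0.4, earnings_yield=0.06),
        Metrics("BBB", roe=0.10, debt_to_equity=1.5, earnings_yield=0.03),
        Metrics("CCC", roe=0.20, debt_to_equity=0.2, earnings_yield=0.08),
    ]


def score(m: Metrics) -> int:
    # One point per rule passed. The thresholds are assumptions chosen for
    # illustration, not the rules used in the study.
    points = 0
    if m.roe >= 0.15:
        points += 1
    if m.debt_to_equity <= 0.5:
        points += 1
    if m.earnings_yield >= 0.05:
        points += 1
    return points


def build_portfolio(universe: list[Metrics], min_score: int = 2) -> dict[str, float]:
    # Keep stocks at or above the cutoff, then weight them in proportion
    # to their scores so the weights sum to 1.
    kept = {m.ticker: score(m) for m in universe if score(m) >= min_score}
    total = sum(kept.values())
    if total == 0:
        return {}
    return {ticker: s / total for ticker, s in kept.items()}


def cagr(start_value: float, end_value: float, years: float) -> float:
    # Compound annual growth rate: (end / start) ** (1 / years) - 1.
    return (end_value / start_value) ** (1 / years) - 1


weights = build_portfolio(collect_metrics())
```

Because every step is a plain function over explicit inputs, the same metrics always yield the same weights, which is what makes an agent of this kind auditable: each holding can be traced back to the rules it passed.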