When LLMs Stop Guessing and Start Complying: Agentic Neuro-Symbolic Programming

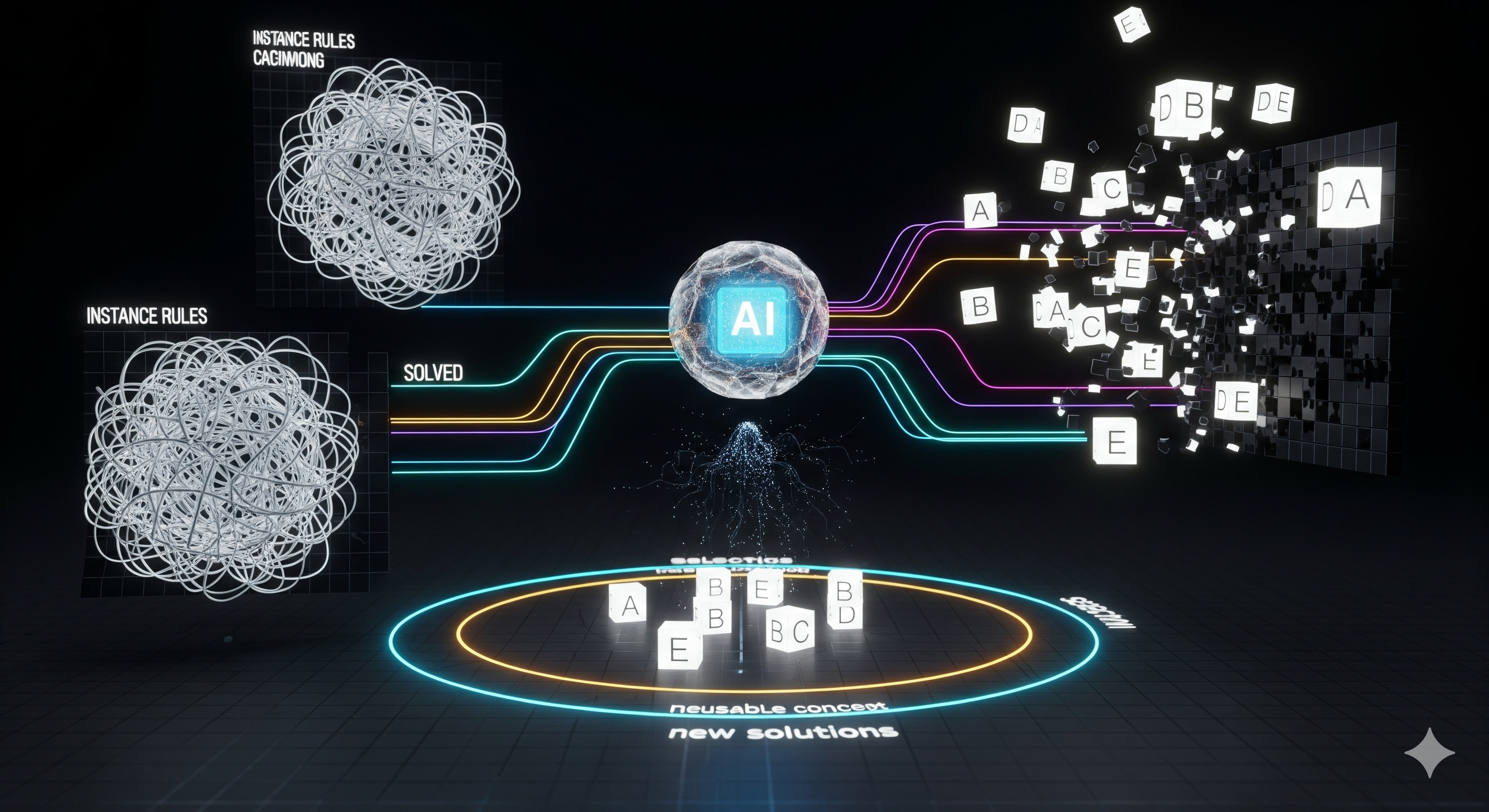

Opening: Why this matters now

Large Language Models are excellent improvisers. Unfortunately, software systems, especially those embedding logic, constraints, and guarantees, are not jazz clubs. They are factories. And factories care less about eloquence than about whether the machine does what it is supposed to do.

Neuro-symbolic (NeSy) systems promise something enterprises quietly crave: models that reason, obey constraints, and fail predictably. Yet in practice, NeSy frameworks remain the domain of specialists fluent in obscure DSLs and brittle APIs. The result is familiar: powerful theory, low adoption. ...