Auditing the Illusion of Forgetting: When Unlearning Isn’t Enough

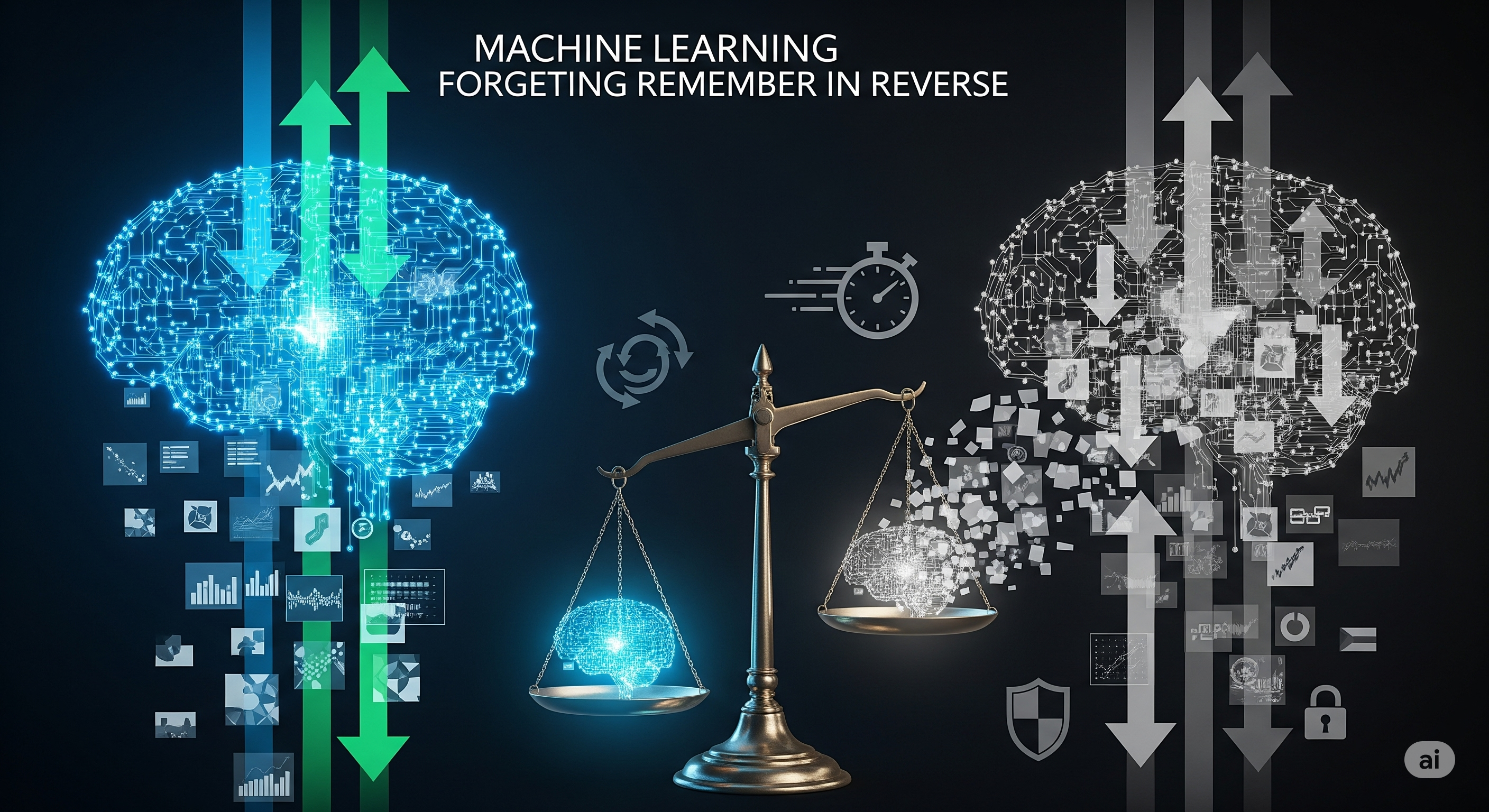

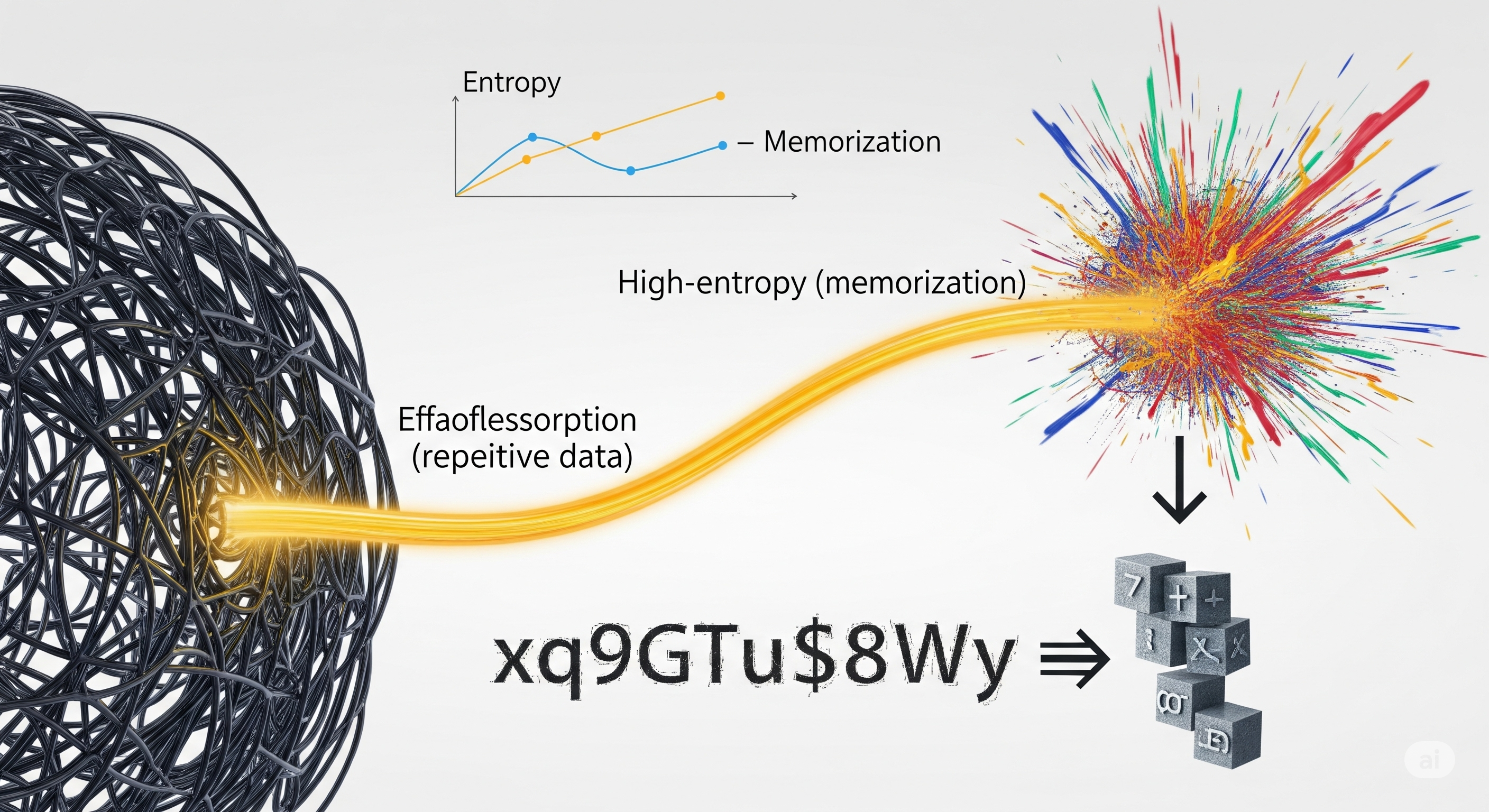

Opening: Why this matters now

“Right to be forgotten” has quietly become one of the most dangerous phrases in AI governance. On paper, it sounds clean: remove a user’s data, comply with regulation, move on. In practice, modern large language models (LLMs) have turned forgetting into a performance art. Models stop saying what they were trained on, but they continue to remember it internally. ...