Breaking the Glass Desktop: How OpenCUA Makes Computer-Use Agents a Public Asset

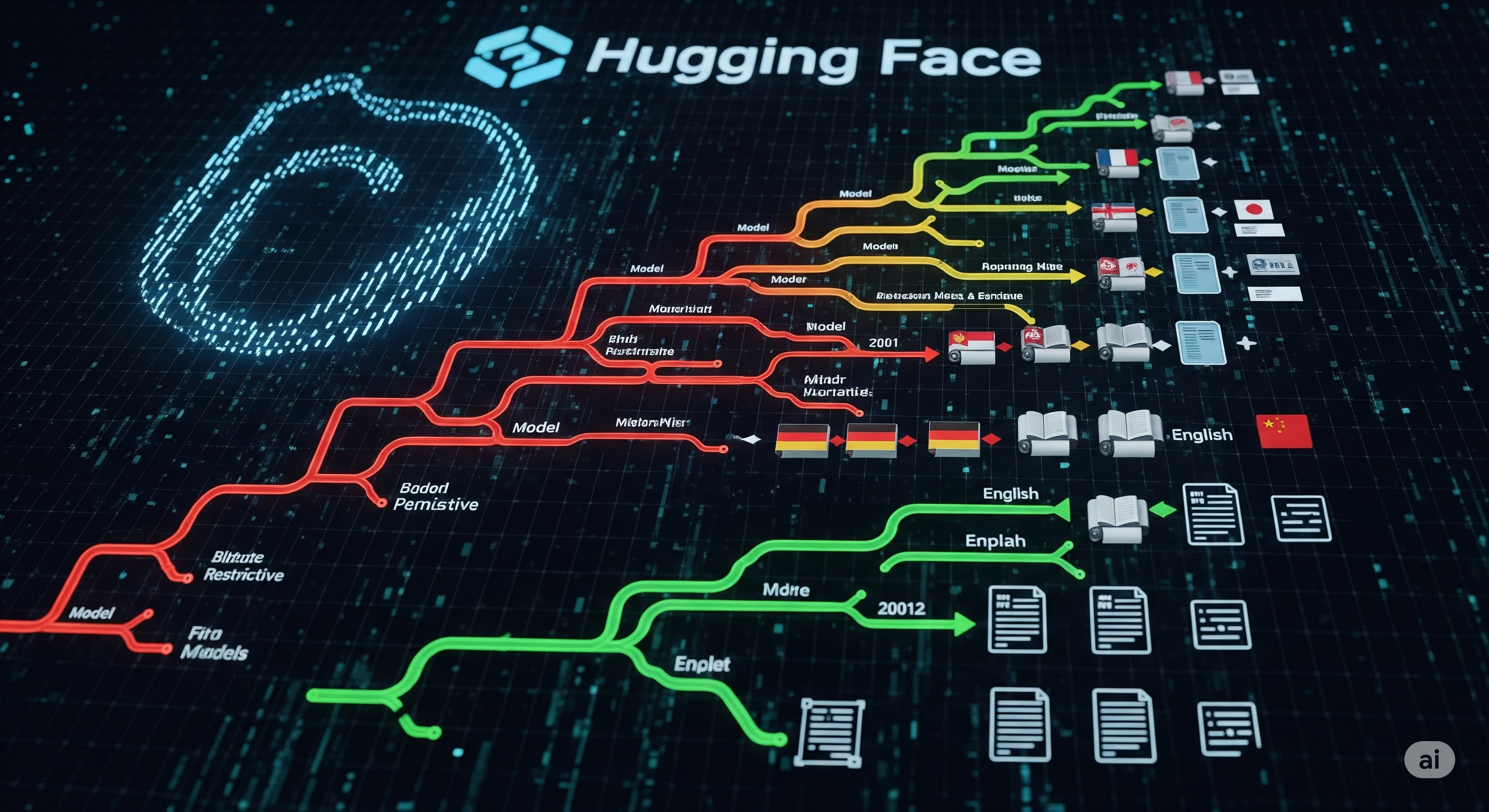

When we talk about AI agents that can “use a computer like a human,” most of today’s leaders (Claude, GPT-4o, Seed 1.5) are locked in proprietary vaults. As a result, the details that make them competent in high-stakes desktop workflows, such as training data, error-recovery strategies, and evaluation methods, are inaccessible to the wider research and business community. OpenCUA aims to change that, not by chasing hype, but by releasing the entire stack: tools, datasets, models, and benchmarks. ...