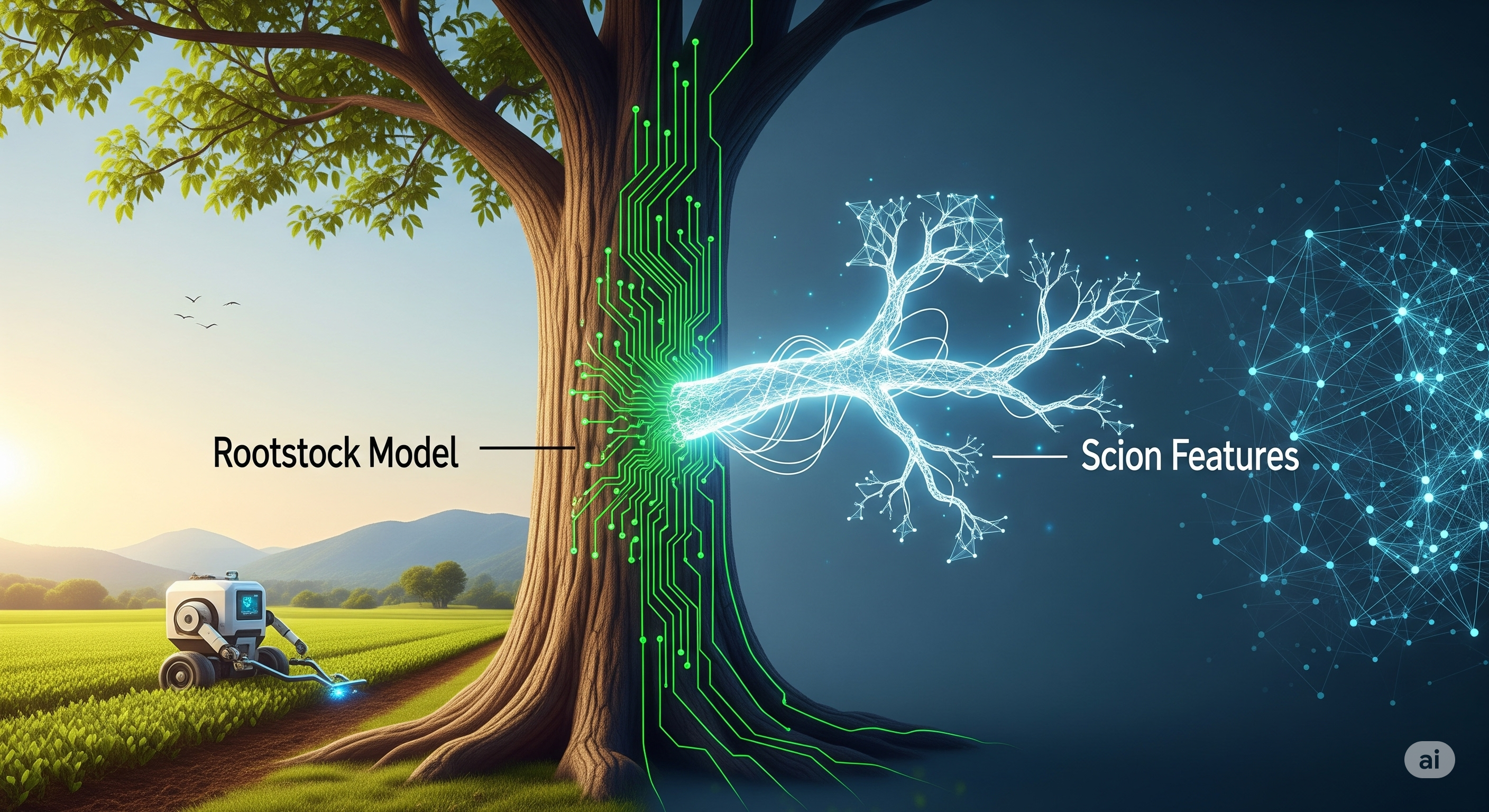

FAQ It Till You Make It: Fixing LLM Quantization by Teaching Models Their Own Family History

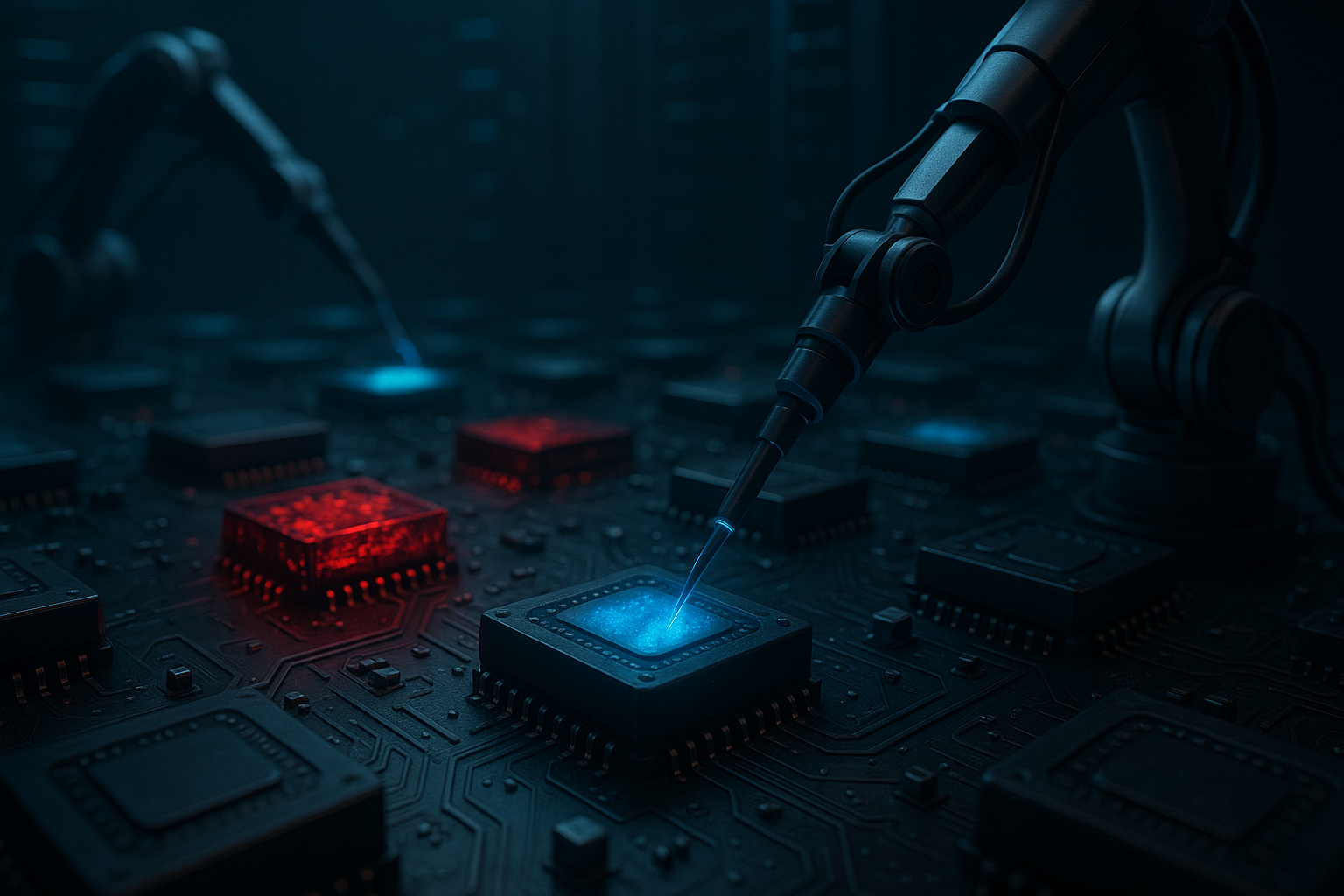

Opening: Why this matters now

Large language models are getting cheaper to run, not because GPUs suddenly became charitable, but because we keep finding new ways to make models forget precision without forgetting intelligence. Post-training quantization (PTQ) is one of the most effective tricks in that playbook. And yet, despite years of algorithmic polish, PTQ still trips over something embarrassingly mundane: the calibration data. ...