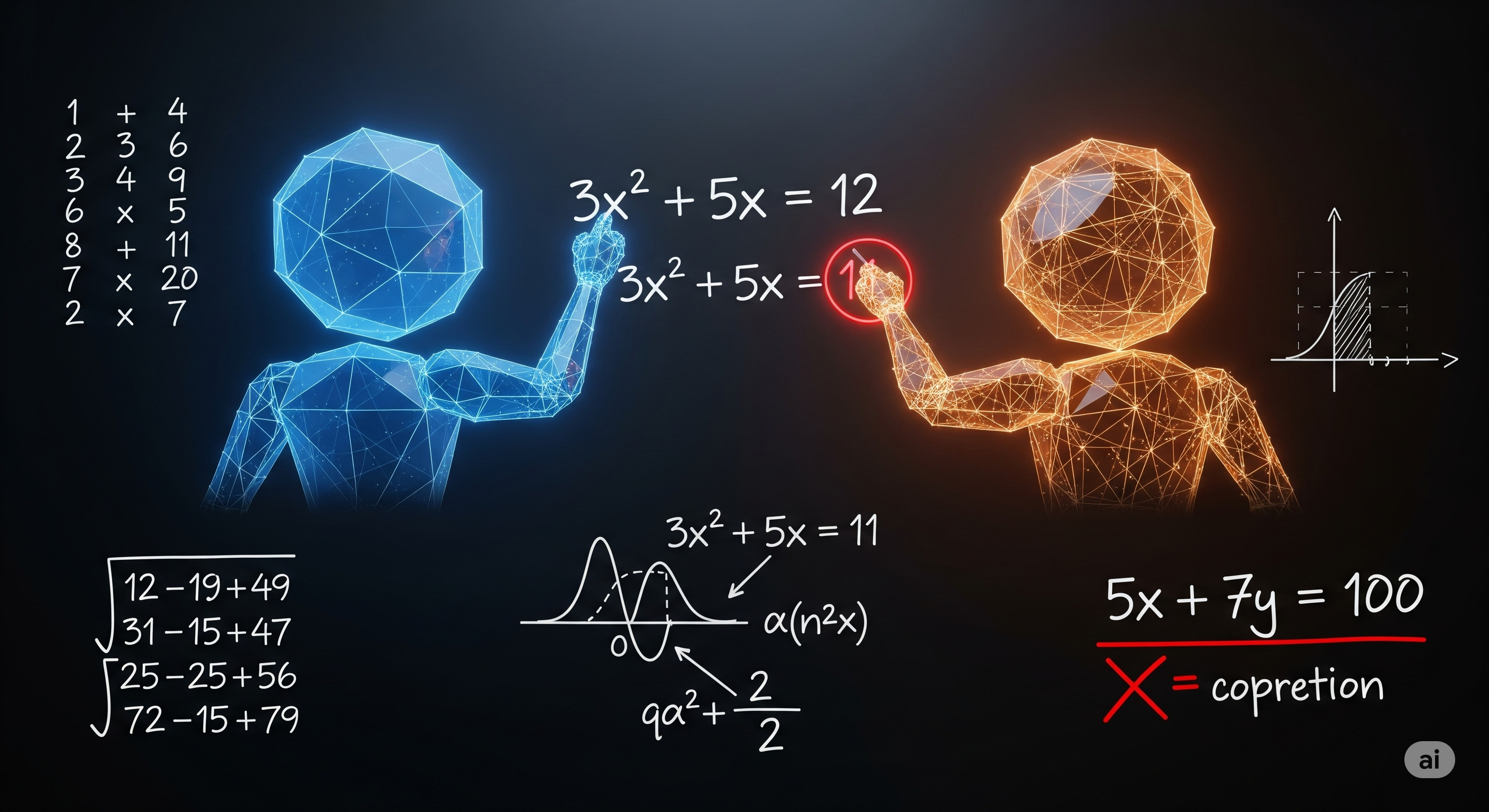

Count Us In: How Dual‑Agent LLMs Turn Math Slips into Teachable Moments

Large language models can talk through a solution like a star pupil and still get the answer wrong. A new study of four modern LLMs across arithmetic, algebra, and number theory shows where they stumble (mostly procedural slips), when they recover (with a second agent), and how teams should redesign AI tutors and graders to be trustworthy in the real world.

TL;DR for builders

- Single models still flub arithmetic. Even strong general models mis-add partial products or mis-handle carries.
- Reasoning-tuned models help, but not always. OpenAI o1 was consistently best; DeepSeek‑R1 “overthought” and missed basics.
- Two agents beat one. Peer‑review style “dual agents” dramatically raised accuracy, especially on Diophantine equations.
- Most errors are procedural, not conceptual. Think slips and symbolic manipulations, not deep misunderstandings.
- Step‑labeling works. A simple rubric (Correct / Procedural / Conceptual / Impasse) localizes faults and boosts formative feedback.

What the paper really tested (and why that matters)

Most benchmarks hide easy leakage and memorized patterns. Here, the authors build three item models, templates that generate many variants, to stress the models beyond memorization: ...